Study Note 3.3.2: BigQuery Machine Learning Model Deployment using Docker

This lecture outlines the steps to export a BigQuery Machine Learning model, deploy it in a Docker container, and use it for predictions via HTTP requests.

1. Exporting the Machine Learning Model to Google Cloud Storage (GCS)

- Purpose: To extract the trained BigQuery ML model and store it in GCS for further deployment.

- Prerequisites:

  - `gcloud auth login`: Ensure you are authenticated with Google Cloud. This is required before interacting with any Google Cloud service. The transcript assumes this step has already been completed.

- Action: Run a BigQuery query that exports the model. The exact query is not shown in the transcript, but it exports the trained model into a Google Cloud Storage bucket.

- Verification:

  - Before the export, the GCS bucket is empty.

  - After the export query succeeds, refresh the bucket to confirm that the model files are present.
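The transcript does not show the export query. A minimal sketch, assuming BigQuery's `EXPORT MODEL` statement is what was used, builds that statement in Python. The project, dataset, model, and bucket names are hypothetical placeholders.

```python
def export_model_sql(project: str, dataset: str, model: str, gcs_uri: str) -> str:
    """Build a BigQuery ML EXPORT MODEL statement.

    For TensorFlow-based model types (e.g. linear regression), BigQuery writes
    a SavedModel directory to the destination URI.
    """
    if not gcs_uri.startswith("gs://"):
        raise ValueError("destination must be a gs:// URI")
    return (
        f"EXPORT MODEL `{project}.{dataset}.{model}`\n"
        f"OPTIONS (URI = '{gcs_uri}')"
    )


if __name__ == "__main__":
    # Hypothetical names: replace with your own project, dataset, model, bucket.
    print(export_model_sql("my-project", "my_dataset", "tip_model",
                           "gs://my-bucket/tip_model"))
```

The resulting statement can be pasted into the BigQuery console, passed to `bq query --nouse_legacy_sql`, or run with the `google-cloud-bigquery` client (`bigquery.Client().query(sql).result()`).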

2. Copying the Model from GCS to a Local Directory

- Purpose: To transfer the exported model files from GCS to your local machine for Docker deployment preparation.

- Steps:

  - Create a temporary directory: Make a new directory on your local machine to hold the model files temporarily. In the transcript, this directory is named `temp_model`.

  - Use the `gsutil cp` command: Use the `gsutil cp` command-line tool to copy the model files from the GCS bucket into the newly created local directory. Because the exported model is a directory rather than a single file, the copy must be recursive (`gsutil cp -r`).

- Verification: Check the local temporary directory to ensure the model files have been copied from GCS.

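A minimal Python sketch of this step, assuming the export landed at a hypothetical `gs://my-bucket/tip_model`: it creates the local `temp_model` directory and builds the recursive `gsutil cp` command line. It only prints the command; passing the list to `subprocess.run(cmd, check=True)` would execute it.

```python
import shlex
from pathlib import Path


def prepare_temp_dir(base: Path, name: str = "temp_model") -> Path:
    """Create the local staging directory; reruns are harmless (exist_ok)."""
    target = base / name
    target.mkdir(parents=True, exist_ok=True)
    return target


def gsutil_copy_cmd(gcs_model_uri: str, local_dir: Path) -> list[str]:
    """Build argv for copying the exported model directory out of GCS.

    -r is required because the export is a directory tree, not one object.
    """
    return ["gsutil", "cp", "-r", gcs_model_uri, str(local_dir)]


if __name__ == "__main__":
    # Hypothetical bucket path; subprocess.run(cmd, check=True) would run it.
    cmd = gsutil_copy_cmd("gs://my-bucket/tip_model", Path("temp_model"))
    print(shlex.join(cmd))
```

Building the argument list instead of a shell string avoids quoting problems if a local path contains spaces.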

3. Creating a Serving Directory for the Model

- Purpose: To structure the model files in a specific directory format that TensorFlow Serving can recognize and use for serving predictions.

- Steps:

  - Create a serving directory: Create a directory that will act as the serving directory. In the transcript, a directory named `serving_directory` is created within the project.

  - Create a versioned subdirectory: Inside the serving directory, create a version-specific subdirectory; versioning is important for managing model updates. The transcript uses `tip_model_v1`. Note that TensorFlow Serving treats only numerically named subdirectories of a model's base path as versions (for example, `serving_directory/tip_model/1`), so the version folder itself should be a number.

  - Copy model data: Copy the contents of the temporary model directory (the model files from GCS) into the versioned serving subdirectory.

- Verification: Confirm that the model data is now present within the versioned serving directory.


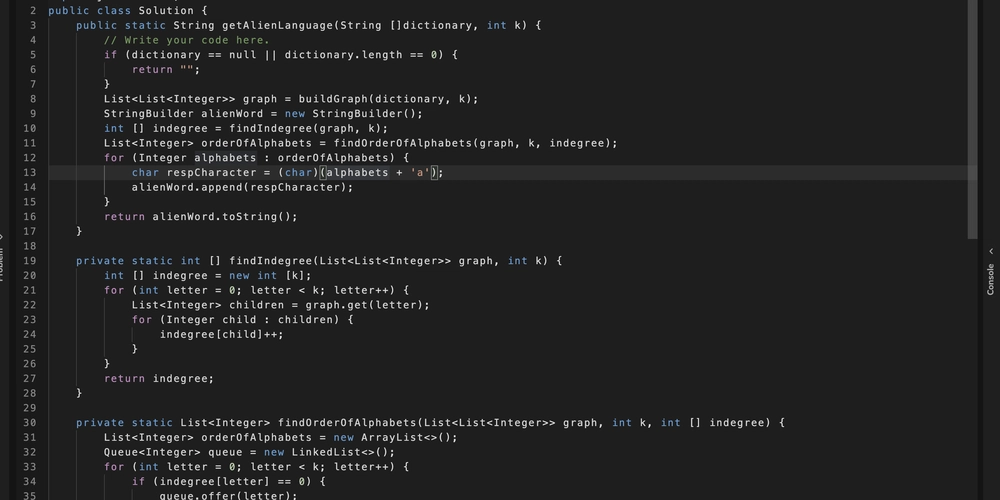
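A sketch of the directory layout step in Python, assuming the numeric layout `serving_directory/tip_model/1` rather than the transcript's exact folder names. `saved_model_dir` should be the folder that directly contains the exported `saved_model.pb`, which may be a subfolder such as `temp_model/tip_model` depending on how the copy was run.

```python
import shutil
from pathlib import Path


def build_serving_dir(saved_model_dir: Path, serving_root: Path,
                      model_name: str = "tip_model", version: int = 1) -> Path:
    """Copy an exported SavedModel to <serving_root>/<model_name>/<version>.

    TensorFlow Serving treats each integer-named folder under the model's base
    path (<serving_root>/<model_name>) as one version of that model.
    """
    version_dir = serving_root / model_name / str(version)
    # copytree creates version_dir (and missing parents); dirs_exist_ok=True
    # lets a rerun overwrite files from a previous copy.
    shutil.copytree(saved_model_dir, version_dir, dirs_exist_ok=True)
    return version_dir
```

For example, `build_serving_dir(Path("temp_model/tip_model"), Path("serving_directory"))` would populate `serving_directory/tip_model/1`.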

4. Pulling and Running the TensorFlow Serving Docker Image

- Purpose: To containerize the model using Docker and TensorFlow Serving, enabling deployment and serving of predictions via a REST API.

- Steps:

  - Pull the TensorFlow Serving Docker image: Use the `docker pull` command to download the TensorFlow Serving image from a container registry such as Docker Hub. The exact command is not shown, but it would typically be `docker pull tensorflow/serving`.

  - Run the Docker image: Execute the `docker run` command to start a container from the pulled TensorFlow Serving image.

    - Mount the serving directory: Mount the serving directory created in the previous step into the container so that TensorFlow Serving can load the model.

    - Port mapping: Map the container's REST API port (8501 by default; gRPC uses 8500) to a port on your local machine so that you can reach the service.

  - Verify that the container is running: Use the `docker ps` command to check that the TensorFlow Serving container is up.

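A sketch of the pull, run, and verify commands, following the bind-mount pattern documented for the `tensorflow/serving` image. It assumes a numbered version folder such as `serving_directory/tip_model/1`, so the mount source is the folder that contains the version folders. The `-d` (detached) flag is a choice made here; the transcript's exact flags are not shown. The script only prints the commands.

```python
import shlex
from pathlib import Path

IMAGE = "tensorflow/serving"


def docker_run_cmd(model_base: Path, model_name: str = "tip_model",
                   host_port: int = 8501) -> list[str]:
    """Build argv that serves `model_base` (the folder holding version dirs).

    The image loads the model named by MODEL_NAME from /models/<MODEL_NAME>
    and exposes the REST API on container port 8501.
    """
    source = model_base.resolve()  # bind mounts need an absolute host path
    return [
        "docker", "run", "-d",
        "-p", f"{host_port}:8501",
        "--mount", f"type=bind,source={source},target=/models/{model_name}",
        "-e", f"MODEL_NAME={model_name}",
        IMAGE,
    ]


if __name__ == "__main__":
    print(shlex.join(["docker", "pull", IMAGE]))
    print(shlex.join(docker_run_cmd(Path("serving_directory/tip_model"))))
    # Lists only containers started from the serving image.
    print(shlex.join(["docker", "ps", "--filter", f"ancestor={IMAGE}"]))
```

Changing `host_port` moves only the local side of the mapping; the container keeps listening on 8501.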

5. Making HTTP Requests for Predictions using Postman (or similar tool)

- Purpose: To test the deployed model by sending prediction requests to the TensorFlow Serving REST API and receiving predictions.

- Tool: Postman (or any HTTP client tool) is used to send requests.

- Steps:

  - Check the model version API: Send a GET request to the model status endpoint of the TensorFlow Serving REST API, `/v1/models/<model_name>/versions/<version>` (e.g., `http://localhost:8501/v1/models/tip_model/versions/1`). This verifies that the model is loaded and that the version is available.

  - Make a prediction request: Send a POST request to the `:predict` endpoint (e.g., `http://localhost:8501/v1/models/tip_model:predict`).

    - Request body: The request body must be JSON and contain the input features for the prediction. The transcript mentions features such as `passenger_count`, `trip_distance`, `PULocationID`, `DOLocationID`, `payment_type`, `fare_amount`, and `total_amount`.

    - Example request: The transcript shows an example request with specific values for these features.


  - Analyze the prediction response: Examine the JSON response from the API, which contains the model's prediction. In the example, the prediction is the `tip_amount`.

  - Vary the input parameters: Modify the input parameters in subsequent requests to observe how the predictions change. The transcript demonstrates changing the `payment_type` and observing the change in the predicted `tip_amount`.

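The transcript uses Postman; the same requests can be sketched with Python's standard `urllib`. This assumes the container is listening on `localhost:8501` with the model name `tip_model`, and that the body uses TensorFlow Serving's row format (a JSON object with an `instances` list). The values in `EXAMPLE_TRIP` are hypothetical, and their types (numbers versus strings) must match the exported model's input signature, which `saved_model_cli show --dir <version_dir> --all` can display.

```python
import json
import urllib.request

# Assumed endpoint: local container on port 8501 serving model "tip_model".
BASE_URL = "http://localhost:8501/v1/models/tip_model"

# Hypothetical feature values; names follow the transcript, types are assumptions.
EXAMPLE_TRIP = {
    "passenger_count": 1,
    "trip_distance": 12.2,
    "PULocationID": "193",
    "DOLocationID": "264",
    "payment_type": "1",
    "fare_amount": 20.4,
    "total_amount": 25.0,
}


def model_status(version: int = 1) -> dict:
    """GET the model status API for one version and return the parsed JSON."""
    with urllib.request.urlopen(f"{BASE_URL}/versions/{version}") as resp:
        return json.load(resp)


def predict_payload(rows: list[dict]) -> bytes:
    """Encode rows in TensorFlow Serving's row format: {"instances": [...]}."""
    return json.dumps({"instances": rows}).encode("utf-8")


def predict(rows: list[dict]) -> list:
    """POST rows to the :predict endpoint and return the "predictions" list."""
    req = urllib.request.Request(
        f"{BASE_URL}:predict",
        data=predict_payload(rows),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)["predictions"]


def with_payment_type(row: dict, payment_type: str) -> dict:
    """Return a copy of `row` with payment_type replaced."""
    return {**row, "payment_type": payment_type}
```

With the server running, `model_status()` reports the state of version 1, and comparing `predict([EXAMPLE_TRIP])` with `predict([with_payment_type(EXAMPLE_TRIP, "2")])` repeats the transcript's experiment of changing `payment_type` and watching the predicted tip.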

Summary:

This process demonstrates how to deploy a BigQuery ML model for online prediction using Docker and TensorFlow Serving. By exporting the model from BigQuery, containerizing it with Docker, and serving it via a REST API, you can easily integrate your machine learning model into applications that require real-time predictions. This approach leverages the scalability and portability of Docker and the serving capabilities of TensorFlow Serving.

Key Takeaways:

- End-to-End Deployment: The transcript covers the complete workflow from model export to prediction serving.

- Docker Containerization: Docker provides a consistent and portable environment for deploying the ML model.

- TensorFlow Serving: TensorFlow Serving makes it easy to serve TensorFlow models via REST APIs.

- HTTP-based Predictions: The deployed model can be accessed and used for predictions through standard HTTP requests, making it broadly accessible to various applications.

- Model Versioning: Using versioned directories allows for easier model updates and rollbacks.
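To illustrate the versioning takeaway: under its default version policy, TensorFlow Serving serves the highest-numbered version folder under a model's base path, so an update roughly amounts to adding a new numbered folder, and a rollback to removing the newest one. A small helper sketch for choosing the next version number, assuming the hypothetical layout `serving_directory/tip_model/<n>`:

```python
from pathlib import Path


def versions(model_base: Path) -> list[int]:
    """Return the numeric version folders under model_base, sorted ascending.

    Non-numeric names (e.g. "tip_model_v1") are skipped, mirroring how
    TensorFlow Serving only treats integer-named folders as versions.
    """
    return sorted(int(p.name) for p in model_base.iterdir()
                  if p.is_dir() and p.name.isdecimal())


def next_version(model_base: Path) -> int:
    """Version number for a new export: one above the current highest."""
    existing = versions(model_base)
    return existing[-1] + 1 if existing else 1
```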
