Stitching Giant JSONs Together with JSON Patch

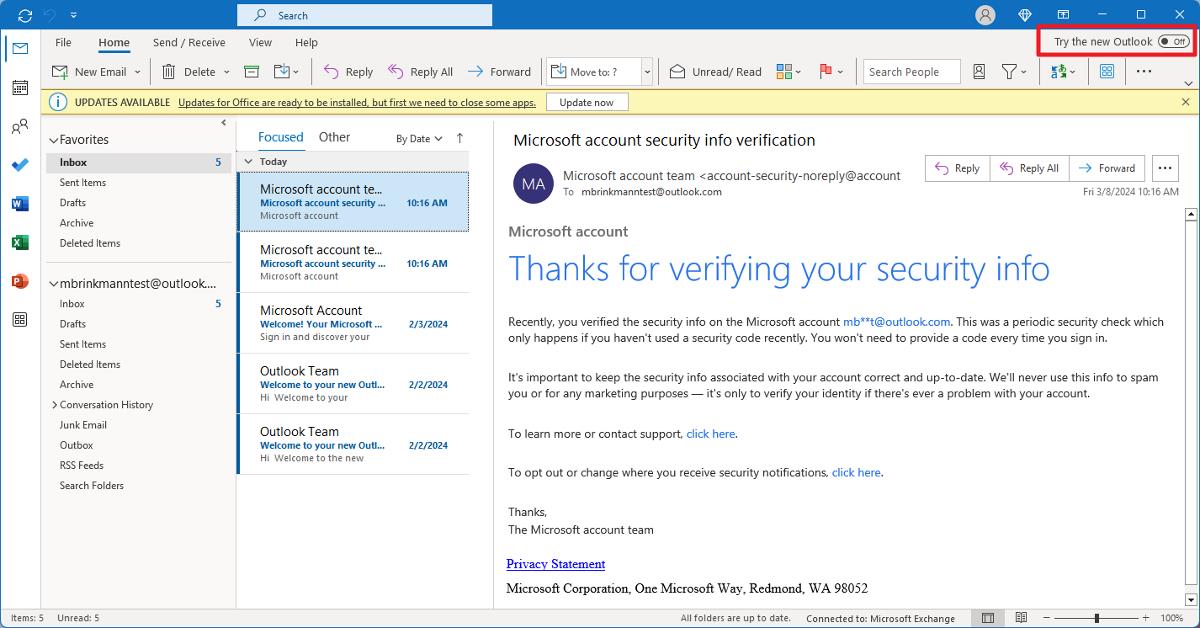

JSON is everywhere. Whether you’re building APIs, managing configs, or wrangling data for machine learning models, you’ve probably dealt with JSON files that feel like they’re bursting at the seams.

Now, consider a common problem: large language models (LLMs) with an ~8k token output limit. Suddenly, you’re not just dealing with one big JSON—you’re piecing together multiple chunks from an LLM’s output.

Or maybe you’re syncing updates across distributed systems where JSON diffs are your lifeline.

This is where JSON Patch shines. It’s a tool for making precise updates to JSON documents without rewriting everything from scratch.

Think of it as a surgeon’s scalpel for your data—small, targeted changes instead of a sledgehammer.

In this post, we’ll dive into the json-patch library by Evan Phoenix, explore how it solves real-world problems like stitching LLM outputs, and walk through examples to get you started.

We’ll cover:

- Why JSON Patch matters for big JSONs

- Setting up and configuring the `json-patch` library

- Patching LLM output chunks into one JSON

- Handling common JSON operation combos

- Tips and tricks for smoother patching

Let’s get into it.

Why JSON Patch Matters for Big JSONs

JSON grows fast. A single LLM generating configs might spit out 8k tokens per response. Using the common rule of thumb of three to four characters per token, that is roughly 25-30k characters, and often less for punctuation-heavy JSON. If your target JSON is bigger than that, you're stuck stitching pieces together. Manually merging those chunks is a nightmare: you risk overwriting data, missing updates, or introducing bugs from sloppy key-value mismatches.

Then there’s the broader world of JSON ops. Maybe you’re:

- Updating a huge config file in a microservice without redeploying.

- Syncing client-side changes to a server’s JSON store.

- Comparing two JSON docs to spot differences.

JSON Patch (RFC 6902) lets you define operations like "add this key," "replace that value," or "remove this field" in a compact, reusable way. Meanwhile, JSON Merge Patch (RFC 7396) offers a simpler, diff-like approach. The patch is itself a partial JSON document shaped like the target: each key sets a value, and `null` deletes one. The json-patch library supports both, making it a Swiss Army knife for JSON surgery.

For LLMs, JSON Patch is a lifesaver. You can take each 8k-token chunk, turn it into a patch, and apply it sequentially to build the final document. No more clunky string concatenation or fragile manual merges.

Here’s a quick comparison of the two approaches supported by the library:

| Approach | Spec | Best For | Example |
|---|---|---|---|
| JSON Patch | RFC 6902 | Precise, operation-based updates | `[{"op": "add", "path": "/key", "value": "val"}]` |
| JSON Merge Patch | RFC 7396 | Simple, diff-like changes | `{"key": "newval", "oldkey": null}` |
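The merge-patch row is easier to reason about once you've seen the algorithm. RFC 7396 (section 2) spells it out in a few lines of pseudocode. Below is a stdlib-only Go translation, intended only as an illustration, since the library already implements this for you. `mergePatch` and `applyMergePatch` are names made up for this sketch, and it needs Go 1.18+ for `any`.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// mergePatch translates the MergePatch pseudocode from RFC 7396, section 2,
// over values decoded by encoding/json (JSON objects become map[string]any).
func mergePatch(target, patch any) any {
	p, ok := patch.(map[string]any)
	if !ok {
		return patch // non-object patches (arrays, strings, numbers...) replace the target
	}
	t, ok := target.(map[string]any)
	if !ok {
		t = map[string]any{} // target wasn't an object: start from an empty one
	}
	for name, value := range p {
		if value == nil {
			delete(t, name) // null means "remove this member"
		} else {
			t[name] = mergePatch(t[name], value)
		}
	}
	return t
}

// applyMergePatch decodes both documents, merges them, and re-encodes the result.
func applyMergePatch(doc, patch []byte) ([]byte, error) {
	var d, p any
	if err := json.Unmarshal(doc, &d); err != nil {
		return nil, err
	}
	if err := json.Unmarshal(patch, &p); err != nil {
		return nil, err
	}
	return json.Marshal(mergePatch(d, p))
}

func main() {
	out, err := applyMergePatch(
		[]byte(`{"key": "oldval", "oldkey": 1, "keep": true}`),
		[]byte(`{"key": "newval", "oldkey": null}`),
	)
	if err != nil {
		panic(err)
	}
	fmt.Println(string(out)) // {"keep":true,"key":"newval"}

	// Arrays are replaced, not merged element by element.
	out, err = applyMergePatch([]byte(`{"tags": ["a", "b"]}`), []byte(`{"tags": ["c"]}`))
	if err != nil {
		panic(err)
	}
	fmt.Println(string(out)) // {"tags":["c"]}
}
```

The last case shows a real limitation: merge patches replace arrays wholesale. That's a good reason to reach for RFC 6902 when you need to touch a single item inside a list.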

Let’s set up the tools and see it in action.

Setting Up and Configuring json-patch

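First, grab the library. If you're using Go (and you probably are if you're here), it's a one-liner:

```shell
go get -u github.com/evanphx/json-patch/v5
```

That pulls the latest release of v5, which is the current major version as of April 2025. The package name is `jsonpatch`, so the examples below assume `import jsonpatch "github.com/evanphx/json-patch/v5"`.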

The library’s defaults are solid, but you can tweak a couple of global settings:

- `SupportNegativeIndices`: Set to `true` by default. Lets you use negative array indices (e.g., `-1` for the last element). Turn it off with `jsonpatch.SupportNegativeIndices = false` if you hate non-standard tricks.

- `AccumulatedCopySizeLimit`: Caps the byte size increase from `copy` operations. Defaults to `0` (no limit), but you can set it to avoid memory blowups.

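For more control, call `ApplyWithOptions` on a decoded patch. It takes an `*ApplyOptions`. `jsonpatch.NewApplyOptions()` returns one pre-filled from the package-level settings above, and the struct adds two extra flags:

- `AllowMissingPathOnRemove`: Ignores `remove` ops on non-existent paths if `true`. Default is `false` (errors out).

- `EnsurePathExistsOnAdd`: Creates missing parent paths during `add` ops if `true`. Default is `false`.

Here's how to set it up:

```go
opts := jsonpatch.NewApplyOptions()
opts.EnsurePathExistsOnAdd = true
opts.AllowMissingPathOnRemove = true

// patch comes from jsonpatch.DecodePatch; doc is the []byte you're patching.
result, err := patch.ApplyWithOptions(doc, opts)
```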

Now you’re ready to patch. Let’s tackle that LLM problem next.

Patching LLM Output Chunks into One JSON

Imagine an LLM generating a massive config JSON in chunks due to its 8k token limit. Say you’re building a server config with 20k tokens total—split into three parts. Each chunk updates or adds to the previous one. JSON Patch can stitch them together cleanly.

Here’s the scenario:

- Chunk 1: Base config with server name and port.

- Chunk 2: Adds routes.

- Chunk 3: Updates port and adds a timeout.

Start with an empty JSON doc:

```json
{}
```

Chunk 1 comes in:

```json
{"server": {"name": "api-1", "port": 8080}}
```

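Turn it into a merge patch (simpler for whole-doc updates) and apply it:

```go
original := []byte(`{}`)
chunk1 := []byte(`{"server": {"name": "api-1", "port": 8080}}`)

// Diff the empty starting doc against chunk 1 to produce a merge patch.
patch1, err := jsonpatch.CreateMergePatch(original, chunk1)
if err != nil {
	panic(err)
}

// Apply it to get the running result that later chunks build on.
result, err := jsonpatch.MergePatch(original, patch1)
if err != nil {
	panic(err)
}
```

`result` is now `{"server": {"name": "api-1", "port": 8080}}`.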

Chunk 2 adds routes:

```json
{"server": {"routes": ["/health", "/status"]}}
```

This chunk carries only the new `routes` key, nested under the existing `server` object. Express it as a JSON Patch that targets exactly `/server/routes` and leaves the rest of `server` alone:

```go
patch2JSON := []byte(`[{"op": "add", "path": "/server/routes", "value": ["/health", "/status"]}]`)

patch2, err := jsonpatch.DecodePatch(patch2JSON)
if err != nil {
	panic(err)
}

result, err = patch2.Apply(result)
if err != nil {
	panic(err)
}
```

Now result is {"server": {"name": "api-1", "port": 8080, "routes": ["/health", "/status"]}}.

Chunk 3 updates the port and adds a timeout:

```json
[
  {"op": "replace", "path": "/server/port", "value": 9090},
  {"op": "add", "path": "/server/timeout", "value": 30}
]
```

Apply it:

```go
patch3JSON := []byte(`[{"op": "replace", "path": "/server/port", "value": 9090}, {"op": "add", "path": "/server/timeout", "value": 30}]`)

patch3, err := jsonpatch.DecodePatch(patch3JSON)
if err != nil {
	panic(err)
}

result, err = patch3.Apply(result)
if err != nil {
	panic(err)
}
```

Final result: {"server": {"name": "api-1", "port": 9090, "routes": ["/health", "/status"], "timeout": 30}}.

Key takeaway: Use Merge Patch for big initial diffs, then JSON Patch for targeted updates. For LLMs, process each chunk as it arrives, building the full doc incrementally. Check out RFC 6902 for the full JSON Patch spec.

Handling Common JSON Operation Combos

JSON Patch isn’t just for LLMs. It’s a tool for combining operations in other scenarios too. Here are two common ones:

Syncing Client-Server Updates

A client updates a user profile JSON. The server has its own copy. You need to merge changes without clobbering unrelated fields.

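Server JSON:

```json
{"user": {"name": "John", "age": 24, "role": "dev"}}
```

Client changes (only the fields the user edited):

```json
{"user": {"name": "Jane", "age": 25}}
```

Because the client sends only what changed, its JSON is already a valid merge patch, so apply it directly. Don't run it through `CreateMergePatch`. That function treats its second argument as the complete new document, so the diff would contain `"role": null` and delete the field.

```go
server := []byte(`{"user": {"name": "John", "age": 24, "role": "dev"}}`)
client := []byte(`{"user": {"name": "Jane", "age": 25}}`)

// The client payload is an RFC 7396 merge patch: keys it omits are left alone.
result, err := jsonpatch.MergePatch(server, client)
if err != nil {
	panic(err)
}
```

result: `{"user": {"name": "Jane", "age": 25, "role": "dev"}}`. The role stays intact.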

Combining Multiple Patches

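Say two devs edit the same JSON config independently. Merge their changes into one patch. Order matters: if both patches set the same key, the second one wins.

Patch 1:

```json
{"name": "Jane", "port": null}
```

Patch 2:

```json
{"timeout": 60}
```

Combine them:

```go
patch1 := []byte(`{"name": "Jane", "port": null}`)
patch2 := []byte(`{"timeout": 60}`)

// Squash both merge patches into one; null entries are kept so they still delete keys.
combined, err := jsonpatch.MergeMergePatches(patch1, patch2)
if err != nil {
	panic(err)
}
```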

combined: {"name": "Jane", "port": null, "timeout": 60}. Apply it to the original doc in one shot.

Key takeaway: Use MergeMergePatches to squash multiple merge patches into one. It’s cleaner than chaining applies.

Tips and Tricks for Smoother Patching

Here’s some practical advice to avoid headaches:

- Validate patches: Always check `err` after `DecodePatch` and `CreateMergePatch`, and after every `Apply` or `MergePatch` call. Bad JSON will bite you.

- Test equality: Use `jsonpatch.Equal(doc1, doc2)` to confirm patches produce the expected output. It ignores whitespace and key order.

- Handle missing paths: Set `EnsurePathExistsOnAdd` to `true` (and apply with `ApplyWithOptions`) if your LLM chunks assume nested paths exist. Saves you from "missing path" errors.

- CLI bonus: Install the `json-patch` CLI (`go install github.com/evanphx/json-patch/cmd/json-patch@latest`) to test patches from the terminal. Pipe in a doc and pass one or more patch files with `-p`, like `cat doc.json | json-patch -p patch.json`.

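Example CLI run (`-p` takes a path to a patch file, so write the patch out first):

```shell
echo '[{"op": "add", "path": "/age", "value": 30}]' > patch.json
echo '{"name": "John"}' | json-patch -p patch.json
```

Output: the input document with `"age": 30` added. Key order and whitespace in the printed JSON may differ from the input.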

That’s it! You’ve got the tools to knit massive JSONs together, whether it’s LLM chunks or everyday diffs. Start small with the examples, tweak the options, and scale up. JSON Patch isn’t flashy, but it gets the job done.
