Shadow Testing Superpowers: Four Ways To Bulletproof APIs

Read this article on Signadot's blog.

Shadow testing is unlocking a new generation of testing approaches that provide deeper insights and more confidence than traditional methods.

I’ve spent the last 20+ years building distributed systems, and one truth remains constant: testing microservices is challenging. Engineering leaders are eager for better ways to test complex microservice architectures, and shadow testing is a powerful approach to validating microservice changes safely.

While our previous article focused on the fundamental concept of shadow testing — running two versions of a service side by side against the same traffic — there’s much more to explore about how this approach unlocks entirely new testing signals.

The real magic happens when we leverage this parallel execution to derive actionable insights that traditional testing methods simply can’t provide. Let’s explore four powerful testing approaches that shadow testing enables, each providing unique signals that help developers ship with confidence.

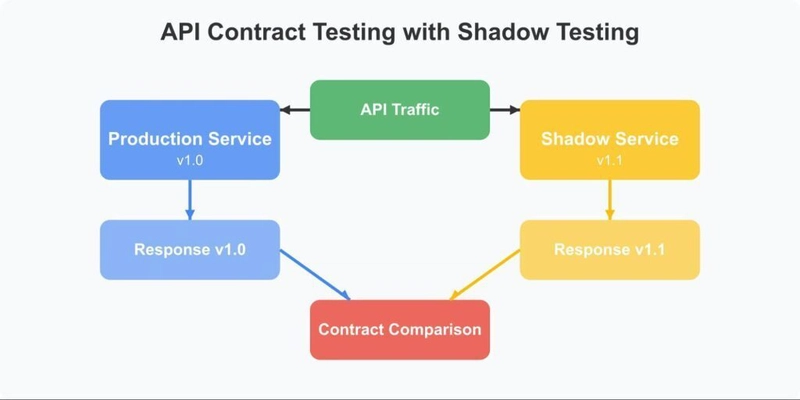

1. API Contract Testing: Detecting Breaking Changes

API contract testing is perhaps the most immediately valuable application of shadow testing. Traditional contract testing relies on mock services and schema validation, which can miss subtle compatibility issues. Shadow testing takes contract validation to the next level by comparing actual API responses between versions.

Here’s how it works:

- Deploy your updated service alongside the baseline version.

- Route identical traffic to both versions.

- Compare the responses in real time.

- Identify any divergence that indicates potential contract breaks.
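The steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Signadot's implementation. `shadow_run` and `Divergence` are hypothetical names, and each "version" is modeled as a plain function so the sketch runs without a network. In a real setup, the two handlers would be HTTP clients pointed at the baseline and at the candidate.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

# Hypothetical stand-in for "call one version of the service":
# takes a request dict, returns a response dict (status + body).
Handler = Callable[[Dict[str, Any]], Dict[str, Any]]


@dataclass
class Divergence:
    request: Dict[str, Any]
    baseline: Dict[str, Any]
    candidate: Dict[str, Any]


def shadow_run(requests: List[Dict[str, Any]],
               baseline: Handler,
               candidate: Handler) -> List[Divergence]:
    """Replay each request against both versions; keep responses that differ."""
    divergences: List[Divergence] = []
    for req in requests:
        base_resp = baseline(req)   # identical traffic goes to both versions
        cand_resp = candidate(req)
        if base_resp != cand_resp:  # compare, and record any divergence
            divergences.append(Divergence(req, base_resp, cand_resp))
    return divergences


# Usage with two toy versions: v2 changed "amount" from a string to a number.
def baseline_v1(req: Dict[str, Any]) -> Dict[str, Any]:
    return {"status": 200, "body": {"id": req["id"], "amount": "10.00"}}


def candidate_v2(req: Dict[str, Any]) -> Dict[str, Any]:
    return {"status": 200, "body": {"id": req["id"], "amount": 10.0}}


for d in shadow_run([{"id": 1}, {"id": 2}], baseline_v1, candidate_v2):
    print(d.request, d.baseline["body"], "->", d.candidate["body"])
```

Plain equality is deliberately strict here. A real system has to ignore fields that legitimately differ between runs, such as timestamps and request IDs. It must also stop mirrored write requests from causing duplicate side effects.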

This approach catches breaking changes that mock-based contract tests can easily miss: field type changes, new required fields, altered error responses and even performance degradation that might violate service-level agreements (SLAs).

It combines the strengths of specification-based contract testing and runtime validation. Rather than only checking whether your new API implementation conforms to a static specification, you’re directly measuring compatibility with the real traffic from everything currently consuming it.

For instance, when a team at a fintech company adopted this approach, shadow testing caught a subtle breaking change in which a field had been converted from string to integer. The team’s OpenAPI validators hadn’t flagged it, but it would have broken several downstream consumers. The shadow testing system highlighted the discrepancy immediately, allowing the team to fix it before merging.
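A raw inequality tells you that two responses differ, but not how they differ. A structural diff makes a string-to-integer change like this one explicit. The sketch below assumes JSON bodies have already been parsed into Python values, and `diff_json` is a hypothetical helper. It treats any change of Python type as a contract difference, including `int` to `float`. That strict rule is an assumption you may want to relax.

```python
from typing import Any, List


def diff_json(baseline: Any, candidate: Any, path: str = "$") -> List[str]:
    """Describe how a candidate JSON value differs from the baseline.

    Reports type changes, removed fields, added fields, list-length
    changes and changed leaf values, each tagged with a JSONPath-like path.
    """
    if type(baseline) is not type(candidate):
        return [f"{path}: type changed "
                f"{type(baseline).__name__} -> {type(candidate).__name__}"]
    problems: List[str] = []
    if isinstance(baseline, dict):
        for key in sorted(baseline.keys() - candidate.keys()):
            problems.append(f"{path}.{key}: removed")
        for key in sorted(candidate.keys() - baseline.keys()):
            problems.append(f"{path}.{key}: added")
        for key in sorted(baseline.keys() & candidate.keys()):
            problems += diff_json(baseline[key], candidate[key], f"{path}.{key}")
    elif isinstance(baseline, list):
        if len(baseline) != len(candidate):
            problems.append(f"{path}: length {len(baseline)} -> {len(candidate)}")
        for i, (b, c) in enumerate(zip(baseline, candidate)):
            problems += diff_json(b, c, f"{path}[{i}]")
    elif baseline != candidate:
        problems.append(f"{path}: value {baseline!r} -> {candidate!r}")
    return problems


print(diff_json({"id": 7, "amount": "10.00"}, {"id": 7, "amount": 10}))
# prints ['$.amount: type changed str -> int']
```

Error responses fit the same shape. Compare a wrapper such as `{"status": ..., "body": ...}`, and a changed status code shows up as a value change at `$.status`.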

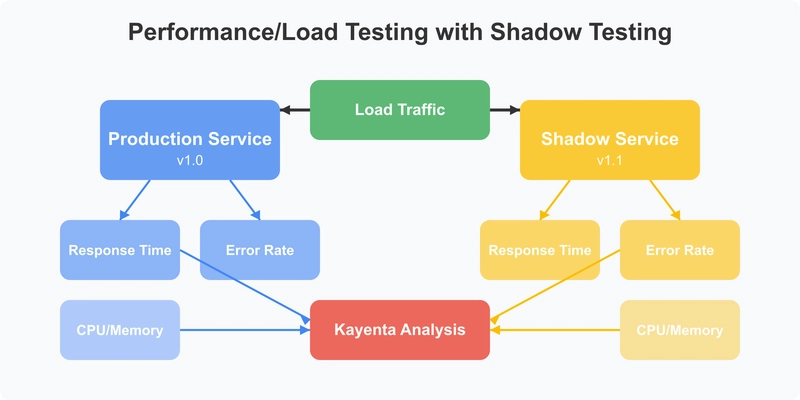

2. Performance Testing: Compare Side by Side

Performance testing is another area where shadow testing shines. Traditional performance testing usually happens late in the development cycle in dedicated environments with synthetic loads that often don’t reflect real-world usage patterns.

With shadow testing, you can:

- Run your new code against actual production traffic patterns.

- Compare key metrics against the baseline version in real time.

- Identify performance regressions before they affect users.

- Validate that optimizations actually deliver expected improvements.
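A first cut at comparing the two versions on the same mirrored traffic can be as simple as comparing latency percentiles. In this sketch, `latency_regressions` is a hypothetical helper and percentiles use the nearest-rank method. The 10% tolerance is an arbitrary assumption you would tune for your service.

```python
import math
from typing import Dict, Sequence, Tuple


def percentile(samples: Sequence[float], pct: int) -> float:
    """Nearest-rank percentile, pct in 1..100."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def latency_regressions(baseline_ms: Sequence[float],
                        candidate_ms: Sequence[float],
                        pcts: Sequence[int] = (50, 95, 99),
                        tolerance: float = 0.10) -> Dict[int, Tuple[float, float]]:
    """Return {pct: (baseline, candidate)} wherever the candidate is more
    than `tolerance` slower than the baseline at that percentile."""
    report: Dict[int, Tuple[float, float]] = {}
    for p in pcts:
        base, cand = percentile(baseline_ms, p), percentile(candidate_ms, p)
        if cand > base * (1 + tolerance):
            report[p] = (base, cand)
    return report


# Faster on typical requests, much slower on a small slice of edge cases.
baseline = [10.0] * 95 + [20.0] * 5
candidate = [9.0] * 95 + [60.0] * 5
print(latency_regressions(baseline, candidate))  # {99: (20.0, 60.0)}
```

Here the median improves while p99 triples. That is a tail regression an average or median alone would hide.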

This approach is particularly powerful when combined with automated canary analysis tools such as Kayenta, which can determine whether performance differences between versions are statistically significant.
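Fixed percentile thresholds are noisy on small samples, so canary-style analysis asks whether a difference is statistically significant. The sketch below is not Kayenta's code. It is a self-contained, two-sided Mann-Whitney U test: a rank-based test that, unlike a t-test, does not assume latencies are normally distributed. It uses the normal approximation with a tie correction, which is reasonable for roughly 20 or more samples per version. The `significantly_slower` helper and its `alpha` default are assumptions.

```python
import math
from statistics import NormalDist
from typing import Sequence, Tuple


def mann_whitney_u(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Return (U for x, two-sided p-value) via the normal approximation.

    U for x counts pairs (xi, yj) with xi > yj, plus 0.5 per tie, so a
    large U means x tends to be larger than y.
    """
    n1, n2 = len(x), len(y)
    if n1 == 0 or n2 == 0:
        raise ValueError("both samples must be non-empty")
    pooled = sorted([(v, 0) for v in x] + [(v, 1) for v in y])  # 0 marks x
    n = n1 + n2
    rank_sum_x = 0.0
    tie_term = 0.0  # sum of (t^3 - t) over groups of tied values
    i = 0
    while i < n:
        j = i
        while j + 1 < n and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # 1-based average rank of the tie group
        rank_sum_x += avg_rank * sum(1 for k in range(i, j + 1) if pooled[k][1] == 0)
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1
    u_x = rank_sum_x - n1 * (n1 + 1) / 2
    mean_u = n1 * n2 / 2
    var_u = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_u <= 0:  # every value identical: no evidence of a difference
        return u_x, 1.0
    z = (u_x - mean_u) / math.sqrt(var_u)
    return u_x, 2 * (1 - NormalDist().cdf(abs(z)))


def significantly_slower(baseline_ms: Sequence[float],
                         candidate_ms: Sequence[float],
                         alpha: float = 0.01) -> bool:
    """True if the candidate's latencies are significantly larger."""
    u, p = mann_whitney_u(candidate_ms, baseline_ms)
    return p < alpha and u > len(candidate_ms) * len(baseline_ms) / 2


baseline = [10 + (i % 5) for i in range(50)]   # 10..14 ms
candidate = [12 + (i % 5) for i in range(50)]  # 12..16 ms
print(significantly_slower(baseline, candidate))  # True
```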

A retail platform I worked with used this approach to validate a major database query optimization. While its synthetic benchmarks showed a 40% improvement, shadow testing against real traffic revealed edge cases where certain user queries were performing worse. The team was able to address these issues before deploying, avoiding what would have been a serious production incident during the company’s peak shopping season.

The beauty of this approach is that it doesn’t just test whether your code functions correctly — it tests whether it functions correctly under real-world conditions with diverse traffic patterns that would be nearly impossible to simulate accurately.

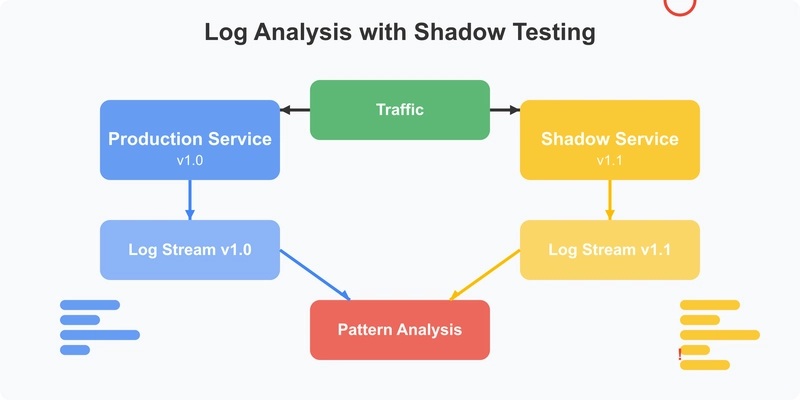

3. Log Analysis: Uncovering Hidden Issues

Log analysis is often overlooked in traditional testing approaches, yet logs contain rich information about application behavior. Shadow testing enables sophisticated log comparisons that can surface subtle issues before they manifest as user-facing problems.

With log-based shadow testing, you can:

- Capture logs from both baseline and test versions.

- Apply machine learning (ML) clustering to identify new or changed log patterns.

- Highlight potentially problematic log sequences.

- Discover errors, warnings or unexpected behaviors that might not trigger test failures.
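Full ML clustering is beyond a short example, but a simpler stand-in captures the core idea. It masks variable tokens (UUIDs, IPs, hex values, numbers) so that each line collapses to a template, then compares template counts between versions. Dedicated log-template miners such as Drain do this far more robustly. Both versions see the same mirrored traffic, so raw counts are roughly comparable. `log_drift` and its 3x spike ratio are hypothetical choices.

```python
import re
from collections import Counter
from typing import Iterable, List, Tuple

# Variable tokens to mask, most specific first, so lines collapse to templates.
_MASKS = [
    (re.compile(r"\b[0-9a-fA-F]{8}(?:-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12}\b"), "<UUID>"),
    (re.compile(r"\b\d{1,3}(?:\.\d{1,3}){3}\b"), "<IP>"),
    (re.compile(r"\b0x[0-9a-fA-F]+\b"), "<HEX>"),
    (re.compile(r"\d+(?:\.\d+)?"), "<NUM>"),
]


def to_template(line: str) -> str:
    """Replace variable tokens with placeholders."""
    for pattern, placeholder in _MASKS:
        line = pattern.sub(placeholder, line)
    return line


def log_drift(baseline: Iterable[str], candidate: Iterable[str],
              spike_ratio: float = 3.0) -> Tuple[List[str], List[str]]:
    """Return (templates only the candidate emits,
    templates the candidate emits at least spike_ratio times as often)."""
    base = Counter(to_template(line) for line in baseline)
    cand = Counter(to_template(line) for line in candidate)
    new = sorted(t for t in cand if t not in base)
    spiking = sorted(t for t in cand if t in base and cand[t] >= spike_ratio * base[t])
    return new, spiking


baseline_logs = ["db connect ok in 12ms", "request id=42 served",
                 "WARN pool wait 5ms active=3/10"]
candidate_logs = ["db connect ok in 9ms", "request id=7 served",
                  "WARN pool wait 250ms active=10/10",
                  "WARN pool wait 310ms active=10/10",
                  "WARN pool wait 480ms active=10/10",
                  "ERROR pool exhausted after 30000ms"]
new, spiking = log_drift(baseline_logs, candidate_logs)
print(new)      # ['ERROR pool exhausted after <NUM>ms']
print(spiking)  # ['WARN pool wait <NUM>ms active=<NUM>/<NUM>']
```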

I’ve seen this approach catch issues that would have slipped through every other testing layer. One engineering team discovered their new code was generating a cluster of database connection warnings that weren’t present in the baseline version. While these warnings didn’t cause immediate failures, they were an early indicator of connection pool exhaustion that would have eventually led to cascading timeouts under load.

This approach bridges testing and observability, creating a feedback loop that helps developers understand the operational impact of their changes before deployment.

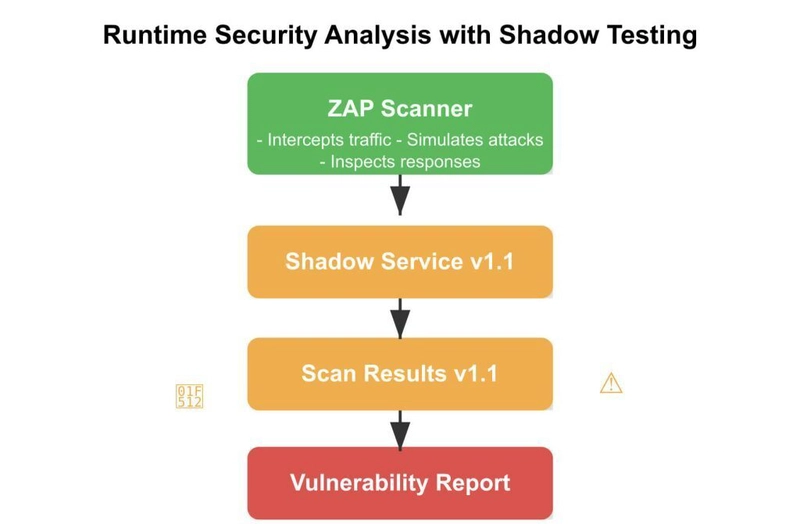

4. API Security Analysis: Shift-Left Security Validation

Perhaps the most innovative application of shadow testing is in the security domain. Traditional security testing often happens too late in the development process, after code has already been deployed. Shadow testing enables a true shift left for security by running dynamic analysis against your changes, using real traffic patterns, before they are merged.

The approach works like this:

- Deploy your service changes to a sandbox environment.

- Run security scanning tools like OWASP ZAP against the sandboxed version.

- Identify and fix security vulnerabilities before merging your code.

- Prevent security issues from reaching production in the first place.
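To make the last two steps enforceable, a CI job can block the merge when the scan of the sandboxed version reports serious findings. This sketch assumes ZAP's traditional JSON report layout: a `site` list whose entries carry `alerts`, each with a string `riskcode` from "0" (informational) to "3" (high). Verify those field names against the report your ZAP version actually writes. `blocking_alerts` and the Medium threshold are hypothetical choices.

```python
import json
import sys
from typing import Any, Dict, List

# ZAP risk codes as assumed to appear in its JSON report (0-3).
RISK_NAMES = {0: "Informational", 1: "Low", 2: "Medium", 3: "High"}


def blocking_alerts(report: Dict[str, Any], min_risk: int = 2) -> List[str]:
    """Return one line per alert whose risk is at least min_risk."""
    sites = report.get("site", [])
    if isinstance(sites, dict):  # tolerate a single site serialized as an object
        sites = [sites]
    findings: List[str] = []
    for site in sites:
        for alert in site.get("alerts", []):
            risk = int(alert.get("riskcode", 0))
            if risk >= min_risk:
                name = alert.get("alert") or alert.get("name", "unknown alert")
                findings.append(f"[{RISK_NAMES.get(risk, risk)}] {name} "
                                f"on {site.get('@name', '?')}")
    return findings


def main(report_path: str) -> int:
    """Print blocking findings; a non-zero exit code fails the CI step."""
    with open(report_path, encoding="utf-8") as fh:
        findings = blocking_alerts(json.load(fh))
    for line in findings:
        print(line)
    return 1 if findings else 0


if __name__ == "__main__" and len(sys.argv) > 1:
    sys.exit(main(sys.argv[1]))
```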

Runtime Security Analysis With Shadow Testing

Zed Attack Proxy (ZAP) is an open source security tool that acts as a “man-in-the-middle” proxy, intercepting and inspecting messages between client and server. It automatically scans for security vulnerabilities by analyzing responses and simulating attacks to discover weaknesses like SQL injection, cross-site scripting and authentication flaws.

This approach is particularly powerful for detecting issues like new API endpoints that might be missing authentication checks, input validation flaws or information disclosure vulnerabilities — all before your code gets merged.
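Shadow testing adds a differential angle to the missing-authentication case. Replay the same request without credentials against both versions. Then flag any endpoint the baseline protected (401 or 403) that the candidate now serves, plus brand-new endpoints that answer without credentials. The input format, `auth_regressions` and the `public` allowlist are all hypothetical. A check like this complements a scanner such as ZAP; it does not replace one.

```python
from typing import Dict, FrozenSet, List

# Hypothetical input: status code returned for an *unauthenticated* replay
# of the same request, keyed by "METHOD /path", for each version.
StatusMap = Dict[str, int]


def auth_regressions(baseline: StatusMap, candidate: StatusMap,
                     public: FrozenSet[str] = frozenset()) -> List[str]:
    """Flag endpoints the candidate serves without credentials but should not."""
    findings: List[str] = []
    for endpoint, cand_status in sorted(candidate.items()):
        if endpoint in public or not 200 <= cand_status < 300:
            continue  # intentionally public, or the candidate did not serve it
        base_status = baseline.get(endpoint)
        if base_status is None:
            findings.append(f"{endpoint}: new endpoint returns {cand_status} without credentials")
        elif base_status in (401, 403):
            findings.append(f"{endpoint}: baseline returned {base_status}, candidate returns {cand_status}")
    return findings


for finding in auth_regressions(
        baseline={"GET /accounts": 401, "GET /health": 200},
        candidate={"GET /accounts": 200, "GET /health": 200,
                   "GET /accounts/export": 200, "POST /admin": 403}):
    print(finding)
```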

According to a recent report from Gartner, “By 2025, 60% of organizations will use automated security testing embedded in CI/CD pipelines to detect security vulnerabilities before deployment, up from 20% in 2021.” Shadow testing provides an ideal framework for this kind of automated security validation.

By integrating security scanning directly into your pre-merge testing workflow, you catch vulnerabilities during development when they’re easiest and cheapest to fix. This eliminates the traditional security bottleneck where issues are discovered late in the development cycle, requiring costly rework and delaying releases.

Self-Maintaining Tests: Automatic Pattern Detection

What makes these shadow testing approaches particularly valuable is their inherently low-maintenance nature. Unlike traditional testing approaches that require constantly updating test suites, mocks and assertions, shadow testing uses real traffic and automated comparisons to detect issues with minimal human intervention.

The power lies in the baseline comparison: by running both versions side by side and automatically identifying differences in behavior, these tests essentially write themselves. They can detect subtle issues, emerging patterns and regressions without requiring engineers to anticipate every possible edge case.
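One well-known way to keep baseline comparison from drowning in false positives is the approach popularized by Twitter's open source Diffy. Run two instances of the baseline (primary and secondary) next to the candidate. Anything that differs between the two baselines, such as timestamps or random IDs, is treated as noise, and only the candidate differences that remain are reported. This sketch is a simplified illustration of that idea with hypothetical helper names; it is not Diffy's code.

```python
from typing import Any, Dict, Set

_MISSING = object()  # marks a path present in only one response


def flatten(value: Any, path: str = "$") -> Dict[str, Any]:
    """Map each leaf of a JSON value to its path, e.g. {"$.a[0]": 1}."""
    if isinstance(value, dict) and value:
        out: Dict[str, Any] = {}
        for key, child in value.items():
            out.update(flatten(child, f"{path}.{key}"))
        return out
    if isinstance(value, list) and value:
        out = {}
        for i, child in enumerate(value):
            out.update(flatten(child, f"{path}[{i}]"))
        return out
    return {path: value}  # scalars and empty containers are leaves


def differing_paths(a: Any, b: Any) -> Set[str]:
    """Paths whose leaf differs in value or type, or exists on one side only."""
    fa, fb = flatten(a), flatten(b)
    diffs = set()
    for p in fa.keys() | fb.keys():
        x, y = fa.get(p, _MISSING), fb.get(p, _MISSING)
        if type(x) is not type(y) or x != y:
            diffs.add(p)
    return diffs


def candidate_diffs(primary: Any, secondary: Any, candidate: Any) -> Set[str]:
    """Primary-vs-candidate differences minus baseline-vs-baseline noise."""
    noise = differing_paths(primary, secondary)
    return differing_paths(primary, candidate) - noise


primary = {"id": 1, "ts": "12:00:01", "amount": "10.00"}
secondary = {"id": 1, "ts": "12:00:02", "amount": "10.00"}
candidate = {"id": 1, "ts": "12:00:03", "amount": 10}
print(candidate_diffs(primary, secondary, candidate))  # {'$.amount'}
```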

This fundamentally changes the testing paradigm. Instead of spending countless hours maintaining brittle test fixtures, teams can focus on building features while automated differential analysis provides the safety net.

The pioneers of microservices at companies like Netflix and Uber have been using variations of these techniques for years. The difference now is that modern tools are making these low-maintenance, high-signal testing approaches accessible to engineering teams of all sizes.

Conclusion

Shadow testing is unlocking a new generation of testing approaches that provide deeper insights and more confidence than traditional methods. By detecting API contract issues, performance regressions, suspicious log patterns and security vulnerabilities before they reach production, teams can ship faster with greater confidence.

If you’re interested in exploring how shadow testing could transform your microservices testing strategy, I’d love to continue the conversation. Check us out at signadot.com or join our community discussions.

The future of microservices testing isn’t just about running more tests earlier — it’s about getting better signals that truly predict production behavior. Shadow testing is leading this evolution, proving that with the right approach, we can have both speed and quality.
