Root Cause Analysis Guide: Ensuring Uptime Post-Incident

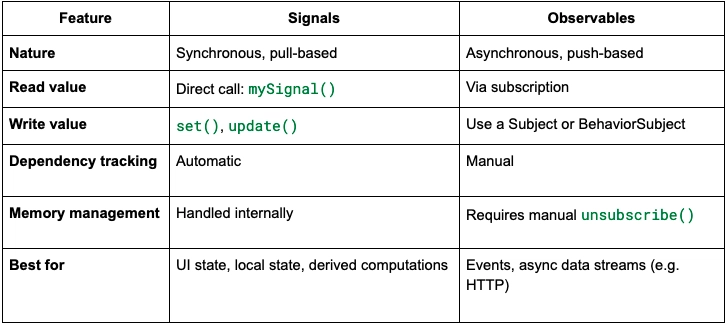


It's Monday morning. Your phone explodes with notifications. Your main service is down, customers are complaining on Twitter, and the executive team wants answers.

What do you do after you've put out the fire? How do you make sure it never happens again?

This is where Root Cause Analysis (RCA) comes in—the systematic approach that helps you get beyond the symptoms to find and fix the actual causes of your outages.

Beyond "It's Fixed Now"

We've all been there. The service is restored, everyone breathes a sigh of relief, and there's pressure to move on to the next thing. But without proper RCA, you're setting yourself up for the same outage to happen again.

WRONG APPROACH:

1. Service goes down

2. Restart the server

3. Service comes back up

4. Close the incident

5. Repeat next week

RIGHT APPROACH:

1. Service goes down

2. Restore service (temporary fix)

3. Investigate WHY it went down

4. Implement systemic solution

5. Prevent recurrence

Effective uptime monitoring is only half the battle. Understanding why failures happen is how you achieve long-term reliability.

The True Cost of Skipping RCA

Let's put this in perspective. A single hour of downtime can cost:

E-commerce: $10,000-$50,000+ in lost sales

SaaS providers: Customer churn and damaged reputation

Financial services: Regulatory scrutiny and compliance issues

Beyond the immediate costs, there's the compounding effect:

Incident → Quick fix → Recurrence → Larger incident → Emergency fix → Worse recurrence → Major outage → All hands on deck → Exhausted team → Decreased quality → More incidents...

Breaking this cycle requires systematic RCA after every significant incident.

Practical RCA Techniques That Actually Work

The 5 Whys: Simple But Powerful

The 5 Whys technique is deceptively simple—keep asking "why" until you get to the root cause:

Problem: "The payment service was down for 45 minutes."

Why #1: "Because the API server crashed."

Why #2: "Because it ran out of memory."

Why #3: "Because there was a memory leak in the new code."

Why #4: "Because the code wasn't properly reviewed."

Why #5: "Because we rushed the deployment to meet the deadline."

ROOT CAUSE: Inadequate code review process and prioritizing deadlines over quality.

This simple example reveals that the real solution isn't just fixing the memory leak, but improving your code review process and development lifecycle.
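As a rough illustration, the chain above can be recorded as data so that it can be attached to an incident record or reviewed later. This is a minimal sketch: the `FiveWhys` class and its method names are hypothetical, invented here for illustration, and the last answer is treated only as a candidate root cause that the team still has to confirm.

```python
from dataclasses import dataclass, field


@dataclass
class FiveWhys:
    """A problem statement plus the ordered chain of 'why' answers (hypothetical helper)."""
    problem: str
    answers: list[str] = field(default_factory=list)

    def ask_why(self, answer: str) -> "FiveWhys":
        # Each answer explains the previous one (or the problem itself for the first)
        self.answers.append(answer)
        return self

    def deepest_cause(self) -> str:
        # The last answer is the deepest cause found so far: a candidate, not a verdict
        return self.answers[-1] if self.answers else self.problem

    def render(self) -> str:
        lines = [f"Problem: {self.problem}"]
        for depth, answer in enumerate(self.answers, start=1):
            lines.append(f"Why #{depth}: {answer}")
        return "\n".join(lines)


analysis = FiveWhys("The payment service was down for 45 minutes.")
analysis.ask_why("The API server crashed.")
analysis.ask_why("It ran out of memory.")
analysis.ask_why("There was a memory leak in the new code.")
analysis.ask_why("The code wasn't properly reviewed.")
analysis.ask_why("We rushed the deployment to meet the deadline.")

print(analysis.render())
```

Nothing in the structure forces exactly five steps; stop when the answer points at a process you can change.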

Fishbone Diagram: For Complex Multi-Factor Issues

When multiple factors contribute to an incident, a fishbone (Ishikawa) diagram helps visualize them:

Process: No rollback procedure; Missing monitoring

People: New team member; No on-call training

Environment: Cloud provider outage; Network congestion

Technology: Memory leak; Scaling issue; Database locks

▼

[PAYMENT SERVICE OUTAGE]

This visualization helps you identify all contributing factors and address them systematically.

Conducting Effective RCA: A Step-by-Step Guide

1. Gather the Facts (Not Opinions)

Start with hard data—timestamps, logs, metrics, alerts. Create a timeline of the incident:

# Example incident timeline

2023-05-15 14:32:18 UTC - First error appears in payment service logs

2023-05-15 14:33:42 UTC - Memory utilization reaches 92% on API servers

2023-05-15 14:35:01 UTC - First customer reports of payment failures on Twitter

2023-05-15 14:37:28 UTC - Automated alert triggered for high error rates

2023-05-15 14:42:15 UTC - Engineering team acknowledges incident

2023-05-15 14:55:32 UTC - Temporary fix deployed (service restart + memory increase)

2023-05-15 15:17:45 UTC - Service fully restored

Concrete data helps prevent speculation and blame, focusing the team on what actually happened.
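Once the timeline exists as text, the usual response metrics fall out of simple timestamp arithmetic. The sketch below parses the example timeline above and computes time to detect, time to acknowledge, and time to restore. The helper names (`parse_timeline`, `first_event`) and the keyword matching are assumptions made for this example, not part of any particular tool.

```python
from datetime import datetime, timedelta, timezone

TIMELINE = """\
2023-05-15 14:32:18 UTC - First error appears in payment service logs
2023-05-15 14:33:42 UTC - Memory utilization reaches 92% on API servers
2023-05-15 14:35:01 UTC - First customer reports of payment failures on Twitter
2023-05-15 14:37:28 UTC - Automated alert triggered for high error rates
2023-05-15 14:42:15 UTC - Engineering team acknowledges incident
2023-05-15 14:55:32 UTC - Temporary fix deployed (service restart + memory increase)
2023-05-15 15:17:45 UTC - Service fully restored
"""


def parse_timeline(text):
    """Turn 'YYYY-MM-DD HH:MM:SS UTC - description' lines into sorted (datetime, str) pairs."""
    events = []
    for line in text.strip().splitlines():
        stamp, _, description = line.partition(" UTC - ")
        when = datetime.strptime(stamp, "%Y-%m-%d %H:%M:%S").replace(tzinfo=timezone.utc)
        events.append((when, description))
    return sorted(events)


def first_event(events, keyword):
    """Return the timestamp of the first event whose description contains keyword."""
    for when, description in events:
        if keyword.lower() in description.lower():
            return when
    raise ValueError(f"no event matching {keyword!r}")


events = parse_timeline(TIMELINE)
started = events[0][0]
reported = first_event(events, "customer reports")
detected = first_event(events, "alert triggered")
acknowledged = first_event(events, "acknowledges")
restored = first_event(events, "fully restored")

time_to_detect = detected - started            # 0:05:10
time_to_acknowledge = acknowledged - detected  # 0:04:47
time_to_restore = restored - started           # 0:45:27
alert_lag_behind_customers = detected - reported  # 0:02:27

print(f"Time to detect:      {time_to_detect}")
print(f"Time to acknowledge: {time_to_acknowledge}")
print(f"Time to restore:     {time_to_restore}")
print(f"Customers reported {alert_lag_behind_customers} before the alert fired")
```

In this example, customers noticed the failure about two and a half minutes before the automated alert fired, which is exactly the kind of fact a timeline surfaces without anyone having to guess.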

2. Assemble a Cross-Functional Team

RCA works best with diverse perspectives. Include:

Engineers who worked on the affected system

Operations/SRE team members

Someone from customer support (they know the user impact)

A facilitator who wasn't involved in the incident response

The key is to create a safe environment where people can speak freely without fear of blame.

3. Distinguish Between Contributing Factors and Root Causes

Many RCAs fail because they stop at identifying symptoms or intermediate causes:

Contributing Factor vs. Root Cause:

✗ "The database crashed" (symptom)

✗ "The query was inefficient" (intermediate cause)

✓ "We don't have a query review process for new code" (root cause)

✗ "The CDN configuration was wrong" (symptom)

✗ "The deployment included an invalid setting" (intermediate cause)

✓ "Our deployment process lacks validation tests" (root cause)

True root causes are typically process or system issues, not individual mistakes or technical failures.

4. Document and Share Learnings

Create a standardized template for RCA reports that includes:

# Incident RCA: Payment Service Outage (May 15, 2023)

## Summary

Brief description of the incident, its duration, and impact.

## Timeline

Detailed sequence of events with timestamps.

## Root Causes

Primary and contributing factors that led to the incident.

## Immediate Actions Taken

Steps taken to mitigate and resolve the incident.

## Long-term Preventive Measures

Systemic changes to prevent recurrence.

## Lessons Learned

Key insights gained from this incident.

## Action Items

Specific tasks, owners, and deadlines.

Share this report broadly within your organization to spread the learning.
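If you want every report to follow the same structure, one option is to generate the skeleton from code. The following is a minimal sketch that renders the section headings from the template above; the `RcaReport` class, its field names, and the "(to be completed)" placeholder are assumptions made for illustration.

```python
from dataclasses import dataclass, field

PLACEHOLDER = "(to be completed)"


def _bullets(entries):
    """Render a list of strings as bullet lines, or a visible placeholder if empty."""
    return "\n".join(f"- {entry}" for entry in entries) if entries else PLACEHOLDER


@dataclass
class RcaReport:
    """Hypothetical container mirroring the sections of the RCA template."""
    title: str
    summary: str = ""
    timeline: list[str] = field(default_factory=list)
    root_causes: list[str] = field(default_factory=list)
    immediate_actions: list[str] = field(default_factory=list)
    preventive_measures: list[str] = field(default_factory=list)
    lessons_learned: list[str] = field(default_factory=list)
    action_items: list[str] = field(default_factory=list)

    def to_markdown(self) -> str:
        sections = [
            ("Summary", self.summary or PLACEHOLDER),
            ("Timeline", _bullets(self.timeline)),
            ("Root Causes", _bullets(self.root_causes)),
            ("Immediate Actions Taken", _bullets(self.immediate_actions)),
            ("Long-term Preventive Measures", _bullets(self.preventive_measures)),
            ("Lessons Learned", _bullets(self.lessons_learned)),
            ("Action Items", _bullets(self.action_items)),
        ]
        lines = [f"# Incident RCA: {self.title}"]
        for heading, body in sections:
            lines += ["", f"## {heading}", body]
        return "\n".join(lines)


report = RcaReport(
    title="Payment Service Outage (May 15, 2023)",
    summary="The payment service was down for 45 minutes.",
    root_causes=["Inadequate code review process"],
)
print(report.to_markdown())
```

Rendering empty sections with a visible placeholder, rather than omitting them, makes it obvious when a report has been shared before the analysis is finished.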

Building a Robust RCA Process

1. Set Up Proper Monitoring Before Incidents Happen

You can't analyze what you don't measure. Comprehensive website uptime monitoring gives you the data you need for effective RCA:

Essential Monitoring Coverage:

- Application performance

- Infrastructure metrics

- User experience data

- Dependency health

- Business impact metrics

Tools like Bubobot provide the uptime monitoring foundation you need, recording the critical data points that make RCA possible when incidents occur.
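To make "recording the critical data points" concrete, here is a generic sketch of a single uptime probe that uses only the Python standard library. It is not Bubobot's API or any vendor's implementation; `ProbeResult`, `probe`, and the injectable `opener` parameter are names invented for this example.

```python
import time
import urllib.error
import urllib.request
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class ProbeResult:
    """One recorded data point: when we checked, what came back, how long it took."""
    url: str
    checked_at: datetime
    ok: bool
    status: Optional[int]
    latency_ms: float
    error: Optional[str] = None


def probe(url: str, timeout: float = 5.0, opener=urllib.request.urlopen) -> ProbeResult:
    """Issue one HTTP GET and record the outcome instead of raising on failure."""
    checked_at = datetime.now(timezone.utc)
    started = time.monotonic()
    status, error = None, None
    try:
        with opener(url, timeout=timeout) as response:
            status = response.status
    except urllib.error.HTTPError as exc:
        # The server answered, but with a 4xx/5xx status
        status, error = exc.code, str(exc)
    except OSError as exc:
        # DNS failure, refused connection, timeout, and similar network errors
        error = str(exc)
    latency_ms = (time.monotonic() - started) * 1000
    ok = status is not None and 200 <= status < 400
    return ProbeResult(url, checked_at, ok, status, latency_ms, error)
```

Calling `probe("https://example.com/health")` on a schedule and storing each `ProbeResult` gives you timestamped availability and latency history to consult during an RCA. The URL is a placeholder, and the `opener` argument exists only so the function can be exercised without real network access.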

2. Automate Data Collection During Incidents

Create automated tools that gather relevant data during an incident:

    # Simplified pseudocode for incident data collection.
    # fetch_logs, fetch_metrics, and the other helpers are placeholders for your own tooling.
    from datetime import timedelta

    def collect_incident_data(incident_id, service_name, start_time, end_time):
        # Look back 24 hours for changes that may have triggered the incident
        lookback_start = start_time - timedelta(hours=24)
        data = {
            "logs": fetch_logs(service_name, start_time, end_time),
            "metrics": fetch_metrics(service_name, start_time, end_time),
            "alerts": get_triggered_alerts(start_time, end_time),
            "deployments": get_recent_deployments(service_name, lookback_start, end_time),
            "config_changes": get_config_changes(service_name, lookback_start, end_time),
            "user_reports": get_support_tickets(start_time, end_time),
        }
        # incident_id is passed in by the caller; the original snippet used it without defining it
        create_incident_folder(incident_id)
        save_incident_data(incident_id, data)
        return generate_initial_timeline(data)

This data becomes the foundation for your RCA process.

3. Focus on Systems, Not Blame

The language you use in RCA matters tremendously:

Blame-oriented: "John deployed the code without proper testing."

System-oriented: "Our deployment process lacks automated test gates."

Blame-oriented: "The on-call engineer responded too slowly."

System-oriented: "Our alerting system failed to prioritize critical issues."

Always frame findings in terms of systems and processes that can be improved, not individuals who made mistakes.

Common Pitfalls to Avoid

Stopping at the technical cause

The memory leak isn't the root cause; the process that allowed it into production is.

The "human error" cop-out

"Human error" is never a root cause; it's a symptom of inadequate systems and safeguards.

Focusing only on prevention

Sometimes improving detection and response is more practical than complete prevention.

Not allocating time for fixes

An RCA without scheduled implementation time for the solutions is just a document.
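One lightweight way to guard against the last pitfall is to check, before an RCA is closed, that every action item has an owner and a deadline. The sketch below assumes a hypothetical `ActionItem` structure; the tasks, owner name, and date are made up for illustration.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class ActionItem:
    """A follow-up task from an RCA (hypothetical structure)."""
    task: str
    owner: Optional[str] = None
    due: Optional[date] = None


def unscheduled(items):
    """Return the action items that lack an owner or a deadline."""
    return [item for item in items if not item.owner or item.due is None]


items = [
    ActionItem(
        "Add automated test gates to the deployment process",
        owner="platform-team",      # illustrative owner
        due=date(2023, 6, 15),      # illustrative deadline
    ),
    ActionItem("Introduce a query review step for new code"),
]

for item in unscheduled(items):
    print(f"Needs an owner and a deadline: {item.task}")
```

A check like this could run as part of whatever workflow marks the incident as closed, so that unowned follow-ups are caught while the incident is still fresh.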

Tools That Support Effective RCA

Various tools can enhance your RCA process:

Log aggregation platforms for centralized log analysis

Tracing systems for tracking requests across services

Timeline visualization tools for mapping incident progression

Collaborative documentation for team input during analysis

For the foundation of effective RCA, tools like Bubobot provide essential uptime monitoring capabilities that:

Establish performance baselines

Capture detailed metrics during incidents

Retain historical data for trend analysis

Alert teams promptly when issues arise

The Bottom Line

Root Cause Analysis isn't just a technical exercise—it's an investment in your system's future reliability. Every incident is an opportunity to make your systems more resilient, your processes more robust, and your team more effective.

By implementing a systematic RCA process, you transform outages from recurring nightmares into one-time learning experiences. The time you spend on thorough RCA will pay dividends in reduced downtime, happier customers, and fewer middle-of-the-night pages.

What's your team's approach to post-incident analysis? Have you found techniques that work particularly well for your environment?

For a deeper dive into implementing effective RCA processes with practical templates and examples, check out our comprehensive guide on the Bubobot blog.

#RootCauseAnalysis #DevOps #UptimeMonitoring

Read more at https://bubobot.com/blog/root-cause-analysis-guide-ensuring-uptime-post-incident?utm_source=dev.to
