Prompt engineering and other fantastic creatures

Harnessing the Power of Prompt Engineering in AI Development

Prompt engineering is the interface between human operators and Artificial Intelligence (AI), and it is central to getting useful results from Large Language Models (LLMs). Here, we explore how several prompt engineering techniques make interactions with AI more precise and practical.

Understanding Foundation Models and LLMs

Prompt engineering builds on LLMs, which are trained on vast datasets to understand and generate human-like text. These models can handle many tasks with little to no additional tuning when they are given effectively designed prompts.

Practical Applications

In practical terms, LLMs have been instrumental across various fields, from easing content creation processes in digital marketing to enhancing decision-making in financial analysis. The flexibility of LLMs to adapt to different tasks makes them invaluable across sectors.

The Essence of Prompt Engineering: Dissecting the Components of a Prompt

Prompt engineering is crucial for optimizing interactions with AI models, ensuring they produce accurate and relevant responses. Each prompt consists of several key elements:

1. Instruction

This is the directive part of the prompt, clearly telling the AI the task to perform, such as “summarize the text.”

2. Data Input

This is the content on which the AI acts, such as a specific text or question. It directly influences the AI’s response.

3. Output Format

This specifies how the response should be structured, ensuring it meets certain criteria like a full sentence or a list.

4. Context

Providing relevant background or additional information can significantly enhance the effectiveness and relevance of the AI’s output.

Crafting the Perfect Prompt: For example, a prompt that analyzes customer feedback sentiment combines the four components as follows:

- Instruction: Report the sentiment of the feedback.

- Data Input: The text of the feedback.

- Output Format: Categorize as positive, negative, or neutral.

- Context: Information about the related product/service.

By meticulously combining these components, prompt engineering transforms the capabilities of AI models to deliver more precise and practical outcomes.
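As a minimal sketch of how the four components could be combined in code, the snippet below joins them into one prompt string. The `PromptParts` class, the `build_prompt` helper, the section labels, and the sample feedback text are all illustrative assumptions, not part of any particular library.

```python
from dataclasses import dataclass


@dataclass
class PromptParts:
    """The four prompt components (class and field names are hypothetical)."""
    instruction: str
    data_input: str
    output_format: str
    context: str = ""  # optional background information


def build_prompt(parts: PromptParts) -> str:
    """Join the non-empty components into a single prompt string."""
    sections = [
        ("Context", parts.context),
        ("Instruction", parts.instruction),
        ("Output format", parts.output_format),
        ("Input", parts.data_input),
    ]
    return "\n".join(f"{label}: {text}" for label, text in sections if text)


# Sample values are invented for illustration only.
prompt = build_prompt(
    PromptParts(
        instruction="Report the sentiment of the feedback.",
        data_input="The checkout page kept timing out.",
        output_format="Answer with one word: positive, negative, or neutral.",
        context="Feedback about an online store's checkout flow.",
    )
)
print(prompt)
```

Keeping the components as separate fields makes it easy to swap the data input while reusing the same instruction, output format, and context.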

Prompt engineering shapes what a model receives as input and therefore what it produces as output. Aligning the prompt with the specific needs of the task can dramatically affect the usability and accuracy of the AI's responses. Here's a deeper look at some of the pivotal prompt engineering techniques:

Zero-Shot Prompting

Zero-shot prompting is a technique where the model is directed to execute a task without the use of examples or demonstrations within the prompt itself. This approach relies purely on the model’s pre-trained understanding to carry out specific instructions.

In our exploration, we employed several zero-shot prompts, such as the following text classification example:

Prompt:

Classify the text into neutral, negative, or positive.

Text: I think the vacation is okay.

Sentiment:

Output:

Neutral
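The zero-shot prompt above could be assembled and its completion checked with a few lines of Python. This is only a sketch: `zero_shot_prompt` and `parse_label` are hypothetical helper names, and the call to an actual model is deliberately left out.

```python
from typing import List, Optional

LABELS = ["neutral", "negative", "positive"]


def zero_shot_prompt(text: str, labels: List[str]) -> str:
    """Build a classification prompt that contains no worked examples."""
    if len(labels) < 2:
        raise ValueError("need at least two labels to classify between")
    label_list = ", ".join(labels[:-1]) + f", or {labels[-1]}"
    return f"Classify the text into {label_list}.\n\nText: {text}\nSentiment:"


def parse_label(raw_output: str, labels: List[str]) -> Optional[str]:
    """Map a raw completion to one of the allowed labels, or None if it matches none."""
    cleaned = raw_output.strip().lower().rstrip(".")
    return cleaned if cleaned in labels else None


prompt = zero_shot_prompt("I think the vacation is okay.", LABELS)
print(prompt)
print(parse_label(" Neutral\n", LABELS))
```

Normalizing the completion before comparing it to the label set guards against harmless variations in case, whitespace, or trailing punctuation.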

Improvements in zero-shot performance have been linked to instruction tuning, as demonstrated by Wei et al. (2022). Instruction tuning means fine-tuning models on datasets that are described via instructions. Additionally, Reinforcement Learning from Human Feedback (RLHF) has been instrumental in scaling instruction tuning by aligning the model more closely with human preferences.

Few-Shot Prompting

Few-shot prompting is a technique that enhances model performance by providing specific examples within the prompt. These examples condition the model, helping it learn the context and improve its responses for subsequent queries.

Research by Touvron et al. (2023) indicates that the benefits of few-shot prompting emerged as models were scaled up significantly (Kaplan et al., 2020).

Here's an application of few-shot prompting demonstrated by Brown et al. (2020):

Prompt:

A "whatpu" is a small, furry animal native to Tanzania.

Use "whatpu" in a sentence.

Example: We were traveling in Africa and we saw these very cute whatpus.

To do a "farduddle" means to jump up and down really fast.

Use "farduddle" in a sentence.

Output:

When we won the game, we all started to farduddle in celebration.

This example shows that the model can effectively apply a new word in context with just a single example provided (1-shot). For more complex tasks, increasing the number of examples (3-shot, 5-shot, etc.) might be necessary.
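A k-shot prompt is the same query preceded by k demonstrations, so moving from 1-shot to 3-shot or 5-shot is a matter of how many demonstrations are included. The sketch below assumes a hypothetical `few_shot_prompt` helper and a simple blank-line layout. Neither is prescribed by Brown et al.

```python
from typing import List, Tuple

Demonstration = Tuple[str, str]  # (task text, example answer)


def few_shot_prompt(demonstrations: List[Demonstration], query: str, k: int) -> str:
    """Prepend the first k demonstrations to the query, separated by blank lines."""
    blocks = [f"{task}\n{answer}" for task, answer in demonstrations[:k]]
    blocks.append(query)
    return "\n\n".join(blocks)


demos = [
    (
        'A "whatpu" is a small, furry animal native to Tanzania.\n'
        'Use "whatpu" in a sentence.',
        "Example: We were traveling in Africa and we saw these very cute whatpus.",
    ),
]
query = (
    'To do a "farduddle" means to jump up and down really fast.\n'
    'Use "farduddle" in a sentence.'
)

one_shot = few_shot_prompt(demos, query, k=1)
print(one_shot)
```

Passing `k=0` yields the bare query, which is the zero-shot case.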

According to Min et al. (2022), when using few-shot prompting, the label space and the distribution of the input text in the demonstrations both matter, even if the labels are not correct for individual inputs. The format of the examples also significantly influences performance; surprisingly, using random labels is more effective than providing no labels at all. Results improve further when the random labels are drawn from the true label distribution rather than a uniform one, which underscores the importance of how demonstrations are structured.
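To make the difference between the two sampling schemes concrete, the sketch below relabels a set of demonstrations either from their own label frequencies or uniformly from the label space. It only illustrates how such a comparison could be set up and is not Min et al.'s code. The demonstration texts and helper names are invented.

```python
import random
from collections import Counter
from typing import List, Tuple

Demo = Tuple[str, str]  # (input text, label)

# Hypothetical demonstrations: three positive, one negative.
DEMOS: List[Demo] = [
    ("Great battery life.", "positive"),
    ("Arrived on time and works well.", "positive"),
    ("Love the new design.", "positive"),
    ("Stopped working after a week.", "negative"),
]


def relabel_from_distribution(demos: List[Demo], rng: random.Random) -> List[Demo]:
    """Replace each label with one sampled from the demos' own label frequencies."""
    counts = Counter(label for _, label in demos)
    labels = list(counts)
    weights = [counts[label] for label in labels]
    sampled = rng.choices(labels, weights=weights, k=len(demos))
    return [(text, new_label) for (text, _), new_label in zip(demos, sampled)]


def relabel_uniform(demos: List[Demo], label_space: List[str], rng: random.Random) -> List[Demo]:
    """Replace each label with one drawn uniformly from the label space."""
    return [(text, rng.choice(label_space)) for text, _ in demos]


rng = random.Random(0)  # seeded so repeated runs give the same relabeling
print(relabel_from_distribution(DEMOS, rng))
print(relabel_uniform(DEMOS, ["positive", "negative"], rng))
```

In both cases the input texts and the prompt format are unchanged. Only the way labels are assigned differs.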

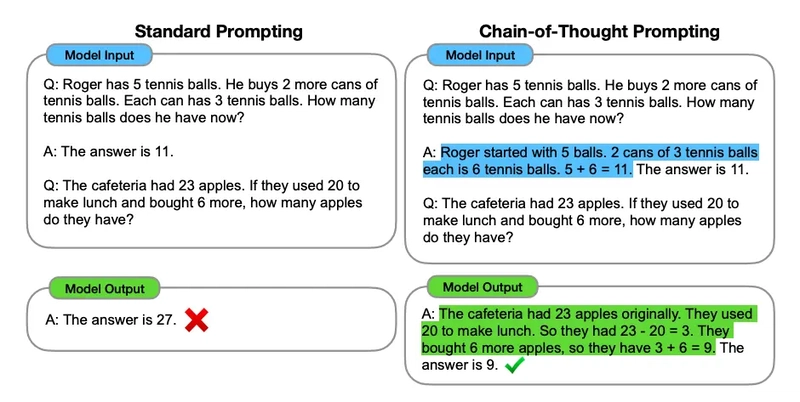

Chain-of-Thought Prompting

Introduced by Wei et al. (2022), chain-of-thought (CoT) prompting facilitates complex reasoning in AI models by guiding them through intermediate steps before arriving at a final response. This method can be further enhanced by combining it with few-shot prompting for tasks that demand intricate reasoning.

Prompt:

The odd numbers in this group add up to an even number: 4, 8, 9, 15, 12, 2, 1.

Adding all the odd numbers (9, 15, 1) totals 25.

The answer is False.

The odd numbers in this group add up to an even number: 17, 10, 19, 4, 8, 12, 24.

Adding all the odd numbers (17, 19) totals 36.

The answer is True.

The odd numbers in this group add up to an even number: 16, 11, 14, 4, 8, 13, 24.

Adding all the odd numbers (11, 13) totals 24.

The answer is True.

The odd numbers in this group add up to an even number: 17, 9, 10, 12, 13, 4, 2.

Adding all the odd numbers (17, 9, 13) totals 39.

The answer is False.

The odd numbers in this group add up to an even number: 15, 32, 5, 13, 82, 7, 1.

Output:

Adding all the odd numbers (15, 5, 13, 7, 1) totals 41.

The answer is False.

With the reasoning steps spelled out in the demonstrations, the model reaches the correct answer. We also observed that even a single example can be enough to guide the model through such arithmetic tasks:

Prompt:

The odd numbers in this group add up to an even number: 4, 8, 9, 15, 12, 2, 1. Adding all the odd numbers (9, 15, 1) totals 25. The answer is False.

The odd numbers in this group add up to an even number: 15, 32, 5, 13, 82, 7, 1.

Output:

Adding all the odd numbers (15, 5, 13, 7, 1) totals 41. The answer is False.
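The rationales in these demonstrations follow a fixed pattern: list the odd numbers, add them, and report whether the sum is even. That makes them easy to generate and to check. The sketch below uses hypothetical helper names (`odd_sum_rationale`, `cot_prompt`) to rebuild the one-shot prompt and compute the expected answer for the final group.

```python
from typing import List

CLAIM = "The odd numbers in this group add up to an even number: "


def odd_sum_rationale(numbers: List[int]) -> str:
    """Spell out the intermediate step (sum of the odd numbers) and the verdict."""
    odds = [n for n in numbers if n % 2 == 1]
    total = sum(odds)
    listed = ", ".join(str(n) for n in odds)
    is_even = total % 2 == 0
    return f"Adding all the odd numbers ({listed}) totals {total}. The answer is {is_even}."


def cot_prompt(demo_groups: List[List[int]], query_group: List[int]) -> str:
    """Build a CoT prompt: each demonstration carries its rationale, the query does not."""
    blocks = [
        CLAIM + ", ".join(map(str, group)) + ". " + odd_sum_rationale(group)
        for group in demo_groups
    ]
    blocks.append(CLAIM + ", ".join(map(str, query_group)) + ".")
    return "\n\n".join(blocks)


query = [15, 32, 5, 13, 82, 7, 1]
print(cot_prompt([[4, 8, 9, 15, 12, 2, 1]], query))
print(odd_sum_rationale(query))  # the rationale a correct completion should match
```

Computing the reference rationale this way also gives a simple check for the model's output.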

Step-Back Prompting

Step-Back Prompting is a method aimed at enhancing the problem-solving capabilities of LLMs by prompting them to abstract general principles before diving into detailed reasoning. This technique encourages models to focus on high-level concepts first, which reduces errors in the intermediate reasoning steps and improves the accuracy of their conclusions. Zheng et al. (2024) outline this approach in their work, highlighting its efficacy in reasoning-heavy tasks across fields like STEM, knowledge-based QA, and multi-hop reasoning. The method has shown notable improvements in models like PaLM-2L, GPT-4, and Llama2-70B, outperforming traditional techniques such as Chain-of-Thought Prompting.

How Step-Back Prompting Operates:

- Abstraction: Initially, the model is prompted to consider the overarching principle or concept relevant to the query.

- Reasoning: Leveraging this high-level abstraction, the model then addresses the specifics of the question.

Prompt:

Question: "What happens to the pressure of an ideal gas if the temperature is increased by a factor of 2 and the volume is increased by a factor of 8?"

Abstraction: The model determines the relevant principle: "Ideal Gas Law."

Output:

Answer: Applying this law, it calculates that the pressure decreases by a factor of 4.
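The factor of 4 follows directly from the ideal gas law. Assuming the amount of gas $n$ stays fixed (which the question leaves implicit), and writing $P_1, V_1, T_1$ for the initial state and $P_2, V_2, T_2$ for the final state:

$$
P = \frac{nRT}{V}
\quad\Longrightarrow\quad
\frac{P_2}{P_1} = \frac{T_2}{T_1}\cdot\frac{V_1}{V_2} = 2 \cdot \frac{1}{8} = \frac{1}{4}
$$

The final pressure is therefore one quarter of the initial pressure.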

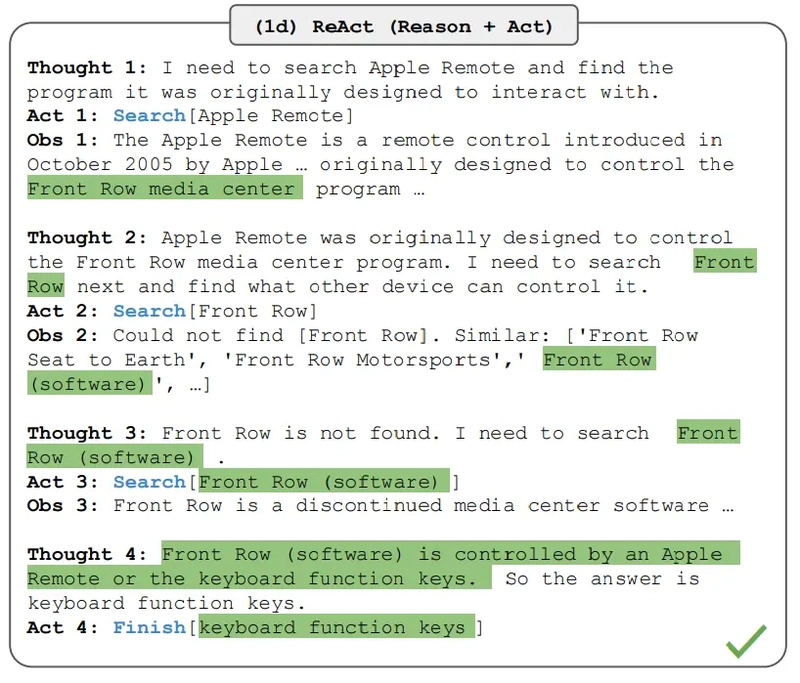
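The two stages, abstraction followed by reasoning, can be sketched as two consecutive model calls. The prompt wording, the `step_back_answer` function, and the `scripted_llm` stand-in below are assumptions made for illustration. They are not the templates used by Zheng et al., and a real setup would replace the stand-in with an actual model client.

```python
from typing import Callable, Dict


def step_back_answer(question: str, llm: Callable[[str], str]) -> Dict[str, str]:
    """Ask for the governing principle first, then answer using that principle."""
    # Stage 1 (abstraction): step back to the high-level concept.
    abstraction_prompt = (
        "What general principle or concept is needed to answer the question below? "
        "Name it briefly.\n\n"
        f"Question: {question}"
    )
    principle = llm(abstraction_prompt).strip()

    # Stage 2 (reasoning): answer the original question grounded in that principle.
    reasoning_prompt = (
        f"Principle: {principle}\n\n"
        "Using this principle, answer the question step by step.\n\n"
        f"Question: {question}"
    )
    answer = llm(reasoning_prompt).strip()
    return {"principle": principle, "answer": answer}


def scripted_llm(prompt: str) -> str:
    """Stand-in for a real model call; returns canned text so the sketch runs offline."""
    if prompt.startswith("Principle:"):
        return "The pressure decreases by a factor of 4."
    return "Ideal Gas Law"


result = step_back_answer(
    "What happens to the pressure of an ideal gas if the temperature is increased "
    "by a factor of 2 and the volume is increased by a factor of 8?",
    scripted_llm,
)
print(result)
```

Passing the model in as a plain callable keeps the two-stage structure independent of any particular provider.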

ReAct Prompting

In 2022, Yao et al. introduced the ReAct framework, which enhances the functionality of Large Language Models (LLMs) by enabling them to generate reasoning traces and perform task-specific actions in an interleaved manner.

The capability to produce reasoning traces allows the model to develop, monitor, and revise action plans effectively, and even manage exceptions as they arise. The action component of ReAct facilitates the integration with external sources, such as knowledge bases or other environments, enabling the model to gather supplementary information.

ReAct significantly augments the capacity of LLMs to interact with external tools, securing more accurate and fact-based responses. The framework has demonstrated superior performance over various state-of-the-art methods in tasks involving language processing and decision-making. Moreover, the integration of ReAct with the chain-of-thought (CoT) prompting enriches the model's responses with both internal knowledge and externally acquired information, enhancing the interpretability and trustworthiness of LLMs.

ReAct is predicated on the interplay between 'acting' and 'reasoning', a combination pivotal for humans learning new tasks or making reasoned decisions. While CoT prompting has proven effective in enabling LLMs to trace logical reasoning in solving problems, it traditionally lacks the capability to interact with the external environment, which can lead to inaccuracies such as fact fabrication.

As a solution, ReAct combines reasoning and acting. It prompts LLMs to generate verbal reasoning traces and to take specific actions relevant to the task at hand. This dual capability allows for dynamic and adaptable reasoning and lets the model fold new information from external sources into that reasoning, as in the question-answering setting that Yao et al. use to illustrate ReAct.
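The interleaving of reasoning, action, and observation can be sketched as a short loop. Everything below is a simplified illustration under stated assumptions: the `Action: Name[argument]` text format follows the style of the ReAct paper's examples, but the `react_loop` function, the toy `Search` tool, the tiny knowledge base, and the scripted model are hypothetical stand-ins, not the authors' implementation.

```python
import re
from typing import Callable, Dict, List, Optional

ACTION_RE = re.compile(r"Action:\s*(\w+)\[(.*?)\]")


def react_loop(
    question: str,
    llm: Callable[[str], str],
    tools: Dict[str, Callable[[str], str]],
    max_steps: int = 5,
) -> Optional[str]:
    """Alternate model steps (thought + action) with tool observations."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = llm(transcript).strip()
        transcript += step + "\n"
        match = ACTION_RE.search(step)
        if match is None:
            break  # no action produced; stop rather than loop blindly
        name, argument = match.group(1), match.group(2)
        if name == "Finish":
            return argument  # the model declared its final answer
        tool = tools.get(name)
        observation = tool(argument) if tool else f"Unknown tool: {name}"
        # Feed the external result back so the next thought can use it.
        transcript += f"Observation: {observation}\n"
    return None


def make_scripted_llm(steps: List[str]) -> Callable[[str], str]:
    """Stand-in model that replays fixed steps, so the sketch runs offline."""
    remaining = iter(steps)
    return lambda transcript: next(remaining)


# Toy knowledge base acting as the external source.
KB = {"ReAct": "ReAct was introduced by Yao et al. in 2022."}
tools = {"Search": lambda query: KB.get(query, "No entry found.")}

llm = make_scripted_llm([
    "Thought: I should look up ReAct.\nAction: Search[ReAct]",
    "Thought: The observation names the authors.\nAction: Finish[Yao et al.]",
])
answer = react_loop("Who introduced the ReAct framework?", llm, tools)
print(answer)
```

The `max_steps` cap and the early exit on a missing action keep the loop from running indefinitely when the model's output does not follow the expected format.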

The Impact and Future of Prompt Engineering

The advancement in prompt engineering techniques is not just enhancing the functionality of AI models but also expanding their potential applications. By improving how we structure inputs and interpret outputs, AI becomes a more powerful tool, capable of undertaking complex tasks with greater autonomy and accuracy.

As we continue to push the boundaries of what AI can achieve through sophisticated prompt engineering, the prospects for future technology—where humans and machines collaborate seamlessly—are immensely promising.
