Practical Applications of AI in Test Automation - Context, Demo with UI-Tars LLM & Midscene (Part 1)


This series of articles will provide a clear and practical guide to AI applications in end-to-end test automation. I will use AI to verify a product's end-to-end functionality, ensuring that it meets the required specifications.

1. Reviewing the Role of End-to-End Testing

To emphasize once again: the primary goal of end-to-end testing is to validate that new features and existing (regression) functionality match the product requirements and design, by simulating customer behavior.

End-to-end testing is widely used for regression validation. It can be performed either manually, for example by writing a sanity checklist and executing it by hand, or through automation, by writing test scripts with tools like Playwright or Appium.

The three key aspects of end-to-end testing are described in the above figure:

- Understand How Users Benefit from the Feature – Identify the value the feature brings to users.

- Design Test Cases from the User's Perspective – Create test scenarios that align with real user interactions.

- Iterate Test Execution During Development – Continuously run test cases throughout the development process to verify that the implemented code meets the required functionality.

2. In Action – Executing Your Test Cases with an AI Agent

In traditional end-to-end test automation, the typical approach is as follows:

- Analyze the functionality – Understand the feature and its expected behavior.

- Analyze and write test cases – Define test scenarios based on user interactions and requirements.

- Write automation scripts – Implement test cases using automation frameworks.

When writing automated test cases, we usually create Page Object-like classes to represent the HTML tree, allowing the test script to interact with or retrieve elements efficiently.
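As a point of reference for the traditional approach, here is a minimal sketch of such a Page Object. It is not taken from the original project: the `SearchPage` class, the `PageLike` interface, and both selectors are hypothetical. `PageLike` only declares the subset of Playwright's `Page` methods used here, so the sketch compiles on its own.

```typescript
// Minimal subset of Playwright's Page API used by this sketch, declared
// locally so the example is self-contained. A real Playwright `Page`
// object satisfies this interface.
interface PageLike {
  fill(selector: string, value: string): Promise<void>;
  press(selector: string, key: string): Promise<void>;
  click(selector: string): Promise<void>;
}

// Page Object for a search results page. Both selectors are hypothetical.
class SearchPage {
  private readonly page: PageLike;
  private readonly searchInput = "input[name='search_text']";
  private readonly productCards = "[data-testid='product-card']";

  constructor(page: PageLike) {
    this.page = page;
  }

  // Type a query into the search bar and submit it with Enter.
  async search(query: string): Promise<void> {
    await this.page.fill(this.searchInput, query);
    await this.page.press(this.searchInput, "Enter");
  }

  // Open the n-th product card (1-based) in the result list.
  async openProduct(n: number): Promise<void> {
    await this.page.click(`${this.productCards} >> nth=${n - 1}`);
  }
}
```

Every selector in a class like this has to be kept in sync with the HTML by hand, which is the maintenance cost the rest of this article tries to reduce.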

Now, let's see how an AI Agent can optimize this process.

2.1 - A Multi-Decision AI Agent Can Optimize the Test Process

Watch a Demo First – See How the AI Agent Works from Its Own Perspective.

The video above (not accelerated) demonstrates how the AI Agent perceives the process: it autonomously interprets the test case, evaluates the webpage's current state (a screenshot), makes plans and decisions, and executes the test. It engages in multi-step decision-making, leveraging various types of reasoning to achieve its goal.

This AI Agent is built on the UI-Tars LLM (7B-SFT) and Midscene (https://midscenejs.com/).

I introduce the models and tools in Section 3.

This is the test case that the AI Agent reads:

Scenario: Customer can search and open product detail page from a search result

Go to https://www.vinted.com

The country selection popup is visible

Select France as the country that I'm living

Accept all privacy preferences

Search 'Chanel', and press the Enter

Scroll down to the 1st product

Click the 2nd product from the 1st row in the product list

Assert Price is visible

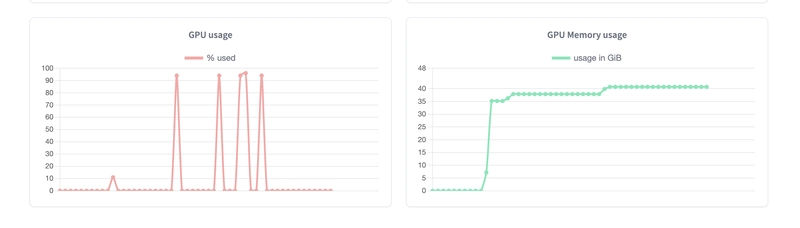

Running this test requires the following hardware:

- NVIDIA L40S: 1 x GPU, 48GB GPU memory, 7 x vCPU, 40GB CPU memory

2.2 - What problems were solved by this AI Agent

Reflecting on what we mentioned in Section 1, end-to-end testing can be performed in two ways: by writing automated test scripts or executing tests manually.

With the introduction of an AI Agent, a new approach emerges—simply providing your test cases to the AI Agent without writing test scripts. The AI Agent then replaces manual execution by autonomously carrying out the test cases.

Specifically, it addresses the following problems:

- Reduces Manual Testing Costs – The AI Agent can interpret test cases written by anyone, eliminating the need to write test scripts and allowing tests to be executed at any time.

- Lowers Test Script Maintenance Effort – The AI Agent autonomously determines the next browser action, reducing the need to modify tests for minor UI changes.

- Increases Accessibility and Participation – Test authoring has shifted from traditional QA engineers writing automation scripts, to a decentralized model where developers contribute, and now to a stage where anyone proficient in English and familiar with the product can write end-to-end test cases.

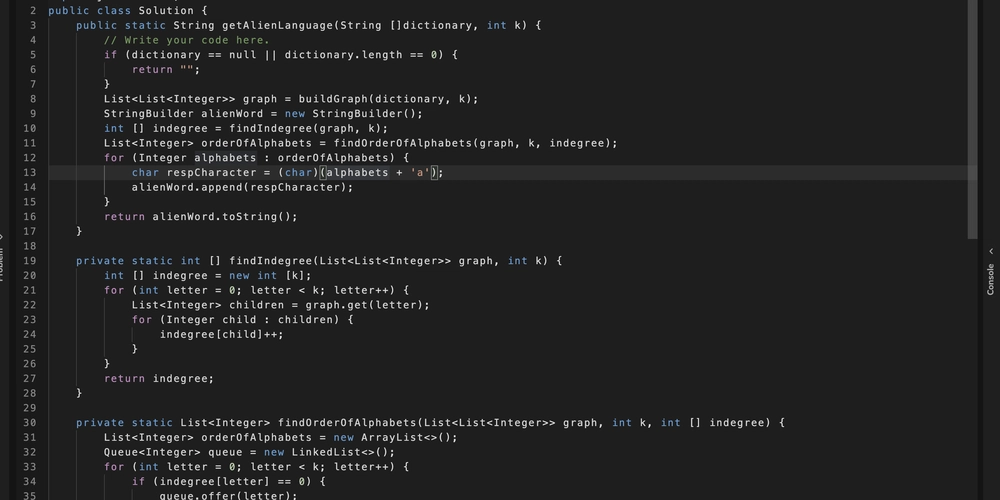

2.3 - Implementation with Playwright

    test("[UI-Tars - Business]a user can search then view a product", async ({ page, ai, aiAssert, aiWaitFor }) => {
      await page.goto("https://www.vinted.com")
      await aiWaitFor('The country selection popup is visible')
      await ai("Select France as the country that I'm living")
      await page.waitForURL((url: URL) => url.hostname.indexOf('vinted.fr') >= 0)
      await ai("Accept all privacy preferences")
      await ai("Click Search bar, then Search 'Chanel', and press the Enter")
      await ai("Scroll down to the 1st product")
      await ai("Click the 2nd product from the 1st row in the product list")
      expect(page.url()).toContain("/items/")
      await aiAssert("Price is visible")
    })
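The test above follows the plain-text scenario from Section 2.1 almost line by line. The sketch below makes that correspondence explicit. It is only an illustration: `planScenario`, `PlannedCall`, and the mapping rules are assumptions inferred from this one example, not part of Midscene. The two extra Playwright checks in the test (`page.waitForURL` and the `expect` on the URL) have no counterpart in the scenario text and are therefore not produced.

```typescript
// One planned call; the fixture names are the ones used in the test above.
type PlannedCall = {
  fixture: "goto" | "aiWaitFor" | "aiAssert" | "ai";
  arg: string;
};

// Hypothetical mapping rules, inferred from the example test:
//   "Go to <url>"     -> page.goto(url)
//   "Assert <x>"      -> aiAssert(x)
//   "<x> is visible"  -> aiWaitFor(whole line)
//   anything else     -> ai(whole line)
function planScenario(scenario: string): PlannedCall[] {
  return scenario
    .split("\n")
    .map((line) => line.trim())
    // Skip blank lines and the "Scenario:" title line.
    .filter((line) => line.length > 0 && !line.startsWith("Scenario:"))
    .map((line): PlannedCall => {
      if (line.startsWith("Go to ")) {
        return { fixture: "goto", arg: line.slice("Go to ".length) };
      }
      if (line.startsWith("Assert ")) {
        return { fixture: "aiAssert", arg: line.slice("Assert ".length) };
      }
      if (line.endsWith(" is visible")) {
        return { fixture: "aiWaitFor", arg: line };
      }
      return { fixture: "ai", arg: line };
    });
}
```

Feeding the scenario from Section 2.1 into `planScenario` yields one `goto`, one `aiWaitFor`, five `ai` calls, and a final `aiAssert`, in the same order as the hand-written test.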

3. Introducing UI-Tars LLM and Midscene

3.1 UI-Tars

UI-Tars is a native, open-source multimodal GUI model, built on top of Qwen-2.5-VL (通义千问 2.5 VL, Tongyi Qianwen). The model processes text and GUI images together and is released in two variants, SFT and DPO, trained on a large number of GUI screenshots. UI-Tars is specifically designed for interacting with GUIs.

It performs well in:

- Browser applications

- Desktop environments and desktop applications

- Mobile devices and mobile applications

It supports prompts in 2 languages:

- Chinese

- English

For more details, see the UI-TARS paper listed in the references.

3.2 Midscene

Midscene is a state machine that builds a multi-step reasoning AI Agent on top of the models you provide.

It supports:

- UI-Tars (the main branch doesn't support aiAssert, aiQuery, and aiWaitFor, but you can check my branch)

- Qwen-2.5 VL (通义千问2.5, I really love this name...)

- GPT-4o

3.3 How it differs from other solutions

Several similar tools are already on the market, such as KaneAI (from LambdaTest, not open-source) and auto-playwright/ZeroStep.

4. The mechanism between UI-Tars & Midscene

4.1 Orchestrations and Comparisons

The core capability of the AI Agent is to plan, reason, and execute multiple steps autonomously, just like a human, based on both visual input and instructions. It continues until it determines that the task is complete.

It possesses 3 key abilities:

- Multi-Step Planning Across Platforms – Given an instruction, it can plan multiple actions across web browsers, desktop, or mobile applications.

- Tool Utilization for Execution – It can leverage external tools to carry out the planned actions.

- Autonomous Reasoning & Adaptation – It can determine whether the task is complete or take additional actions if necessary.

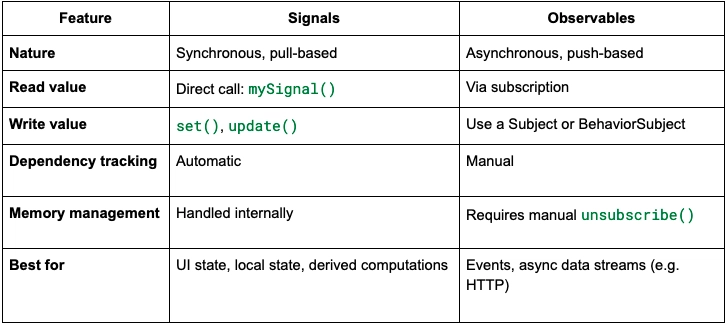

I compared the most popular solutions on the market as of the end of February 2025:

| Solution | AI Agent? | Cost | Additional input to LLM | How HTML elements are located | Multi-step decisions & autonomous reasoning | Playwright integration | Mobile app support | Desktop app support |
|---|---|---|---|---|---|---|---|---|
| UI-Tars (or GPT-4o) + Midscene | Yes | $1.8/h with UI-Tars:7B, or $0.007/test with OpenAI GPT-4o | GUI screenshot | GUI screenshot processing | Yes | Yes | Yes | Yes |
| Llama 3.2 + bound tools + LangGraph | Yes | $0.2/test | HTML | HTML DOM processing | No | Yes | Not yet | No |
| ZeroStep / auto-playwright | Kind of | Unknown | HTML | HTML DOM processing | No | Yes | Unknown | No |
| StageHand (GPT-4o or Claude 3.5) | Yes | Unknown | HTML & GUI screenshot | HTML DOM processing | Not yet | Yes | Not yet | No |

To summarise: UI-Tars (or GPT-4o) combined with Midscene seems to be the most applicable and cheapest approach.
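The two prices quoted for the Midscene row use different units (per hour and per test), so they are easier to compare after a small calculation. The sketch below computes the break-even point from the table's figures. It assumes the GPU is billed by the hour whether it is busy or idle. The function names are illustrative.

```typescript
// Prices quoted in the comparison table (USD).
const GPU_COST_PER_HOUR = 1.8; // UI-Tars:7B on a rented GPU
const GPT4O_COST_PER_TEST = 0.007; // OpenAI GPT-4o

// Tests per GPU-hour at which hourly and per-test pricing cost the same.
function breakEvenTestsPerHour(costPerHour: number, costPerTest: number): number {
  return costPerHour / costPerTest;
}

// Average wall-clock seconds available per test at the break-even rate.
function secondsPerTestAtBreakEven(costPerHour: number, costPerTest: number): number {
  return 3600 / breakEvenTestsPerHour(costPerHour, costPerTest);
}

const testsPerHour = breakEvenTestsPerHour(GPU_COST_PER_HOUR, GPT4O_COST_PER_TEST);
const secondsPerTest = secondsPerTestAtBreakEven(GPU_COST_PER_HOUR, GPT4O_COST_PER_TEST);
console.log(`break-even: ${testsPerHour.toFixed(0)} tests/hour, ${secondsPerTest.toFixed(1)} s/test`);
```

With these figures the break-even is about 257 tests per GPU-hour, or one test every 14 seconds. Below that throughput the per-test GPT-4o price is lower, and above it the hourly GPU is lower.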

4.2 Multiple-Step decisions and reasoning

Let's look at an actual step from the example above: "Search 'Chanel', and press the Enter".

4.2.1 Midscene sends a system message to UI-Tars

Midscene sends the test step as part of the System Message to the LLM, together with the current screenshot.

4.2.2 UI-Tars returns the Thought and Action

This user step requires multiple browser actions: locating the search bar, clicking it, typing 'Chanel', and finally pressing Enter. UI-Tars therefore makes its first decision: click the search bar.

4.2.3 UI-Tars reasons and plans the next browser actions for the same user step iteratively

Midscene currently takes a screenshot before each reasoning round, so UI-Tars always knows the latest state of the browser. In addition, Midscene currently sends the full chat history back to UI-Tars on every reasoning round.

4.2.4 UI-Tars autonomously checks whether the user step is achieved
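Taken together, steps 4.2.1 to 4.2.4 form a loop: take a screenshot, ask the model for a thought and an action, execute the action, and repeat until the model reports that the step is done. The sketch below shows that loop in a simplified form. It is not Midscene's actual implementation: `GuiModel`, `BrowserDriver`, `Action`, `runUserStep`, and the `maxRounds` safety limit are all hypothetical names and assumptions introduced for illustration.

```typescript
// One browser action proposed by the model. "finished" means the model
// considers the user step achieved (4.2.4).
type Action =
  | { type: "click"; target: string }
  | { type: "type"; text: string }
  | { type: "press"; key: string }
  | { type: "finished" };

interface Prediction { thought: string; action: Action; }
interface ChatTurn { screenshot: string; prediction: Prediction; }

// Hypothetical stand-ins for the model endpoint and the browser.
interface GuiModel {
  predict(instruction: string, screenshot: string, history: ChatTurn[]): Promise<Prediction>;
}
interface BrowserDriver {
  screenshot(): Promise<string>;
  perform(action: Action): Promise<void>;
}

// Run one user step until the model reports "finished" or maxRounds is hit.
async function runUserStep(
  instruction: string,
  model: GuiModel,
  browser: BrowserDriver,
  maxRounds = 10, // assumed safety limit, not taken from the article
): Promise<ChatTurn[]> {
  const history: ChatTurn[] = [];
  for (let round = 0; round < maxRounds; round++) {
    // 4.2.3: a fresh screenshot before every reasoning round.
    const screenshot = await browser.screenshot();
    // 4.2.1 / 4.2.2: send the step, the screenshot, and the chat history;
    // receive a thought and a single action.
    const prediction = await model.predict(instruction, screenshot, [...history]);
    history.push({ screenshot, prediction });
    // 4.2.4: stop as soon as the model decides the step is achieved.
    if (prediction.action.type === "finished") {
      return history;
    }
    await browser.perform(prediction.action);
  }
  throw new Error(`Step not finished after ${maxRounds} rounds: ${instruction}`);
}
```

For the 'Chanel' step, a run of this loop would contain four rounds: click the search bar, type the query, press Enter, and a final round in which the model returns "finished".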

5. Summary

With its multi-step decision-making and autonomous reasoning, the combination of UI-TARS and Midscene can already partially replace manual testing, as well as manual test scripting and execution, against common e-commerce websites.

To help UI-TARS better understand your product, we need to build our own RAG on top of Midscene, passing the extracted documents to UI-TARS as references.

We can introduce different AI agents to analyze in parallel, but that doesn't mean we need a large number of AI agents.

The biggest bottleneck is speed: compared to a plain Playwright test, it is relatively slow. On an L40S instance (1 GPU, 48GB GPU memory), a single LLM query takes 2–3 seconds with UI-Tars:7B. Using a stronger LLM, such as UI-Tars-72B, requires a somewhat higher investment.

(UI-Tars:7B - GPU usage when executing the example test)

(UI-Tars:72B - GPU usage when executing the example test)

6. Code

7. References

    @software{Midscene.js,
      author = {Zhou, Xiao and Yu, Tao},
      title = {Midscene.js: Let AI be your browser operator.},
      year = {2025},
      publisher = {GitHub},
      url = {https://github.com/web-infra-dev/midscene}
    }

    @article{qin2025ui,
      title={UI-TARS: Pioneering Automated GUI Interaction with Native Agents},
      author={Qin, Yujia and Ye, Yining and Fang, Junjie and Wang, Haoming and Liang, Shihao and Tian, Shizuo and Zhang, Junda and Li, Jiahao and Li, Yunxin and Huang, Shijue and others},
      journal={arXiv preprint arXiv:2501.12326},
      year={2025}
    }
