Part 10: How Google Uses Edge Computing to Push Data Closer to You

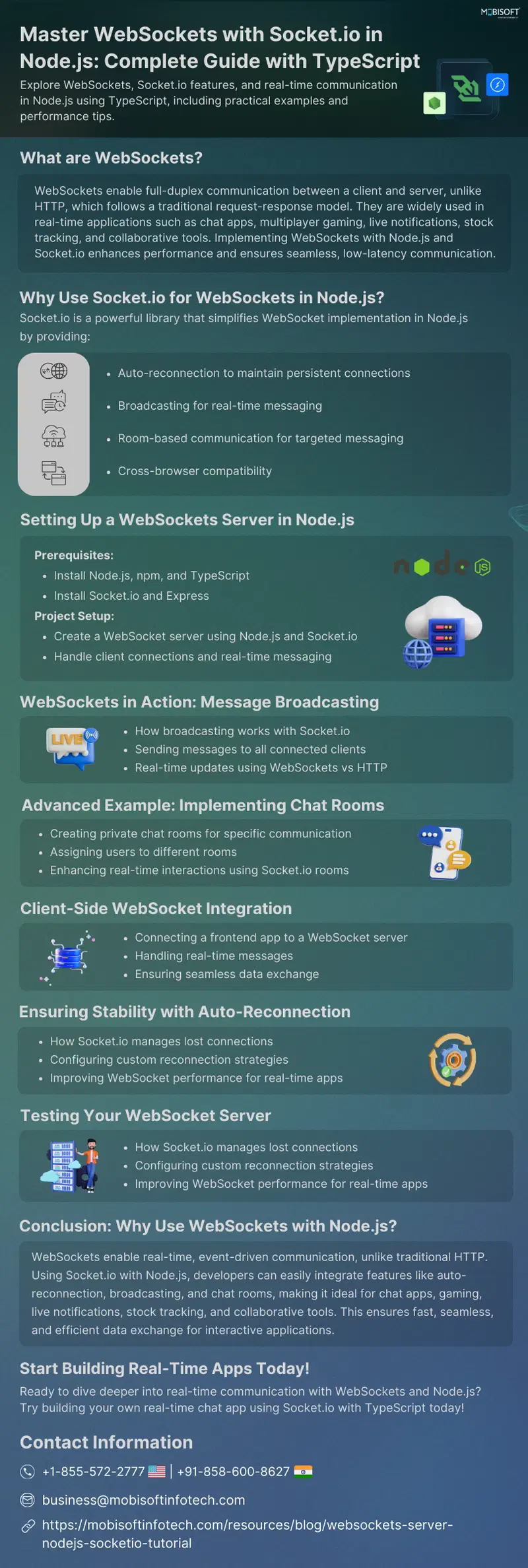


Every time a user runs a search, watches a video, or opens Gmail, the data arrives in milliseconds.

How?

Through Edge Computing.

Google has built one of the most sophisticated edge infrastructures in the world. It keeps data physically closer to users, minimizing latency and reducing the load on central servers.

Let’s unpack how this system works — and how it powers speed, scalability, and real-time responsiveness across Google services.

Real-Life Analogy: Local Kirana vs Warehouse Shopping

- Imagine ordering biscuits from your local Kirana store (a neighborhood grocery shop) versus from a warehouse 400 km away.

- Local store (Edge) gives instant access.

- Warehouse (Central server) adds delay, cost, and traffic.

Google uses thousands of edge locations as your digital "Kirana stores" — pre-caching data, managing requests, and reducing round trips.

What is Edge Computing?

Edge computing means:

- Processing and serving data from the nearest network edge node (not the central data center)

- Bringing computation, caching, and logic closer to the user

This reduces:

- Latency

- Bandwidth cost

- Backend overload
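To put rough numbers on the latency point: light in optical fiber covers roughly 200 km per millisecond (about two-thirds of its speed in vacuum), so distance alone sets a floor on round-trip time. The sketch below assumes straight-line fiber paths and ignores routing detours, queuing, and server processing; the node names and distances are hypothetical. Under those assumptions the 400 km warehouse from the analogy costs about 4 ms per round trip, while a data center 8,000 km away costs about 80 ms.

```python
# Rough propagation-delay model (assumption): light in optical fiber travels
# at roughly 2/3 the speed of light in vacuum, about 200 km per millisecond.
FIBER_KM_PER_MS = 200.0


def fiber_rtt_ms(distance_km: float) -> float:
    """Round-trip propagation delay over fiber.

    Ignores routing detours, queuing, and server processing time.
    """
    return 2 * distance_km / FIBER_KM_PER_MS


def nearest_node(nodes: dict[str, float]) -> str:
    """Pick the node with the smallest distance (km) to the user.

    `nodes` maps a hypothetical node name to its distance from the user.
    """
    return min(nodes, key=nodes.get)


if __name__ == "__main__":
    # Hypothetical distances from one user to candidate servers.
    nodes = {
        "edge-local-pop": 40.0,
        "edge-regional-pop": 400.0,
        "central-dc": 8000.0,
    }
    best = nearest_node(nodes)
    print(best, fiber_rtt_ms(nodes[best]))                  # edge-local-pop 0.4
    print("central-dc", fiber_rtt_ms(nodes["central-dc"]))  # central-dc 80.0
```

A single page load or video start usually needs several round trips (DNS lookup, TCP and TLS handshakes, then the request itself), so this per-round-trip gap is multiplied, which is why shortening the physical distance matters so much.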

Google’s Edge Architecture

| Component | Description |
|---|---|
| Google Global Cache | Content delivery platform for static & streaming content |
| Edge PoPs | Points of Presence across ISPs & countries |
| Cloud CDN | Google’s developer-facing edge delivery platform |
| Compute at Edge | Functions & microservices run directly at edge nodes |

Google has more than 150 edge locations in more than 90 countries.
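The "Compute at Edge" idea can be illustrated with a small handler that makes cheap decisions at the PoP and forwards everything else to the central origin. This is a hypothetical sketch: the `Request` type, the `handle_at_edge` function, the country-based redirect rule, and the `forward` callback are illustrative assumptions, not a Google API or a description of how Google's edge functions actually work.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Request:
    path: str
    country: str  # hypothetical: country code the edge derived from the client IP


def handle_at_edge(req: Request, forward: Callable[[Request], str]) -> str:
    """Hypothetical edge function: decide locally when possible,
    otherwise forward the request to the central origin."""
    if req.path == "/":
        # Cheap, stateless decision made at the PoP: send the visitor to a
        # country-specific home page with no round trip to the origin.
        return f"302 -> /{req.country.lower()}/"
    # Anything that needs central state (accounts, search index, ...) is forwarded.
    return forward(req)


if __name__ == "__main__":
    def origin(req: Request) -> str:
        # Stand-in for the central data center.
        return f"200 from origin for {req.path}"

    print(handle_at_edge(Request("/", "IN"), origin))         # 302 -> /in/
    print(handle_at_edge(Request("/account", "IN"), origin))  # 200 from origin for /account
```

The design point is the split: logic that needs only the request itself can run at the edge, while anything depending on shared central state still travels to the origin.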

Edge in Action – YouTube Example

When you open a YouTube video:

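- Your device sends a DNS query to its configured resolver (for example, Google Public DNS at 8.8.8.8)

- Google's DNS answers with the IP address of a nearby edge node

- If the video is already cached at that edge node, it is served directly from there

- If not, the edge node fetches it from a central data center (DC) and caches it for later viewers

Result: the video can start in under 200 ms, with minimal buffering.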
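The two halves of this flow, DNS steering a client to a nearby edge node and that node either serving from cache or filling from the origin on a miss, can be sketched as below. It is a simplified model under stated assumptions: the region-to-IP table in `resolve_edge` is hypothetical (the IPs come from the 203.0.113.0/24 documentation range), and `EdgeCache` uses plain LRU (least recently used) eviction as a stand-in; it does not attempt to reproduce Google's actual cache placement or eviction logic.

```python
from collections import OrderedDict
from typing import Callable

# Hypothetical DNS steering table: client region -> IP of a nearby edge node.
# Addresses are from the 203.0.113.0/24 documentation range, not real Google IPs.
EDGE_BY_REGION = {"IN-south": "203.0.113.10", "IN-north": "203.0.113.20"}
DEFAULT_EDGE = "203.0.113.99"


def resolve_edge(client_region: str) -> str:
    """DNS step: answer with the edge node assumed closest to the client."""
    return EDGE_BY_REGION.get(client_region, DEFAULT_EDGE)


class EdgeCache:
    """Cache steps: a read-through LRU cache standing in for one edge node."""

    def __init__(self, capacity: int, origin_fetch: Callable[[str], bytes]) -> None:
        self.capacity = capacity          # counted in items, not bytes
        self.origin_fetch = origin_fetch  # call to the central data center
        self.items: "OrderedDict[str, bytes]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, video_id: str) -> bytes:
        if video_id in self.items:
            # Cache hit: serve from the edge and mark as most recently used.
            self.items.move_to_end(video_id)
            self.hits += 1
            return self.items[video_id]
        # Cache miss: fetch from the origin, keep a copy for the next viewer.
        self.misses += 1
        data = self.origin_fetch(video_id)
        self.items[video_id] = data
        if len(self.items) > self.capacity:
            self.items.popitem(last=False)  # evict the least recently used entry
        return data


if __name__ == "__main__":
    def fake_origin(video_id: str) -> bytes:
        # Stand-in for a slow fetch from a central data center.
        return f"<video bytes for {video_id}>".encode()

    print(resolve_edge("IN-south"))  # 203.0.113.10
    edge = EdgeCache(capacity=2, origin_fetch=fake_origin)
    edge.get("abc")  # miss: fetched from origin, then cached
    edge.get("abc")  # hit: served from the edge
    print(edge.hits, edge.misses)  # 1 1
```

For simplicity the capacity here counts videos; a real edge cache would budget by bytes and would typically cache video in segments rather than whole files.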

Core Advantages

| Benefit | Traditional Servers | Edge Computing (Google) |
|---|---|---|
| Latency | High (geo-distance) | Low (local PoP) |
| Scalability | Central scaling only | Distributed scaling |
| Availability | Region-specific downtime | Multi-region failover |
| Cost Efficiency | High inter-DC traffic | Reduced backbone traffic |
| Personalization | Slower (cookie fetch delays) | Faster local decision making |
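The Latency and Cost Efficiency rows both hinge on the edge cache hit ratio h. A hit is served at edge latency; a miss additionally pays the edge-to-origin trip, and only misses consume backbone bandwidth. The sketch below encodes that simple model: average latency = edge_ms + (1 - h) × origin_ms, and backbone traffic = (1 - h) × total traffic. The 90% hit ratio and the 10 ms and 80 ms figures are hypothetical inputs, not Google measurements.

```python
def expected_latency_ms(hit_ratio: float, edge_ms: float, origin_ms: float) -> float:
    """Average response latency under a simple hit/miss model.

    Hits cost only the user-to-edge latency; misses also pay the
    edge-to-origin latency before the edge can respond.
    """
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return edge_ms + (1.0 - hit_ratio) * origin_ms


def backbone_traffic(total: float, hit_ratio: float) -> float:
    """Traffic that still crosses the backbone to the origin (same unit as total)."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return (1.0 - hit_ratio) * total


if __name__ == "__main__":
    # Hypothetical inputs: 90% hit ratio, 10 ms to the edge, 80 ms extra to the origin.
    h = 0.9
    print(f"with edge cache:   {expected_latency_ms(h, 10, 80):.1f} ms")    # 18.0 ms
    print(f"no hits (h = 0):   {expected_latency_ms(0.0, 10, 80):.1f} ms")  # 90.0 ms
    print(f"backbone traffic:  {backbone_traffic(1000, h):.1f} GB of 1000 GB")  # 100.0 GB
```

Even this crude model shows why hit ratio is the key lever: each extra point of hit ratio removes both a slice of origin latency and a slice of backbone load.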
