Overcoming Challenges in Selenium Scraping with Proxies

Every day, billions of people interact with websites—leaving behind a wealth of valuable data. If you're in the business of collecting and analyzing this information, you're already aware of the challenges posed by modern websites. With JavaScript-rendered pages, static scrapers just don’t cut it. Enter Selenium scraping—a tool that mimics human behavior and interacts with websites just like a real user would.

Selenium scraping is a game-changer. In this guide, I’m diving into why it’s the go-to solution for scraping dynamic, JavaScript-heavy websites. I’ll also show you how to optimize your scraping efforts with proxies, tackle common challenges, and reveal pro tips for success.

Why Selenium Scraping

Selenium isn't your run-of-the-mill scraper. Traditional methods often fall short on JavaScript-heavy websites: tools like BeautifulSoup or Scrapy parse the HTML the server returns, so they miss content that JavaScript renders afterward, and that content is often crucial to your data needs. That’s where Selenium shines. It drives a real browser and interacts with the page the way a human user would, triggering actions like clicks, scrolling, and form submissions. This dynamic interaction makes it a strong choice for scraping complex sites.

How Does Selenium Work

Selenium works by automating browser interactions through WebDrivers. It’s like controlling a browser remotely, letting you perform actions such as:

- Launching a WebDriver – Open a browser (Chrome, Firefox).

- Navigating the Page – Load the target website.

- Interacting with Elements – Click buttons, fill out forms, scroll.

- Extracting Data – Pull text, images, tables once visible.

- Handling Dynamic Content – Wait for JavaScript to load before scraping.

With this level of interaction, Selenium can collect data from pages that won’t even load for basic scrapers. Let’s dive deeper into the advantages of using Selenium.

Major Benefits of Selenium Scraping

- Optimized for JavaScript Pages – Most modern websites use JavaScript to load content. If you're relying on traditional scrapers, you're probably missing out on vital data. Selenium waits for the content to load, clicks to reveal hidden data, and even handles AJAX calls to get the job done.

- Emulates Real User Interaction – To avoid being blocked, Selenium mimics human-like actions. It clicks buttons, scrolls through pages, and can even handle CAPTCHAs with third-party services. This reduces the risk of detection.

- Handles Authentication and Forms – Websites that require login or form submission? No problem. Selenium can log you in, save cookies, and automate form submissions, allowing you to collect data from authenticated sections of the site.
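
To make the login-and-cookies idea concrete, here is a minimal sketch rather than a definitive implementation. The field names `username` and `password`, the submit-button selector, and the helper names are all assumptions for illustration; inspect the form on your target site and adjust them. Each helper expects a `driver` you have already launched.

```python
import json


def log_in(driver, login_url, username, password):
    """Open the login page, fill in the form, and submit it."""
    driver.get(login_url)
    # Hypothetical locators: check the real form's field names first.
    driver.find_element("name", "username").send_keys(username)
    driver.find_element("name", "password").send_keys(password)
    driver.find_element("css selector", "button[type='submit']").click()


def save_cookies(driver, path):
    """Write the browser's current cookies to a JSON file."""
    with open(path, "w", encoding="utf-8") as handle:
        json.dump(driver.get_cookies(), handle)


def load_cookies(driver, path):
    """Add previously saved cookies to the browser; return how many were added."""
    with open(path, encoding="utf-8") as handle:
        cookies = json.load(handle)
    for cookie in cookies:
        driver.add_cookie(cookie)
    return len(cookies)
```

A browser only accepts cookies for the domain it is currently on, so open a page on the site with `driver.get(...)` before calling `load_cookies`, then reload to pick up the session.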

How to Tackle the Challenges of Selenium Scraping

While Selenium is powerful, it’s not immune to challenges. Websites are getting smarter at detecting bots. But don’t worry—there are ways to stay ahead of the game.

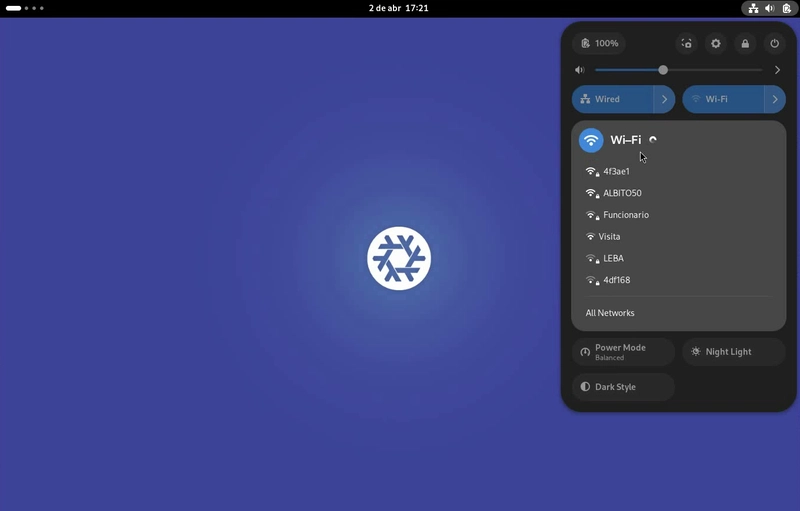

IP Blocking & Rate Limiting

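Websites often block or slow down IPs that make too many requests. The solution? Use rotating residential proxies. This keeps your scraping operation under the radar by spreading your requests across many IP addresses. Keep in mind that a Selenium browser session normally keeps the proxy it was launched with, so the IP changes with every request only if your provider's gateway rotates it for you; otherwise, rotate by starting a new session. Add small delays between requests, and distribute the traffic across multiple proxies to mimic natural behavior.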

Pro Tip: When scraping high-traffic sites like Amazon or eBay, keep your request frequency low and rotate proxies often.
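
Here is one way the rotation and delays could look in practice, as a sketch under stated assumptions. The proxy addresses are placeholders from a documentation-only IP range, and the helper names are mine; swap in the endpoints your provider gives you. It launches a fresh Chrome session per URL using Chrome's `--proxy-server` flag.

```python
import itertools
import random
import time

# Hypothetical proxy endpoints; replace with the ones from your provider.
PROXIES = [
    "http://203.0.113.10:8000",
    "http://203.0.113.11:8000",
    "http://203.0.113.12:8000",
]


def proxy_argument(proxy_url):
    """Build the Chrome flag that routes traffic through proxy_url."""
    return f"--proxy-server={proxy_url}"


def polite_delay(min_seconds=2.0, max_seconds=5.0):
    """Pick a random pause length so requests are not evenly spaced."""
    return random.uniform(min_seconds, max_seconds)


def scrape_titles(urls, proxies=PROXIES):
    """Visit each URL through the next proxy in the pool; return page titles."""
    # Imported here so the helpers above work without a browser set up.
    from selenium import webdriver

    titles = {}
    proxy_pool = itertools.cycle(proxies)
    for url in urls:
        options = webdriver.ChromeOptions()
        options.add_argument(proxy_argument(next(proxy_pool)))
        driver = webdriver.Chrome(options=options)
        try:
            driver.get(url)
            titles[url] = driver.title
        finally:
            driver.quit()  # always release the browser, even on errors
        time.sleep(polite_delay())
    return titles
```

Starting a new browser per URL is slow, so for larger jobs you may prefer to keep one session per proxy and visit several pages before rotating.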

CAPTCHA Challenges

CAPTCHAs stop bots in their tracks. Solve them using CAPTCHA-solving services like 2Captcha or Anti-Captcha. If you're triggering CAPTCHAs often, slow down your interactions, and avoid using headless browsing, which some sites flag.

Pro Tip: Sites track mouse movements. Use Selenium’s ActionChains to simulate them, making it harder for sites to detect automated activity.

from selenium.webdriver.common.action_chains import ActionChains

actions = ActionChains(driver)

actions.move_by_offset(100, 200).click().perform()
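
A single jump to a fixed offset still looks mechanical. As a rough sketch (my own illustration, not a guaranteed way past detection), you can split the move into small, slightly wobbly steps with short pauses. The helper names, step count, and jitter size are assumptions, and the target is assumed to sit well inside the viewport, since `move_by_offset` fails if the pointer leaves it.

```python
import random


def jittered_steps(dx, dy, steps=10, jitter=3):
    """Split one (dx, dy) move into small offsets with random wobble."""
    points = []
    for i in range(1, steps):
        x = round(dx * i / steps) + random.randint(-jitter, jitter)
        y = round(dy * i / steps) + random.randint(-jitter, jitter)
        points.append((x, y))
    points.append((dx, dy))  # land exactly on the target

    # Convert absolute points into relative offsets for move_by_offset.
    offsets = []
    prev_x, prev_y = 0, 0
    for x, y in points:
        offsets.append((x - prev_x, y - prev_y))
        prev_x, prev_y = x, y
    return offsets


def human_like_click(driver, dx, dy):
    """Move the pointer in small steps with short pauses, then click."""
    from selenium.webdriver.common.action_chains import ActionChains

    actions = ActionChains(driver)
    for step_x, step_y in jittered_steps(dx, dy):
        actions.move_by_offset(step_x, step_y)
        actions.pause(random.uniform(0.01, 0.05))
    actions.click().perform()
```

Calling `human_like_click(driver, 100, 200)` ends at the same spot as the one-line version above, just by a less direct route.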

Browser Fingerprinting

Websites track details like user-agent, screen resolution, and installed fonts to identify bots. To mask this, use anti-detect tools like Stealthfox or rotate your browser fingerprints.

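Pro Tip: Change your user-agent frequently and disable WebDriver flags to avoid detection. The snippet below hides navigator.webdriver only on the page that is currently loaded, so re-run it after each navigation.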

driver.execute_script("Object.defineProperty(navigator, 'webdriver', {get: () => undefined})")
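
If you're on Chrome, a sketch like the following sets the user-agent at launch and registers the same override so it runs before each new page's own scripts. Treat it as one possible approach, not a complete anti-detection setup. The user-agent strings are example placeholders you should keep current, and the helper names are assumptions of mine.

```python
import random

# Example user-agent strings; placeholders, keep your own list up to date.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
]

HIDE_WEBDRIVER_JS = (
    "Object.defineProperty(navigator, 'webdriver', {get: () => undefined})"
)


def pick_user_agent():
    """Choose one user-agent at random for this session."""
    return random.choice(USER_AGENTS)


def stealth_arguments(user_agent):
    """Chrome flags that set the user-agent and drop the automation hint."""
    return [
        f"--user-agent={user_agent}",
        "--disable-blink-features=AutomationControlled",
    ]


def launch_stealth_chrome():
    """Start Chrome with the flags above and the navigator.webdriver override."""
    from selenium import webdriver

    options = webdriver.ChromeOptions()
    for argument in stealth_arguments(pick_user_agent()):
        options.add_argument(argument)
    driver = webdriver.Chrome(options=options)
    # Ask Chrome (via DevTools) to run the override on every new document.
    driver.execute_cdp_cmd(
        "Page.addScriptToEvaluateOnNewDocument",
        {"source": HIDE_WEBDRIVER_JS},
    )
    return driver
```

`execute_cdp_cmd` is available on Chromium-based drivers only, so this sketch won't carry over to Firefox as written.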

Dynamic Content (AJAX & Infinite Scrolling)

Many sites load content dynamically, and traditional scrapers won’t capture everything unless you manually trigger these loading events. Use Selenium’s scroll functionality to load new content, and make sure to wait for AJAX requests to finish before scraping.

Pro Tip: For infinite scroll sites like Twitter or Instagram, repeatedly scroll to the bottom using this code:

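import time

# Runs until you interrupt it; add a break once the page height stops growing.
while True:
    driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")
    time.sleep(2)  # give newly requested content time to load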
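
In a real scraper you'll want that loop to end. A common pattern, sketched here with a helper name and default values of my own choosing, is to compare the page height before and after each scroll and stop when it no longer grows, with a cap on the number of rounds as a safety net.

```python
import time


def scroll_to_end(driver, pause_seconds=2.0, max_rounds=50):
    """Scroll until the page height stops growing; return the scroll count."""
    last_height = driver.execute_script("return document.body.scrollHeight")
    scrolls = 0
    while scrolls < max_rounds:
        driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")
        scrolls += 1
        time.sleep(pause_seconds)  # let the next batch of content load
        new_height = driver.execute_script("return document.body.scrollHeight")
        if new_height == last_height:
            break  # nothing new appeared, so we reached the end
        last_height = new_height
    return scrolls
```

A fixed pause is a blunt instrument: if the site loads slowly, the height check can give up too early, so raise `pause_seconds` when results look truncated.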

Step-by-Step Guide to Setting Up Selenium Scraping

Ready to dive in? Here’s how to set up Selenium scraping.

Install Selenium

Install Selenium using pip:

pip install selenium

Download WebDriver

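Choose the WebDriver for your browser (ChromeDriver for Chrome, GeckoDriver for Firefox). Recent Selenium releases (4.6 and later) include Selenium Manager, which fetches a matching driver automatically, so a manual download is only needed on older versions or when the automatic setup fails.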

Launch a Browser with Selenium

Example for Chrome:

from selenium import webdriver

driver = webdriver.Chrome()

driver.get("https://example.com")

print(driver.title)

driver.quit()

Extract Data

Extract data from elements:

element = driver.find_element("xpath", "//h1")

print(element.text)
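
Most scraping jobs need more than one element. As a small sketch (the helper name and the choice of links as the target are just for illustration), `find_elements` returns every match, and `get_attribute` reads values that aren't part of the visible text:

```python
def extract_links(driver):
    """Collect the visible text and URL of every link on the current page."""
    links = []
    for anchor in driver.find_elements("css selector", "a[href]"):
        links.append(
            {
                "text": anchor.text.strip(),
                "href": anchor.get_attribute("href"),
            }
        )
    return links
```

Call it while the browser is still open, for example right after `driver.get(...)` and before `driver.quit()`.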

Handle Dynamic Content

Use WebDriverWait to ensure elements load before scraping:

from selenium.webdriver.common.by import By

from selenium.webdriver.support.ui import WebDriverWait

from selenium.webdriver.support import expected_conditions as EC

wait = WebDriverWait(driver, 10)

element = wait.until(EC.presence_of_element_located((By.XPATH, "//div[@id='content']")))

print(element.text)

Final Thoughts

Selenium scraping provides the ability to collect dynamic content from complex websites. However, success depends on understanding the challenges and knowing how to overcome them. With this guide and the right proxies, you can enhance your scraping capabilities.
