Microsoft Copilot: First 90 Days - A Developer's Perspective

Technical teams across enterprises are discovering that Copilot implementation involves more than feature enablement. The first three months of adoption have surfaced technical patterns and implementation lessons that challenge our assumptions about AI integration in development workflows.

Technical Integration Realities

The initial assumption that Copilot would seamlessly integrate into existing development workflows proved both right and wrong. Development teams discovered that while the technical integration was straightforward, the impact on development practices was profound.

API Integration Patterns

Early implementation data revealed several patterns in API usage:

- REST API calls needed optimization for AI-assisted operations

- Graph API integration required rethinking for Copilot scenarios

- Custom development workflows needed adaptation

- Authentication patterns evolved for AI-assisted processes
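
The article does not say which REST optimizations were needed. One common adjustment as AI-assisted traffic grows is handling throttling: Microsoft Graph, for example, responds with HTTP 429 and a `Retry-After` header when a client is throttled. The sketch below assumes a caller-supplied `request` function that raises a hypothetical `ThrottledError` carrying the parsed header value. No HTTP library is involved, and `call_with_retry` and `backoff_delay` are illustrative names, not part of any Microsoft SDK.

```python
import random
import time
from typing import Callable, Optional


class ThrottledError(Exception):
    """Hypothetical error the caller's request function raises on an HTTP 429 response."""

    def __init__(self, retry_after: Optional[float] = None):
        super().__init__("request throttled")
        self.retry_after = retry_after  # parsed Retry-After value in seconds, if the server sent one


def backoff_delay(attempt: int, retry_after: Optional[float],
                  base: float = 0.5, cap: float = 30.0) -> float:
    """Honor the server's Retry-After hint; otherwise use capped exponential backoff with full jitter."""
    if retry_after is not None:
        return retry_after
    return random.uniform(0, min(cap, base * (2 ** attempt)))


def call_with_retry(request: Callable[[], dict], max_attempts: int = 5,
                    sleep: Callable[[float], None] = time.sleep) -> dict:
    """Call `request`, retrying throttled attempts; re-raise after the last attempt."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return request()
        except ThrottledError as err:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the throttling to the caller
            sleep(backoff_delay(attempt, err.retry_after))
    raise AssertionError("unreachable")
```

Injecting `sleep` keeps the helper testable without real waiting, and honoring `Retry-After` before falling back to jittered backoff avoids retrying sooner than the service asked.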

Development Workflow Impact

Traditional development practices faced unexpected challenges:

- Code review processes needed revision for AI-suggested code

- Documentation practices evolved to include prompt engineering

- Testing strategies expanded to cover AI-generated content

- Version control adapted to track AI-assisted changes
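
Tracking AI-assisted changes in version control can be as simple as a commit-message trailer. The sketch assumes a hypothetical team convention, an `AI-Assisted: true` trailer, which is not a Git or Copilot standard, and uses a simplified parser that only reads `Key: value` lines in the final paragraph of a message. Git's own `git interpret-trailers --parse` handles more edge cases and is the better choice in scripts that can shell out to Git.

```python
from typing import Dict, List

TRAILER_KEY = "AI-Assisted"  # hypothetical team convention, not a Git or Copilot standard


def parse_trailers(message: str) -> Dict[str, str]:
    """Return `Key: value` pairs from the final paragraph of a commit message (simplified)."""
    paragraphs = [p for p in message.strip().split("\n\n") if p.strip()]
    if len(paragraphs) < 2:
        return {}  # a subject line alone carries no trailers
    trailers: Dict[str, str] = {}
    for line in paragraphs[-1].splitlines():
        key, sep, value = line.partition(":")
        key = key.strip()
        if not sep or not key or " " in key:
            return {}  # the last paragraph is ordinary body text, not a trailer block
        trailers[key] = value.strip()
    return trailers


def is_ai_assisted(message: str) -> bool:
    return parse_trailers(message).get(TRAILER_KEY, "").lower() == "true"


def ai_assisted_share(messages: List[str]) -> float:
    """Fraction of commits flagged as AI-assisted (0.0 for an empty list)."""
    if not messages:
        return 0.0
    return sum(is_ai_assisted(m) for m in messages) / len(messages)
```

A share computed this way can feed the review process, for example by flagging branches where most commits are AI-assisted for an extra reviewer.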

Performance Considerations

Technical teams identified critical performance patterns:

- API response times varied based on context complexity

- Resource utilization showed unexpected peaks

- Caching strategies needed optimization

- Service dependencies required careful management
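
The article does not say which caching changes were made. As one illustration, repeated AI requests with the same context can be served from a short-lived cache, which also trims latency when response times grow with context size. `TTLCache` and `cached_call` are hypothetical names, and the sketch assumes responses are safe to reuse for the chosen TTL.

```python
import hashlib
import time
from typing import Callable, Dict, Optional, Tuple


class TTLCache:
    """Keeps responses for `ttl` seconds, keyed by a hash of the normalized request text."""

    def __init__(self, ttl: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl
        self.clock = clock  # injectable so expiry can be tested without waiting
        self._store: Dict[str, Tuple[float, str]] = {}

    @staticmethod
    def key(text: str) -> str:
        # Collapse runs of whitespace so trivially different requests share an entry.
        normalized = " ".join(text.split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def get(self, text: str) -> Optional[str]:
        k = self.key(text)
        entry = self._store.get(k)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:
            del self._store[k]  # evict stale entries lazily, on read
            return None
        return value

    def put(self, text: str, value: str) -> None:
        self._store[self.key(text)] = (self.clock() + self.ttl, value)


def cached_call(cache: TTLCache, text: str, compute: Callable[[str], str]) -> str:
    """Return a cached response while fresh; otherwise compute and store it."""
    hit = cache.get(text)
    if hit is not None:
        return hit
    result = compute(text)
    cache.put(text, result)
    return result
```

In a multi-user deployment the cache key should also include the user or their permission scope; otherwise one person's permission-filtered answer could be served to someone else.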

Security Implementation Lessons

Security implementation revealed several key insights:

- Traditional security boundaries needed redefinition

- Permission models evolved for AI operations

- Data access patterns required new monitoring approaches

- Authentication flows adapted to AI-assisted scenarios
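
One way to read "permission models evolved for AI operations" is that retrieval for AI features has to enforce the same access rules as the rest of the system. The sketch below filters candidate documents against a simple group-based ACL before assembling a prompt. The `Document` and `User` types and the group model are hypothetical stand-ins, not Microsoft 365's permission model.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_groups: FrozenSet[str]  # hypothetical ACL: groups permitted to read this document


@dataclass(frozen=True)
class User:
    user_id: str
    groups: FrozenSet[str]


def readable_by(user: User, doc: Document) -> bool:
    # A user may read a document if they share at least one group with its ACL.
    return bool(user.groups & doc.allowed_groups)


def build_context(user: User, candidates: List[Document], max_chars: int = 2000) -> str:
    """Assemble AI context only from documents the requesting user can already read."""
    parts: List[str] = []
    used = 0  # budget counts document text only, not the separators
    for doc in candidates:
        if not readable_by(user, doc):
            continue  # enforce permissions before the model ever sees the text
        if used + len(doc.text) > max_chars:
            break
        parts.append(doc.text)
        used += len(doc.text)
    return "\n---\n".join(parts)
```

The key design choice is where the check happens: filtering before the prompt is built means the model never receives text the user could not open themselves.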

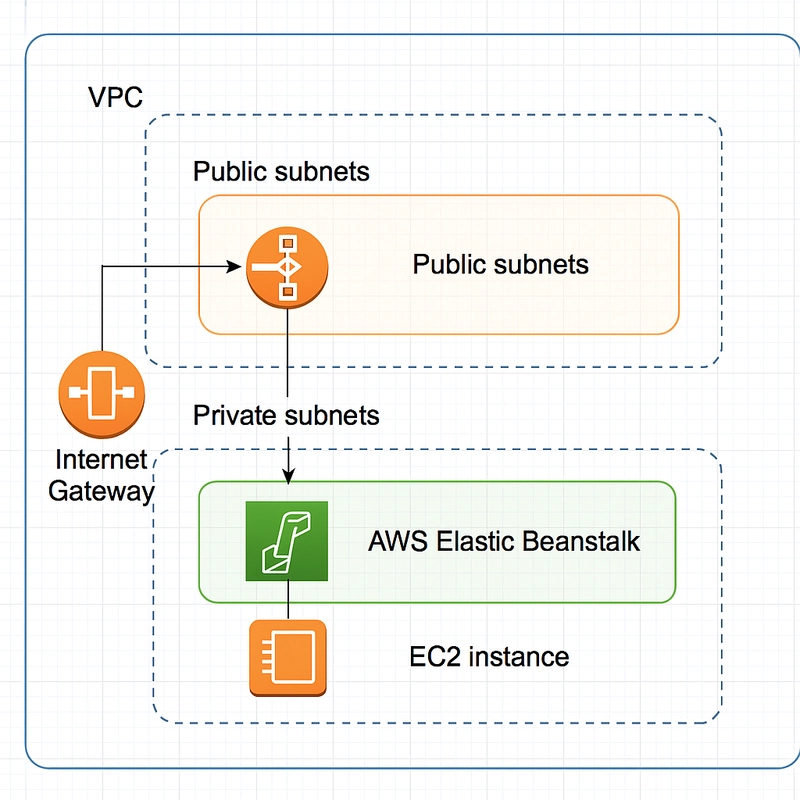

Integration Architecture

The technical architecture evolved to accommodate:

- New service dependencies

- Modified data flows

- Enhanced monitoring requirements

- Adapted security boundaries

Monitoring and Telemetry

Implementation teams developed new monitoring approaches:

- AI-assisted operation tracking

- Usage pattern analysis

- Performance impact monitoring

- Error tracking for AI scenarios
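
The list above names the monitoring categories but not the mechanics. A minimal approach is to wrap each AI-assisted call so it emits a structured record with the operation name, outcome, and duration, which can then feed usage-pattern, performance, and error dashboards. The sketch assumes a caller-supplied `emit` function (a logger's `info` method would do); `track_ai_operation` and the field names are hypothetical.

```python
import json
import time
from contextlib import contextmanager
from typing import Callable, Dict, Iterator


@contextmanager
def track_ai_operation(name: str, emit: Callable[[str], None],
                       clock: Callable[[], float] = time.perf_counter) -> Iterator[Dict]:
    """Record the duration and outcome of one AI-assisted operation as a JSON line."""
    record: Dict = {"operation": name, "ai_assisted": True}
    start = clock()
    try:
        yield record  # callers may attach extra fields, e.g. prompt size
        record["outcome"] = "success"
    except Exception as exc:
        record["outcome"] = "error"
        record["error_type"] = type(exc).__name__
        raise  # telemetry must not swallow the original error
    finally:
        record["duration_ms"] = round((clock() - start) * 1000, 1)
        emit(json.dumps(record, sort_keys=True))
```

Emitting from `finally` guarantees a record for both successful and failed operations, so error rates and latency come from the same stream.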

Error Handling Patterns

Error handling evolved to address:

- AI-specific error scenarios

- Graceful degradation patterns

- Recovery strategies

- User feedback loops
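
Graceful degradation and recovery can be combined in a circuit breaker: after repeated AI failures, the feature falls back to a non-AI path for a cooldown period, then gives the AI service another chance. This is one illustration of the pattern, not a description of how Copilot itself behaves; `CircuitBreaker`, `suggest`, and the `degraded` flag are hypothetical names.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Result:
    text: str
    degraded: bool  # True when the non-AI fallback produced the result


class CircuitBreaker:
    """Skips the AI path for `cooldown` seconds after `threshold` consecutive failures."""

    def __init__(self, threshold: int = 3, cooldown: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self.threshold = threshold
        self.cooldown = cooldown
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def allow(self) -> bool:
        if self.opened_at is None:
            return True
        if self.clock() - self.opened_at >= self.cooldown:
            # Cooldown elapsed: close the breaker and let the AI path be tried again.
            self.opened_at = None
            self.failures = 0
            return True
        return False

    def record(self, ok: bool) -> None:
        if ok:
            self.failures = 0
            return
        self.failures += 1
        if self.failures >= self.threshold:
            self.opened_at = self.clock()


def suggest(breaker: CircuitBreaker, ai_call: Callable[[], str],
            fallback: Callable[[], str]) -> Result:
    """Try the AI path when allowed; otherwise, or on failure, use the fallback."""
    if breaker.allow():
        try:
            text = ai_call()
            breaker.record(ok=True)
            return Result(text, degraded=False)
        except Exception:
            breaker.record(ok=False)
    return Result(fallback(), degraded=True)
```

The `degraded` flag is what a UI could use to tell users they are seeing a fallback, which also gives the user feedback loop something concrete to report.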

Development Best Practices

New best practices emerged around:

- Prompt engineering in code

- AI-assisted code review

- Documentation standards

- Testing methodologies
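
Treating prompts as code is one way to bring review, documentation, and testing standards to them: keep each prompt in source control with a name and version, and render it through a function that fails loudly when a placeholder is missing. The sketch uses Python's standard `string.Template`; the `PromptTemplate` class and the `code-review-summary` prompt are hypothetical examples.

```python
from dataclasses import dataclass
from string import Template


@dataclass(frozen=True)
class PromptTemplate:
    """A prompt kept in source control with a version tag, so changes go through code review."""
    name: str
    version: str
    body: str

    @property
    def tag(self) -> str:
        # Logged alongside each AI call so output can be traced to the exact prompt version.
        return f"{self.name}@{self.version}"

    def render(self, **values: str) -> str:
        # Template.substitute raises KeyError when a placeholder is missing,
        # which surfaces template/caller drift in tests rather than in production.
        return Template(self.body).substitute(**values)


REVIEW_PROMPT = PromptTemplate(
    name="code-review-summary",  # hypothetical template name
    version="2",
    body=(
        "You are reviewing a change in the $repo repository.\n"
        "Summarize the risks in this diff in at most $max_points bullet points:\n"
        "$diff"
    ),
)
```

Because rendering is an ordinary function, prompt changes can be covered by the same unit tests and review rules as the rest of the codebase.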

Technical Challenges Solved

Teams overcame several key challenges:

- Resource optimization for AI operations

- Integration with existing tools

- Performance bottleneck resolution

- Security boundary management
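
The article does not say how resource peaks and bottlenecks were resolved. A common technique for smoothing bursts of AI calls is to cap how many run concurrently. This sketch uses `asyncio.Semaphore` and `asyncio.gather` from the standard library; `run_bounded` is a hypothetical helper, and each task is assumed to be an async function wrapping one AI request.

```python
import asyncio
from typing import Awaitable, Callable, List, TypeVar

T = TypeVar("T")


async def run_bounded(tasks: List[Callable[[], Awaitable[T]]], limit: int) -> List[T]:
    """Run async AI calls with at most `limit` in flight; results keep input order."""
    # Created inside the running event loop, which keeps older Python versions happy.
    semaphore = asyncio.Semaphore(limit)

    async def guarded(task: Callable[[], Awaitable[T]]) -> T:
        async with semaphore:  # waits here while `limit` calls are already running
            return await task()

    return await asyncio.gather(*(guarded(t) for t in tasks))
```

`asyncio.gather` returns results in the order the tasks were passed, so callers can match responses to requests without extra bookkeeping.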

Looking Forward: Technical Evolution

The technical landscape continues to evolve:

- API optimization patterns are emerging

- New development workflows are being established

- Testing strategies are adapting

- Security models are maturing

The first 90 days of Copilot implementation have shown that success requires more than technical knowledge; it demands a fundamental rethinking of development practices and patterns. These insights offer valuable lessons for technical teams preparing for their own Copilot journey.

Share your technical implementation experiences in the comments. What challenges have you encountered? What solutions have you discovered?
