Kubernetes 101: Deploying and Managing Containers in the Cloud

Introduction

In today's cloud-native world, Kubernetes has become the go-to solution for deploying, managing, and scaling containerized applications. Whether you're a beginner or an experienced DevOps engineer, understanding Kubernetes is essential for running workloads in the cloud. This post covers the basics of Kubernetes, its architecture, and how to deploy and manage containers with it.

What is Kubernetes?

Kubernetes (K8s) is an open-source container orchestration platform that automates the deployment, scaling, and operation of application containers. Originally developed by Google, it is now maintained by the Cloud Native Computing Foundation (CNCF).

Key Features of Kubernetes:

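- Automated Deployment & Scaling: Rolls out containers from the desired state you declare and adjusts the number of running replicas, either on demand or automatically.

- Self-healing: Restarts containers that crash or fail their liveness checks, and recreates pods that were lost when a node failed.

- Load Balancing & Service Discovery: Gives each Service a stable DNS name and IP address and distributes traffic across the pods behind it.

- Rolling Updates & Rollbacks: Replaces pods incrementally so updates can proceed without downtime, and can revert to a previous version.

- Storage Orchestration: Mounts persistent storage into pods and supports dynamic provisioning of volumes.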
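
Self-healing and scaling both rest on the same idea: a controller compares the desired state you declared with the observed state and acts on the difference. The Python sketch below models one pass of that "reconcile" step for a replica count. It is a toy in-memory model under stated assumptions (the `Pod` class, the `reconcile` function, and the pod naming scheme are all hypothetical), not how the real Kubernetes controllers are written.

```python
from dataclasses import dataclass
from itertools import count


@dataclass
class Pod:
    name: str
    healthy: bool = True


_ids = count(1)  # hypothetical source of unique pod-name suffixes


def reconcile(desired: int, pods: list[Pod]) -> list[Pod]:
    """One pass of a toy reconcile loop: drop failed pods, then create or
    remove pods until the healthy count equals the desired count."""
    live = [p for p in pods if p.healthy]          # drop failed pods
    while len(live) < desired:                     # self-heal / scale up
        live.append(Pod(name=f"nginx-{next(_ids)}"))
    return live[:desired]                          # scale down if over


pods = reconcile(2, [])        # start from nothing: two pods are created
pods[0].healthy = False        # simulate a crashed pod
pods = reconcile(2, pods)      # the crashed pod is replaced
print(len(pods), all(p.healthy for p in pods))  # 2 True
```

Real controllers run this comparison continuously against state stored in etcd (via the API server), which is why a deleted or crashed pod reappears without any manual action.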

Kubernetes Architecture Overview

Kubernetes follows a control plane/worker node architecture (traditionally called master-worker) made up of the following components:

Master Node (Control Plane):

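- API Server: The front end of the control plane; kubectl, the other control plane components, and the kubelets all communicate with the cluster through its REST API.

- Controller Manager: Runs the controllers that continuously compare the cluster's actual state with the desired state and act to close the gap.

- Scheduler: Assigns newly created pods to worker nodes based on their resource requests, constraints, and available capacity.

- etcd: A consistent, distributed key-value store that holds all cluster data.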

Worker Nodes:

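- Kubelet: Ensures the containers of the pods assigned to its node are running and healthy, and reports node and pod status to the API server.

- Kube Proxy: Maintains network rules on each node so that traffic sent to a Service is forwarded to one of its pods.

- Container Runtime: Runs the actual containers, using any CRI-compatible runtime such as containerd or CRI-O (the built-in Docker Engine integration, dockershim, was removed in Kubernetes 1.24).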

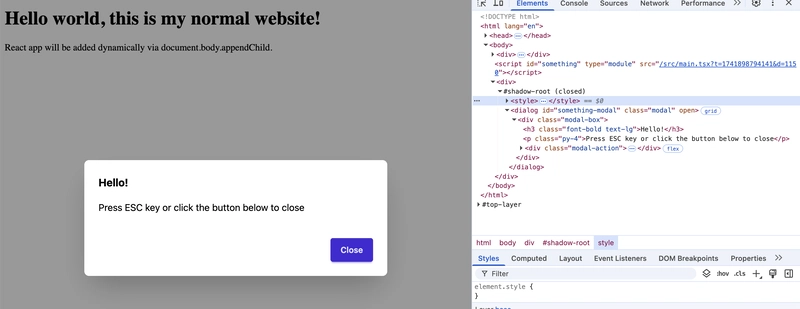
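
The scheduler's decision can be pictured in two phases: filter out nodes that cannot fit the pod, then score the remaining nodes and pick the best one. The sketch below assumes a single hypothetical scoring rule (prefer the node with the most free CPU left after placement, loosely similar in spirit to the default "least allocated" strategy). The node names and capacities are made up, and the real kube-scheduler runs many pluggable filter and score plugins.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    free_cpu_m: int   # free CPU in millicores
    free_mem_mi: int  # free memory in MiB


def schedule(cpu_m: int, mem_mi: int, nodes: list[Node]) -> Optional[str]:
    """Return the chosen node's name, or None if the pod would stay Pending."""
    # Filter phase: keep only nodes with enough free CPU and memory.
    feasible = [n for n in nodes if n.free_cpu_m >= cpu_m and n.free_mem_mi >= mem_mi]
    if not feasible:
        return None
    # Score phase: prefer the node with the most CPU left after placement.
    best = max(feasible, key=lambda n: n.free_cpu_m - cpu_m)
    return best.name


nodes = [
    Node("node-a", 500, 1024),
    Node("node-b", 2000, 4096),
    Node("node-c", 3000, 256),
]
print(schedule(250, 512, nodes))  # node-b (node-c lacks memory)
```

Spreading pods toward the least-loaded feasible node keeps headroom on every node, at the cost of packing less densely.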

Deploying an Application on Kubernetes

Let’s go step by step to deploy a simple Nginx web server using Kubernetes.

Step 1: Install Kubernetes

To get started, you need a running cluster and the kubectl command-line tool. For local development, use a tool like Minikube; for a cloud-based cluster, use a managed service such as Amazon EKS (AWS), Google Kubernetes Engine (GKE), or Azure Kubernetes Service (AKS).

Step 2: Create a Deployment YAML File

A Deployment keeps the desired number of pod replicas running and replaces any that fail. Save the following manifest as nginx-deployment.yaml:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
            - name: nginx
              image: nginx:1.25
              ports:
                - containerPort: 80
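
A Deployment's `spec.selector.matchLabels` must match the labels in `spec.template.metadata.labels`; otherwise the API server rejects the manifest. The Python sketch below checks that rule on a manifest that is assumed to be already parsed into a dict (for example with a YAML library, not shown). The `selector_matches` helper is hypothetical and models only `matchLabels`, not `matchExpressions`.

```python
def selector_matches(match_labels: dict[str, str], labels: dict[str, str]) -> bool:
    """True if every key/value pair in matchLabels appears in labels.

    Extra labels on the pod template are allowed.
    """
    return all(labels.get(key) == value for key, value in match_labels.items())


# The relevant parts of nginx-deployment.yaml, as a parsed dict.
deployment = {
    "spec": {
        "selector": {"matchLabels": {"app": "nginx"}},
        "template": {"metadata": {"labels": {"app": "nginx"}}},
    }
}

spec = deployment["spec"]
ok = selector_matches(spec["selector"]["matchLabels"], spec["template"]["metadata"]["labels"])
print(ok)  # True
```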

Step 3: Apply the Deployment

Run the following command to deploy the application:

kubectl apply -f nginx-deployment.yaml

Step 4: Expose the Deployment as a Service

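Pods are ephemeral and their IP addresses change, so expose the Deployment through a Service, which gives the pods a stable address and load-balances across them. Save the following as nginx-service.yaml: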

    apiVersion: v1
    kind: Service
    metadata:
      name: nginx-service
    spec:
      selector:
        app: nginx
      ports:
        - protocol: TCP
          port: 80
          targetPort: 80
      type: LoadBalancer
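
Conceptually, a Service tracks a list of endpoints: the IPs of ready pods whose labels match its selector, each paired with `targetPort`. Clients connect to the Service's `port`, and kube-proxy forwards the traffic to one of those endpoints. The sketch below models that selection with hypothetical pod names and IP addresses; it illustrates the idea and is not the EndpointSlice controller's code.

```python
from dataclasses import dataclass, field


@dataclass
class PodInfo:
    name: str
    ip: str
    ready: bool
    labels: dict[str, str] = field(default_factory=dict)


def endpoints(selector: dict[str, str], target_port: int, pods: list[PodInfo]) -> list[str]:
    """Return "ip:port" for each ready pod whose labels match the selector."""
    return [
        f"{p.ip}:{target_port}"
        for p in pods
        if p.ready and all(p.labels.get(k) == v for k, v in selector.items())
    ]


pods = [
    PodInfo("nginx-a", "10.244.0.5", True, {"app": "nginx"}),
    PodInfo("nginx-b", "10.244.0.6", False, {"app": "nginx"}),  # not ready yet
    PodInfo("redis-a", "10.244.0.7", True, {"app": "redis"}),
]
print(endpoints({"app": "nginx"}, 80, pods))  # ['10.244.0.5:80']
```

Because only ready pods are included, a pod that is still starting receives no traffic until it becomes ready.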

Apply the service:

kubectl apply -f nginx-service.yaml

Step 5: Verify the Deployment

Check if the pods are running:

kubectl get pods

Check the service details:

kubectl get svc

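Once the LoadBalancer is assigned an external IP (shown in the EXTERNAL-IP column), you can reach the Nginx web server at that address. On Minikube, the external IP stays pending until you run minikube tunnel in a separate terminal.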
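
To check readiness from a script instead of reading the table, `kubectl get pods -o json` prints the pod list as JSON. The sketch below parses that text and reports pods that are not Running with every container ready. The field names (`items`, `metadata.name`, `status.phase`, `status.containerStatuses[].ready`) come from the Pod API; the `not_ready` helper and the sample pod names are hypothetical.

```python
import json


def not_ready(kubectl_json: str) -> list[str]:
    """Return names of pods that are not Running with every container ready.

    kubectl_json is the text printed by `kubectl get pods -o json`.
    """
    pod_list = json.loads(kubectl_json)
    problems = []
    for pod in pod_list.get("items", []):
        status = pod.get("status", {})
        containers = status.get("containerStatuses", [])
        healthy = (
            status.get("phase") == "Running"
            and bool(containers)
            and all(c.get("ready", False) for c in containers)
        )
        if not healthy:
            problems.append(pod["metadata"]["name"])
    return problems


# Made-up sample shaped like kubectl's JSON output (a List of Pods).
sample = json.dumps({
    "items": [
        {"metadata": {"name": "nginx-deployment-abc12"},
         "status": {"phase": "Running",
                    "containerStatuses": [{"name": "nginx", "ready": True}]}},
        {"metadata": {"name": "nginx-deployment-def34"},
         "status": {"phase": "Pending"}},
    ]
})
print(not_ready(sample))  # ['nginx-deployment-def34']
```

Against a live cluster you could pass it the real output, for example `subprocess.run(["kubectl", "get", "pods", "-o", "json"], capture_output=True, text=True, check=True).stdout`.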

Managing Kubernetes Workloads

Once your application is deployed, Kubernetes provides various management capabilities:

- Scaling Applications:

kubectl scale --replicas=5 deployment/nginx-deployment

This sets the desired replica count to 5, and the Deployment creates or removes pods until five are running.

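- Rolling Updates: Update the application to a new image version without downtime, then follow the rollout until it completes:

kubectl set image deployment/nginx-deployment nginx=nginx:1.26

kubectl rollout status deployment/nginx-deployment

If the new version misbehaves, roll back to the previous revision with kubectl rollout undo deployment/nginx-deployment.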

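- Monitoring Logs: Stream logs for debugging. With a Deployment as the target, kubectl picks one of its pods; pass a pod name from kubectl get pods to follow a specific pod instead:

kubectl logs -f deployment/nginx-deployment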
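
During a rolling update, the Deployment's default RollingUpdate strategy bounds how far the rollout may deviate from the desired replica count: `maxSurge` (extra pods allowed, with percentages rounded up) and `maxUnavailable` (pods allowed to be unavailable, with percentages rounded down), both defaulting to 25%. The sketch below computes those bounds; the `rollout_bounds` function name is hypothetical, and it uses integer arithmetic to avoid floating-point rounding surprises.

```python
def rollout_bounds(replicas: int, max_surge_pct: int = 25,
                   max_unavailable_pct: int = 25) -> tuple[int, int]:
    """Return (max total pods, min available pods) during a rolling update.

    maxSurge percentages round up; maxUnavailable percentages round down.
    """
    surge = (replicas * max_surge_pct + 99) // 100        # ceiling division
    unavailable = (replicas * max_unavailable_pct) // 100  # floor division
    return replicas + surge, replicas - unavailable


print(rollout_bounds(5))  # (7, 4)
print(rollout_bounds(2))  # (3, 2)
```

With the 5 replicas from the scaling example, at most 7 pods exist and at least 4 stay available while the new image rolls out.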

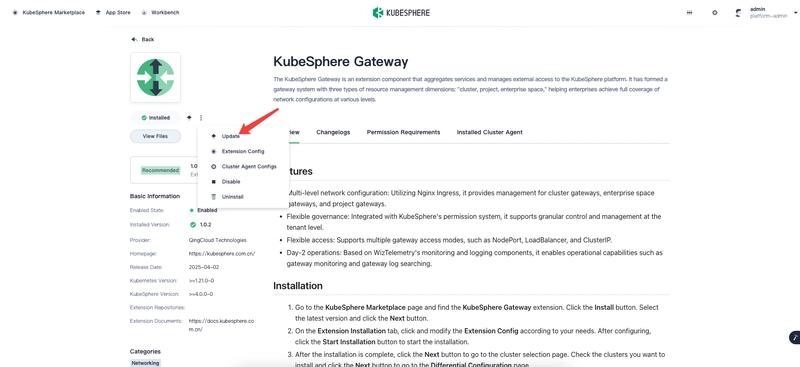

Kubernetes Networking

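Kubernetes networking gives every pod its own IP address and lets pods reach each other across nodes without NAT. Services and Ingress build on this to provide stable endpoints inside the cluster and access from outside it.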

Networking Components:

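- ClusterIP (the default Service type): Exposes a Service on an internal IP that is reachable only from within the cluster.

- NodePort: Exposes a Service on the same static port (30000-32767 by default) on every node's IP address.

- LoadBalancer: Provisions an external load balancer, typically from the cloud provider, that forwards traffic to the Service.

- Ingress Controller: Routes external HTTP and HTTPS traffic to Services based on the host and path rules defined in Ingress resources.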
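
An Ingress controller chooses a backend Service by matching the request's host and path against the Ingress rules. The sketch below is a simplified model of `pathType: Prefix` matching, where prefixes match whole path segments and the longest match wins. The rules, hostnames, and Service names are hypothetical, and real controllers such as ingress-nginx handle many more cases (Exact paths, wildcard hosts, default backends).

```python
from typing import Optional

# Hypothetical Ingress rules: (host, path prefix, backend Service)
RULES = [
    ("shop.example.com", "/", "nginx-service"),
    ("shop.example.com", "/api", "api-service"),
]


def segments(path: str) -> list[str]:
    """Split a URL path into non-empty segments: "/api/v1" -> ["api", "v1"]."""
    return [s for s in path.split("/") if s]


def route(host: str, path: str) -> Optional[str]:
    """Return the backend of the longest matching Prefix rule, or None."""
    request = segments(path)
    best, best_len = None, -1
    for rule_host, prefix, backend in RULES:
        pre = segments(prefix)
        # Segment-wise prefix: "/api" matches "/api/v1" but not "/apis".
        if rule_host == host and request[:len(pre)] == pre and len(pre) > best_len:
            best, best_len = backend, len(pre)
    return best


print(route("shop.example.com", "/api/v1/items"))  # api-service
print(route("shop.example.com", "/apis"))          # nginx-service
print(route("other.example.com", "/"))             # None
```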

Kubernetes Security Best Practices

Security is critical when managing Kubernetes clusters. Some best practices include:

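- RBAC (Role-Based Access Control): Grant users and service accounts only the permissions they need, using Roles/ClusterRoles and their bindings.

- Network Policies: Restrict which pods can communicate with each other (this requires a network plugin that enforces NetworkPolicy).

- Secrets Management: Keep sensitive data in Secrets rather than in images or manifests. Secrets are only base64-encoded by default, so enable encryption at rest and limit who can read them.

- Pod Security Admission: Enforce the Pod Security Standards (privileged, baseline, restricted) per namespace. It replaces PodSecurityPolicy, which was removed in Kubernetes 1.25.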
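
RBAC permissions are purely additive: a request is allowed if any rule bound to the caller grants that verb on that resource in that API group, and there are no deny rules. The sketch below models that check for one Role. The rule dicts mirror the shape of a Role's `rules` list (`apiGroups`, `resources`, `verbs`, with `"*"` as a wildcard and `""` for the core API group), but the `allowed` function is a hypothetical illustration, not the API server's authorizer.

```python
# Rules in the shape of a Role's `rules` list; "" is the core API group.
POD_READER = [
    {"apiGroups": [""], "resources": ["pods", "pods/log"], "verbs": ["get", "list", "watch"]},
]


def _has(values: list[str], item: str) -> bool:
    """True if the list contains the item or the "*" wildcard."""
    return "*" in values or item in values


def allowed(rules: list[dict], api_group: str, resource: str, verb: str) -> bool:
    """True if any rule grants the verb (RBAC has no deny rules)."""
    return any(
        _has(r["apiGroups"], api_group)
        and _has(r["resources"], resource)
        and _has(r["verbs"], verb)
        for r in rules
    )


print(allowed(POD_READER, "", "pods", "list"))            # True
print(allowed(POD_READER, "", "pods", "delete"))          # False
print(allowed(POD_READER, "apps", "deployments", "get"))  # False
```

Because nothing can subtract a permission, least privilege means granting narrowly in the first place rather than relying on later restrictions.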

Helm: Kubernetes Package Manager

Helm simplifies Kubernetes application deployment by using pre-configured templates called charts.

Basic Helm Commands:

- Add a chart repository, then install a chart from it:

helm repo add bitnami https://charts.bitnami.com/bitnami

helm install my-release bitnami/nginx

- List installed releases:

helm list

- Uninstall a release:

helm uninstall my-release
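
When you install a chart, Helm combines the chart's default `values.yaml` with the values you supply (via `-f` files or `--set`): your values take precedence, and nested maps are merged key by key. The sketch below models that precedence with a recursive dict merge. The value keys are hypothetical examples, and Helm's own coalescing has extra rules not modeled here (for example, overriding a key with `null` removes it).

```python
import copy


def merge_values(defaults: dict, overrides: dict) -> dict:
    """Return defaults deep-merged with overrides; overrides win on conflicts."""
    merged = copy.deepcopy(defaults)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_values(merged[key], value)  # merge nested maps
        else:
            merged[key] = copy.deepcopy(value)              # override scalars/lists
    return merged


chart_defaults = {"replicaCount": 1, "service": {"type": "LoadBalancer", "port": 80}}
user_values = {"replicaCount": 3, "service": {"type": "ClusterIP"}}
print(merge_values(chart_defaults, user_values))
# {'replicaCount': 3, 'service': {'type': 'ClusterIP', 'port': 80}}
```

On the command line, the same overrides could be passed as `--set replicaCount=3,service.type=ClusterIP`, assuming the chart exposes those keys.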

Monitoring and Logging in Kubernetes

Observability is crucial for maintaining Kubernetes clusters.

Tools for Monitoring:

- Prometheus & Grafana: Collect and visualize metrics.

- Fluentd & ELK Stack: Centralized logging.

- Kubernetes Dashboard: Provides a web-based UI for monitoring cluster status.
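
Prometheus commonly stores counters (such as total HTTP requests served) that only increase, and dashboards usually graph their per-second rate. The sketch below computes a simplified rate over a window of `(timestamp, value)` samples, treating any drop in value as a counter reset (for example after a container restart). It is an approximation for illustration: PromQL's `rate()` additionally extrapolates to the edges of the query window. The sample data is made up.

```python
def simple_rate(samples: list[tuple[float, float]]) -> float:
    """Per-second increase of a counter over the sampled window.

    samples: (unix_timestamp_seconds, counter_value) pairs sorted by time.
    A decrease is treated as a reset, so the new value counts as fresh increase.
    """
    if len(samples) < 2:
        return 0.0
    increase = 0.0
    for (_, prev), (_, curr) in zip(samples, samples[1:]):
        increase += curr - prev if curr >= prev else curr
    elapsed = samples[-1][0] - samples[0][0]
    return increase / elapsed if elapsed > 0 else 0.0


# Counter grows by 30, then resets after a restart and climbs back to 30.
samples = [(0, 100), (15, 130), (30, 30)]
print(simple_rate(samples))  # 2.0
```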

Conclusion

Kubernetes simplifies the deployment and management of containerized applications, making it a must-have skill for DevOps engineers. By understanding the basics of Kubernetes and practicing with real-world deployments, you can unlock the full potential of cloud-native development.

If you found this guide helpful, share it with your network and stay tuned for more DevOps insights!
