Implementing RAG with Spring AI and Pinecone: A Practical Guide


Introduction

Retrieval-Augmented Generation (RAG) has emerged as a powerful technique for building AI applications that combine information retrieval with generative language models. This guide demonstrates how to implement a RAG system using Spring AI with Pinecone as the vector database, specifically for creating a documentation chatbot.

What is RAG?

RAG combines two key components:

- Retrieval: Finds relevant information from a knowledge base using semantic search

- Generation: Uses a language model to generate contextual responses based on retrieved information
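The two components meet in the prompt. The retrieved chunks are pasted into the model's input as context, next to the user's question. The sketch below shows that augmentation step in plain Java, independent of Spring AI. The `RagPrompt` class and its template wording are illustrative assumptions, not Spring AI's built-in advisor template.

```java
import java.util.List;

// Hypothetical helper showing the "augmentation" step of RAG:
// retrieved chunks become the context section of the prompt sent to the LLM.
final class RagPrompt {
    private static final String TEMPLATE = """
            Answer the question based on the following context:
            {context}

            Question: {question}
            If you don't know the answer, say "I don't know".
            """;

    private RagPrompt() {}

    /** Joins the retrieved chunks with "---" separators and fills both placeholders. */
    static String build(String question, List<String> retrievedChunks) {
        String context = String.join("\n---\n", retrievedChunks);
        return TEMPLATE.replace("{context}", context).replace("{question}", question);
    }
}
```

In the Spring AI version later in this guide, `QuestionAnswerAdvisor` performs this step for you; the sketch only makes the mechanics visible.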

System Architecture

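Ingestion: [Documentation Website] → [Scraper] → [Chunking] → [Pinecone Vector DB]

Query: [User Query] → [Spring AI] → [Semantic Search] → [LLM Generation] → [Response]

The Semantic Search step reads from the Pinecone Vector DB that the ingestion flow populates.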

Prerequisites

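- Pinecone account (free tier available) and an index whose dimension matches your embedding model

- Java 17+ and a Spring Boot 3.x application (Spring AI does not support Spring Boot 2.x)

- A chat model and an embedding model configured through Spring AI (for example, OpenAI); the Pinecone store uses the embedding model to vectorize both chunks and queries

- Basic understanding of vector databases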

Implementation Steps

1. Setting Up Pinecone Integration

Gradle Dependency

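implementation "org.springframework.ai:spring-ai-pinecone-store-spring-boot-starter"

The version is managed by the Spring AI BOM (`org.springframework.ai:spring-ai-bom`). This is the starter name used in the Spring AI 1.0 milestones; in Spring AI 1.0 GA the Pinecone starter is named `spring-ai-starter-vector-store-pinecone`.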

Configuration (application.yml)

spring:
  ai:
    vectorstore:
      pinecone:
        api-key: ${PINECONE_API_KEY}
        environment: ${PINECONE_ENV}
        index-name: ${PINECONE_INDEX}
        project-id: ${PINECONE_PROJECT_ID}
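All four values come from environment variables, and an unset one is an easy mistake to make when deploying. A small pre-flight check can report missing variables before the application starts. The sketch below is plain Java; the `PineconeEnvCheck` class is hypothetical and only knows the four variable names used in the configuration above.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical pre-flight check for the environment variables referenced in application.yml.
final class PineconeEnvCheck {
    static final List<String> REQUIRED = List.of(
            "PINECONE_API_KEY", "PINECONE_ENV", "PINECONE_INDEX", "PINECONE_PROJECT_ID");

    private PineconeEnvCheck() {}

    /** Returns the required variable names that are absent or blank in the given environment. */
    static List<String> missing(Map<String, String> env) {
        List<String> result = new ArrayList<>();
        for (String name : REQUIRED) {
            String value = env.get(name);
            if (value == null || value.isBlank()) {
                result.add(name);
            }
        }
        return result;
    }
}
```

Usage: call `PineconeEnvCheck.missing(System.getenv())` at the top of `main` and exit with a clear message if the list is not empty.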

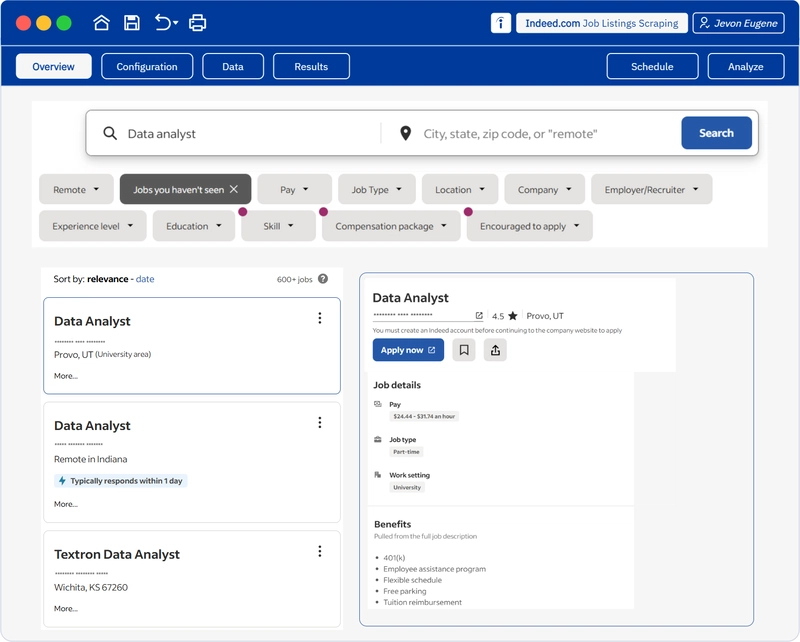

2. Document Processing Pipeline

Web Scraper Implementation

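import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;
import org.springframework.ai.document.Document;

public class DocumentationScraper {
    // URLs already fetched, so cyclic links are not followed twice
    private final Set<String> visitedUrls = new HashSet<>();
    // Only pages on this host are crawled
    private final String baseDomain;

    public DocumentationScraper(String baseUrl) {
        this.baseDomain = extractDomain(baseUrl);
    }

    public List<Document> scrape(String startUrl) {
        List<Document> documents = new ArrayList<>();
        scrapeRecursive(startUrl, documents);
        return documents;
    }

    // Includes URL normalization, same-domain checking, and content extraction
    // ... (full implementation as in original)
}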
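The elided helpers handle URL normalization and same-domain checking. One way to do both with `java.net.URI` is sketched below. `UrlRules` and its normalization choices (drop the query and fragment, lowercase the host, strip a trailing slash) are assumptions; HTML fetching and content extraction are not shown.

```java
import java.net.URI;
import java.net.URISyntaxException;
import java.util.Locale;
import java.util.Optional;

// Hypothetical helpers for a same-domain documentation crawler.
final class UrlRules {
    private UrlRules() {}

    /** Lowercased host of the URL, e.g. "docs.example.com". */
    static String extractDomain(String url) {
        String host = URI.create(url).getHost();
        if (host == null) {
            throw new IllegalArgumentException("URL has no host: " + url);
        }
        return host.toLowerCase(Locale.ROOT);
    }

    /**
     * Resolves a link against the page it appeared on and normalizes it, so that
     * "/guide/", "/guide#install" and "/guide?utm=x" count as the same page.
     * Returns empty for unparsable or host-less links (mailto:, javascript:, ...).
     */
    static Optional<String> normalize(String pageUrl, String href) {
        try {
            URI resolved = new URI(pageUrl).resolve(href.trim());
            if (resolved.getHost() == null) return Optional.empty();
            String path = resolved.getPath() == null || resolved.getPath().isEmpty() ? "/" : resolved.getPath();
            if (path.length() > 1 && path.endsWith("/")) {
                path = path.substring(0, path.length() - 1);
            }
            URI clean = new URI(resolved.getScheme(), null,
                    resolved.getHost().toLowerCase(Locale.ROOT), resolved.getPort(), path, null, null);
            return Optional.of(clean.toString());
        } catch (URISyntaxException | IllegalArgumentException e) {
            return Optional.empty();
        }
    }

    /** True if the URL is on the crawler's base domain. */
    static boolean isSameDomain(String url, String baseDomain) {
        return extractDomain(url).equals(baseDomain);
    }
}
```

Normalizing before inserting into `visitedUrls` is what keeps the crawler from fetching the same page under several spellings.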

Document Chunking Service

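import java.util.List;
import java.util.Map;
import org.springframework.ai.document.Document;
import org.springframework.ai.transformer.splitter.TokenTextSplitter;
import org.springframework.ai.vectorstore.VectorStore;
import org.springframework.stereotype.Service;

@Service
public class DocumentationService {
    private final VectorStore vectorStore;
    private final TokenTextSplitter textSplitter;

    public DocumentationService(VectorStore vectorStore) {
        this.vectorStore = vectorStore;
        // Note: TokenTextSplitter has no chunk-overlap parameter
        this.textSplitter = new TokenTextSplitter(
                2000, // target chunk size in tokens (suited to technical documentation)
                300,  // minimum chunk size in characters before a sentence break is used
                100,  // chunks shorter than this (in characters) are discarded, not embedded
                15,   // maximum chunks per document; text beyond that is dropped
                true  // keep separators such as line breaks in the chunk text
        );
    }

    public List<Document> processDocument(String content, Map<String, Object> metadata) {
        Document originalDoc = new Document(content, metadata);
        List<Document> chunks = textSplitter.split(originalDoc);

        // Enhance metadata for better retrieval.
        // Map.put returns the previous value, not the map, so the calls cannot be chained.
        for (int i = 0; i < chunks.size(); i++) {
            Map<String, Object> chunkMetadata = chunks.get(i).getMetadata();
            chunkMetadata.put("chunk_number", i);
            chunkMetadata.put("total_chunks", chunks.size());
        }
        return chunks;
    }
}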
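Because TokenTextSplitter cannot overlap chunks, the 100-200 token overlap recommended under Best Practices needs a custom splitter. The sketch below uses sliding windows and approximates tokens with whitespace-separated words (a real setup would count tokens with the embedding model's tokenizer). It records the same `chunk_number`/`total_chunks` metadata as the service above; `OverlapChunker` is hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;

// Hypothetical splitter: fixed-size windows of words, each sharing `overlap`
// words with the previous window so context at chunk boundaries is preserved.
final class OverlapChunker {
    record Chunk(String text, Map<String, Object> metadata) {}

    private OverlapChunker() {}

    static List<Chunk> split(String content, int chunkSize, int overlap) {
        if (chunkSize <= 0 || overlap < 0 || overlap >= chunkSize) {
            throw new IllegalArgumentException("require 0 <= overlap < chunkSize");
        }
        if (content.isBlank()) return List.of();
        String[] words = content.trim().split("\\s+");
        List<String> texts = new ArrayList<>();
        int step = chunkSize - overlap; // how far each window advances
        for (int start = 0; start < words.length; start += step) {
            int end = Math.min(start + chunkSize, words.length);
            texts.add(String.join(" ", Arrays.copyOfRange(words, start, end)));
            if (end == words.length) break; // this window reached the end of the text
        }
        List<Chunk> chunks = new ArrayList<>();
        for (int i = 0; i < texts.size(); i++) {
            chunks.add(new Chunk(texts.get(i), Map.of("chunk_number", i, "total_chunks", texts.size())));
        }
        return chunks;
    }
}
```

With `chunkSize = 4` and `overlap = 1`, ten words produce three chunks, and each chunk starts with the last word of the previous one.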

3. Knowledge Base Initialization

REST Endpoint for Loading Data

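import io.swagger.v3.oas.annotations.tags.Tag;
import org.springframework.http.ResponseEntity;
import org.springframework.web.bind.annotation.PostMapping;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
@RequestMapping("/document")
@Tag(name = "AI Module API") // OpenAPI grouping (springdoc)
public class DocumentController {
    private final DocumentationService documentationService;

    // Constructor injection; without it the final field is never initialized
    public DocumentController(DocumentationService documentationService) {
        this.documentationService = documentationService;
    }

    @PostMapping("/load-data")
    public ResponseEntity<String> loadDocumentation() {
        // Defined on DocumentationService (not shown above): scrape, chunk, and store
        documentationService.scrapeAndStoreDocumentation("https://docs.openwes.top");
        return ResponseEntity.ok("Documentation loaded successfully");
    }
}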
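`scrapeAndStoreDocumentation` itself is not shown in this guide. Conceptually it chains the ingestion stages from the architecture diagram: scrape pages, split each page into chunks, and write the chunks to the vector store in batches. The plain-Java sketch below abstracts the three stages as functions. `IngestPipeline`, its constructor parameters, and the batch size are assumptions. In the Spring version, the store function would call `vectorStore.add(batch)`.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;
import java.util.function.Function;

// Hypothetical orchestration of the ingestion flow:
// scrape -> split -> store, writing chunks to the store in fixed-size batches.
final class IngestPipeline<P, C> {
    private final Function<String, List<P>> scraper; // start URL -> pages
    private final Function<P, List<C>> splitter;     // page -> chunks
    private final Consumer<List<C>> store;           // batch of chunks -> vector store
    private final int batchSize;

    IngestPipeline(Function<String, List<P>> scraper, Function<P, List<C>> splitter,
                   Consumer<List<C>> store, int batchSize) {
        if (batchSize <= 0) throw new IllegalArgumentException("batchSize must be positive");
        this.scraper = scraper;
        this.splitter = splitter;
        this.store = store;
        this.batchSize = batchSize;
    }

    /** Runs the pipeline and returns the total number of chunks stored. */
    int run(String startUrl) {
        List<C> buffer = new ArrayList<>();
        int stored = 0;
        for (P page : scraper.apply(startUrl)) {
            for (C chunk : splitter.apply(page)) {
                buffer.add(chunk);
                if (buffer.size() == batchSize) {
                    store.accept(List.copyOf(buffer)); // hand over an immutable snapshot
                    stored += buffer.size();
                    buffer.clear();
                }
            }
        }
        if (!buffer.isEmpty()) { // flush the final partial batch
            store.accept(List.copyOf(buffer));
            stored += buffer.size();
        }
        return stored;
    }
}
```

Batching bounds the size of each embedding and upsert request, which is the point of the "batches of 50-100" advice under Performance Optimization.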

4. Implementing RAG in Chat Completions

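import org.springframework.ai.chat.client.ChatClient;
import org.springframework.ai.chat.client.advisor.QuestionAnswerAdvisor;
import org.springframework.ai.chat.model.ChatModel;
import org.springframework.ai.vectorstore.SearchRequest;
import org.springframework.ai.vectorstore.VectorStore;
import org.springframework.stereotype.Service;

@Service
public class ChatService {
    private final ChatClient chatClient;
    private final VectorStore vectorStore;

    public ChatService(ChatModel chatModel, VectorStore vectorStore) {
        // Build the ChatClient once and reuse it across requests
        this.chatClient = ChatClient.create(chatModel);
        this.vectorStore = vectorStore;
    }

    public String generateResponse(String query) {
        // Milestone-era API; Spring AI 1.0 GA uses
        // SearchRequest.builder().topK(5).similarityThreshold(0.7).build()
        SearchRequest searchRequest = SearchRequest.defaults()
                .withTopK(5)                   // retrieve the top 5 relevant chunks
                .withSimilarityThreshold(0.7); // ignore chunks scoring below 0.7

        return chatClient.prompt()
                .user(query) // the user's question; without it the prompt is empty
                .advisors(new QuestionAnswerAdvisor(vectorStore, searchRequest))
                .call()
                .content();
    }
}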
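The advisor sends the user's question to the vector store. The store embeds it and returns at most `topK` chunks whose similarity is at least the threshold, and those chunks are added to the prompt as context. The plain-Java sketch below shows that selection rule over in-memory vectors using cosine similarity. It illustrates the semantics of `topK` and the threshold only. Pinecone uses approximate nearest-neighbour search, and its score depends on the index metric. `TopKSearch` is hypothetical.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// Hypothetical brute-force retrieval illustrating topK + similarityThreshold semantics.
final class TopKSearch {
    record Hit(String id, double score) {}

    private TopKSearch() {}

    static double cosine(double[] a, double[] b) {
        if (a.length != b.length) throw new IllegalArgumentException("dimension mismatch");
        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        if (normA == 0 || normB == 0) return 0;
        return dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }

    /** Scores every chunk, keeps those at or above the threshold, returns the best topK. */
    static List<Hit> search(double[] query, List<String> ids, List<double[]> vectors,
                            int topK, double threshold) {
        List<Hit> hits = new ArrayList<>();
        for (int i = 0; i < ids.size(); i++) {
            double score = cosine(query, vectors.get(i));
            if (score >= threshold) hits.add(new Hit(ids.get(i), score));
        }
        hits.sort(Comparator.comparingDouble(Hit::score).reversed());
        return List.copyOf(hits.subList(0, Math.min(topK, hits.size())));
    }
}
```

The threshold and `topK` act independently: a strict threshold can return fewer than `topK` chunks, or none, in which case the model answers without retrieved context.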

Best Practices

- Optimal Chunking:
  - Technical content: 1500-2500 tokens
  - Narrative content: 500-1000 tokens
  - Include overlap (100-200 tokens) for context preservation; Spring AI's TokenTextSplitter has no overlap setting, so this requires a custom splitter

- Enhanced Metadata:
  metadata.put("document_type", "API Reference");
  metadata.put("last_updated", "2024-03-01");
  metadata.put("relevance_score", 0.95);

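- Hybrid Search: blend semantic (dense-vector) similarity with keyword matching, for example giving the keyword score a weight of 0.3. Spring AI's SearchRequest has no hybrid-search or keyword-weight option, so the blend must happen elsewhere: in Pinecone itself through sparse-dense vectors (queried with the Pinecone client), or by re-ranking the retrieved chunks in application code.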

- Prompt Engineering:
  PromptTemplate template = new PromptTemplate("""
          Answer the question based on the following context:
          {context}

          Question: {question}
          If you don't know the answer, say "I don't know".
          """);
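A keyword weight of 0.3 can be read as a convex combination of the two scores: hybrid = 0.7 × semantic + 0.3 × keyword. One way to apply it without store support is to re-rank the chunks the dense search already returned. The sketch below assumes both scores are normalized to [0, 1]. `HybridRerank` and its term-overlap keyword score are illustrative stand-ins, not Pinecone's sparse-dense scoring or BM25.

```java
import java.util.Comparator;
import java.util.HashSet;
import java.util.List;
import java.util.Locale;
import java.util.Set;

// Hypothetical re-ranker: blends a dense (semantic) score with a simple keyword score.
final class HybridRerank {
    record Candidate(String text, double semanticScore) {}
    record Ranked(String text, double score) {}

    private HybridRerank() {}

    /** Fraction of distinct query terms that appear in the text (0..1). */
    static double keywordScore(String query, String text) {
        Set<String> queryTerms = terms(query);
        if (queryTerms.isEmpty()) return 0;
        Set<String> textTerms = terms(text);
        long matched = queryTerms.stream().filter(textTerms::contains).count();
        return (double) matched / queryTerms.size();
    }

    /** Scores each candidate as (1 - w) * semantic + w * keyword and sorts best first. */
    static List<Ranked> rerank(String query, List<Candidate> candidates, double keywordWeight) {
        return candidates.stream()
                .map(c -> new Ranked(c.text(),
                        (1 - keywordWeight) * c.semanticScore()
                                + keywordWeight * keywordScore(query, c.text())))
                .sorted(Comparator.comparingDouble(Ranked::score).reversed())
                .toList();
    }

    // Lowercased letter/digit runs; punctuation and whitespace are separators.
    private static Set<String> terms(String s) {
        Set<String> out = new HashSet<>();
        for (String t : s.toLowerCase(Locale.ROOT).split("[^\\p{L}\\p{N}]+")) {
            if (!t.isEmpty()) out.add(t);
        }
        return out;
    }
}
```

Exact keyword hits (API names, configuration keys) can lift a chunk that scored slightly lower semantically, which is the usual motivation for hybrid search over technical documentation.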

Performance Optimization

- Caching: Implement Redis caching for frequent queries

- Async Processing: Use @Async for document ingestion (requires @EnableAsync on a configuration class)

- Batch Processing: Process documents in batches of 50-100
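Before adding Redis, the caching idea can be tried in-process. The sketch below is a small LRU cache for query-to-answer pairs built on `LinkedHashMap`'s access order. It is a single-JVM stand-in, with no sharing across instances and no TTL, and the `QueryCache` name, its key normalization, and the capacity are assumptions. In a Spring application, the usual route is Spring's cache abstraction (`@Cacheable`) backed by Redis.

```java
import java.util.LinkedHashMap;
import java.util.Locale;
import java.util.Map;
import java.util.function.Function;

// Hypothetical in-memory LRU cache for frequent queries (a local stand-in for Redis).
final class QueryCache {
    private final Map<String, String> entries;

    QueryCache(int capacity) {
        // accessOrder = true: iteration runs from least to most recently used
        this.entries = new LinkedHashMap<>(16, 0.75f, true) {
            @Override
            protected boolean removeEldestEntry(Map.Entry<String, String> eldest) {
                return size() > capacity; // evict the least recently used entry
            }
        };
    }

    /** Returns the cached answer, or computes it with `answerer` and caches it. */
    synchronized String get(String query, Function<String, String> answerer) {
        // Normalize case and whitespace so trivially different phrasings share an entry
        String key = query.trim().toLowerCase(Locale.ROOT).replaceAll("\\s+", " ");
        return entries.computeIfAbsent(key, k -> answerer.apply(query));
    }

    synchronized int size() {
        return entries.size();
    }
}
```

Usage: wrap the RAG call, e.g. `cache.get(query, chatService::generateResponse)`, so repeated questions skip both retrieval and generation.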

Evaluation Metrics

| Metric | Target | Measurement Method |
|---|---|---|
| Retrieval Precision | >85% | Human evaluation |
| Response Latency | <2s | Performance testing |
| User Satisfaction | >4/5 | Feedback surveys |
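One common reading of retrieval precision is the share of retrieved chunks that reviewers judged relevant, computed per query and then averaged, so that every query counts equally. The sketch below shows that arithmetic against the 85% target. The `PrecisionReport` class and the label format (one boolean per retrieved chunk) are assumptions.

```java
import java.util.List;

// Hypothetical evaluation helper: each inner list holds reviewer judgments
// (true = relevant) for the chunks retrieved for one test query.
final class PrecisionReport {
    private PrecisionReport() {}

    /** Precision for one query: relevant retrieved chunks / retrieved chunks (0 if none). */
    static double precision(List<Boolean> judgments) {
        if (judgments.isEmpty()) return 0;
        long relevant = judgments.stream().filter(Boolean::booleanValue).count();
        return (double) relevant / judgments.size();
    }

    /** Mean precision across queries, each query weighted equally. */
    static double meanPrecision(List<List<Boolean>> perQuery) {
        return perQuery.stream().mapToDouble(PrecisionReport::precision).average().orElse(0);
    }

    static boolean meetsTarget(List<List<Boolean>> perQuery, double target) {
        return meanPrecision(perQuery) > target;
    }
}
```

For example, with `topK = 5`, a query where 4 of 5 chunks are relevant scores 0.8, and one where all 5 are relevant scores 1.0, for a mean of 0.9.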

Conclusion

This implementation demonstrates how to build a production-ready RAG system using Spring AI and Pinecone. Key advantages include:

- Accurate, context-aware responses for documentation queries

- Scalable vector search capabilities

- Easy integration with existing Spring applications

Next Steps

- Implement user feedback mechanism:
  @PostMapping("/feedback")
  public void logFeedback(@RequestBody FeedbackDTO feedback) {
      // Store feedback for continuous improvement
  }

- Add analytics dashboard for query patterns

- Implement automatic periodic document updates
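`FeedbackDTO` is not defined in this guide. The sketch below gives it a minimal shape and adds the aggregation that the ">4/5" user-satisfaction target in the evaluation table implies. The record fields (`query`, `rating` on a 1-5 scale, `comment`) and the `FeedbackStats` helper are hypothetical. Java records can serve as `@RequestBody` types with the Jackson version that Spring Boot 3 ships.

```java
import java.util.List;

// Hypothetical request body for POST /feedback; rating uses a 1-5 scale.
record FeedbackDTO(String query, int rating, String comment) {
    FeedbackDTO {
        if (rating < 1 || rating > 5) {
            throw new IllegalArgumentException("rating must be between 1 and 5");
        }
    }
}

// Hypothetical aggregation against the ">4/5" satisfaction target.
final class FeedbackStats {
    private FeedbackStats() {}

    static double averageRating(List<FeedbackDTO> feedback) {
        return feedback.stream().mapToInt(FeedbackDTO::rating).average().orElse(0);
    }

    static boolean meetsTarget(List<FeedbackDTO> feedback) {
        return averageRating(feedback) > 4.0;
    }
}
```

Storing the `query` alongside the rating also feeds the planned analytics dashboard, since low-rated queries point at gaps in the scraped documentation.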

Project Reference: The complete implementation is available on GitHub in the module-ai package. Contributions and feedback are welcome!
