How to Scrape Google Scholar Results

Google Scholar is an important tool that academic researchers around the world use to find and obtain literature, covering papers, monographs, and conference papers in many fields. However, its strict anti-crawler mechanisms make it hard to crawl Google Scholar data directly, especially for users who need large-scale data collection.

In this article, we introduce two methods for crawling Google Scholar data: manual crawling (for example with Scrapy or Selenium) and the Scrapeless API. Manual crawling suits small-scale data collection, but it may run into IP restrictions and CAPTCHAs. The Scrapeless API provides a more stable and efficient solution, especially for large-scale crawling, with no need to maintain your own anti-detection strategies.

By comparing the advantages and disadvantages of the two methods, this article will help you choose the approach that best fits your data collection needs.

## Why Scrape Google Scholar?

Google Scholar provides valuable academic resources, including research papers, citations, author profiles, and more. By scraping Google Scholar, you can:

- Collect research papers on a specific topic.

- Extract citation counts for academic impact analysis.

- Retrieve author profiles and their published work.

- Automate literature reviews for research purposes.

## Challenges in Scraping Google Scholar

Scraping Google Scholar comes with challenges such as:

- CAPTCHAs: Frequent requests can trigger Google's anti-bot protection.

- IP Blocking: Google can block IPs making repeated automated requests.

- Dynamic Content: Some results may be dynamically loaded via JavaScript.

To overcome these challenges, we recommend using a dedicated API such as Scrapeless API.
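If you do send requests yourself, it helps to recognize a blocked response and slow down before retrying. The sketch below is a heuristic under stated assumptions: the status codes and phrases are illustrative guesses at what an anti-bot page might look like, not an official list, and the names `looks_blocked` and `backoff_delay` are hypothetical helpers introduced here.

```python
import random

# Assumption: illustrative markers of an anti-bot response, not an official list.
BLOCK_STATUS_CODES = {403, 429, 503}
BLOCK_PHRASES = ("unusual traffic", "captcha")


def looks_blocked(status_code: int, body: str) -> bool:
    """Return True if the response resembles a CAPTCHA or block page."""
    if status_code in BLOCK_STATUS_CODES:
        return True
    lowered = body.lower()
    return any(phrase in lowered for phrase in BLOCK_PHRASES)


def backoff_delay(attempt: int, base: float = 5.0, cap: float = 300.0) -> float:
    """Exponential backoff: base * 2**attempt, capped, plus up to 10% jitter."""
    delay = min(cap, base * (2 ** attempt))
    return delay + random.uniform(0, delay * 0.1)
```

A caller could check `looks_blocked(response.status_code, response.text)` after each request and sleep for `backoff_delay(attempt)` seconds before the next try. This only reduces the request rate; it does not solve CAPTCHAs or lift an IP block.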

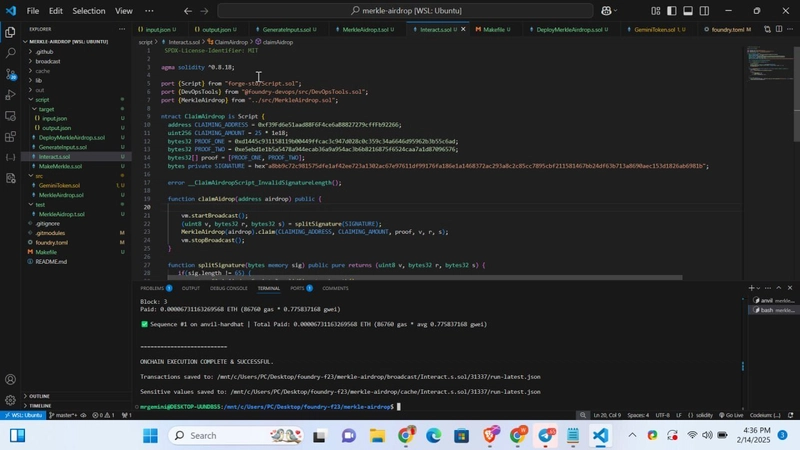

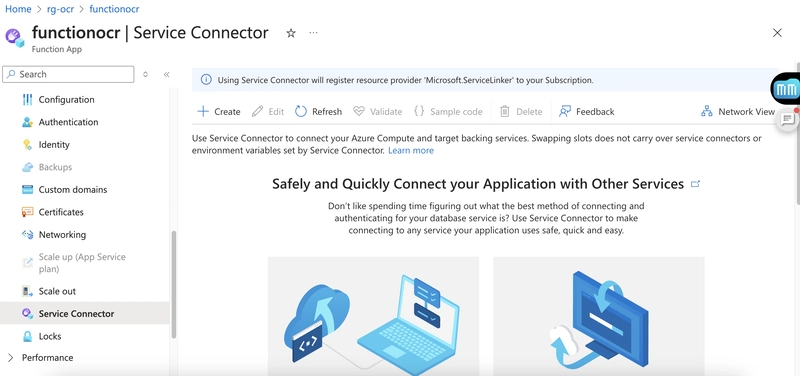

## Method 1: How to Scrape Google Scholar - Traditional Web Scraping (Not Recommended)

Scraping Google Scholar directly with Python libraries (e.g., BeautifulSoup, Selenium) is difficult because of Google's restrictions. Example using requests:

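```python
import requests
from bs4 import BeautifulSoup

url = "https://scholar.google.com/scholar?q=machine+learning"
headers = {"User-Agent": "Mozilla/5.0"}

# Fetch the results page; Google may respond with a CAPTCHA or an error instead.
response = requests.get(url, headers=headers, timeout=10)
response.raise_for_status()

soup = BeautifulSoup(response.text, "html.parser")

# Each search result is wrapped in a div with the class "gs_r".
results = soup.find_all("div", class_="gs_r")
for result in results:
    heading = result.find("h3")
    title = heading.get_text(strip=True) if heading else "No Title"
    print(title)
```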
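The loop above only prints titles. For the citation-count use case mentioned earlier, one possible approach is to search each result's text for a "Cited by N" label. This is a minimal sketch that assumes the label appears in that exact English form; the helper name `parse_cited_by` is hypothetical, and the page markup may differ by locale or change over time.

```python
import re
from typing import Optional

# Assumption: the citation label has the English form "Cited by <number>".
CITED_BY_PATTERN = re.compile(r"Cited by\s+(\d+)")


def parse_cited_by(text: str) -> Optional[int]:
    """Return the citation count found in the text, or None if there is none."""
    match = CITED_BY_PATTERN.search(text)
    return int(match.group(1)) if match else None
```

Inside the loop, this could be called as `parse_cited_by(result.get_text(" "))`, treating a `None` return as "no citation count shown" rather than as zero.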
