Hogwild! Parallel LLM: Up to 3.9x Faster Text Generation Without Retraining

This is a Plain English Papers summary of a research paper called Hogwild! Parallel LLM: Up to 3.9x Faster Text Generation Without Retraining. If you like this kind of analysis, you should join AImodels.fyi or follow us on Twitter.

Overview

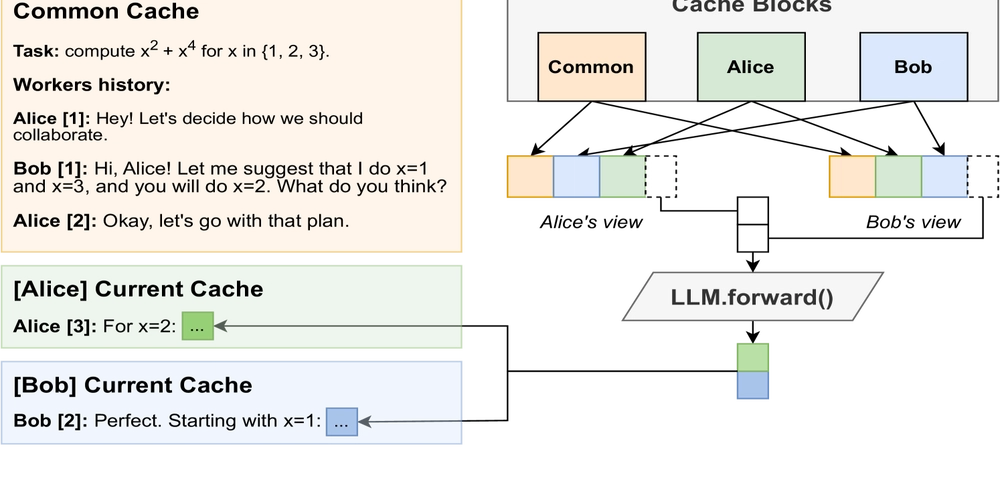

- Hogwild! Inference enables parallel generation of tokens in large language models

- Achieves up to 3.9x speedup over sequential generation

- Uses a concurrent attention mechanism without locks or synchronization

- Maintains output quality comparable to sequential generation

- Works with existing transformer architectures without model retraining

Plain English Explanation

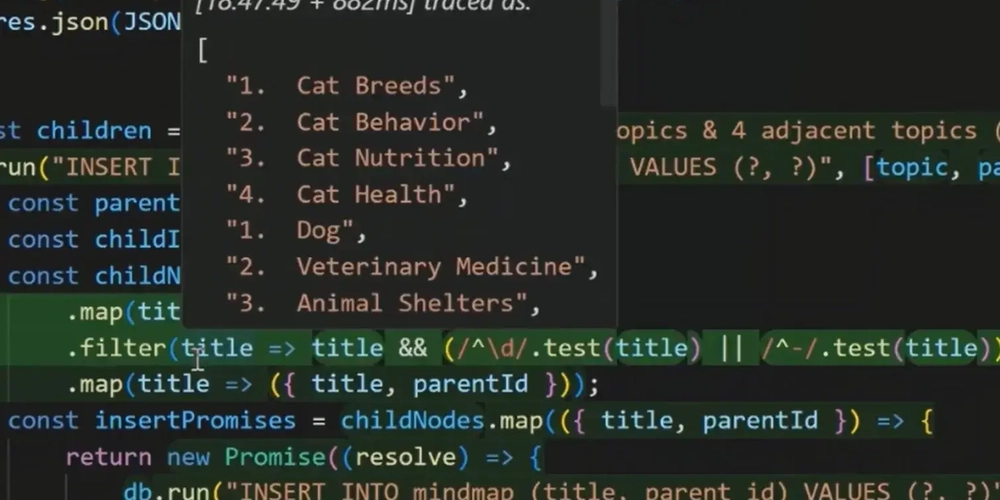

Language models traditionally generate text one token at a time - like writing a sentence word by word. This sequential approach is slow because each new word has to wait for the previous one to be completed.
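To make the sequential bottleneck concrete, here is a minimal sketch of a one-token-at-a-time decoding loop. It is not the paper's code: `toy_next_token` is a hypothetical stand-in for a real model's forward pass, and `generate_sequentially` is an illustrative name. The point is only that each step needs the full output of the step before it.

```python
from typing import Callable, List


def toy_next_token(context: List[int]) -> int:
    """Hypothetical stand-in for a language model forward pass.

    A real model would return the most likely next token id; this toy
    version just computes a number that depends on the whole context.
    """
    return (sum(context) + len(context)) % 50


def generate_sequentially(
    prompt: List[int],
    num_new_tokens: int,
    next_token: Callable[[List[int]], int] = toy_next_token,
) -> List[int]:
    """Generate tokens one at a time, each conditioned on all earlier ones."""
    tokens = list(prompt)  # copy so the caller's prompt is not modified
    for _ in range(num_new_tokens):
        # This call cannot start until the previous iteration has appended
        # its token, which is why the loop is inherently sequential.
        tokens.append(next_token(tokens))
    return tokens


if __name__ == "__main__":
    print(generate_sequentially([3, 1, 4], num_new_tokens=5))
```

Because every iteration reads the tokens produced by all earlier iterations, the loop cannot simply be split across workers as written. That data dependency is the bottleneck the summary describes.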

Hogwild! Inference changes this by letting multiple tokens (words) b...
