Having Trouble with Lovable?

Modern AI website builders promise to turn ideas into apps with breathtaking speed. Tools like Lovable, Bolt.new, Databutton, and Cursor let you describe a product in plain English and get a working web app in minutes. Lovable, for instance, touts itself as a “superhuman full stack engineer” that goes from “idea to app in seconds” and even claims to be “20x faster than coding” (Lovable). It’s a thrilling leap forward — especially for startup founders eager to launch and for non-coders empowered to build software. But amid the excitement, seasoned developers are noticing cracks beneath the glossy surface. Rapid AI-generated development can come with hidden costs: accumulating technical debt, mysterious code that’s hard to debug, and a long-term maintainability headache. In other words, there’s trouble in this new no-code paradise.

The Hidden Costs of AI-Built Code

AI builders undoubtedly accelerate development, but speed without oversight can breed chaos. Industry experts are already sounding alarms that code pumped out by AI assistants often flouts best practices. One recent analysis found that AI-driven coding is leading to skyrocketing duplication and sinking maintainability in codebases (Why AI-generated code is creating a technical debt nightmare | Okoone). In essence, these tools achieve quick results by trading away structural quality. They may get you an MVP fast, but under the hood you’ll often find convoluted logic, inconsistent patterns, and piles of technical debt waiting to be paid down.

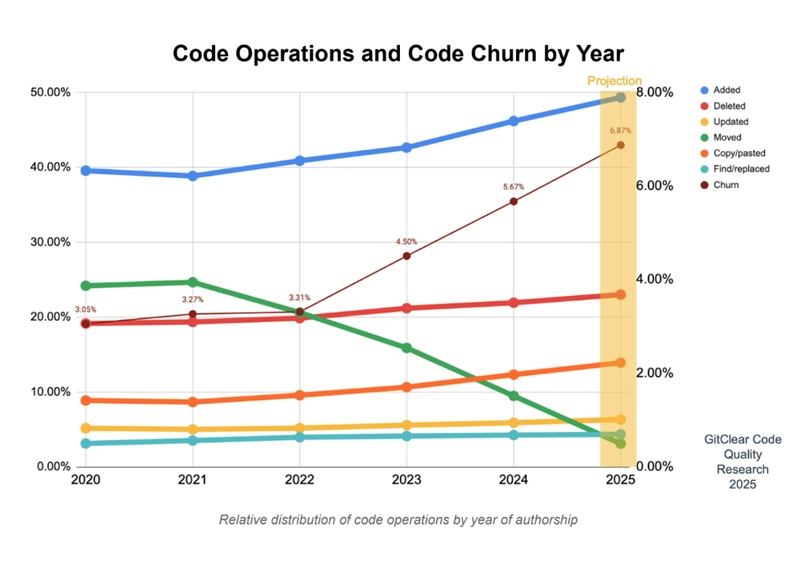

Technical debt — the cost of quick-and-dirty solutions that will need refactoring later — tends to accumulate faster with AI-generated projects. “I don’t think I have ever seen so much technical debt being created in such a short period of time,” remarks technologist Kin Lane regarding the recent proliferation of AI-generated code (How AI generated code compounds technical debt — LeadDev). AI coding assistants code by brute force: they’ll copy-paste chunks of code instead of abstracting or reusing components, violating the Don’t Repeat Yourself principle and bloating the codebase (Why AI-generated code is creating a technical debt nightmare | Okoone). It’s common to see two slightly different functions where a human would have written one and reused it. These duplicate patterns might work fine initially, but down the road they become multiple points of failure and complexity. As one software report noted, all this extra code means developers end up spending more time debugging AI-generated code and patching issues, erasing the initial productivity gains (How AI generated code compounds technical debt — LeadDev).
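To make the duplication problem concrete, here is a minimal TypeScript sketch. The function names and the price-formatting scenario are hypothetical, chosen only for illustration; they are not taken from any of the tools or reports cited above. The point is that two near-identical helpers can drift apart in small ways that only show up for certain inputs.

```typescript
// Hypothetical example: two near-duplicate helpers, as might be produced by
// two separate prompts ("show the cart total", "show the invoice total").
function formatCartTotal(cents: number): string {
  return "$" + (cents / 100).toFixed(2);
}

function formatInvoiceTotal(cents: number): string {
  // Subtly different: no fixed decimals, so 1990 renders as "$19.9".
  return "$" + String(cents / 100);
}

// What a reviewer would typically consolidate them into: one shared helper
// that both the cart and the invoice call.
function formatUsd(cents: number): string {
  return "$" + (cents / 100).toFixed(2);
}

console.log(formatCartTotal(1990));    // $19.90
console.log(formatInvoiceTotal(1990)); // $19.9
console.log(formatUsd(1990));          // $19.90
```

Both original helpers "work" in a demo, but a fix applied to one does not reach the other, which is how duplicated code turns into multiple points of failure.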

GitClear’s research shows an explosion of code being copy-pasted (brown line) in recent years, while code reuse (green line) has sharply declined — a trend attributed to AI-generated code. The result is an epidemic of redundant code that inflates codebases. Seasoned experts warn that they’ve “never seen technical debt pile up this fast,” and fear companies could end up maintaining bloated, unstable code for years if this trend continues (Why AI-generated code is creating a technical debt nightmare | Okoone).

The implications for a startup are serious. Early-stage companies live or die by their ability to iterate quickly and build something sustainable. If your app’s foundation is a tangle of AI-written code, adding new features or scaling usage can become progressively harder. Each “quick fix” is like adding another patch to a leaky ship — eventually you spend more time fixing holes than sailing forward. What began as an “instant” app can slow you down later, as developers must rewrite fragile sections or untangle logic to accommodate growth. The short-term win of shipping fast can turn into a long-term maintenance tax on your team’s productivity.

Citizen Developers and the Maintainability Mirage

A big selling point of tools like Lovable and Databutton is that they empower citizen developers (people with no formal coding background) to build software. This democratization of development is undeniably positive in many ways: it allows domain experts and entrepreneurs to prototype ideas without hiring a full engineering team. However, when an organization leans heavily on non-technical creators for core products, it can inadvertently trade away long-term code health and value.

Citizen developers often aren’t aware of software engineering best practices around architecture, testing, security, and maintainability. They’re focused on making it work now, which is exactly what these AI builders optimize for. The result can be applications that appear feature-complete, but hide a minefield of technical challenges. According to one analysis, while professional developers follow standards that ensure quality and scalability, citizen-built apps can suffer from inconsistent code and lack the resilience needed for the long haul. In fact, without proper training or oversight, non-developers can inadvertently create inefficient, low-quality, and even vulnerable solutions — negating the benefits of accelerated development (7 Reasons Why the Idea of the Citizen Developer Never Materialized).

Consider maintainability: when the original creator (say, a non-coder on your team) moves on to a new project or role, who will understand and update their AI-fabricated code? If no one on the engineering team truly knows how the internals were generated, even a minor update can turn into a major ordeal. Companies have learned that simply having a working app is not the same as having a maintainable, extensible codebase. An application built by a citizen developer might work fine at small scale but buckle under real-world conditions. “Applications developed by citizen developers in low-code platforms can be effective on a small scale. When those same applications attempt to scale up…they can encounter significant performance issues,” one industry piece notes (7 Reasons Why the Idea of the Citizen Developer Never Materialized). Without careful engineering, that prototype which impressed early users may require a near rewrite to become a robust product. This gap between initial success and long-term sustainability is where many startups falter.

There’s also the issue of reduced long-term value. If your company’s core product is built with a proprietary AI tool and mostly understood by non-engineers, it may deter serious investors or acquirers who factor technical due diligence into company valuations. Savvy investors know that a shaky technical foundation can lead to higher costs down the line. Relying purely on no-code builders without involving experienced developers can thus become a liability. The key is finding the right balance: empower domain experts to prototype, but bring in software engineers to solidify and productionize the results. It’s not an either/or choice — it’s about pairing the creative speed of citizen developers with the seasoned eye of professional developers to ensure quality.

Debugging the Black Box: Developers vs AI-Generated Code

For professional developers, diving into an AI-generated codebase can feel like wandering in a labyrinth with no map. The code works — it might even be clever — but nobody truly understands how. When an error or slow-down occurs, the usual comfort of “I wrote this, I know where to look” is replaced by bewilderment. As one engineer wryly noted, if you let AI code “run wild” in your codebase, it can create “an avalanche of code that nobody understands” (AI is Writing Your Code — But Who’s Making Sure It’s Right? | by Dr. Vineeth Veetil @UMich @IIT B | Feb, 2025 | Medium). The same article warned that unfettered AI contributions introduce “debugging nightmares” alongside that pile of inscrutable code. This is the dark side of handing over the keyboard to a generative model — you get volume, not clarity.

AI-generated code can leave even seasoned developers scratching their heads. The algorithms churn out hundreds of lines in a flash, but often with minimal comments, inconsistent style, and odd logic quirks. Teams report that debugging such code can turn into a time-consuming nightmare. Without the AI’s “intent” on hand, deciphering its code is like debugging a junior developer’s project where the junior has already left the company.

Part of the challenge is that these AI systems don’t inherently prioritize code readability or performance unless explicitly instructed. They’re optimized to fulfill the prompt and pass basic tests, not to produce elegant code. It’s not uncommon to find functions that technically work but are far from optimal — maybe an O(n²) loop that a human would spot as a performance issue, or an API call made in a way that slows down response time. Developers attempting to optimize such code must first unravel it. Performance tuning becomes a forensic exercise: why did the AI choose this approach? Is there a hidden dependency or side effect? The lack of human reasoning in the code’s design means there’s little rhyme or reason documented.
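As an illustration of the kind of quadratic loop described above, the sketch below joins orders to user names in two ways. The `User` and `Order` types and both function names are hypothetical; the example only shows the general pattern, not output from any particular AI builder.

```typescript
interface User { id: string; name: string; }
interface Order { id: string; userId: string; }

// Quadratic version: scans the whole user list for every order.
function labelOrdersSlow(orders: Order[], users: User[]): string[] {
  const labels: string[] = [];
  for (const order of orders) {
    for (const user of users) {
      if (user.id === order.userId) {
        labels.push(order.id + ":" + user.name);
        break; // first matching user wins
      }
    }
  }
  return labels;
}

// Linear version: index users by id once, then do one lookup per order.
function labelOrdersFast(orders: Order[], users: User[]): string[] {
  const nameById = new Map<string, string>();
  for (const user of users) {
    // Keep the first user seen for an id, matching the slow version.
    if (!nameById.has(user.id)) nameById.set(user.id, user.name);
  }
  const labels: string[] = [];
  for (const order of orders) {
    const name = nameById.get(order.userId);
    if (name !== undefined) labels.push(order.id + ":" + name);
  }
  return labels;
}
```

The two functions return the same labels, but the first does work proportional to orders times users, while the second does work proportional to orders plus users. On a small demo dataset the difference is invisible, which is why this pattern tends to survive until real traffic arrives.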

Furthermore, AI-generated code can be bloated and inconsistent. An apt analogy is that AI can behave like an overenthusiastic intern — extremely fast, but with no regard for documentation or long-term upkeep. It may churn out a solution in hours that would take a human days, but that solution might ignore the intended architecture or produce a maze of spaghetti code. As one observer put it, AI sometimes works “faster than a senior engineer but [refuses] to leave comments, follow architecture guidelines, or prioritize maintainability.” (AI is Writing Your Code — But Who’s Making Sure It’s Right? | by Dr. Vineeth Veetil @UMich @IIT B | Feb, 2025 | Medium). In other words, it delivers the letter of the request, not the spirit of good software design. Developers stepping in later effectively have to refactor and teach proper coding discipline to this unruly code after the fact — a task that can be more difficult than writing it correctly from scratch.

All of this amounts to friction in the development process. The promise was that AI would save developer time, and it does at the moment of creation. But who saves the time AI costs us later, when we’re poring over its output, trying to make sense of it or bring it up to production standards? Without additional tools or guardrails, rapid AI development can outpace our ability to maintain control and clarity. The distance between code and developer grows, and that’s a risky place to be when you’re responsible for a product’s reliability. If we as developers can’t confidently explain how our system works because “the AI just did it,” we lose a degree of control over our own creations.

When AI Speed Outpaces Clarity

It’s worth reflecting on what happens when development accelerates beyond what traditional processes can handle. We are entering an era where you can ship an application faster than you can thoroughly document or comprehend it. That raises a fundamental question: Are we building software, or is the software building itself with us merely overseeing? The ideal answer is that these AI tools remain just that — tools under developer supervision. But there’s a fine line where the pace and volume of AI-generated output can overwhelm human oversight. When rapid AI development outpaces developer control, the risks manifest as unclear ownership of code, difficulty in knowledge transfer, and unpredictable behavior in edge cases.

The solution is not to shun these powerful new tools, but to adapt our approach to using them. Developers and engineering leads need greater visibility into what the AI is doing and mechanisms to verify the quality of its output. In traditional development, best practices like code review, testing, and refactoring are our safety nets. In AI-generated development, we need equivalent safety nets — possibly AI-assisted code analysis or other checks — to keep quality on track. This is precisely the gap that a new category of tools is beginning to fill.

One such tool is In Spades AI, which emerged to address the very pitfalls we’ve been discussing. In Spades AI was born out of firsthand frustration: its founder was using Lovable to quickly build a web app and was thrilled at how fast the prototype came together, until they tried to gauge its performance and quality. After the AI builder did its magic, the founder looked under the hood and realized they had no clear insight into how efficient or robust the site was. Lovable had generated the code, but it wasn’t telling them if the resulting site was slow, if it followed SEO best practices, or how it fared on Core Web Vitals. In short, there was no Lighthouse in this dense AI-generated forest. Lacking feedback on these issues, the founder saw the need for a tool that could shine a light on an AI-built site’s real-world readiness.

Bridging the Gap with In Spades AI

In Spades AI is designed as a bridge between rapid AI-built development and professional-grade quality assurance. It’s essentially a quality compass for those fast, AI-generated websites. The tool works by performing automated audits (leveraging Google Lighthouse under the hood) on your AI-built site, evaluating aspects like performance, accessibility, SEO, and best practices compliance. The genius of In Spades is that it doesn’t stop at pointing out problems — it becomes an AI co-pilot for fixing them. After analyzing a site, it produces AI-generated prompts and recommendations tailored to the specific platform you used (be it Lovable, Bolt.new, etc.).
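For readers curious what a Lighthouse-style audit result looks like in practice, here is a minimal TypeScript sketch that flags weak categories. It assumes a simplified subset of the Lighthouse JSON report, in which each category has a `title` and a `score` between 0 and 1. The `ReportSubset` type, the `weakCategories` function, and the sample scores are hypothetical, and this is not a description of how In Spades AI is implemented.

```typescript
// Assumed, simplified subset of a Lighthouse JSON report: each category has a
// title and a score between 0 and 1 (null when it could not be scored).
interface CategoryResult {
  title: string;
  score: number | null;
}

interface ReportSubset {
  categories: Record<string, CategoryResult>;
}

// Return "Title: NN" lines for every category scoring below the threshold.
function weakCategories(report: ReportSubset, threshold: number): string[] {
  const lines: string[] = [];
  for (const id of Object.keys(report.categories)) {
    const category = report.categories[id];
    if (category === undefined || category.score === null) continue;
    if (category.score < threshold) {
      lines.push(category.title + ": " + Math.round(category.score * 100));
    }
  }
  return lines;
}

// Made-up sample scores, for illustration only.
const sampleReport: ReportSubset = {
  categories: {
    performance: { title: "Performance", score: 0.62 },
    accessibility: { title: "Accessibility", score: 0.95 },
    seo: { title: "SEO", score: 0.8 },
  },
};

console.log(weakCategories(sampleReport, 0.9)); // [ 'Performance: 62', 'SEO: 80' ]
```

A summary like this is the raw material; the harder part is turning each weak area into an instruction a builder can act on.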

These prompts are written in plain language so that even a non-technical user can take them and plug them back into their website builder. For example, if your images are too large and hurting load time, In Spades might suggest a prompt like: “Optimize all images by reducing resolution for mobile devices and implement lazy loading for off-screen images.” A user of Lovable or Databutton can paste that into their builder’s interface, essentially telling the AI to apply that fix. In Spades acts like a translator between professional web performance know-how and the AI builder’s prompt interface. And if you have a developer team, the tool’s report and suggestions serve as a quick audit that they can use to prioritize optimizations. It’s bridging the knowledge gap by packaging expert recommendations into actionable steps for anyone to follow.
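The idea of translating audit findings into builder prompts can be sketched in a few lines of TypeScript. Everything here is an assumption made for illustration: the `AuditFinding` shape, the 0.9 pass threshold, the audit ids (which resemble Lighthouse audit names but should be checked against the Lighthouse version in use), and the prompt wording other than the image example quoted above. It is not In Spades AI's actual implementation.

```typescript
// Assumed shape of one audit finding from a Lighthouse-style report.
interface AuditFinding {
  id: string;
  score: number | null; // 0..1; null means the audit did not apply
}

// Hypothetical mapping from audit id to a plain-language builder prompt.
const promptByAudit = new Map<string, string>([
  ["offscreen-images", "Implement lazy loading for off-screen images."],
  ["uses-responsive-images", "Optimize all images by reducing resolution for mobile devices."],
  ["meta-description", "Add a concise meta description to every page."],
]);

// Collect prompts for audits that apply, score below the assumed pass mark,
// and have a known prompt.
function promptsFor(findings: AuditFinding[], passMark: number = 0.9): string[] {
  const prompts: string[] = [];
  for (const finding of findings) {
    if (finding.score === null || finding.score >= passMark) continue;
    const prompt = promptByAudit.get(finding.id);
    if (prompt !== undefined) prompts.push(prompt);
  }
  return prompts;
}
```

A fixed lookup table like this is the simplest possible design. A real tool would presumably tailor the wording to the specific builder and the specific page, which a static map cannot do.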

The mission of In Spades AI is to ensure that democratizing development doesn’t equate to accepting lower quality. In their view, “use AI wisely, use In Spades.” It’s about pairing the power of generative AI (which gives you speed and creative freedom) with the power of diagnostic AI (which gives you insight and guidance on quality). Non-technical founders get to have their cake and eat it too: they can build their product with AI in the morning, and by afternoon run an audit and get a list of concrete improvements to make it faster, more SEO-friendly, and more robust. It turns what could have been a black-box deliverable into a transparent, improvable project. Essentially, In Spades AI is offering the safety net that catches the shortcomings of AI builders — before those shortcomings come back to bite you in production or in front of users.

From Frustration to Solution: The Inspiration Behind In Spades AI

The story that sparked In Spades AI perfectly illustrates the current state of AI web development. The founder was an early adopter of Lovable, building a new idea with just prompts and watching it come to life rapidly. It felt like magic — until they started asking questions the platform couldn’t answer. How well does the site perform under load? Are there any obvious SEO issues? Why does the page feel a bit sluggish on mobile? Lovable’s AI had written the code, but it provided no performance dashboard or clear guidance on optimization. Traditional developers would run Lighthouse audits or use debugging tools, but Lovable’s target user (a non-coder) wouldn’t know where to begin with that. The founder realized that many other non-technical builders must be hitting the same wall: you’ve built something cool with AI, but now you need to make sure it’s actually good by web standards.

Rather than manually comb through the generated code — a daunting task for anyone, let alone a non-engineer — the founder envisioned an assistant that could do the hard analysis work. This led to creating In Spades AI: a tool that would give anyone the eyes of a seasoned web developer. It runs the audits, interprets the results, and even drafts the exact instructions you can give back to your AI builder to fix the problems. In essence, In Spades was born to answer the question: “Now that the AI built my site, how do I make it better?” The founder’s bet is that as AI-driven development becomes more widespread, the need for this kind of meta-AI quality control will only grow.

Conclusion: Balancing Speed and Sustainability

AI website builders like Lovable, Bolt.new, Databutton, and Cursor are undeniably powerful tools. They represent a new paradigm where software creation is no longer the exclusive domain of those who can code. This democratization lowers barriers and speeds up innovation — a win for entrepreneurs and developers alike. However, as we’ve explored, speed and convenience can mask accumulating problems if left unchecked. The code still needs to run fast, scale well, and be secure and maintainable months and years down the line. That’s why embracing these AI builders should go hand in hand with adopting tools and practices that ensure quality.

The emergence of solutions like In Spades AI shows a path forward: pair generative AI with analytical AI to get the best of both worlds. Use the first to build, and the second to review and refine. In practice, this means you don’t blindly trust the first draft your AI co-engineer gives you. You test it, audit it, and iteratively improve it — just as you would with human-written code. If something’s slow or shaky, you address it now rather than letting a weak foundation ossify. In Spades AI and similar tools are essentially bringing seasoned developer wisdom into the loop of no-code development, ensuring that what you launch isn’t just lovable today, but solid and scalable for the future.

In the end, the goal isn’t to dissuade anyone from using these AI builders; they truly can be game-changers. Rather, it’s to approach them with eyes wide open. As one early user concluded about Bolt.new, “Tools like these aren’t here to replace us developers. They’re here to handle the repetitive stuff while we focus on the complex problems… powerful, but still needs a skilled developer to make the most of it.” (Testing Bolt.new: A Web Developer’s Review of an AI-Powered App Builder | Medium). In the same vein, a startup founder using Lovable or Databutton should still keep a developer mindset: stay curious about what’s under the hood, leverage quality tools like In Spades AI for oversight, and be ready to intervene when something doesn’t smell right in the code or performance. With the right balance, we can harness the speed of AI-driven development **without** surrendering the reins on quality and maintainability. In a fast-moving tech landscape, that balance will be key to building products that aren’t just impressive demos, but lasting successes.
