Harnessing the Power of Vector Embeddings in Google Apps Script with Vertex AI


Introduction

Vector embeddings represent text as numerical vectors, which enables semantic search, content recommendation, and other natural language processing tasks. This blog post explores how to generate vector embeddings with Google's Vertex AI directly within Google Apps Script.

To skip straight to the code, see https://github.com/jpoehnelt/apps-script/tree/main/projects/vector-embeddings.

What are Vector Embeddings?

Vector embeddings are numerical representations of text (or other data) in a high-dimensional space. Unlike traditional keyword-based approaches, embeddings capture semantic meaning, allowing us to measure similarity between texts based on their actual meaning rather than just matching keywords.

For example, the phrases "I love programming" and "Coding is my passion" would be recognized as similar in an embedding space, despite having no words in common.
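To make the contrast with keyword matching concrete, here is a minimal sketch of a word-overlap (Jaccard) score. The helper names `tokenize_` and `keywordOverlap_` are hypothetical and are not part of the linked repository; they only illustrate what a purely lexical comparison sees.

```javascript
/**
 * Splits text into a set of lowercase words.
 * Hypothetical helper for illustration only.
 * @param {string} text
 * @returns {Set<string>}
 */
function tokenize_(text) {
  return new Set(text.toLowerCase().match(/[a-z0-9']+/g) || []);
}

/**
 * Jaccard overlap between the word sets of two texts:
 * (number of shared words) / (number of distinct words in both).
 * @param {string} a
 * @param {string} b
 * @returns {number} A value between 0 (no shared words) and 1 (same words).
 */
function keywordOverlap_(a, b) {
  const wordsA = tokenize_(a);
  const wordsB = tokenize_(b);
  const shared = [...wordsA].filter((word) => wordsB.has(word)).length;
  const total = new Set([...wordsA, ...wordsB]).size;
  return total === 0 ? 0 : shared / total;
}
```

Calling `keywordOverlap_("I love programming", "Coding is my passion")` returns 0 because the two phrases share no words, so a keyword-based approach treats them as unrelated. An embedding model is expected to place them close together instead.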

Why Use Vertex AI with Apps Script?

Google Apps Script provides a powerful platform for automating tasks within Google Workspace. By combining it with Vertex AI's embedding capabilities, you can:

- Build semantic search functionality in Google Sheets or Docs

- Create content recommendation systems

- Implement intelligent document classification

- Enhance chatbots and virtual assistants

- Perform sentiment analysis and topic modeling

Implementation Guide

Prerequisites

- A Google Cloud project with the Vertex AI API enabled

- A Google Apps Script project

Step 1: Set Up Your Project

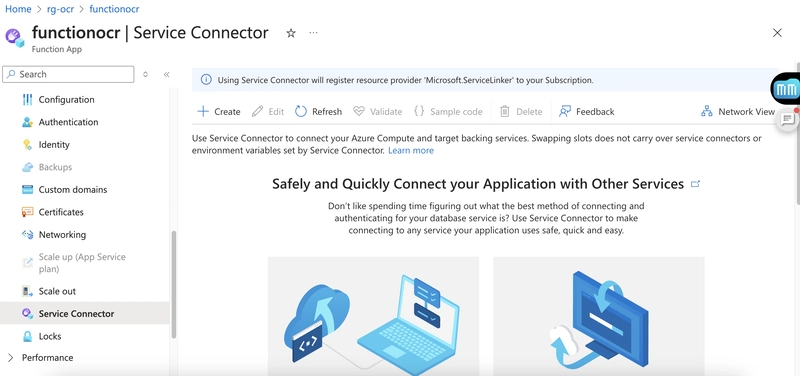

First, set up your Apps Script project and configure it to call the Vertex AI API. Store your Google Cloud project ID in the script properties under the key PROJECT_ID, which the code in Step 2 reads. To do this, open Project Settings in the Apps Script editor and add the property in the "Script Properties" section.
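A missing property is easy to overlook, because `getProperty` returns `null` and the request URL is then built with the literal text "null". The sketch below shows one way to fail early. `getProjectId_` is a hypothetical helper that is not in the original code, and it takes the properties store as an argument so it can be exercised outside Apps Script.

```javascript
/**
 * Reads PROJECT_ID from a properties store and fails loudly if it is missing.
 * Hypothetical helper; in Apps Script, pass
 * PropertiesService.getScriptProperties() as the argument.
 * @param {{getProperty: function(string): (string|null)}} properties
 * @returns {string} The trimmed project ID.
 */
function getProjectId_(properties) {
  const projectId = properties.getProperty("PROJECT_ID");
  if (!projectId || !projectId.trim()) {
    throw new Error(
      'Script property "PROJECT_ID" is not set. ' +
        "Add it under Project Settings > Script Properties."
    );
  }
  return projectId.trim();
}
```

In the script itself this would be called as `getProjectId_(PropertiesService.getScriptProperties())`. The token from `ScriptApp.getOAuthToken()` also needs a scope that covers Vertex AI. Declaring `https://www.googleapis.com/auth/cloud-platform` in the manifest is my assumption here, so verify it against the linked repository.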

Step 2: Generate Embeddings

The core functionality is generating embeddings from text. Here's how to implement it:

    /**
     * Generate embeddings for the given text.
     * @param {string|string[]} text - The text to generate embeddings for.
     * @returns {number[][]} - The generated embeddings.
     */
    function batchedEmbeddings_(
      text,
      { model = "text-embedding-005" } = {}
    ) {
      // Accept a single string as well as an array of strings.
      if (!Array.isArray(text)) {
        text = [text];
      }

      const token = ScriptApp.getOAuthToken();
      const PROJECT_ID = PropertiesService.getScriptProperties().getProperty("PROJECT_ID");
      const REGION = "us-central1";

      // Build one predict request per input text.
      const requests = text.map((content) => ({
        url: `https://${REGION}-aiplatform.googleapis.com/v1/projects/${PROJECT_ID}/locations/${REGION}/publishers/google/models/${model}:predict`,
        method: "post",
        headers: {
          Authorization: `Bearer ${token}`,
          "Content-Type": "application/json",
        },
        // Return error responses instead of throwing, so the body can be reported below.
        muteHttpExceptions: true,
        contentType: "application/json",
        payload: JSON.stringify({
          instances: [{ content }],
          parameters: {
            autoTruncate: true,
          },
        }),
      }));

      // Send all requests in parallel; responses come back in request order.
      const responses = UrlFetchApp.fetchAll(requests);

      const results = responses.map((response) => {
        if (response.getResponseCode() !== 200) {
          throw new Error(response.getContentText());
        }
        return JSON.parse(response.getContentText());
      });

      // Each request carried one instance, so each response has one prediction.
      return results.map((result) => result.predictions[0].embeddings.values);
    }

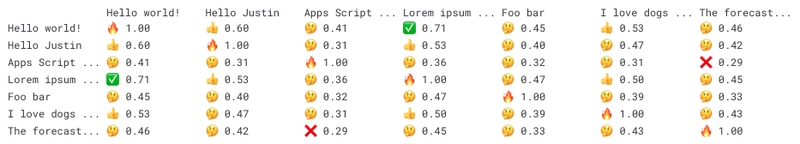
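The function above sends one request per text. Because `instances` is an array, several texts can in principle share one request, which reduces the number of HTTP calls. The sketch below shows the pure helpers such a variant would need. `chunk_`, `buildPayload_`, and `collectEmbeddings_` are hypothetical names that are not in the original code. The per-request instance limit depends on the model, so treat any chunk size as an assumption to check against the Vertex AI documentation.

```javascript
/**
 * Splits an array into consecutive chunks of at most `size` items.
 * @param {Array} items
 * @param {number} size - Assumed per-request instance limit (verify in the docs).
 * @returns {Array[]}
 */
function chunk_(items, size) {
  if (!Number.isInteger(size) || size < 1) {
    throw new Error("size must be a positive integer");
  }
  const chunks = [];
  for (let i = 0; i < items.length; i += size) {
    chunks.push(items.slice(i, i + size));
  }
  return chunks;
}

/**
 * Builds a predict payload carrying several texts as separate instances.
 * Mirrors the payload shape used in batchedEmbeddings_.
 * @param {string[]} texts
 * @returns {string} JSON request body.
 */
function buildPayload_(texts) {
  return JSON.stringify({
    instances: texts.map((content) => ({ content })),
    parameters: { autoTruncate: true },
  });
}

/**
 * Flattens parsed responses into one embedding per input text,
 * assuming predictions are returned in instance order.
 * @param {Object[]} results - Parsed predict responses.
 * @returns {number[][]}
 */
function collectEmbeddings_(results) {
  return results.flatMap((result) =>
    result.predictions.map((prediction) => prediction.embeddings.values)
  );
}
```

With these helpers, the request list becomes `chunk_(text, size).map(...)` with `payload: buildPayload_(texts)`, and the final line becomes `collectEmbeddings_(results)`. One request per text is simpler and keeps each failure isolated to a single input, which may be why the original takes that route.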

Step 3: Calculate Similarity Between Embeddings

Once you have embeddings, you'll want to compare them to find similar content. Cosine similarity is a common metric for this purpose. It is the cosine of the angle between two vectors, computed as their dot product divided by the product of their magnitudes: cosine similarity = dot product / (magnitude of x * magnitude of y). Values range from -1 (the vectors point in opposite directions) through 0 (the vectors are orthogonal, meaning unrelated) to 1 (the vectors point in the same direction, meaning most similar).

    /**
     * Calculates the cosine similarity between two vectors.
     * @param {number[]} x - The first vector.
     * @param {number[]} y - The second vector.
     * @returns {number} The cosine similarity value between -1 and 1.
     */
    function similarity_(x, y) {
      return dotProduct_(x, y) / (magnitude_(x) * magnitude_(y));
    }

    /** Sums the element-wise products over the shared length of x and y. */
    function dotProduct_(x, y) {
      let result = 0;
      for (let i = 0, l = Math.min(x.length, y.length); i < l; i += 1) {
        result += x[i] * y[i];
      }
      return result;
    }

    /** Returns the Euclidean length of x (square root of the sum of squares). */
    function magnitude_(x) {
      let result = 0;
      for (let i = 0, l = x.length; i < l; i += 1) {
        result += x[i] ** 2;
      }
      return Math.sqrt(result);
    }

I tested out the code with a small corpus of texts and it worked well.
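A test like this usually ends with ranking the corpus against a query. The sketch below shows one way to do that. `rankBySimilarity_` is a hypothetical helper that is not in the original code, and it takes the similarity function as a parameter so that `similarity_` from Step 3 can be passed in unchanged.

```javascript
/**
 * Ranks items by similarity to a query embedding, highest score first.
 * Hypothetical helper for illustration.
 * @param {number[]} queryEmbedding
 * @param {{text: string, embedding: number[]}[]} items
 * @param {function(number[], number[]): number} similarityFn - e.g. similarity_
 * @param {number} [topK=3] - Maximum number of results to return.
 * @returns {{text: string, score: number}[]}
 */
function rankBySimilarity_(queryEmbedding, items, similarityFn, topK = 3) {
  return items
    .map((item) => ({
      text: item.text,
      score: similarityFn(queryEmbedding, item.embedding),
    }))
    .sort((a, b) => b.score - a.score)
    .slice(0, topK);
}

// Usage inside Apps Script (placeholder texts, not a recorded run):
//   const corpus = ["first text", "second text"];
//   const embeddings = batchedEmbeddings_(corpus);
//   const items = corpus.map((text, i) => ({ text, embedding: embeddings[i] }));
//   const [query] = batchedEmbeddings_("search phrase");
//   const results = rankBySimilarity_(query, items, similarity_);
```

Sorting the whole corpus is fine for a small test set. For larger collections you would likely want to compute and store the embeddings once rather than on every search.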
