Hands-On WrenAI Review: Text-to-SQL Powered by RAG

WrenAI is a text-to-SQL solution that I've been following for a while. I recently had some time to try it out, so let me share my experience.

First of all, following the installation guide in the official documentation is generally enough to deploy WrenAI on a local machine. For integration with a local Ollama instance, there are sample configuration files to start from.

However, a few details need adjusting that the documentation does not mention. For example, if we use ollama/nomic-embed-text, the embedder named in the documentation, we need to change embedding_model_dim in the configuration file from 3072 to 768, because nomic-embed-text produces 768-dimensional vectors. This detail is easy to overlook.
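A mismatch like this can be caught before starting the service. The snippet below is a minimal sketch of such a check, not part of WrenAI: the function name `check_embedding_dim` and the lookup table are my own, and the only facts it relies on are that nomic-embed-text outputs 768 dimensions and OpenAI's text-embedding-3-large outputs 3072.

```python
# Output dimensions of two embedding models. These are properties of the
# models themselves, not something read from WrenAI.
KNOWN_DIMS = {
    "nomic-embed-text": 768,
    "text-embedding-3-large": 3072,
}


def check_embedding_dim(model: str, configured_dim: int) -> None:
    """Raise ValueError if configured_dim does not match a known model.

    Hypothetical helper: `model` may carry a provider prefix ("ollama/")
    or a tag suffix (":latest"), both of which are ignored.
    """
    name = model.split("/")[-1].split(":")[0]
    expected = KNOWN_DIMS.get(name)
    if expected is None:
        return  # unknown model, nothing to compare against
    if configured_dim != expected:
        raise ValueError(
            f"{model} produces {expected}-dimensional vectors, "
            f"but embedding_model_dim is set to {configured_dim}"
        )


# The corrected setting passes silently.
check_embedding_dim("ollama/nomic-embed-text", 768)

# The value left over from the sample config is rejected.
try:
    check_embedding_dim("ollama/nomic-embed-text", 3072)
except ValueError as err:
    print(err)
```

The values passed in would come from wherever you keep the embedder name and embedding_model_dim in your own configuration.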

With the appropriate settings in place, WrenAI works fine.

By the way, I am using MySQL and the official MySQL test dataset.

WrenAI Advantages

In addition to schema-based chat Q&A, WrenAI has another awesome feature.

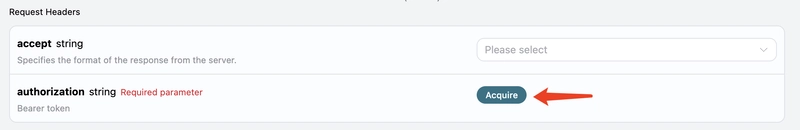

It provides the flexibility to customize prompts: on WrenAI's Knowledge page, we can enter pre-designed questions together with their corresponding SQL answers. We can also enter additional instructions to give the AI extra “parameters”.

After taking WrenAI's prompt apart, I realized that these Knowledge entries play a very important role in shaping the final SQL. This customization offers a reliable way to tune the generated queries when integrating a data source billed by usage, such as BigQuery.
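To illustrate why such entries carry so much weight, here is a minimal sketch of how question-SQL pairs and instructions could be folded into a text-to-SQL prompt. This is not WrenAI's actual prompt template; `build_prompt`, the section markers, and the `orders` table are all hypothetical and only show the general idea.

```python
def build_prompt(
    question: str,
    schema_ddl: str,
    sql_pairs: list[tuple[str, str]],
    instructions: list[str],
) -> str:
    """Assemble a text-to-SQL prompt from schema, instructions and examples."""
    parts = ["### Schema", schema_ddl.strip()]
    if instructions:
        # Free-form rules the model should follow for every query.
        parts.append("### Instructions")
        parts.extend(f"- {rule}" for rule in instructions)
    if sql_pairs:
        # Curated question/SQL pairs act as few-shot examples.
        parts.append("### Examples")
        for example_question, example_sql in sql_pairs:
            parts.append(f"Q: {example_question}\nSQL: {example_sql}")
    parts.append(f"### Question\n{question}\nSQL:")
    return "\n".join(parts)


prompt = build_prompt(
    question="How many orders were placed last month?",
    schema_ddl="CREATE TABLE orders (id INT, created_at DATE, amount DECIMAL(10,2));",
    sql_pairs=[("How many orders are there?", "SELECT COUNT(*) FROM orders;")],
    instructions=["Always filter on created_at to limit the scanned data."],
)
print(prompt)
```

Because the examples and instructions sit right next to the question, the model tends to imitate their style, which is why a well-chosen pair can steer the generated SQL.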

WrenAI Disadvantages

This is a fairly new project (0.19.2 at the moment), so there are bound to be some bugs.

I've encountered two problems that I find quite annoying.

First, when I first opened the home page, WrenAI tried to provide some recommended questions based on the data model so that users can get started quickly. However, scanning the full model like this consumes a lot of computing power, and if the LLM is not strong enough, we basically get no result.

It doesn't matter much if we can't get the recommended questions, but WrenAI's error handling is not well designed: it falls back to a lot of unimportant built-in questions, and these do not follow the project's language setting. I've filed a GitHub issue about this.

Secondly, even though the source has few data models and few columns, the llama3.1:8b model still hallucinates in a certain percentage of answers.

Since I'm a data engineer, reading SQL is easy for me, so I can spot the problems quickly. WrenAI also provides a good mechanism for correcting the original answer, so I haven't run into too many obstacles.

However, I am worried that people who are not familiar with the dataset and with SQL may get unpleasant surprises if they use it directly.

Lastly, and this is both a strength and a weakness, WrenAI generates SQL with a RAG implementation, which needs a strong model behind it. With llama3.1:8b running on my MacBook Pro M2, a simple question (joining a few tables) takes more than 5 minutes, to say nothing of complicated ones, and internal server errors are common.
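For readers unfamiliar with RAG, the retrieval half of such a pipeline can be sketched in a few lines. This is a toy illustration, not WrenAI's code: the bag-of-words `embed` function stands in for a real embedding model, and the table names and descriptions are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy bag-of-words vector; a real setup would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, table_docs: dict[str, str], k: int = 2) -> list[str]:
    """Return the names of the k tables whose description best matches the question."""
    q = embed(question)
    ranked = sorted(
        table_docs,
        key=lambda name: cosine(q, embed(table_docs[name])),
        reverse=True,
    )
    return ranked[:k]


# Hypothetical schema descriptions, one document per table.
table_docs = {
    "employees": "employees emp_no first_name last_name hire_date",
    "salaries": "salaries emp_no salary from_date to_date",
    "departments": "departments dept_no dept_name",
}

relevant = retrieve("What is the salary of employees?", table_docs)
print(relevant)
```

Only the retrieved table descriptions are then placed in the prompt alongside the question. The retrieval itself is cheap; the slow part on a small local model is the generation step that has to read all of that context and write valid SQL.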

I won't go into the details of some minor Web UI issues and design flaws in the interaction with the backend.

Wrap Up

WrenAI uses RAG as the foundation for a pretty good text-to-SQL solution, and because it is based on RAG, there is a lot of flexibility to customize the prompt.

However, also because it is based on RAG, its demands on computing power and model capability are high, and its results with small models need to improve.

I will do more experiments with more powerful models and expect to get better results. Overall, WrenAI is a product worth trying.
