Getting structured output from OpenAI with AWS Lambda


Photo by Hannes Richter on Unsplash

You can ensure that an LLM returns a specific JSON shape. This way, your output is type-safe and can be consumed by other systems.

Goal

Let's say I have a small service that creates screenplays for YouTube videos. I need to ensure that every screenplay has a consistent shape. I want to generate PDFs based on LLM output, and I need it to fit a specific template.

Problem

LLMs, by their nature, are not great at following a specific schema, as they generate responses token by token. Still, different AI providers offer different ways to force an exact response shape.

In this blog post, I use the OpenAI API, which accepts the response_format property when calling newer models.

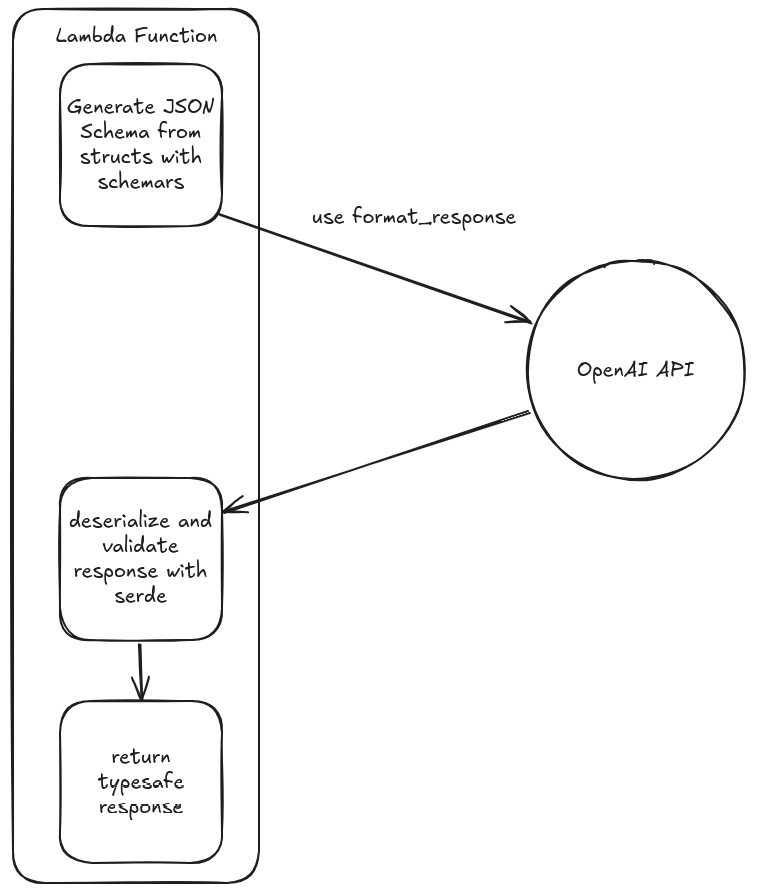

Architecture

I am building the lambda function in Rust.

The code is available IN THIS REPO

For interaction with OpenAI API, I use rig.rs, which is a lightweight yet powerful tool for building agentic workflows in Rust. I use only basic features to parse requests and responses to OpenAI API easily.

Creating a JSON Schema directly from structs is covered by the wonderful schemars crate.

Deserialization is handled with serde and serde_json.

Lambda - initial draft

Service

For now, I don't use models at all. I create a simple service to call OpenAI with a passed prompt without any additional settings.

    // screenplay/src/screenplay/service.rs
    use rig::{agent::Agent, completion::Prompt, providers::{self, openai::CompletionModel}};

    pub struct ScreenplayService {
        agent: Agent<CompletionModel>,
    }

    impl ScreenplayService {
        pub fn new() -> Self {
            // Reads OPENAI_API_KEY from the environment
            let client = providers::openai::Client::from_env();
            let agent = client
                .agent("gpt-4o")
                .build();

            Self { agent }
        }

        pub async fn generate_screenplay(&self, prompt: &str) -> String {
            // Panics if the call to OpenAI fails
            let response = self.agent
                .prompt(prompt)
                .await
                .unwrap();

            response
        }
    }

Handler

In the root folder, I run cargo lambda new and choose the option for the HTTP handler. The current template contains two files, main and handler, but I usually merge them into a single file. Cargo lambda doesn't update the workspace automatically, so the Cargo.toml in the root folder needs to be updated manually.

The handler is straightforward - I create a screenplay service and use it in the lambda handler.

    // screenplay_handler/src/main.rs
    use lambda_http::{run, service_fn, tracing, Body, Error, Request, RequestExt, Response};
    use screenplay::screenplay::service::ScreenplayService;

    #[tokio::main]
    async fn main() -> Result<(), Error> {
        tracing::init_default_subscriber();

        // Built once and reused by every invocation
        let agent = ScreenplayService::new();

        run(service_fn(|ev| {
            function_handler(&agent, ev)
        })).await
    }

    pub(crate) async fn function_handler(service: &ScreenplayService, event: Request) -> Result<Response<Body>, Error> {
        // Read the `prompt` query string parameter; fail if it is missing
        let prompt = event
            .query_string_parameters_ref()
            .and_then(|params| params.first("prompt"))
            .ok_or("expected prompt parameter")?;

        let response = service
            .generate_screenplay(prompt)
            .await;

        let resp = Response::builder()
            .status(200)
            .header("content-type", "text/html")
            .body(response.into())
            .map_err(Box::new)?;

        Ok(resp)
    }

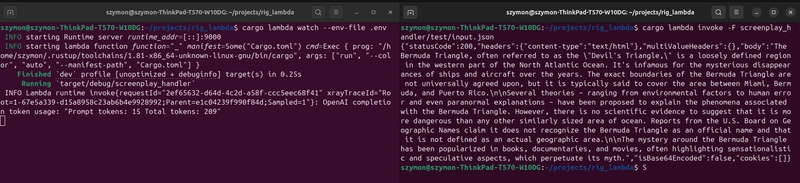

With cargo lambda, it is easy to run the lambda locally and check that everything is wired up properly. I also create a .env file with my OPENAI_API_KEY.

For the test input, I take the template from the AWS Lambda events repo and update rawQueryString:

    "rawQueryString": "prompt=bermuda%20triangle",
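To make it clear what the handler ends up with for this test input, here is a minimal, standard-library-only sketch of pulling the prompt out of a raw query string. In the real handler lambda_http does this work through query_string_parameters_ref; the helper names percent_decode and prompt_from_query are hypothetical and exist only for illustration.

```rust
/// Decode a percent-encoded query component ("%20" and "+" both become a space).
/// Returns None for malformed escapes or invalid UTF-8.
fn percent_decode(input: &str) -> Option<String> {
    let bytes = input.as_bytes();
    let mut out = Vec::with_capacity(bytes.len());
    let mut i = 0;
    while i < bytes.len() {
        match bytes[i] {
            b'%' => {
                // An escape is '%' followed by exactly two hex digits
                let hex = input.get(i + 1..i + 3)?;
                if !hex.bytes().all(|b| b.is_ascii_hexdigit()) {
                    return None;
                }
                out.push(u8::from_str_radix(hex, 16).ok()?);
                i += 3;
            }
            b'+' => {
                out.push(b' ');
                i += 1;
            }
            other => {
                out.push(other);
                i += 1;
            }
        }
    }
    String::from_utf8(out).ok()
}

/// Hypothetical helper: find the first `prompt` parameter in a raw query string.
fn prompt_from_query(raw_query: &str) -> Option<String> {
    raw_query
        .split('&')
        .filter_map(|pair| pair.split_once('='))
        .find(|(key, _)| *key == "prompt")
        .and_then(|(_, value)| percent_decode(value))
}
```

With the test event above, prompt_from_query("prompt=bermuda%20triangle") yields "bermuda triangle", which is the string passed to generate_screenplay. A query string without a prompt parameter yields None, the case in which the handler returns an error.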

I use two terminals. In the first, I run cargo lambda watch --env-file .env. Once it is running, I invoke my lambda from the second terminal:

    cargo lambda invoke -F screenplay_handler/test/input.json

The response is generic, as expected.

Context and response schema

Now, the fun part.

The OpenAI API allows adding the response_format parameter to the call. It is not common across AI provider APIs, so rig.rs doesn't expose it as a built-in method, but it is easy to attach extra parameters to the call with additional_params.

The shape of the request is described in the OpenAI documentation. Long story short, we are expected to pass a JSON schema.

Here is a simple helper function that creates the expected JSON value for rig.rs:

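    // screenplay/src/screenplay/utils.rs
    // `schemars::Schema` is the schema type in schemars 1.x
    use schemars::Schema;
    use serde_json::{json, Value};

    /// Wraps a JSON Schema in the `response_format` object expected by OpenAI.
    pub fn create_response_format(schema: &Schema, schema_name: &str) -> Value {
        json!({
            "response_format": {
                "type": "json_schema",
                "json_schema": {
                    "name": schema_name,
                    "strict": true,
                    "schema": schema
                }
            }
        })
    }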

I want to create the JSON Schema directly from my domain models to make sure that the whole integration is type-safe. I use the schemars crate to derive a schema from my structs:

    // screenplay/src/screenplay/models.rs
    use schemars::JsonSchema;
    use serde::{Deserialize, Serialize};

    #[derive(Serialize, Deserialize, JsonSchema)]
    pub struct Screenplay {
        pub title: String,
        pub scenes: Vec<Scene>,
    }

    #[derive(Serialize, Deserialize, JsonSchema)]
    pub enum Scene {
        Studio {
            location: String,
            time: String,
            description: Option<String>,
            dialogue: Vec<Dialogue>,
        },
        CutTo {
            cut_to: String,
            voiceover: Voiceover,
        },
        FadeOut {
            fade_out: bool,
            screen_text: Option<String>,
            music: Option<String>,
        }
    }

    #[derive(Serialize, Deserialize, JsonSchema)]
    pub struct Dialogue {
        pub character: String,
        pub tone: Option<String>,
        pub lines: String,
    }

    #[derive(Serialize, Deserialize, JsonSchema)]
    pub struct Voiceover {
        pub character: String,
        pub lines: String,
    }

Great, now I update the ScreenplayService:

    // screenplay/src/screenplay/service.rs
    use rig::{agent::Agent, completion::Prompt, providers::{self, openai::CompletionModel}};
    use schemars::schema_for;

    use super::{models::Screenplay, utils::create_response_format};

    static PREAMBLE: &str = r#"
    You are a screenplay writer for YouTube videos. You will be given a prompt, and you will write a screenplay based on the prompt.
    Make it engaging and non-trivial. Try to find interesting facts and characters to write about.
    "#;

    pub struct ScreenplayService {
        agent: Agent<CompletionModel>,
    }

    impl ScreenplayService {
        pub fn new() -> Self {
            let client = providers::openai::Client::from_env();

            // Derive the JSON Schema from the domain model and wrap it in response_format
            let schema = schema_for!(Screenplay);
            let response_format = create_response_format(&schema, "screenplay_schema");

            let agent = client
                .agent("gpt-4o")
                .preamble(PREAMBLE)
                .additional_params(response_format)
                .build();

            Self { agent }
        }

        pub async fn generate_screenplay(&self, prompt: &str) -> String {
            let response = self.agent
                .prompt(prompt)
                .await
                .unwrap();

            response
        }
    }

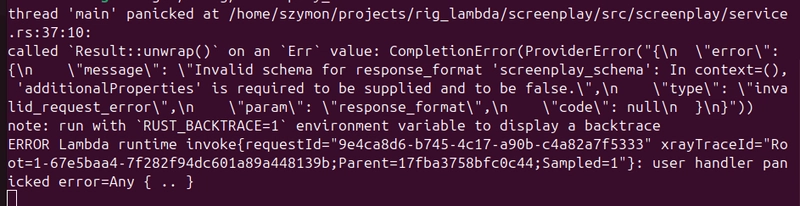

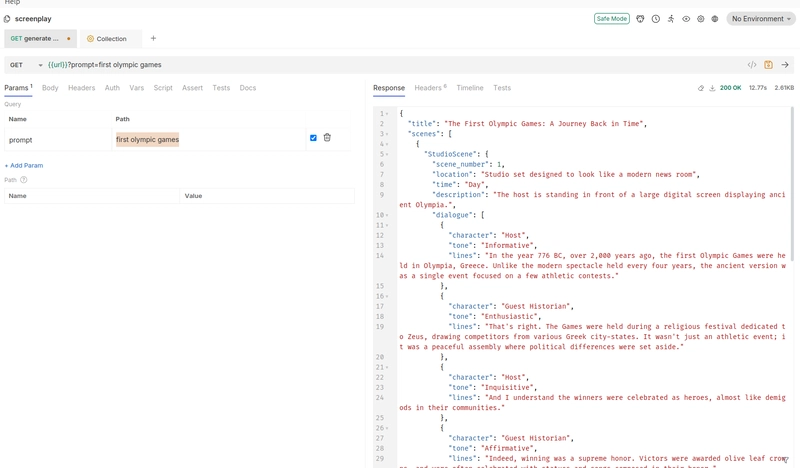

I send another request and see the following output:

Schema tweaks

The thing is that the OpenAI API places some restrictions on the schema you can use in the request. From the error above, we see that additionalProperties needs to be present in the schema and set to false. All restrictions are described in the documentation.

From my perspective, the biggest restriction is that the oneOf keyword is not allowed. In Rust, it is common to use enums when modeling a domain, but the schemas generated for them won't work with the OpenAI API.

I would like to return a response similar to this

    #[derive(Serialize, Deserialize)]
    pub struct ScreenplayResponse {
        pub title: String,
        pub scenes: Vec<Scene>,
    }

    #[derive(Serialize, Deserialize)]
    pub enum Scene {
        StudioScene(StudioScene),
        CutToScene(CutToScene),
        FadeOutScene(FadeOutScene),
    }

    // ....

I can't create a JSON schema directly from this struct. My workaround is to use a different object as the response_format for the model and map it to my expected shape later.

To avoid the oneOf limitation, I created a struct without enums.

I use the newest alpha version of the schemars 1.x library because I wanted to play with the functionality it offers.

    // add_additional_properties and sanitize_number are schema transforms
    // that are not shown in this post
    #[derive(Serialize, Deserialize, JsonSchema)]
    #[schemars(transform = add_additional_properties)]
    pub struct Screenplay {
        pub title: String,
        pub studio_scenes: Vec<StudioScene>,
        pub cut_to_scenes: Vec<CutToScene>,
        pub fade_out_scenes: Vec<FadeOutScene>,
    }

    #[derive(Serialize, Deserialize, JsonSchema)]
    #[schemars(transform = add_additional_properties)]
    pub struct StudioScene {
        #[schemars(transform = sanitize_number)]
        pub scene_number: i32,
        pub location: String,
        pub time: String,
        pub description: String,
        pub dialogue: Vec<Dialogue>,
    }

    // ...

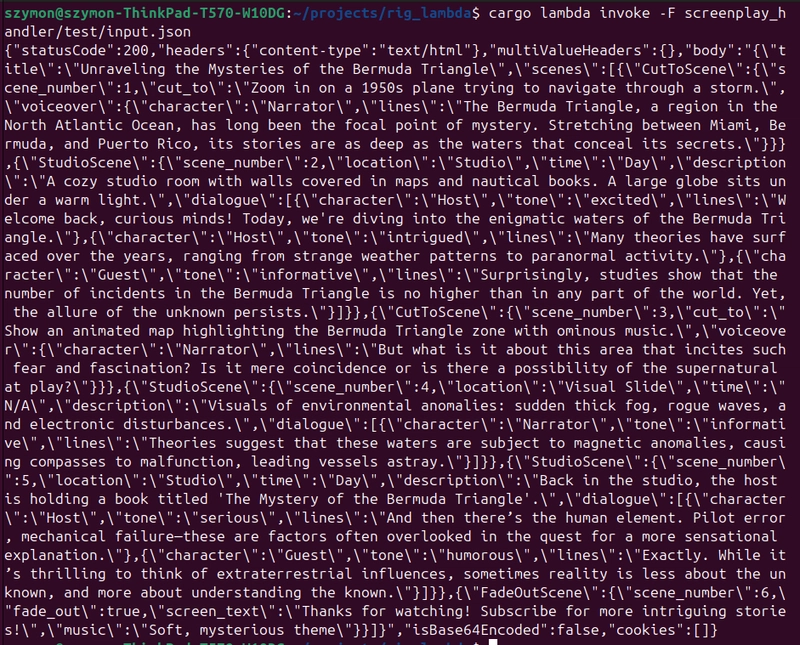

My hope is that I still receive all the scenes and can sort them later on. Let's try.

Ok, quite decent result!
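The "sort it later" step could look roughly like the sketch below. It assumes that every scene struct carries a scene_number (the post only shows it on StudioScene), trims the structs down to a couple of placeholder fields, and drops the serde and schemars derives so that it compiles with the standard library alone. ScreenplayResponse and Scene follow the target shape shown earlier; the From implementation is my own illustration, not necessarily how the repo does it.

```rust
// Flat shape requested from the model (fields trimmed for brevity).
// Assumption: all three scene kinds carry a scene_number.
#[derive(Debug, Clone, PartialEq)]
struct StudioScene { scene_number: i32, location: String }

#[derive(Debug, Clone, PartialEq)]
struct CutToScene { scene_number: i32, cut_to: String }

#[derive(Debug, Clone, PartialEq)]
struct FadeOutScene { scene_number: i32, screen_text: String }

struct Screenplay {
    title: String,
    studio_scenes: Vec<StudioScene>,
    cut_to_scenes: Vec<CutToScene>,
    fade_out_scenes: Vec<FadeOutScene>,
}

// Target shape: one ordered list of enum variants.
#[derive(Debug, Clone, PartialEq)]
enum Scene {
    StudioScene(StudioScene),
    CutToScene(CutToScene),
    FadeOutScene(FadeOutScene),
}

impl Scene {
    fn number(&self) -> i32 {
        match self {
            Scene::StudioScene(s) => s.scene_number,
            Scene::CutToScene(s) => s.scene_number,
            Scene::FadeOutScene(s) => s.scene_number,
        }
    }
}

struct ScreenplayResponse {
    title: String,
    scenes: Vec<Scene>,
}

impl From<Screenplay> for ScreenplayResponse {
    fn from(flat: Screenplay) -> Self {
        // Wrap each flat list in its enum variant and merge them
        let mut scenes: Vec<Scene> = flat
            .studio_scenes
            .into_iter()
            .map(Scene::StudioScene)
            .chain(flat.cut_to_scenes.into_iter().map(Scene::CutToScene))
            .chain(flat.fade_out_scenes.into_iter().map(Scene::FadeOutScene))
            .collect();

        // Stable sort: scenes with equal numbers keep their merge order
        scenes.sort_by_key(Scene::number);

        ScreenplayResponse { title: flat.title, scenes }
    }
}
```

Calling ScreenplayResponse::from(flat) on the deserialized model output gives back a single list ordered by scene number. The model is free to emit duplicate or missing numbers, so a production version would probably want to validate the numbering before trusting the order.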

Deployment

Let's run cargo lambda build -r

Oops.

Ok, this is a very common issue. The solution is either not to use openssl at all, or to add openssl as a dependency with the vendored feature.

Sometimes the second option is the only one, when a library you are using doesn't let you opt out of native-tls. Luckily, the current version of rig lets us use the reqwest-rustls feature. After checking the default features in the docs, I see that I also need to disable default features to get rid of the default reqwest setup. Here is the final Cargo.toml for my screenplay library:

    [package]
    name = "screenplay"
    version = "0.1.0"
    edition = "2021"

    [dependencies]
    rig-core = { version = "0.10.0", features = ["reqwest-rustls"], default-features = false }
    schemars = { version = "1.0.0-alpha.17", features = ["schemars_derive"] }
    serde = { version = "1.0.219", features = ["derive"] }
    serde_json = "1.0.140"

Now the build should work.

For a small POC or prototype, cargo lambda deployment works great. I define the timeout and env properties in the handler's Cargo.toml:

    [package]
    name = "screenplay_handler"
    version = "0.1.0"
    edition = "2021"

    [dependencies]
    lambda_http = "0.13.0"
    tokio = { version = "1", features = ["macros"] }
    screenplay = { path = "../screenplay" }
    serde = "1.0.219"
    serde_json = "1.0.140"

    [package.metadata.lambda.deploy]
    timeout = 120
    env_file = ".env"

and run

    cargo lambda deploy --enable-function-url

Now I have a URL to test the function

Testing

I use Bruno to test my function

Looks good!

Summary

In this post, I created a simple Lambda function that calls the OpenAI API and uses response_format to make sure that the result has the expected shape. Rust's rich ecosystem, including serde and schemars, makes it easy to build a type-safe bridge between the LLM's answer and our system.

Having type-safe output from the LLM simplifies the pipeline and makes the result easy to use downstream. It also allows estimating token usage more precisely, as no additional text-formatting steps are needed.
