Fixing the Agent Handoff Problem in LlamaIndex's AgentWorkflow System

LlamaIndex AgentWorkflow, as a brand-new multi-agent orchestration framework, still has some shortcomings. The most significant issue is that after an agent hands off control, the receiving agent fails to continue responding to user requests, causing the workflow to halt.

In today's article, I'll explore several experimental solutions to this problem with you and discuss the root cause behind it: the positional bias issue in LLMs.

I've included all relevant source code at the end of this article. Feel free to read or modify it without needing my permission.

Introduction

My team and I have been experimenting with LlamaIndex AgentWorkflow recently. After some localization adaptations, we hope this framework can eventually run in our production system.

During the adaptation, we encountered many obstacles. I've documented these problem-solving experiences in my article series. You might want to read them first to understand the full context.

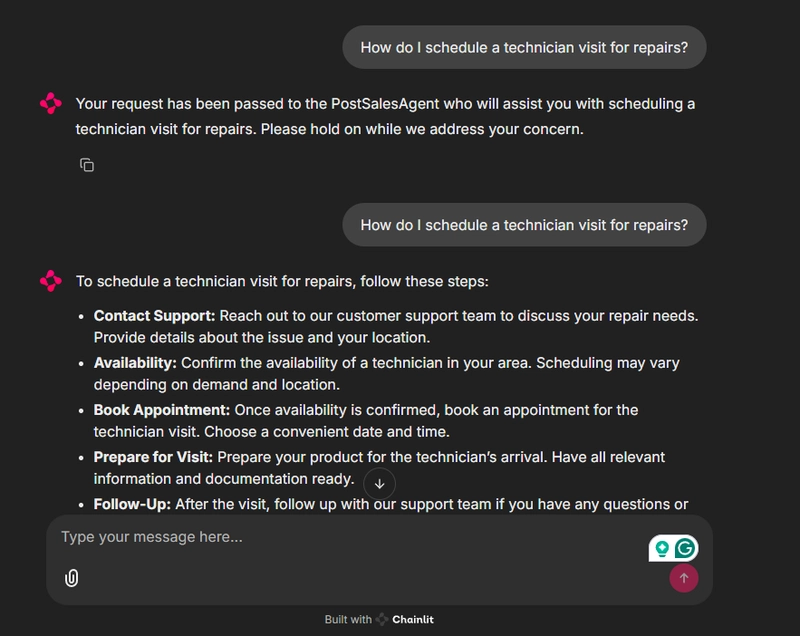

Today, I'll address the issue where after the on-duty agent hands off control to the next agent, the receiving agent fails to continue responding to the user's most recent request.

Here's what happens:

After the handoff, the receiving agent doesn't immediately respond to the user's latest request - the user has to repeat their question.

Why should I care?

In this article, we'll examine this unique phenomenon and attempt to solve it from multiple perspectives, including developer recommendations and our own experience.

Along the way, we'll deliberately read through AgentWorkflow's excellent source code, treating it as a conversation with its authors, to better understand the design principles behind Agentic AI.

We'll also touch upon LLM position bias for the first time, understanding how position bias in chat history affects LLM responses.

These insights aren't limited to LlamaIndex - they'll help us handle similar situations when working with other multi-agent orchestration frameworks.

Let's go.

The Developer-Recommended Solution

First, let's see what the developers say

Before we begin, if you need background on LlamaIndex AgentWorkflow, feel free to read my previous article:

Diving into LlamaIndex AgentWorkflow: A Nearly Perfect Multi-Agent Orchestration Solution

In short, LlamaIndex AgentWorkflow builds upon the excellent LlamaIndex Workflow framework, encapsulating agent function calling, handoff, and other cutting-edge Agentic AI developments. It lets you focus solely on your agent's business logic.

In my previous article, I first mentioned the issue where agents fail to continue processing user requests after handoff.

Others have noticed this too. In this thread, someone referenced my article's solution when asking the developers about it. I'm glad I could help:

I was building a multi-agent workflow, where each agent has multiple tools. I started the...

Developer Logan M proposed including the original user request in the handoff method's output to ensure the receiving agent continues processing.

Unfortunately, as of this writing, LlamaIndex's release version hasn't incorporated this solution yet.

So today's article starts with the developer's response - we'll try rewriting the handoff method implementation ourselves to include the original user request in the handoff output.

First attempt

Since this solution modifies the handoff method implementation, we don't need to rewrite FunctionAgent code. Instead, we'll modify AgentWorkflow's implementation.

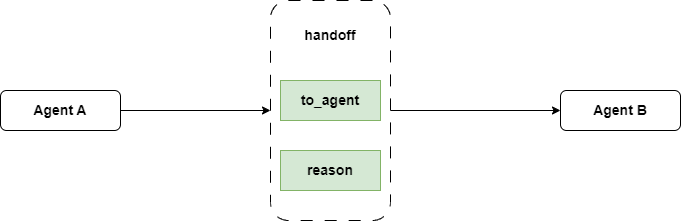

The handoff method is the core of AgentWorkflow's handoff capability. It identifies which agent the LLM wants to hand off to and stores that agent's name in the context under next_agent. During workflow execution, this method is added to the agent's tools and is invoked through function calling when the LLM decides to hand off.

This is how AgentWorkflow implements multi-agent handoff.
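The mechanism described above can be sketched with a toy model. This is not LlamaIndex's implementation: a plain dict stands in for the workflow context, and run_turn is a hypothetical helper that plays the role of the workflow step that switches agents after a tool call.

```python
import asyncio

AGENTS = ["SearchAgent", "ResearchAgent"]


async def handoff(ctx: dict, to_agent: str, reason: str) -> str:
    """Record the requested agent in the shared context and return a tool result."""
    if to_agent not in ctx["agents"]:
        return f"Agent {to_agent} not found."
    ctx["next_agent"] = to_agent
    return (
        f"Agent {to_agent} is now handling the request "
        f"due to the following reason: {reason}."
    )


async def run_turn(ctx: dict, tool_call: dict) -> str:
    """Execute one handoff tool call, then switch agents if one was requested."""
    result = await handoff(ctx, **tool_call)
    next_agent = ctx.pop("next_agent", None)
    if next_agent is not None:
        ctx["current_agent_name"] = next_agent
    return result


ctx = {"agents": AGENTS, "current_agent_name": "SearchAgent"}
output = asyncio.run(
    run_turn(ctx, {"to_agent": "ResearchAgent", "reason": "notes are recorded"})
)
print(ctx["current_agent_name"])
print(output)
```

Note that the only thing the receiving agent gets from the handoff itself is the returned string, which is why its wording matters so much.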

In the original code, after handoff sets the next_agent, it returns a prompt as the tool call result to the receiving agent. The prompt looks like this:

    DEFAULT_HANDOFF_OUTPUT_PROMPT = """
    Agent {to_agent} is now handling the request due to the following reason: {reason}.
    Please continue with the current request.
    """

This prompt includes {to_agent} and {reason} fields. But since the prompt goes to the receiving agent, {to_agent} isn't very useful. Unless {reason} contains the original user request, the receiving agent can't get relevant information from this prompt. That's why the developer suggested including the user request in the prompt output.

Let's modify this method first.

We'll create an enhanced_agent_workflow.py file and write the modified HANDOFF_OUTPUT_PROMPT:

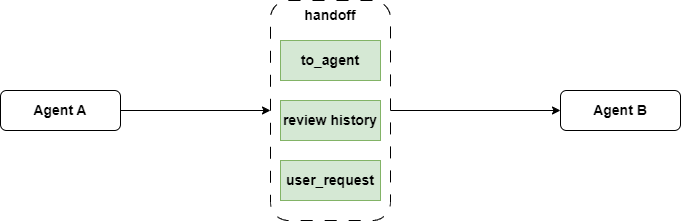

    ENHANCED_HANDOFF_OUTPUT_PROMPT = """
    Agent {to_agent} is now handling the request.
    Check the previous chat history and continue responding to the user's request: {user_request}.
    """

Compared to the original, I added a requirement for the LLM to review chat history and included the user's most recent request.
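To make the difference concrete, here is a small sketch that renders both templates with plain str.format, so it runs without LlamaIndex installed. The reason text is a hypothetical example of what an LLM might write; the user request is the one used in the test later in this article.

```python
DEFAULT_HANDOFF_OUTPUT_PROMPT = (
    "Agent {to_agent} is now handling the request "
    "due to the following reason: {reason}.\n"
    "Please continue with the current request."
)
ENHANCED_HANDOFF_OUTPUT_PROMPT = (
    "Agent {to_agent} is now handling the request.\n"
    "Check the previous chat history and continue responding "
    "to the user's request: {user_request}."
)

user_request = "What is LlamaIndex AgentWorkflow, and what problems does it solve?"

default_output = DEFAULT_HANDOFF_OUTPUT_PROMPT.format(
    to_agent="ResearchAgent",
    reason="The notes have been recorded",  # hypothetical reason written by the LLM
)
enhanced_output = ENHANCED_HANDOFF_OUTPUT_PROMPT.format(
    to_agent="ResearchAgent",
    user_request=user_request,
)

print(default_output)
print(enhanced_output)
```

With the default template, the user's question appears in the tool result only if the LLM happens to put it in the reason. The enhanced template always carries it.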

Next, I rewrote the handoff method to return the new prompt:

    from llama_index.core.prompts import PromptTemplate
    from llama_index.core.workflow import Context

    async def handoff(ctx: Context, to_agent: str, user_request: str) -> str:
        """Handoff control of that chat to the given agent."""
        agents: list[str] = await ctx.get("agents")
        current_agent_name: str = await ctx.get("current_agent_name")

        # Reject unknown agents and tell the LLM which ones it can choose from.
        if to_agent not in agents:
            valid_agents = ", ".join([x for x in agents if x != current_agent_name])
            return f"Agent {to_agent} not found. Please select a valid agent to hand off to. Valid agents: {valid_agents}"

        # The workflow reads next_agent from the context to switch agents.
        await ctx.set("next_agent", to_agent)

        # Return the enhanced prompt, which carries the user's request.
        handoff_output_prompt = PromptTemplate(ENHANCED_HANDOFF_OUTPUT_PROMPT)
        return handoff_output_prompt.format(to_agent=to_agent, user_request=user_request)

The rewrite is simple - I just changed the reason parameter to user_request and returned the new prompt. The LLM will handle everything else.
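Why is renaming a parameter enough? Function-calling frameworks generally derive a tool's parameter list from the function signature, so the LLM is asked to fill in whatever parameters the function declares. The sketch below illustrates the idea with the standard library only. tool_parameters is a hypothetical helper, not a LlamaIndex API, and it assumes the ctx parameter is supplied by the framework rather than by the LLM.

```python
import inspect


async def handoff(ctx, to_agent: str, user_request: str):
    """Handoff control of that chat to the given agent."""


def tool_parameters(fn, injected=("ctx",)) -> list[str]:
    """List the parameters the LLM would be asked to fill in (hypothetical helper)."""
    return [name for name in inspect.signature(fn).parameters if name not in injected]


print(tool_parameters(handoff))  # ['to_agent', 'user_request']
```

Because user_request is now part of the tool's signature, the LLM has to restate the user's request every time it calls handoff.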

Since we modified handoff's source code, we also need to modify AgentWorkflow's code that calls this method.

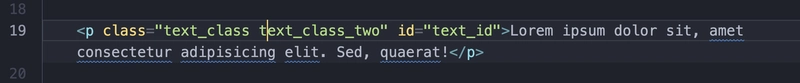

The _get_handoff_tool method in AgentWorkflow calls handoff, so we'll implement an EnhancedAgentWorkflow subclass of AgentWorkflow and override _get_handoff_tool:

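    from typing import Optional

    from llama_index.core.agent.workflow import AgentWorkflow
    from llama_index.core.agent.workflow.base_agent import BaseWorkflowAgent
    from llama_index.core.tools import AsyncBaseTool, FunctionTool

    class EnhancedAgentWorkflow(AgentWorkflow):
        def _get_handoff_tool(
            self, current_agent: BaseWorkflowAgent
        ) -> Optional[AsyncBaseTool]:
            """Creates a handoff tool for the given agent."""
            agent_info = {cfg.name: cfg.description for cfg in self.agents.values()}

            # Drop the current agent and any agent it isn't allowed to hand off to.
            configs_to_remove = []
            for name in agent_info:
                if name == current_agent.name:
                    configs_to_remove.append(name)
                elif (
                    current_agent.can_handoff_to is not None
                    and name not in current_agent.can_handoff_to
                ):
                    configs_to_remove.append(name)

            for name in configs_to_remove:
                agent_info.pop(name)

            # No valid targets means this agent gets no handoff tool.
            if not agent_info:
                return None

            # ENHANCED_HANDOFF_PROMPT (the tool description template) is not shown in this article.
            handoff_prompt = PromptTemplate(ENHANCED_HANDOFF_PROMPT)
            fn_tool_prompt = handoff_prompt.format(agent_info=str(agent_info))
            return FunctionTool.from_defaults(
                async_fn=handoff, description=fn_tool_prompt, return_direct=True
            )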
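The filtering part of _get_handoff_tool is easy to check on its own. Below is a minimal sketch of the same rule as a standalone function; handoff_targets is a hypothetical helper written for illustration, and the agent names and descriptions are the ones used in the test later in this article.

```python
from typing import Optional


def handoff_targets(
    agent_info: dict[str, str],
    current_name: str,
    can_handoff_to: Optional[list[str]],
) -> dict[str, str]:
    """Return the agents the current agent may hand off to (hypothetical helper)."""
    return {
        name: description
        for name, description in agent_info.items()
        if name != current_name
        and (can_handoff_to is None or name in can_handoff_to)
    }


agent_info = {
    "SearchAgent": "You are a helpful search assistant.",
    "ResearchAgent": "You are a helpful research assistant.",
}

# SearchAgent is restricted to ResearchAgent.
print(handoff_targets(agent_info, "SearchAgent", ["ResearchAgent"]))
# No restriction (None): every other agent is a valid target.
print(handoff_targets(agent_info, "ResearchAgent", None))
# An empty list leaves no targets, so no handoff tool would be created.
print(handoff_targets(agent_info, "ResearchAgent", []))
```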

Our modifications are complete. Now let's write test code in example_2.py to verify our changes. (example_1.py contains the original AgentWorkflow test.)

I'll base the code on this user's scenario to recreate the situation.

We'll create two agents: search_agent and research_agent. search_agent searches the web and records notes, then hands off to research_agent, which writes a research report based on those notes.

search_agent:

    search_agent = FunctionAgent(
        name="SearchAgent",
        description="You are a helpful search assistant.",
        system_prompt="""
        You're a helpful search assistant.
        First, you'll look up notes online related to the given topic and record these notes on the topic.
        Once the notes are recorded, you should hand over control to the ResearchAgent.
        """,
        tools=[search_web, record_notes],
        llm=llm,
        can_handoff_to=["ResearchAgent"]
    )

research_agent:

    research_agent = FunctionAgent(
        name="ResearchAgent",
        description="You are a helpful research assistant.",
        # Assumption: prompt reconstructed from the role described above
        # (writing a research report based on the recorded notes).
        system_prompt="""
        You're a helpful research assistant.
        Write a research report on the given topic based on the notes recorded by the SearchAgent.
        """,
        llm=llm
    )

search_agent is a multi-tool agent that uses search_web and record_notes methods:

search_web:

    from tavily import AsyncTavilyClient

    async def search_web(ctx: Context, query: str) -> str:
        """
        This tool searches the internet and returns the search results.
        :param query: user's original request
        :return: the search results
        """
        # With no arguments, the client reads the API key from the TAVILY_API_KEY environment variable.
        tavily_client = AsyncTavilyClient()
        search_result = await tavily_client.search(str(query))
        return str(search_result)

record_notes:

    async def record_notes(ctx: Context, notes: str, notes_title: str) -> str:
        """
        Useful for recording notes on a given topic. Your input should be notes with a title to save the notes under.
        """
        return f"{notes_title} : {notes}"
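Before wiring the tools into an agent, it can help to exercise them without an LLM or a network call. The sketch below is one way to do that under stated assumptions: fake_search_web is a hypothetical offline stand-in for search_web, and None is passed for ctx because neither tool body uses it.

```python
import asyncio


async def record_notes(ctx, notes: str, notes_title: str) -> str:
    """Same body as the tool above; ctx is unused, so None is enough here."""
    return f"{notes_title} : {notes}"


async def fake_search_web(ctx, query: str) -> str:
    """Hypothetical offline stand-in for search_web (no Tavily call)."""
    return str({"query": query, "results": ["stub result"]})


async def smoke_test() -> str:
    # Chain the two tools the way search_agent is expected to: search, then record.
    found = await fake_search_web(None, "LlamaIndex AgentWorkflow")
    return await record_notes(None, notes=found, notes_title="AgentWorkflow")


note = asyncio.run(smoke_test())
print(note)
```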

Finally, we'll use EnhancedAgentWorkflow to create a workflow and test our modifications:

    workflow = EnhancedAgentWorkflow(
        agents=[search_agent, research_agent],
        root_agent=search_agent.name
    )

    async def main():
        handler = workflow.run(user_msg="What is LlamaIndex AgentWorkflow, and what problems does it solve?")
        async for event in handler.stream_events():
            if isinstance(event, AgentOutput):
                print("=" * 70)
                print(f"
