Federated Learning at Scale: Training AI While Preserving Privacy

Imagine this: your phone gets smarter every day—predicting your next word, improving your health tracking, or personalizing your music tastes. But here’s the twist: it does all of that without your data ever leaving your device.

That’s the magic of federated learning—a game-changing approach to AI training that lets models learn from user data while keeping privacy intact.

What is Federated Learning?

In traditional AI training, data is collected and sent to a central server. But in today’s privacy-sensitive world, that approach is increasingly problematic. Federated learning flips the script. Instead of sending data to the cloud, it sends the model to the data.

Think of it like this: Instead of gathering all the ingredients in one kitchen, you're sending a chef to every household, cooking locally, and then combining only the final recipes—not the raw ingredients.

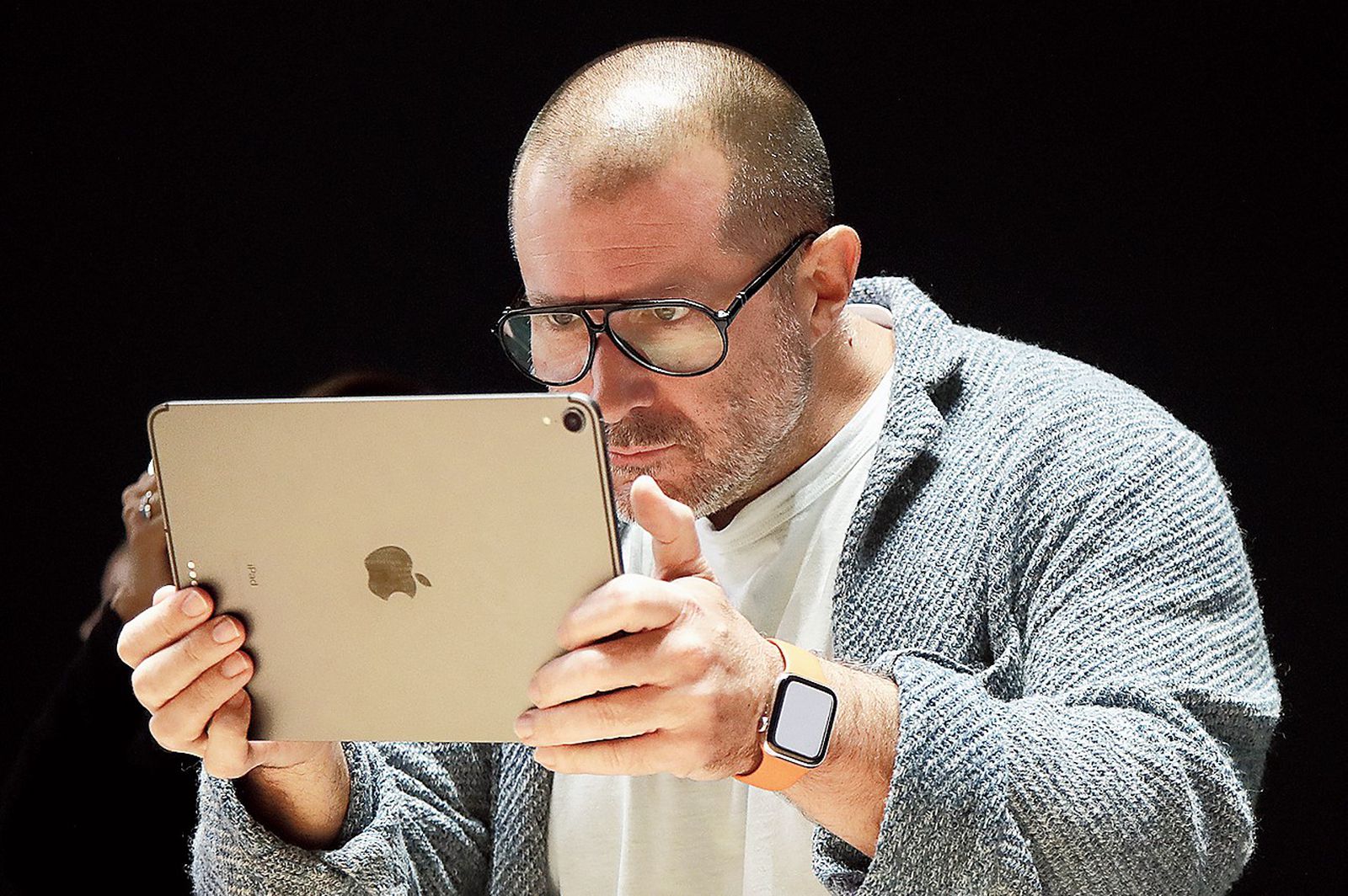

Real-World Impact: Google & Apple

Google was one of the pioneers of federated learning. Remember when Gboard started suggesting words that just made sense? That’s federated learning at work. The model trained locally on millions of devices, learning typing patterns without sending raw keystrokes to Google’s servers.

Apple uses a similar approach in Siri and QuickType to personalize experiences without collecting raw conversations or texts. Your iPhone learns your habits without your raw data leaving the device.

How It Works (Without the Math Overload)

Let’s simplify the mechanics:

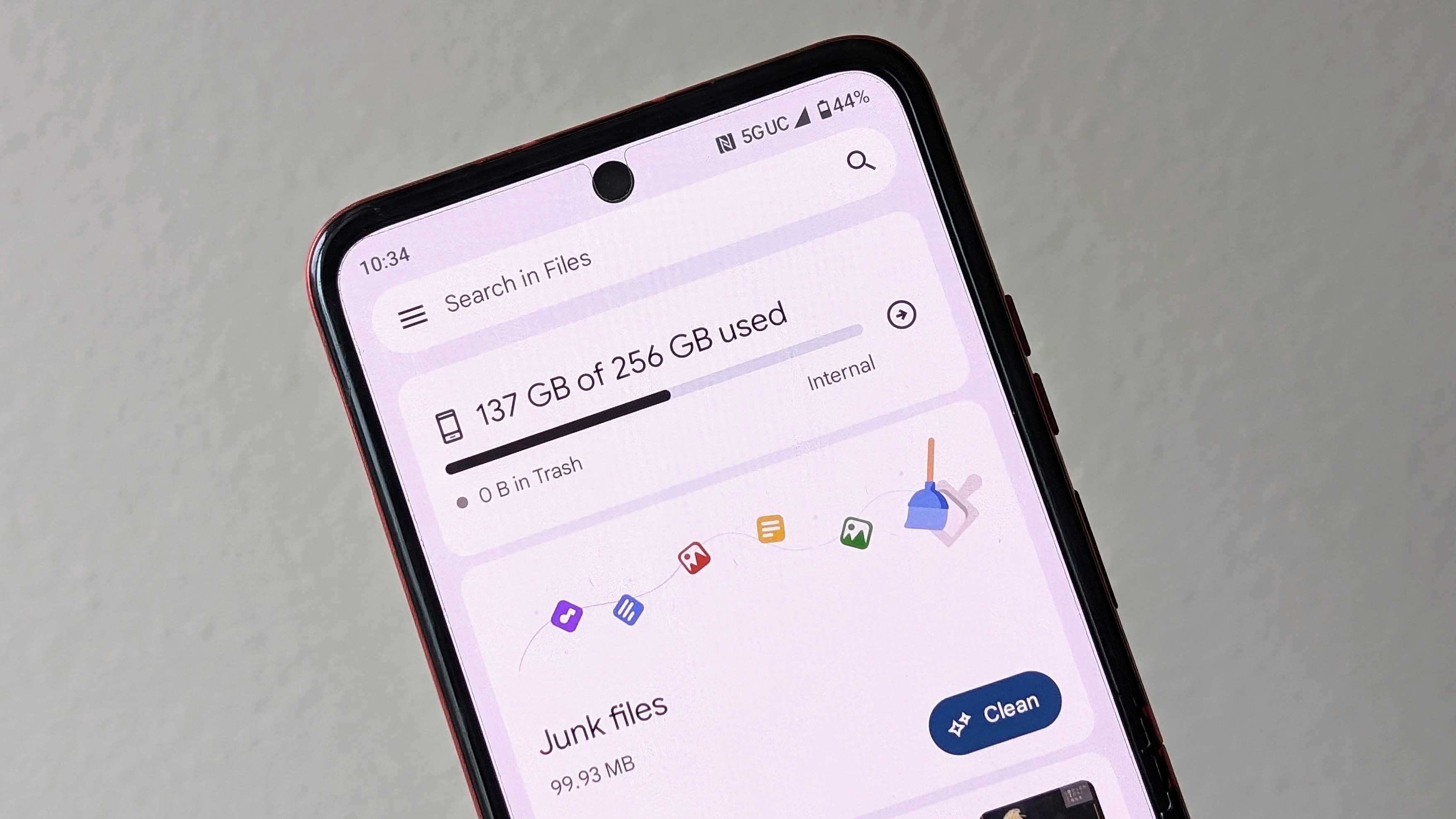

- A base model is sent to your device.

- Local training happens using your private data.

- The device sends back model updates, not the data itself.

- Updates from thousands (or millions) of devices are aggregated and used to improve the global model.
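The four steps above can be sketched in a few lines of Python. This is a minimal illustration, not how Gboard or any production system is implemented. It assumes a toy one-dimensional linear model, simulated "devices" held in a list, and weighted averaging of client models (commonly called federated averaging) as the aggregation rule. All function names here are hypothetical.

```python
import random

def local_train(weights, data, lr=0.1, epochs=5):
    """Step 2: train on one device's private data.

    The model is a toy linear regression y = w*x + b (an assumption for
    illustration). Only the updated weights are returned, never the data.
    """
    w, b = weights
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in data:
            err = (w * x + b) - y
            grad_w += 2 * err * x / len(data)
            grad_b += 2 * err / len(data)
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)

def federated_average(client_weights, client_sizes):
    """Step 4: average client models, weighted by local example counts."""
    total = sum(client_sizes)
    w = sum(cw[0] * n for cw, n in zip(client_weights, client_sizes)) / total
    b = sum(cw[1] * n for cw, n in zip(client_weights, client_sizes)) / total
    return (w, b)

def run_rounds(clients, rounds=100):
    """Repeat: send model out (step 1), train locally (2), collect (3), average (4)."""
    global_weights = (0.0, 0.0)
    for _ in range(rounds):
        updates = [local_train(global_weights, data) for data in clients]
        sizes = [len(data) for data in clients]
        global_weights = federated_average(updates, sizes)
    return global_weights

def make_clients(num_clients=5, seed=0):
    """Simulate devices whose private data follows y = 3x + 1."""
    rng = random.Random(seed)
    clients = []
    for _ in range(num_clients):
        n = rng.randint(5, 20)
        data = []
        for _ in range(n):
            x = rng.uniform(-1, 1)
            data.append((x, 3.0 * x + 1.0))
        clients.append(data)
    return clients

if __name__ == "__main__":
    w, b = run_rounds(make_clients())
    print(f"learned w={w:.2f}, b={b:.2f}")
```

Notice that `run_rounds` never touches a device's `(x, y)` pairs directly. It only sees the weights each device returns, which is the core idea of the approach.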

Technologies like Secure Aggregation and Differential Privacy add a further layer of protection: the server only sees the combined result, and calibrated noise makes it hard to trace any individual update back to a specific user.
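To make those two ideas a little more concrete, here is a simplified sketch. It assumes pairwise random masks that cancel when summed (the intuition behind secure aggregation) and update clipping plus Gaussian noise (the usual ingredients of differentially private averaging). Real protocols derive the masks from cryptographic key agreement over a finite field and calibrate the noise to a privacy budget, and none of that is shown here. The function names and parameter values are hypothetical.

```python
import math
import random

def clip_update(update, max_norm):
    """Scale an update down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(v * v for v in update))
    if norm <= max_norm or norm == 0.0:
        return list(update)
    scale = max_norm / norm
    return [v * scale for v in update]

def pairwise_masks(num_clients, dim, seed=0):
    """Build one mask per client such that all masks sum to (about) zero.

    For each pair (i, j) with i < j, a shared random vector is added to
    client i's mask and subtracted from client j's mask.
    """
    rng = random.Random(seed)
    masks = [[0.0] * dim for _ in range(num_clients)]
    for i in range(num_clients):
        for j in range(i + 1, num_clients):
            shared = [rng.uniform(-100, 100) for _ in range(dim)]
            for k in range(dim):
                masks[i][k] += shared[k]
                masks[j][k] -= shared[k]
    return masks

def aggregate(updates, max_norm=1.0, noise_std=0.0, seed=0):
    """Return the (optionally noised) mean update and the masked uploads."""
    # Each client clips its own update, bounding any one user's influence.
    clipped = [clip_update(u, max_norm) for u in updates]
    dim = len(clipped[0])
    masks = pairwise_masks(len(clipped), dim, seed)
    # What the server receives: each upload looks random on its own.
    masked = [[u[k] + m[k] for k in range(dim)] for u, m in zip(clipped, masks)]
    # Summing the uploads cancels the masks and leaves the true total.
    total = [sum(column) for column in zip(*masked)]
    # Gaussian noise on the total (noise_std is an illustrative knob only).
    rng = random.Random(seed + 1)
    noisy = [t + rng.gauss(0.0, noise_std) for t in total]
    return [v / len(updates) for v in noisy], masked

if __name__ == "__main__":
    mean, masked = aggregate([[0.3, -0.4], [3.0, 4.0], [0.0, 0.5]], noise_std=0.01)
    print("one masked upload:", masked[0])
    print("noisy mean update:", mean)
```

The design point is that the server can compute a useful average without ever seeing a clean individual update.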

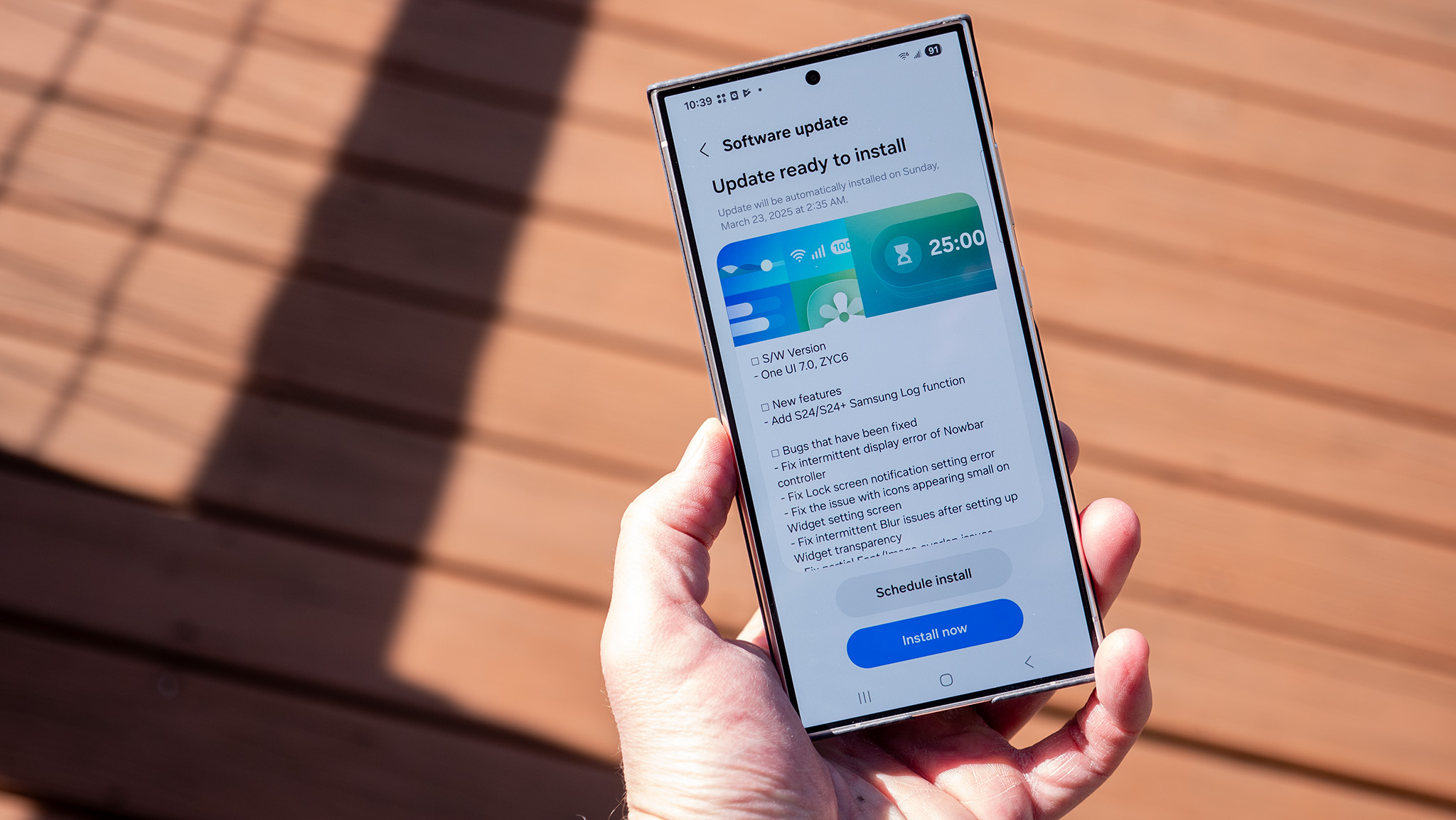

The Scaling Challenge

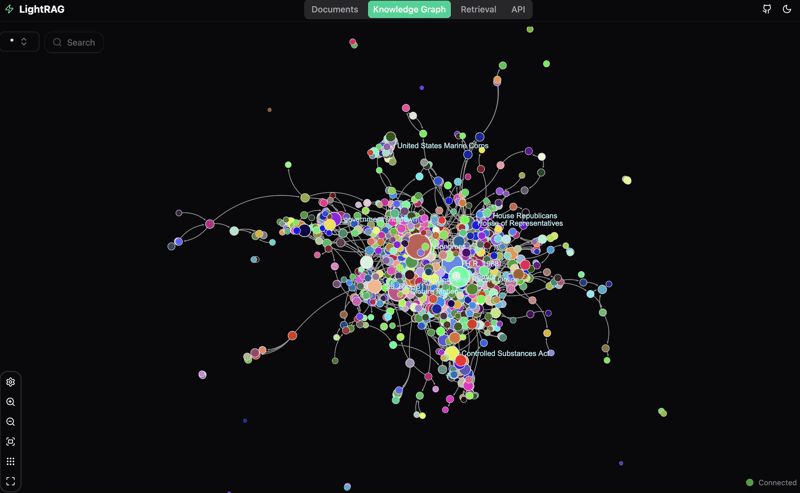

Federated learning works beautifully in theory, but scaling it to millions of devices? That’s where things get tricky.

Devices differ in power, connectivity, and availability. Imagine trying to coordinate a symphony when some musicians are on 3G, others on Wi-Fi, and a few show up late with dying batteries. That’s the kind of orchestration required.
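One way to picture that orchestration is a round coordinator that only invites devices in good shape and does not wait for stragglers. The sketch below is an illustration under assumptions: the eligibility rules (idle, on an unmetered network, charging or well charged), the over-selection factor, and the deadline are all hypothetical choices, not a description of any specific platform.

```python
import math
import random
from dataclasses import dataclass

@dataclass
class Device:
    device_id: str
    battery_pct: int
    on_unmetered_network: bool
    is_charging: bool
    is_idle: bool

def is_eligible(device, min_battery=80):
    """Hypothetical policy: idle, on Wi-Fi, and either charging or well charged."""
    has_power = device.is_charging or device.battery_pct >= min_battery
    return device.is_idle and device.on_unmetered_network and has_power

def select_clients(devices, target, over_select=1.3, seed=0):
    """Invite more devices than needed, since some will drop out mid-round."""
    eligible = [d for d in devices if is_eligible(d)]
    rng = random.Random(seed)
    k = min(len(eligible), math.ceil(target * over_select))
    return rng.sample(eligible, k)

def collect_reports(selected, finish_times, deadline_s, target):
    """Keep reports that arrive before the deadline and ignore late ones.

    finish_times maps device_id to seconds taken; a missing entry means the
    device never reported. The round succeeds if enough reports arrived.
    """
    on_time = [
        d.device_id
        for d in selected
        if finish_times.get(d.device_id, float("inf")) <= deadline_s
    ]
    return on_time, len(on_time) >= target

if __name__ == "__main__":
    fleet = [
        Device("a", 90, True, False, True),
        Device("b", 20, True, True, True),
        Device("c", 95, False, True, True),   # on a metered connection
        Device("d", 50, True, False, True),   # low battery, not charging
        Device("f", 100, True, True, True),
    ]
    chosen = select_clients(fleet, target=2, over_select=1.5)
    reports, ok = collect_reports(chosen, {"a": 12.0, "b": 400.0, "f": 30.0}, 60.0, 2)
    print(reports, ok)
```

Inviting extra devices and enforcing a deadline trades a little wasted work for rounds that finish on time even when some musicians show up late.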

Platforms like TensorFlow Federated and PySyft are helping bridge that gap—making it easier to deploy federated learning at scale.

Why It Matters

In an age of data breaches, deepfakes, and growing mistrust, federated learning offers a path forward: powerful AI without the surveillance. It empowers industries like healthcare (think personalized treatment models without sharing patient data), finance (fraud detection without peeking into transactions), and even smart homes.

Final Thoughts

Federated learning is still evolving, but its promise is bold: smarter systems that don’t trade privacy for performance.

As we move towards a more connected, AI-driven world, this paradigm could be the foundation of ethical AI—where privacy isn’t just protected, it’s baked into the model.

The future of AI doesn’t have to be creepy. It can be collaborative, private, and still incredibly smart.
