Enterprise Networks Unveiled: A Software Engineer's Guide to the Basics (Part 7)

Building on the virtualization of networking we covered in the last post, let's now turn our eyes to the sky! In this post we'll explore how the addition of cloud computing impacts network design and implementation. We'll look at some of the new cloud-native resources these services introduce and how a hybrid network model can connect them with on-premises networks.

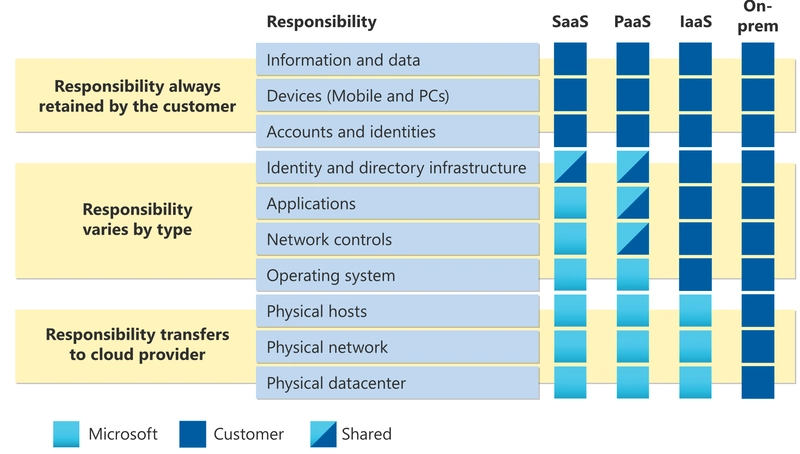

Cloud services are hard to avoid in the enterprise at this point and have been adopted much more heavily over the past decade. They come in a mix of models such as Software-as-a-Service (SaaS), Platform-as-a-Service (PaaS), and Infrastructure-as-a-Service (IaaS). While a SaaS product (ex: Microsoft 365) may abstract away most networking concerns, an IaaS provider usually leaves most of the responsibility to the customer.

Three popular cloud service providers (CSPs) are Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP). Each is made up of many services, some of which may also function like a SaaS. For example, the Google Maps APIs became part of the GCP offering back in 2018. Each service within each cloud service provider may have different "knobs and switches" and place different levels of configuration responsibility on the customer. Consider running virtual machines on GCP versus calling the Maps APIs mentioned above. The shared responsibility model was created to help customers understand what they must do to maintain their instance of a particular service and what the cloud provider takes care of on their behalf. The image below, from the shared responsibility model documentation, shows how network controls usually fall within the customer's responsibility for IaaS.

Another layer of shared responsibility may also emerge within your company as workloads move to the cloud. Depending on how the organization is set up, some network configuration responsibilities may fall to software engineers rather than network engineers. Ephemeral cloud resources also bring a much higher volume of change than on-premises servers with static IP addresses, which makes it harder to ensure security controls are functioning as expected. Infrastructure-as-code (IaC) tooling like Terraform can make it easy for software engineers to create cloud resources dynamically, but they should understand the networking-related configuration attributes and the impact of their values.

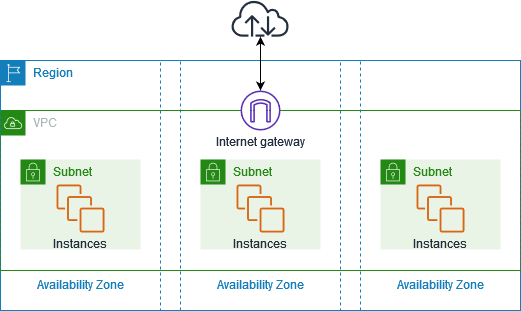

What core network building blocks do CSPs provide to their customers? Cloud providers usually offer their services by region; AWS, for example, publishes its list of regions in its documentation. Regions allow compute and data to meet different compliance (ex: GDPR for European data) and performance requirements as needed. Within each region are availability zones (AZs), which can be thought of as one or more physically separate data centers within that region.

Cloud providers also offer Virtual Private Clouds (VPCs, AWS/GCP) or Virtual Networks (VNets, Azure) so customers can create private networks within their tenant (this could be an AWS account, Azure subscription, or GCP project). AWS VPCs and Azure VNets can span multiple availability zones within a region (as shown below), while GCP VPCs are global resources whose subnets can sit in different regions. These virtual networks can use the same private (RFC 1918) IP addresses we discussed in part 5 and can also use public IP addresses from the CSP. Once a range is assigned to a VPC or VNet, it can be subnetted just as we discussed in part 5 for on-premises sites. Cloud providers also let you control how traffic is routed within these virtual networks. They typically default to allowing routing between the subnets within a VPC/VNet and to a default path to the internet, but the customer can override this if needed.

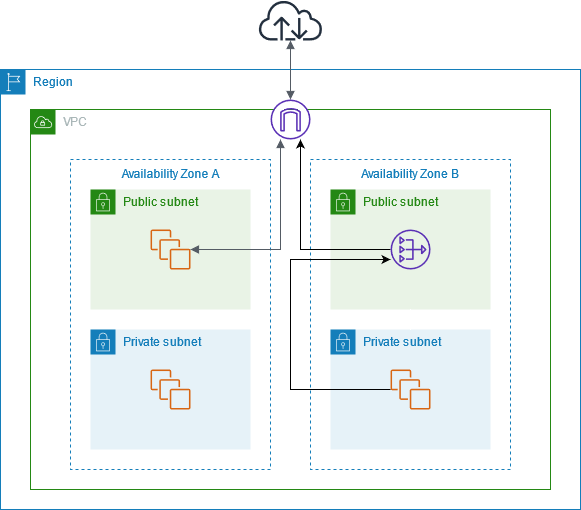
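Carving a VPC range into subnets works the same way as on-premises. The sketch below uses Python's standard `ipaddress` module to split a hypothetical `10.0.0.0/16` VPC into one public and one private `/24` per AZ. The VPC range, the AZ names (`az-a`, `az-b`), and the one-public-one-private layout are assumptions for illustration, not any provider's required design.

```python
import ipaddress

# Assumed example values: the VPC range and AZ names are hypothetical.
VPC_CIDR = ipaddress.ip_network("10.0.0.0/16")
AZS = ["az-a", "az-b"]

# The three private address blocks defined by RFC 1918.
RFC1918 = [
    ipaddress.ip_network(block)
    for block in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")
]


def plan_subnets(vpc, azs, new_prefix=24):
    """Split a VPC range into one public and one private subnet per AZ."""
    if not any(vpc.subnet_of(block) for block in RFC1918):
        raise ValueError(f"{vpc} is not inside an RFC 1918 range")
    available = 2 ** (new_prefix - vpc.prefixlen)
    if 2 * len(azs) > available:
        raise ValueError(f"{vpc} cannot hold {2 * len(azs)} /{new_prefix} subnets")
    blocks = vpc.subnets(new_prefix=new_prefix)  # equal-sized subnets in address order
    plan = {}
    for az in azs:
        plan[(az, "public")] = next(blocks)
        plan[(az, "private")] = next(blocks)
    return plan


if __name__ == "__main__":
    for (az, tier), net in plan_subnets(VPC_CIDR, AZS).items():
        print(f"{az} {tier:<7} {net} ({net.num_addresses} addresses)")
```

Keep in mind that providers reserve some addresses in every subnet (AWS and Azure each reserve five), so the usable count is a little lower than `num_addresses`.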

As shown above, other virtual resources within a VPC or VNet can also take care of functions we've covered in past posts. AWS internet gateways, for example, allow ingress and egress traffic to and from resources within a VPC. AWS also provides other resources like NAT gateways so that not every device requires a public IP. A VPC can be divided with the subnetting techniques covered in part 5 to separate public and private resources.

For example, you may want to place the load balancers for a website in a public subnet, while the app servers reside in private subnets. For the app servers' egress traffic to the internet, a NAT gateway placed in a public subnet can be used, as shown below from the AWS user guide for NAT gateways. Azure and GCP offer similar resources (load balancers, internet connectivity, and NAT).
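The public/private subnet pattern above is ultimately expressed through route tables: in AWS terms, a subnet is public when it has a route to an internet gateway. The sketch below is a simplified model of that idea; the route table names, subnet ranges, and the `igw`/`nat-gw` target labels are hypothetical stand-ins for real resource IDs.

```python
# Simplified model of the public/private subnet pattern. Route table names,
# subnet ranges, and the "igw"/"nat-gw" target labels are hypothetical.
ROUTE_TABLES = {
    "public-rt": {"10.0.0.0/16": "local", "0.0.0.0/0": "igw"},
    "private-rt": {"10.0.0.0/16": "local", "0.0.0.0/0": "nat-gw"},
}

SUBNETS = {
    "10.0.0.0/24": "public-rt",   # load balancers and the NAT gateway
    "10.0.1.0/24": "private-rt",  # app servers
}


def subnet_tier(subnet):
    """A subnet is public when its default route points at the internet gateway."""
    routes = ROUTE_TABLES[SUBNETS[subnet]]
    return "public" if routes.get("0.0.0.0/0") == "igw" else "private"


def egress_path(subnet):
    """Hops an instance in this subnet takes to reach the internet."""
    next_hop = ROUTE_TABLES[SUBNETS[subnet]].get("0.0.0.0/0")
    if next_hop == "igw":
        return ["instance (public IP)", "igw", "internet"]
    if next_hop == "nat-gw":
        # The NAT gateway lives in a public subnet, so it egresses via the igw.
        return ["instance (private IP)", "nat-gw", "igw", "internet"]
    return []  # no default route: this subnet is an island


if __name__ == "__main__":
    for subnet in SUBNETS:
        print(subnet, subnet_tier(subnet), " -> ".join(egress_path(subnet)))
```

The app servers never need public IPs: their only path out is the NAT gateway, which is itself the thing sitting in the public subnet.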

Without additional resources, VPCs/VNets effectively function as "islands": communication with other cloud resources, end users, or other enterprise networks would have to occur over the internet. However, there are a number of different options for connectivity.

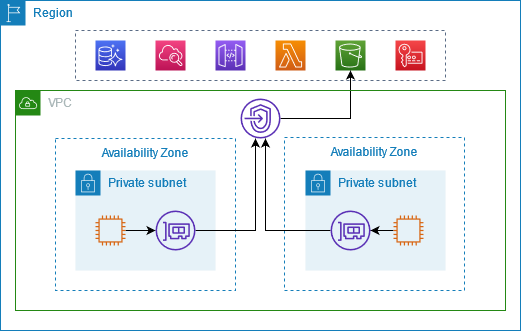

Each of the cloud providers offers a means of connecting to its other services without routing over the internet. AWS calls these VPC endpoints. For example, an EC2 instance working with files in an S3 bucket could use this connectivity, as shown below from the AWS documentation. This approach may reduce data transfer charges and is usually more performant.

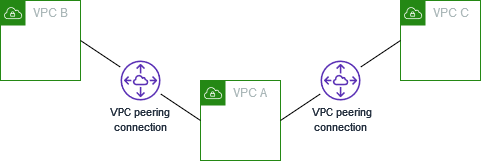
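One way to picture a gateway-style VPC endpoint is as an extra, more specific route in the private subnet's route table: traffic for the storage service matches it before the default route to the NAT gateway. The sketch below models that with longest-prefix matching. `198.51.100.0/24` is a documentation range standing in for the service's addresses (real S3 gateway endpoints are referenced through a managed prefix list), and the target labels are hypothetical.

```python
import ipaddress

# Hypothetical private-subnet route table after adding a gateway endpoint.
# 198.51.100.0/24 is a documentation range standing in for the storage
# service's addresses; real S3 gateway endpoints use a managed prefix list.
ROUTES = [
    ("10.0.0.0/16", "local"),
    ("198.51.100.0/24", "vpc-endpoint"),
    ("0.0.0.0/0", "nat-gateway"),
]


def next_hop(routes, destination):
    """Pick the most specific matching route (longest prefix match)."""
    dest = ipaddress.ip_address(destination)
    ranked = sorted(
        ((ipaddress.ip_network(cidr), target) for cidr, target in routes),
        key=lambda route: route[0].prefixlen,
        reverse=True,
    )
    for network, target in ranked:
        if dest in network:
            return target
    return None  # no matching route: the packet is dropped


if __name__ == "__main__":
    for dst in ["198.51.100.20", "203.0.113.7", "10.0.3.4"]:
        print(dst, "->", next_hop(ROUTES, dst))
```

Because the endpoint route is more specific than `0.0.0.0/0`, storage traffic bypasses the NAT gateway while everything else still egresses through it.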

VPCs in AWS and GCP and VNets in Azure support "peering", which allows routing between the peered networks over the provider's private network without traversing the internet. Peering requires non-overlapping IP ranges (two peered VPCs could not both use 10.0.1.0/24 as their CIDR range). The image below shows a sample of two VPCs each being peered with a third, as documented by AWS.
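Because peering requires non-overlapping ranges, it can help to check candidate VPCs before requesting a peering connection. This is a minimal sketch using the standard `ipaddress.IPv4Network.overlaps` method; the VPC names and CIDRs are hypothetical, with `vpc-b` deliberately colliding with `vpc-a`.

```python
import ipaddress
from itertools import combinations

# Hypothetical VPC ranges; vpc-b deliberately overlaps vpc-a.
VPCS = {
    "vpc-a": "10.0.0.0/16",
    "vpc-b": "10.0.1.0/24",
    "vpc-c": "10.1.0.0/16",
}


def overlapping_pairs(vpcs):
    """Return every pair of VPCs whose CIDR ranges overlap (so cannot peer)."""
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in vpcs.items()}
    return [
        (a, b)
        for a, b in combinations(sorted(nets), 2)
        if nets[a].overlaps(nets[b])
    ]


if __name__ == "__main__":
    print(overlapping_pairs(VPCS))
```

Here only `vpc-a` and `vpc-b` conflict, since `10.0.1.0/24` sits inside `10.0.0.0/16`; `vpc-c` could be peered with either.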

While peering works for simple deployments, it can get extremely complex at scale. As noted in the documentation above, VPCs B and C would not be able to communicate in their current configuration, because AWS does not support transitive peering: traffic cannot pass from B through A to reach C. The peering relationships would therefore have to be fully meshed, creating a complex spider web of connections.

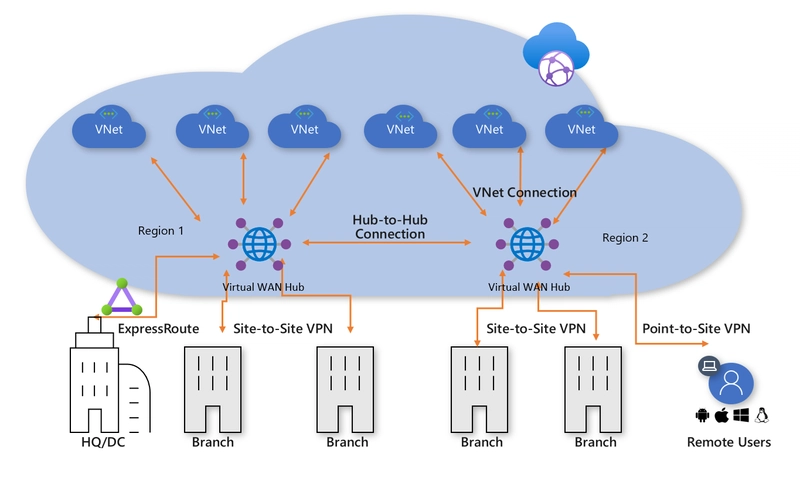
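Non-transitive peering means reachability depends only on direct connections. The sketch below models the A/B/C example above as a set of peering pairs and lists the links still missing for a full mesh. The VPC names follow the example; the data structures are an illustrative assumption, not a provider API.

```python
from itertools import combinations

# Peering connections from the example: A is peered with both B and C.
PEERINGS = {frozenset({"A", "B"}), frozenset({"A", "C"})}
VPCS = ["A", "B", "C"]


def can_communicate(a, b, peerings):
    """Peering is non-transitive: only a direct peering connection counts."""
    return frozenset({a, b}) in peerings


def missing_for_full_mesh(vpcs, peerings):
    """Pairs of VPCs that still need their own peering connection."""
    return [
        (a, b)
        for a, b in combinations(vpcs, 2)
        if not can_communicate(a, b, peerings)
    ]


if __name__ == "__main__":
    print(can_communicate("B", "C", PEERINGS))
    print(missing_for_full_mesh(VPCS, PEERINGS))
```

Even though B and C are both peered with A, the only way for them to talk is a third connection between B and C directly. In general, a full mesh of n VPCs needs n(n-1)/2 peering connections.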

To tackle this complexity, cloud service providers offer other resources: Transit Gateway in AWS, Virtual WAN in Azure, and Network Connectivity Center in GCP. In Azure, many VNets can connect to a Virtual WAN hub, which then handles routing among all of the associated VNets. This is shown below from the documentation for Virtual WAN.

The above diagram also introduces private connections to physical sites, including site-to-site VPNs, which we covered in an earlier post in this series. As you may recall, a site-to-site VPN establishes a tunnel between locations so that private network traffic can flow between them. The various CSPs support this model with VPN termination points on their side.

ExpressRoute is also mentioned in the above diagram. It is a dedicated private connection for traffic heading to private networks in Azure. AWS similarly offers Direct Connect, and GCP offers Cloud Interconnect. Rather than relying on the internet as the underlying network, these physical links can offer greater bandwidth and lower, more consistent latency for applications that require it.

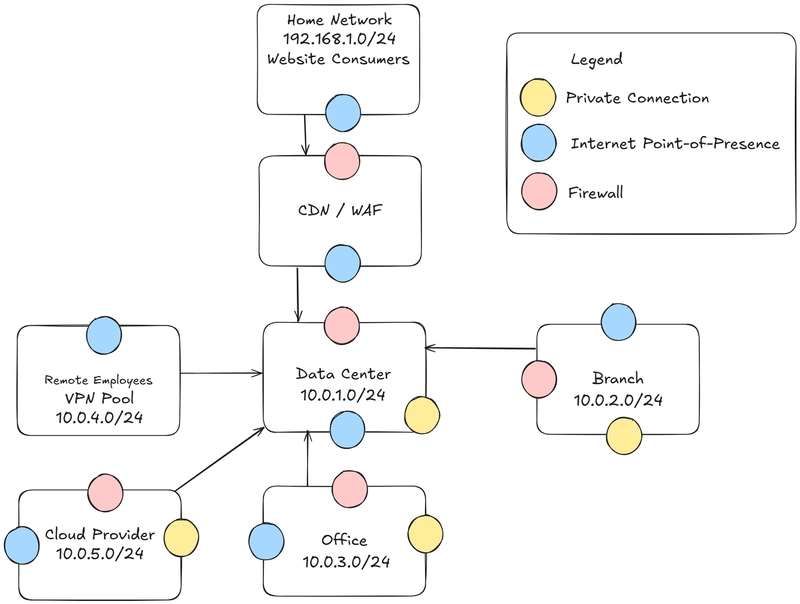
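Returning to the hub resources described above (Transit Gateway, Virtual WAN, Network Connectivity Center), the core idea is that each network attaches once to a hub, and the hub's route table maps each spoke's range to its attachment. The sketch below is a deliberately simplified model; the spoke names and CIDRs are hypothetical, and real hubs add features such as route propagation and separate route tables for segmentation.

```python
import ipaddress

# Hypothetical spoke networks attached to a single hub.
SPOKES = {
    "spoke-1": "10.1.0.0/16",
    "spoke-2": "10.2.0.0/16",
    "spoke-3": "10.3.0.0/16",
}


def hub_route_table(spokes):
    """Each spoke's CIDR points at that spoke's attachment on the hub."""
    return {ipaddress.ip_network(cidr): name for name, cidr in spokes.items()}


def path_via_hub(table, source_spoke, destination):
    """Return the hop list from source_spoke to destination through the hub."""
    dest = ipaddress.ip_address(destination)
    # Spoke ranges do not overlap, so at most one entry can match.
    for network, spoke in table.items():
        if dest in network:
            return [source_spoke, "hub", spoke]
    return None  # destination is not attached to the hub


def connections_needed(n):
    """Compare links needed for n networks: full-mesh peering vs one hub."""
    return {"full mesh": n * (n - 1) // 2, "hub and spoke": n}


if __name__ == "__main__":
    table = hub_route_table(SPOKES)
    print(path_via_hub(table, "spoke-2", "10.3.4.5"))
    print(connections_needed(10))
```

With ten networks, a full mesh needs 45 peering connections while a hub needs only ten attachments, and adding an eleventh network costs one attachment instead of ten new peerings.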

Although an enterprise might use one of the above dedicated links, traffic may still flow across the internet for services that do not reside within a VPC or VNet. Thinking back to our Google Maps example and the (updated) network diagram below, the Maps API would see the public IP address of the office when a developer sitting at their desk calls it while building an application.

Some services give the customer the option to run an instance of the service either inside or outside a private network. AWS Lambda functions are one example: they run outside your VPCs by default but can be configured to connect to one. As you consume different services, review how and where their sub-resources are deployed and how that may affect the network flow of other services communicating with them.

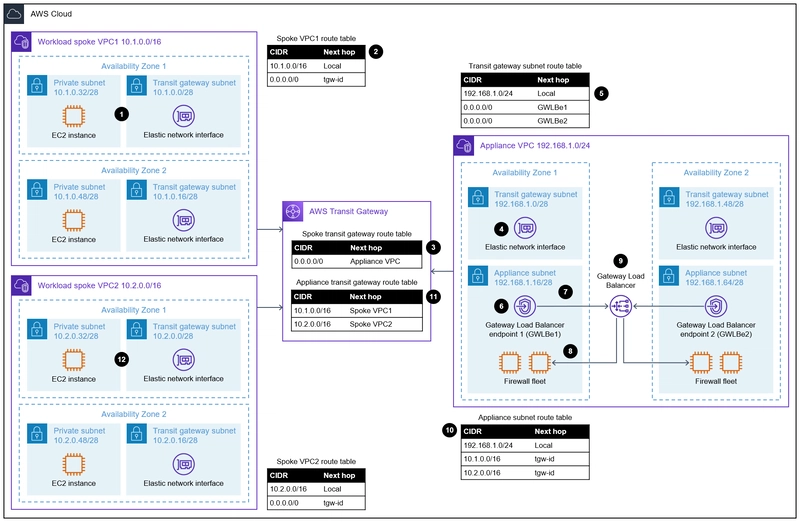

Once a private network exists, many of the same controls used on-premises can be introduced. Firewalls can be deployed as virtual resources within VPCs or VNets, and CSPs also offer different approaches to firewalling at scale, as described in their guidance.

This model allows segmentation between VPCs, since any traffic destined for another VPC is inspected by the firewall instances. Segmentation like this may be driven by compliance frameworks like PCI DSS (credit card processing) or by policy from your security organization.

Within a given VPC, AWS also offers security groups, which define what traffic may enter or leave the instances they are associated with. VPC Flow Logs provide insight into what traffic is being sent across the network and whether it was accepted or rejected. Azure and GCP provide similar offerings for granular traffic filtering (network security groups and VPC firewall rules, respectively) and flow logs.
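Flow logs and security groups are often used together: a rejected flow in the logs prompts the question of which rule would have allowed it. The sketch below parses records in the default AWS VPC Flow Logs field order and checks them against a simplified, allow-only rule list in the spirit of a security group. The `InboundRule` class, the `WEB_SG` rules, and the sample records (including account and interface IDs) are hypothetical; this is not an AWS SDK API.

```python
import ipaddress
from dataclasses import dataclass

# Field order of the default AWS VPC Flow Logs record format (version 2).
FIELDS = [
    "version", "account_id", "interface_id", "srcaddr", "dstaddr",
    "srcport", "dstport", "protocol", "packets", "bytes",
    "start", "end", "action", "log_status",
]


def parse_record(line):
    """Turn one space-separated flow log record into a dict (None if no data)."""
    values = line.split()
    if len(values) != len(FIELDS):
        raise ValueError(f"expected {len(FIELDS)} fields, got {len(values)}")
    record = dict(zip(FIELDS, values))
    # NODATA/SKIPDATA records use "-" placeholders instead of flow details.
    return record if record["log_status"] == "OK" else None


@dataclass(frozen=True)
class InboundRule:
    """Hypothetical, simplified security-group rule (allow-only)."""
    protocol: int   # IANA protocol number: 6 = TCP, 17 = UDP
    from_port: int
    to_port: int
    source: str     # CIDR allowed to connect


def allowed(rules, record):
    """Allow if any rule matches; with no match, traffic is implicitly denied."""
    src = ipaddress.ip_address(record["srcaddr"])
    protocol = int(record["protocol"])
    port = int(record["dstport"])
    return any(
        rule.protocol == protocol
        and rule.from_port <= port <= rule.to_port
        and src in ipaddress.ip_network(rule.source)
        for rule in rules
    )


# Hypothetical rules for a web server: HTTPS from anywhere, SSH from the VPC.
WEB_SG = [
    InboundRule(6, 443, 443, "0.0.0.0/0"),
    InboundRule(6, 22, 22, "10.0.0.0/16"),
]

# Hypothetical records; account and interface IDs are placeholders.
SAMPLE = [
    "2 123456789012 eni-0example 203.0.113.5 10.0.1.10 51544 443 6 10 5200 1700000000 1700000060 ACCEPT OK",
    "2 123456789012 eni-0example 198.51.100.7 10.0.1.10 40122 22 6 3 180 1700000000 1700000060 REJECT OK",
]

if __name__ == "__main__":
    for line in SAMPLE:
        rec = parse_record(line)
        print(rec["srcaddr"], rec["dstport"], rec["action"], "sg allows:", allowed(WEB_SG, rec))
```

The SSH attempt from outside the VPC matches no rule, which lines up with its REJECT. Two caveats the sketch ignores: real security groups are stateful, so return traffic for an allowed flow is permitted automatically, and a REJECT in the logs can also come from a network ACL rather than a security group.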

Conclusion

- Cloud networking maintains familiar principles but with virtualized components - While cloud providers introduce new terminology and virtual resources, the fundamental networking concepts from on-premises environments still apply.

- Network design flexibility comes with configuration responsibility - Despite the convenience of cloud services, organizations must still carefully design their network architecture with proper segmentation and security controls.

- Connectivity options require strategic planning - Whether using VPC/VNet peering, transit gateways, or dedicated physical connections, each approach has specific use cases, limitations, and scaling considerations.

- Shared responsibility means customers own significant network security - Cloud providers secure the underlying infrastructure, but customers remain responsible for securing their virtual networks, implementing firewalls, and configuring access controls.

- Multi-region and hybrid architectures increase complexity - As organizations expand their cloud footprint across regions or maintain hybrid environments, network design becomes increasingly important to ensure consistent connectivity, performance, and security.

In the next blog post we will revisit the topic of network security, now that we have a full picture of what a modern enterprise network might contain. This will include an exploration of zero trust network access tools, how they differ from client VPNs, and the growing role of identity in network security.
