Enterprise Networks Unveiled: A Software Engineer's Guide to the Basics (Part 6)

Until now, this blog series has focused on the physical side of networking. However, it is important to consider how virtualization impacts the networking constructs we've previously discussed. Virtualization can be defined as "a process that allows a computer to share its hardware resources with multiple digitally separated environments. Each virtualized environment runs within its allocated resources, such as memory, processing power, and storage." (Credit to AWS)

Virtual Machines

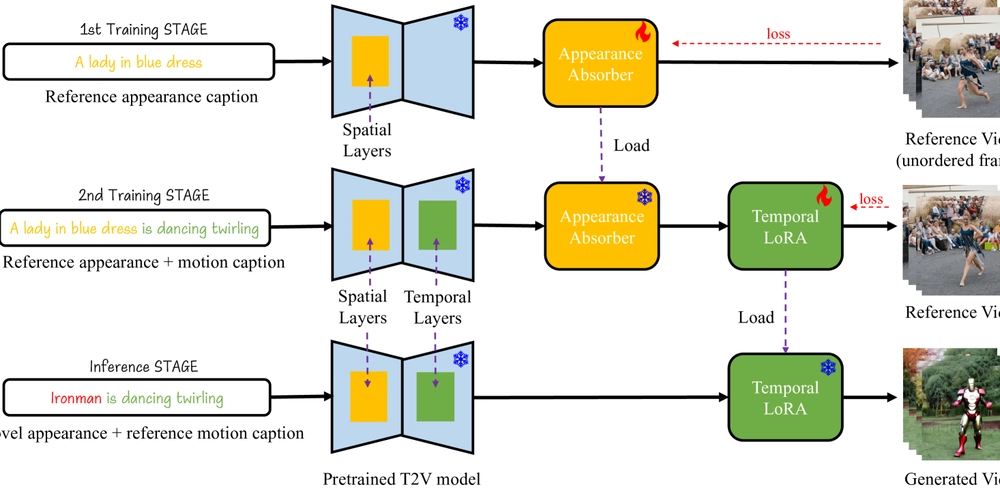

When virtualization first became popular, it was achieved via "virtual machines," which allowed multiple servers to run on top of one physical host. Each had its own operating system and dedicated resources drawn from the pool available on the host. As the technology improved, it led to clusters that could withstand the failure of a host without impacting the availability of the virtual machines. VMware (Broadcom) and Nutanix are two examples of vendors that operate in this space, as are the major cloud service providers (AWS, Azure, Google, etc.). The system that orchestrates these virtual machines is known as a hypervisor. As with other resources like disks, hypervisors can also provide virtual network resources, as shown below (credit to Parallels).

As noted above, virtualization can also assist with resiliency in the network. With virtual network interfaces, packets can be redirected if the underlying host goes down. Hypervisors can also make use of virtual versions of the switches, routers, and load balancers that we covered in Part 3.

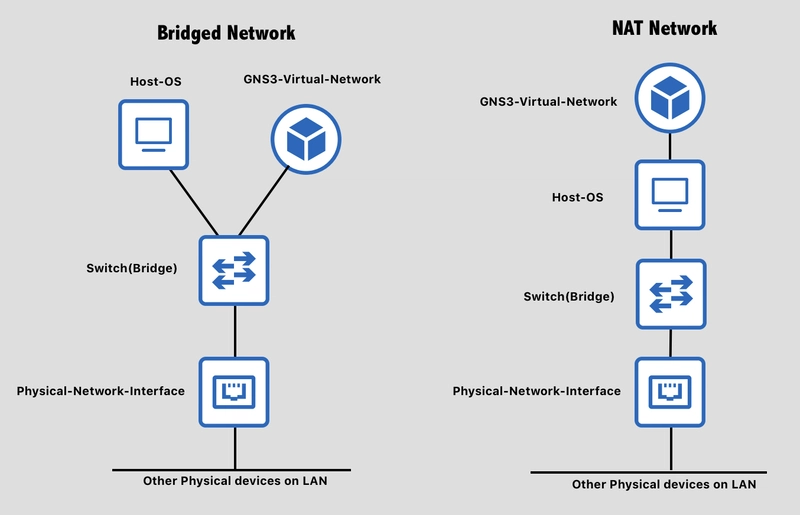

There are a few different options for interacting with resources outside of the virtual environment. The hypervisor can use the network address translation (NAT) technique mentioned in the last post to present the IP address of the host to other devices. Another approach is known as bridged, where the virtual interfaces appear directly as another device on the network (and would get their own IP address if auto assignment is enabled). Finally, there is also a host-only mode that allows virtual machines to talk to each other, but not to other devices on the network. The image below, from this course's lab, highlights the difference between the bridged and NAT approaches.
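To make the NAT idea more concrete, here is a minimal sketch of a source-NAT table. It is a toy model, not how any particular hypervisor implements translation: the `NatTable` class, the VM addresses, and the starting port of 40000 are all assumptions chosen for illustration. In bridged mode no such table is needed, because each VM is visible on the network under its own address.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class NatTable:
    """Toy source-NAT table: many private VM addresses share one host address."""

    host_ip: str
    # Hypothetical starting point for translated ports (an assumption).
    _next_port: count = field(default_factory=lambda: count(40000))
    _mappings: dict = field(default_factory=dict)

    def translate_outbound(self, vm_ip: str, vm_port: int) -> tuple[str, int]:
        """Return the (ip, port) that outside devices see for this VM flow."""
        key = (vm_ip, vm_port)
        if key not in self._mappings:
            # Allocate a fresh host port so replies can be matched to the VM.
            self._mappings[key] = next(self._next_port)
        return self.host_ip, self._mappings[key]

    def translate_inbound(self, host_port: int):
        """Map a reply arriving on a host port back to the original VM flow."""
        for key, port in self._mappings.items():
            if port == host_port:
                return key
        return None  # No known flow: a real NAT would drop the packet.


if __name__ == "__main__":
    nat = NatTable(host_ip="192.168.1.2")
    # Two hypothetical VMs on a private network share the host's address.
    print(nat.translate_outbound("10.0.2.15", 51000))
    print(nat.translate_outbound("10.0.2.16", 51000))
    print(nat.translate_inbound(40001))
```

Outside devices only ever see the host address, which is why unsolicited inbound traffic cannot reach a NAT-ed VM unless a mapping already exists.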

Container Networking

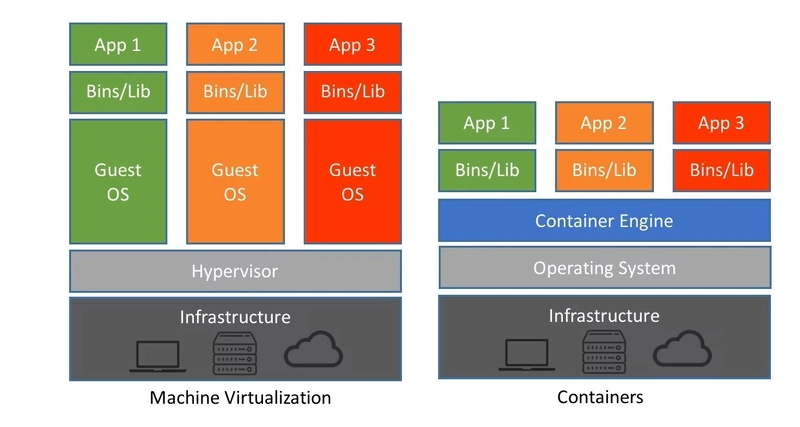

Virtualization took another major step forward when Docker containers came on the scene. Although the underlying technology had been around for a while, Docker greatly increased its popularity. While containers share some similarities with virtual machines, they are able to share the operating system kernel of the host (while retaining their own minimal filesystem), which makes them more "lightweight" than VMs, with the tradeoff of being less isolated. Virtual machines and containers can be used together; it is not an either-or situation. The image below from here captures how the OS is shared for containers, while each VM has its own operating system.

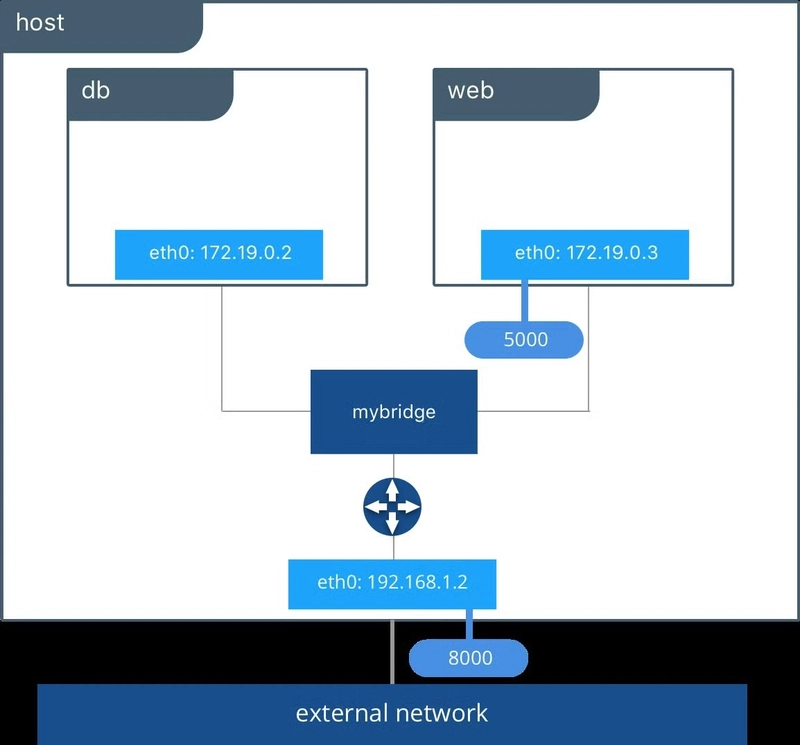

In terms of networking, Docker offers approaches similar to those for VMs. Virtual network interfaces can be used to enable different models of communication. By default, Docker uses a bridged approach for inbound traffic. When a request reaches a published port on the host, it is directed into a container as shown below (from this post). If a container needs to make outbound calls, the request is NAT-ed to the host's 192.168.1.2 address by default.
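The published-port behavior can be sketched as a simple forwarding table. This is an illustrative model only, not Docker's implementation: the helper names, container names, and container addresses are hypothetical, and the parser handles only the simple `HOST_PORT:CONTAINER_PORT` form used with `docker run -p`.

```python
def parse_publish(spec: str) -> tuple[int, int]:
    """Parse the simple 'HOST_PORT:CONTAINER_PORT' form, e.g. '8080:80'."""
    host_port, container_port = spec.split(":")
    return int(host_port), int(container_port)


def build_forwarding_table(
    published: dict[str, list[str]],
    container_ips: dict[str, str],
) -> dict[int, tuple[str, int]]:
    """Map each published host port to (container_ip, container_port)."""
    table: dict[int, tuple[str, int]] = {}
    for name, specs in published.items():
        for spec in specs:
            host_port, container_port = parse_publish(spec)
            if host_port in table:
                # A host port can only be forwarded to one destination.
                raise ValueError(f"host port {host_port} is already published")
            table[host_port] = (container_ips[name], container_port)
    return table


if __name__ == "__main__":
    # Hypothetical containers and bridge-network addresses (assumptions).
    table = build_forwarding_table(
        published={"web": ["8080:80"], "api": ["9000:3000"]},
        container_ips={"web": "172.17.0.2", "api": "172.17.0.3"},
    )
    # A request to the host on port 8080 is forwarded to the "web" container.
    print(table[8080])
```

The duplicate-port check mirrors the practical constraint that two containers cannot both publish the same host port on the same host address.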

The host and overlay network drivers are also popular options. The host driver removes network-level isolation between the host and container, meaning the container uses the host's IP address (commonly used for performance-critical applications where network isolation isn't a concern). The overlay option allows a virtual network to exist between multiple clustered hosts in approaches like Docker Swarm mode. The full list of network driver options can be found here.

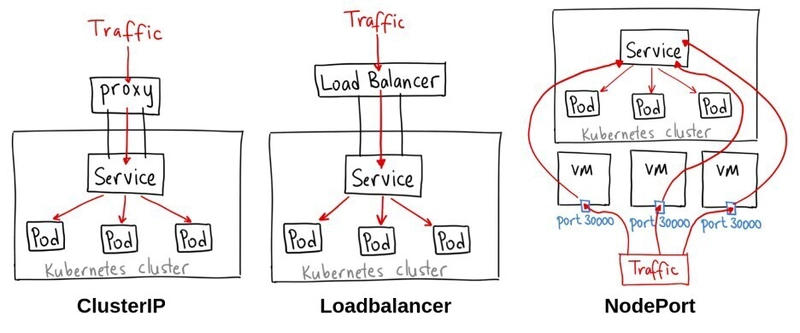

Similar to VMs, containers needed an orchestrator for multi-host deployments. While there were a few in the running, Kubernetes came out on top. Kubernetes introduces another layer of network virtualization for its resources such as nodes (another name for a host in the cluster), pods, and services. Services act as an internal-to-the-cluster load balancer for pods. Pods are defined as a group of one or more containers, with shared storage and network resources, and a specification for how to run the containers. Pods, services, and nodes all get their own non-overlapping IP addresses, as detailed here. There are also a number of options to expose those services to clients outside of the cluster, as shown below (credit to Ahmet Alp Balkan, CC BY-SA 4.0).
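The non-overlapping requirement can be checked with Python's standard `ipaddress` module. The sketch below is illustrative: the `find_overlaps` helper and the three CIDR ranges are hypothetical values chosen for the example, not ones read from a real cluster.

```python
import ipaddress
from itertools import combinations


def find_overlaps(ranges: dict[str, str]) -> list[tuple[str, str]]:
    """Return every pair of named CIDR ranges that share addresses."""
    networks = {name: ipaddress.ip_network(cidr) for name, cidr in ranges.items()}
    return [
        (name_a, name_b)
        for (name_a, net_a), (name_b, net_b) in combinations(networks.items(), 2)
        if net_a.overlaps(net_b)
    ]


if __name__ == "__main__":
    # Hypothetical cluster ranges (assumptions for illustration only).
    cluster = {
        "nodes": "192.168.1.0/24",
        "pods": "10.244.0.0/16",
        "services": "10.96.0.0/12",
    }
    print(find_overlaps(cluster))  # an empty list means no conflicts

    # A misconfigured pod range that falls inside the service range.
    broken = dict(cluster, pods="10.96.0.0/16")
    print(find_overlaps(broken))
```

An empty result means each resource type has its own address space, so an IP address alone identifies whether a destination is a node, a pod, or a service.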

There are various options that implement the standardized networking interfaces (known as the container network interface or CNI) that Kubernetes has defined. Cloud providers like AWS have made their own for their virtual private cloud networks, and there are other popular providers like Cilium, Flannel, or Calico. This means not all Kubernetes clusters will have exactly the same networking approach. A Kubernetes cluster network might use one of the other reserved IP ranges we discussed in the last post if it is not using something like the 10.0.0.0/8 network that the rest of your company's devices use. Reviewing the specific configuration of the resources you manage will provide some insight into how networking is handled at your company.
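As a small illustration of choosing a range that does not collide with the company network, the sketch below lists which of the RFC 1918 private blocks are still free given the ranges already in use. It assumes the "reserved IP ranges" from the last post are the RFC 1918 blocks, and the `free_private_ranges` helper is a hypothetical name introduced for this example.

```python
import ipaddress

# The three private IPv4 blocks defined by RFC 1918.
RFC1918_BLOCKS = [
    ipaddress.ip_network(cidr)
    for cidr in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")
]


def free_private_ranges(in_use: list[str]) -> list[str]:
    """Return the private blocks that do not overlap any range already in use."""
    used = [ipaddress.ip_network(cidr) for cidr in in_use]
    return [
        str(block)
        for block in RFC1918_BLOCKS
        if not any(block.overlaps(network) for network in used)
    ]


if __name__ == "__main__":
    # If company devices occupy 10.0.0.0/8, the cluster could use either of
    # the two remaining private blocks (or a subnet of one).
    print(free_private_ranges(["10.0.0.0/8"]))
```

In practice a cluster would typically be given a subnet of one of these blocks rather than the whole block, but the overlap check is the same.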

Local Development

Local development can also take advantage of virtualization of operating systems and/or containers. Docker Desktop, for example, leverages a virtual machine under the covers. The full details of how it works can be found here. Two alternative tools, Rancher Desktop and Podman Desktop, use a similar VM tooling approach.

These tools usually allow for a local simplified version of Kubernetes as well, allowing developers to test configurations locally before deploying to a "live" environment. These tools leverage the networking stack of your laptop as a means of communicating with the internet or other private network destinations using the same bridged or NAT-ed approaches.
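When testing locally, a quick way to confirm that a port published by a local container or cluster is actually reachable from your laptop is a plain TCP connection attempt. The sketch below uses only the standard library; the `is_port_open` helper and port 8080 are assumptions for illustration, and the check only confirms that something accepts connections, not which process it is.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to (host, port) succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


if __name__ == "__main__":
    # Hypothetical: a local container published on port 8080.
    print(is_port_open("127.0.0.1", 8080))
```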

Conclusion

Virtualization has fundamentally transformed enterprise networking, creating layers of abstraction that allow for greater flexibility, better resource utilization, and improved fault tolerance. From virtual machines with their dedicated operating systems to lightweight containers sharing the host kernel, these technologies bring the same networking principles we've explored into virtualized environments.

While the terminology and specific implementations may differ across platforms, whether it's VMware's virtual switches or Kubernetes' service abstractions, the underlying TCP/IP packets and routing concepts remain consistent. Understanding how physical networking principles apply in virtualized contexts is essential for today's software engineers, especially as containerization continues to dominate modern application development and deployment. As we move forward to explore cloud networking in the next post, remember that these virtualization concepts form the foundation upon which cloud providers have built their specialized networking offerings.
