Elixir for Machine Learning. Part 1 - Theory


Artificial intelligence is playing an increasingly important role across many fields. Many companies already use AI to assist users on their portals, while others are developing their own neural networks to solve specialized tasks.

While Python dominates the ML landscape, Elixir has found its niche in specific areas: real-time data processing systems, model inference services (for example, serving pre-trained Bumblebee models with Nx.Serving), and distributed machine learning systems. Companies such as DockYard and Dashbit have successfully implemented ML solutions using Elixir in production environments.

This article is divided into two parts: Theory and Practice. In the first part, we will explore the basics of machine learning, get familiar with key concepts, and learn about the libraries that help create neural networks in Elixir.

What Are Machine Learning and Neural Networks?

Machine Learning (ML) is an approach to creating systems that can learn from data and improve performance over time without explicit programming. Instead of writing specific instructions to solve a task, we build models that adapt and "learn" based on experience.

Neural networks are one of the most popular methods in machine learning, inspired by how the human brain works. They consist of interconnected nodes (neurons), organized into layers:

- The input layer receives raw data (e.g., image pixels)

- Hidden layers process information, identifying increasingly complex patterns

- The output layer provides the final result (e.g., object classification)

Each neuron takes input signals, processes them by applying an activation function to the weighted sum of inputs, and passes the result along the network. Connections between neurons carry weight coefficients that determine signal importance.
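To make this concrete, here is a minimal sketch of a single neuron in plain Elixir (no Nx), assuming hand-picked weights rather than learned ones. The Neuron module and the example values are illustrative only; real libraries perform the same computation on whole tensors at once.

```elixir
defmodule Neuron do
  # Weighted sum of the inputs plus a bias: z = w1*x1 + w2*x2 + ... + b
  def weighted_sum(inputs, weights, bias) do
    inputs
    |> Enum.zip(weights)
    |> Enum.reduce(bias, fn {x, w}, acc -> acc + x * w end)
  end

  # Two common activation functions
  def relu(z), do: max(z, 0.0)
  def sigmoid(z), do: 1.0 / (1.0 + :math.exp(-z))

  # One neuron: apply the activation function to the weighted sum
  def forward(inputs, weights, bias, activation) do
    inputs
    |> weighted_sum(weights, bias)
    |> activation.()
  end
end

# Usage with hypothetical weights
Neuron.forward([1.0, 2.0], [0.5, 0.25], 0.1, &Neuron.relu/1)
```

Here the weighted sum is 0.1 + 1.0 × 0.5 + 2.0 × 0.25, so the ReLU output is approximately 1.1. Swapping in &Neuron.sigmoid/1 squashes the same sum into the range (0, 1).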

The training process involves adjusting these weights so that the network produces accurate results. This happens through:

- Feeding many training examples

- Calculating the error between the network's predictions and actual values

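- Adjusting weights to reduce this error: backpropagation computes how much each weight contributed to the error (the gradient), and an optimizer such as gradient descent updates the weights accordingly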
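The loop below is a minimal sketch of this process for the smallest possible "network": one linear neuron y = w * x + b, trained with mean squared error. Because the model is so small, the gradients are written out by hand instead of being computed by backpropagation; the TinyTrainer module, the learning rate, and the epoch count are illustrative assumptions.

```elixir
defmodule TinyTrainer do
  # Mean squared error of the model y_hat = w * x + b over {x, y} pairs
  def mse(data, w, b) do
    total = data |> Enum.map(fn {x, y} -> :math.pow(w * x + b - y, 2) end) |> Enum.sum()
    total / length(data)
  end

  # One gradient-descent step. For this tiny model the MSE gradients are:
  #   dL/dw = (2/n) * sum((w*x + b - y) * x)
  #   dL/db = (2/n) * sum(w*x + b - y)
  def step(data, {w, b}, learning_rate) do
    n = length(data)

    {grad_w, grad_b} =
      Enum.reduce(data, {0.0, 0.0}, fn {x, y}, {gw, gb} ->
        error = w * x + b - y
        {gw + 2 * error * x / n, gb + 2 * error / n}
      end)

    # Move each parameter a small step against its gradient
    {w - learning_rate * grad_w, b - learning_rate * grad_b}
  end

  # Repeat the step for a fixed number of epochs, starting from zero weights
  def train(data, epochs, learning_rate) do
    Enum.reduce(1..epochs, {0.0, 0.0}, fn _epoch, params ->
      step(data, params, learning_rate)
    end)
  end
end

# Usage: points on the line y = 2x + 1
data = for x <- [0.0, 1.0, 2.0, 3.0], do: {x, 2 * x + 1}
TinyTrainer.train(data, 2000, 0.05)
# ≈ {2.0, 1.0}
```

Each epoch feeds all examples, measures the error, and nudges the weights, which is exactly the three-step cycle described above, just without the layers.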

Key Concepts in Machine Learning

Models and Their Types

Model — a mathematical or algorithmic construct that learns from data to perform a specific task. Models come in various types:

- Linear models: Relatively simple models that assume a linear relationship between input and output data. Examples: linear regression, logistic regression

- Neural networks: Multi-layered models capable of identifying complex nonlinear dependencies. Used in computer vision, natural language processing, and other complex problems

- Ensemble models: Combinations of multiple models to produce more accurate results. Examples: random forests, gradient boosting

Tensors and Their Role in ML

Tensor — a generalization of scalars, vectors, and matrices to multi-dimensional data arrays. Tensors are fundamental data structures in modern machine learning systems:

- Scalar: Rank-0 tensor (0-D). Example: the number 5

- Vector: Rank-1 tensor (1-D). Example: [1, 2, 3]

- Matrix: Rank-2 tensor (2-D). Example: [[1, 2, 3], [4, 5, 6]]

- Multi-dimensional tensor: Rank-3+ tensor (3-D and higher). Example: [[[1, 2], [3, 4]], [[5, 6], [7, 8]]]
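A quick way to see how rank and shape relate is to compute the shape of nested Elixir lists directly. This is a plain-Elixir sketch for illustration (the NestedShape module is hypothetical); with Nx, Nx.shape/1 and Nx.rank/1 report the same information for real tensors, e.g. Nx.tensor([[1, 2, 3], [4, 5, 6]]) has shape {2, 3}.

```elixir
defmodule NestedShape do
  # A plain number is a scalar: rank 0, empty shape
  def shape(x) when is_number(x), do: {}

  # A non-empty list adds one dimension in front of its elements' shape.
  # All elements must share one shape; a ragged list fails the match below.
  def shape([_ | _] = list) do
    [inner] = list |> Enum.map(&shape/1) |> Enum.uniq()
    List.to_tuple([length(list) | Tuple.to_list(inner)])
  end

  # Rank = number of dimensions = number of entries in the shape
  def rank(x), do: tuple_size(shape(x))
end

NestedShape.shape([[1, 2, 3], [4, 5, 6]])
# {2, 3}
NestedShape.rank([[[1, 2], [3, 4]], [[5, 6], [7, 8]]])
# 3
```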

Tensors are used to represent and process various types of data in ML:

- Images (4D tensors: [batch_size, height, width, channels])

- Text (sequences of vectors representing words or tokens)

- Time series (sequences of observations over time)

Learning Types

Learning — the process by which a model adjusts its parameters based on data:

- Supervised Learning: The model learns from labeled data where the correct answer is known for each input example. Example tasks: image classification, price prediction, speech recognition

- Unsupervised Learning: The model learns from unlabeled data, uncovering hidden structures and patterns. Example tasks: clustering, dimensionality reduction, anomaly detection

- Reinforcement Learning: The model learns by interacting with an environment, receiving rewards or penalties for its actions. Applied in robotics, gaming, autonomous systems
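As a small illustration of unsupervised learning, here is a sketch of k-means clustering (Lloyd's algorithm) on one-dimensional numbers in plain Elixir. No labels are involved: the algorithm discovers the groups itself. The KMeans1D module, the starting centroids, and the data are assumptions for illustration; Scholar, covered later in this article, provides K-means on Nx tensors.

```elixir
defmodule KMeans1D do
  # Alternate the assignment and update steps until the centroids stop moving
  def fit(points, initial_centroids, max_iter \\ 100) do
    Enum.reduce_while(1..max_iter, initial_centroids, fn _i, centroids ->
      new_centroids = update(points, centroids)
      if new_centroids == centroids, do: {:halt, centroids}, else: {:cont, new_centroids}
    end)
  end

  # Assignment step: index of the nearest centroid for a point
  def nearest(point, centroids) do
    centroids
    |> Enum.with_index()
    |> Enum.min_by(fn {c, _i} -> abs(point - c) end)
    |> elem(1)
  end

  # Update step: move each centroid to the mean of the points assigned to it
  defp update(points, centroids) do
    groups = Enum.group_by(points, &nearest(&1, centroids))

    centroids
    |> Enum.with_index()
    |> Enum.map(fn {c, i} ->
      case Map.get(groups, i) do
        # keep a centroid that received no points
        nil -> c
        members -> Enum.sum(members) / length(members)
      end
    end)
  end
end

KMeans1D.fit([1.0, 1.5, 2.0, 10.0, 10.5, 11.0], [0.0, 5.0])
# [1.5, 10.5]
```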

Hyperparameters and Their Tuning

Hyperparameters — model settings defined before training and not altered during the process:

- Architectural hyperparameters: Number of layers in a neural network, number of neurons per layer, types of activation functions

- Optimization parameters: Learning rate, batch size, number of training epochs

- Regularization parameters: L1/L2 regularization coefficients, dropout rate to prevent overfitting

Hyperparameter tuning is typically done using methods like grid search, random search, or Bayesian optimization.
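Grid search is the simplest of these methods: try every combination of candidate values and keep the best one. The sketch below assumes a scoring function that returns a validation loss (lower is better). In practice that function would train and evaluate a model; here it is replaced by a hypothetical stand-in so the example runs on its own. Random search would instead sample a fixed number of combinations from the same grid.

```elixir
defmodule GridSearch do
  # Evaluate every combination and return {best_params, best_score}.
  # `grid` maps each hyperparameter name to its candidate values;
  # `score_fun` takes one combination (a map) and returns a number.
  def run(grid, score_fun) do
    grid
    |> combinations()
    |> Enum.map(fn params -> {params, score_fun.(params)} end)
    |> Enum.min_by(fn {_params, score} -> score end)
  end

  # Cartesian product of all candidate values, as a list of maps
  def combinations(grid) do
    Enum.reduce(grid, [%{}], fn {name, values}, acc ->
      for partial <- acc, value <- values, do: Map.put(partial, name, value)
    end)
  end
end

grid = %{learning_rate: [0.1, 0.01, 0.001], batch_size: [32, 64, 128]}

# Hypothetical stand-in for "train, then measure validation loss"
fake_validation_loss = fn %{learning_rate: lr, batch_size: bs} ->
  abs(lr - 0.01) * 100 + abs(bs - 64) / 64
end

GridSearch.run(grid, fake_validation_loss)
# {%{batch_size: 64, learning_rate: 0.01}, 0.0}
```

The cost grows multiplicatively with every hyperparameter added (3 × 3 = 9 training runs here), which is why random search and Bayesian optimization become attractive for larger grids.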

Model Evaluation Metrics

Metrics — quantitative measures used to assess model performance:

For classification tasks:

- Accuracy: Proportion of correct predictions among all predictions

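- Precision & Recall: Precision is the share of predicted positives that are actually positive (it drops with false positives); recall is the share of actual positives the model finds (it drops with false negatives)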

- F1-score: Harmonic mean of precision and recall

- ROC-AUC: Area under the ROC curve, indicating the model's ability to distinguish classes

For regression tasks:

- Mean squared error (MSE): Average of squared differences between predicted and actual values

- Mean absolute error (MAE): Average of absolute differences between predicted and actual values

- Coefficient of determination (R²): Proportion of the variance in the actual values that the model explains (1.0 is a perfect fit)
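These metrics are short formulas, so a plain-Elixir sketch shows exactly what each one computes. It assumes binary labels (1 = positive, 0 = negative) for the classification part and plain lists of numbers for regression; the Metrics module is illustrative, and ROC-AUC is left out because it needs predicted scores rather than hard labels.

```elixir
defmodule Metrics do
  # ---- Classification (binary labels: 1 = positive, 0 = negative) ----

  # Share of predictions that match the true label
  def accuracy(y_true, y_pred) do
    correct = Enum.zip(y_true, y_pred) |> Enum.count(fn {t, p} -> t == p end)
    correct / length(y_true)
  end

  # Of everything predicted positive, the share that really is positive
  # (raises ArithmeticError if nothing was predicted positive)
  def precision(y_true, y_pred) do
    {tp, false_pos, _false_neg} = counts(y_true, y_pred)
    tp / (tp + false_pos)
  end

  # Of everything that really is positive, the share the model found
  def recall(y_true, y_pred) do
    {tp, _false_pos, false_neg} = counts(y_true, y_pred)
    tp / (tp + false_neg)
  end

  # Harmonic mean of precision and recall
  def f1(y_true, y_pred) do
    p = precision(y_true, y_pred)
    r = recall(y_true, y_pred)
    2 * p * r / (p + r)
  end

  # Count {true positives, false positives, false negatives}
  defp counts(y_true, y_pred) do
    Enum.zip(y_true, y_pred)
    |> Enum.reduce({0, 0, 0}, fn
      {1, 1}, {tp, fp, fneg} -> {tp + 1, fp, fneg}
      {0, 1}, {tp, fp, fneg} -> {tp, fp + 1, fneg}
      {1, 0}, {tp, fp, fneg} -> {tp, fp, fneg + 1}
      {0, 0}, acc -> acc
    end)
  end

  # ---- Regression ----

  def mse(y_true, y_pred), do: mean(Enum.zip_with(y_true, y_pred, fn t, p -> (t - p) * (t - p) end))

  def mae(y_true, y_pred), do: mean(Enum.zip_with(y_true, y_pred, fn t, p -> abs(t - p) end))

  # R² = 1 - (sum of squared residuals / total sum of squares around the mean)
  def r2(y_true, y_pred) do
    m = mean(y_true)
    ss_res = Enum.zip_with(y_true, y_pred, fn t, p -> (t - p) * (t - p) end) |> Enum.sum()
    ss_tot = y_true |> Enum.map(fn t -> (t - m) * (t - m) end) |> Enum.sum()
    1 - ss_res / ss_tot
  end

  defp mean(values), do: Enum.sum(values) / length(values)
end
```

For y_true = [1, 0, 1, 1, 0, 0] and y_pred = [1, 0, 0, 1, 1, 0] there are 2 true positives, 1 false positive, and 1 false negative, so precision, recall, and F1 all come out to 2/3 while accuracy is 4/6.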

The choice of metric depends on the specific task and business context. For instance, in medical diagnostics, minimizing false negatives is critical, while in recommender systems, the quality of top recommendations may be more important.

Now that we’ve covered the key concepts of machine learning, let’s move on to the tools that enable their application in the Elixir ecosystem.

Elixir Tools for Machine Learning

The machine learning ecosystem in Elixir is rapidly evolving, offering increasingly powerful and developer-friendly tools. Let’s explore the key libraries that enable ML on the BEAM platform.

Numerical Elixir (Nx): The Foundation for Computation

Nx is the backbone of Elixir's ML ecosystem, developed by the Dashbit team under the leadership of José Valim (the creator of Elixir). This library provides high-performance numerical computing and support for multi-dimensional tensors, which are critical for machine learning.

Key Features of Nx:

- Tensor operations: A comprehensive set of functions for manipulating multi-dimensional data arrays.

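- Numeric types: Every tensor has an explicit type (e.g., s64, f32, f64, bf16), giving control over precision and memory use.

- Computation compilation: Numerical definitions can be compiled by pluggable compilers such as EXLA into optimized code for CPU or GPU.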

- Automatic differentiation: Essential for backpropagation algorithms.
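Automatic differentiation is easier to trust once you see how small its core idea is. Nx's grad works in reverse mode, the form backpropagation uses; the sketch below uses forward mode with dual numbers instead, a different but closely related technique chosen only because it fits in a few lines of plain Elixir. The Dual module is hypothetical and supports just addition, multiplication, and sine.

```elixir
defmodule Dual do
  # A dual number {value, derivative} carries a function's value together
  # with its derivative; every operation applies the matching calculus rule.
  def var(x), do: {x, 1.0}
  def const(c), do: {c, 0.0}

  # Sum rule
  def add({a, da}, {b, db}), do: {a + b, da + db}
  # Product rule
  def mul({a, da}, {b, db}), do: {a * b, da * b + a * db}
  # Chain rule for sin
  def sin({a, da}), do: {:math.sin(a), :math.cos(a) * da}

  # Derivative of a function built from the operations above, at point x
  def derivative(f, x) do
    {_value, d} = f.(var(x))
    d
  end
end

# f(x) = x * x + 3 * x, so f'(x) = 2x + 3
f = fn x -> Dual.add(Dual.mul(x, x), Dual.mul(Dual.const(3.0), x)) end
Dual.derivative(f, 2.0)
# 7.0
```

No symbolic math and no finite-difference approximation is involved: the exact derivative falls out of applying the rules step by step, which is the same principle Nx relies on when differentiating a defn.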

A particularly powerful feature of Nx is defn (numerical definitions): functions written with defn are traced into a computation graph that a compiler such as EXLA can turn into efficient low-level code:

```elixir
defmodule MathOps do
  import Nx.Defn

  # Element-wise a * b + c, compiled as a numerical definition
  defn multiply_and_add(a, b, c) do
    a * b + c
  end
end

# Usage
x = Nx.tensor([1, 2, 3])
y = Nx.tensor([4, 5, 6])
z = Nx.tensor([7, 8, 9])

MathOps.multiply_and_add(x, y, z)
# Result: Nx.tensor([11, 18, 27])
```

Supported Nx Backends:

Nx operates via a backend system that determines where and how computations are executed:

- EXLA: Integration with XLA (Accelerated Linear Algebra) from Google, enabling compilation for CPU, GPU, and TPU.

- Torchx: Connection to PyTorch, leveraging its optimized CUDA operations.

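- ONNX: Models in the Open Neural Network Exchange format can be run through Ortex (ONNX Runtime bindings with its own Nx backend) or converted to Axon with AxonOnnx.

- BinaryBackend: The default pure-Elixir backend (Nx.BinaryBackend) with no external dependencies; portable, but much slower than compiled backends.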

Backend selection depends on the task and available hardware:

```elixir
# Use the EXLA backend globally
Nx.global_default_backend(EXLA.Backend)

# Move one tensor to the Torchx (LibTorch) backend on a CUDA GPU
Nx.backend_transfer(tensor, {Torchx.Backend, device: :cuda})
```

Axon: Neural Networks with a Functional Approach

Axon is a high-level framework for defining, training, and evaluating neural networks, built on top of Nx. Its standout feature is its functional design, which aligns perfectly with Elixir's philosophy.

Features of Axon:

- Declarative model definition: A clear pipeline approach to architecture design.

- Optimizers: Adam, SGD, RMSProp, and others (provided by the companion Polaris library in recent Axon versions).

- Loss functions: A wide range for various tasks (e.g., MSE, cross-entropy).

- Training management: Tools for early stopping, validation, and callbacks.
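The classification example below pairs a softmax output layer with the :categorical_cross_entropy loss. As a rough illustration of what those two pieces compute, here is a plain-Elixir sketch for a single example. It is not Axon's implementation, which works on batched tensors and may differ in details such as clamping; the Loss module is hypothetical.

```elixir
defmodule Loss do
  # Softmax turns raw scores (logits) into probabilities that sum to 1.
  # Subtracting the largest logit first keeps :math.exp from overflowing.
  def softmax(logits) do
    m = Enum.max(logits)
    exps = Enum.map(logits, fn z -> :math.exp(z - m) end)
    total = Enum.sum(exps)
    Enum.map(exps, fn e -> e / total end)
  end

  # Categorical cross-entropy for one example: -sum(t_i * log(p_i)),
  # where the target t is one-hot. Probabilities are clamped away from 0
  # so that :math.log never receives 0.
  def categorical_cross_entropy(one_hot, probs) do
    one_hot
    |> Enum.zip(probs)
    |> Enum.reduce(0.0, fn {t, p}, acc -> acc - t * :math.log(max(p, 1.0e-12)) end)
  end
end

# A 50/50 guess on a two-class problem costs log(2)
Loss.categorical_cross_entropy([0, 1], [0.5, 0.5])
# ≈ 0.693
```

Only the probability assigned to the true class matters, so the loss falls toward 0 as the model grows confident in the right answer and rises sharply when it is confident in the wrong one.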

Example of creating and training a classification model:

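```elixir
# Model definition
model =
  Axon.input("input", shape: {nil, 784})
  |> Axon.dense(512)
  |> Axon.relu()
  |> Axon.dense(10)
  |> Axon.softmax()

# Compilation and training.
# train_data is assumed to be an enumerable of {input_batch, one_hot_label_batch}
# tuples; Axon.Loop.run/4 returns the trained model state (the parameters).
trained_model_state =
  model
  |> Axon.Loop.trainer(:categorical_cross_entropy, :adam)
  |> Axon.Loop.metric(:accuracy)
  |> Axon.Loop.run(train_data, %{}, epochs: 5, compiler: EXLA)
```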

Advanced Capabilities of Axon:

Axon supports a wide range of layers and architectures:

- Convolutional layers: For computer vision tasks (Axon.conv).

- Recurrent networks: LSTM, GRU for sequential data.

- Transformers: Attention-based architectures for NLP can be assembled from Axon layers; Bumblebee's transformer models are built this way.

- Normalization: BatchNorm, LayerNorm for training stability.

- Regularization: Dropout, L1/L2 to combat overfitting.

Example of a convolutional network for image classification:

```elixir
# Channels-last input (Axon's default layout): {batch, height, width, channels}
model =
  Axon.input("input", shape: {nil, 28, 28, 1})
  |> Axon.conv(32, kernel_size: 3, padding: :same)
  |> Axon.relu()
  |> Axon.max_pool(kernel_size: 2)
  |> Axon.conv(64, kernel_size: 3, padding: :same)
  |> Axon.relu()
  |> Axon.max_pool(kernel_size: 2)
  |> Axon.flatten()
  |> Axon.dense(128)
  |> Axon.relu()
  |> Axon.dense(10)
  |> Axon.softmax()
```
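To see how data flows through this network, the sketch below traces output shapes and trainable parameter counts layer by layer in plain Elixir. It assumes 28×28 grayscale input in channels-last layout, 3×3 kernels with :same padding, and 2×2 pooling with stride 2; the ConvShapes module and its layer tuples are a hypothetical description format, not Axon's API.

```elixir
defmodule ConvShapes do
  # Returns [{layer, output_shape, parameter_count}] for a list of layers
  def trace(input_shape, layers) do
    {_final, rows} =
      Enum.reduce(layers, {input_shape, []}, fn layer, {shape, rows} ->
        {out, params} = apply_layer(layer, shape)
        {out, [{layer, out, params} | rows]}
      end)

    Enum.reverse(rows)
  end

  # k x k convolution with :same padding keeps height and width;
  # params = kernel weights (k * k * in_channels * filters) + one bias per filter
  defp apply_layer({:conv, filters, k}, {h, w, c}), do: {{h, w, filters}, k * k * c * filters + filters}
  # p x p max pooling with stride p shrinks height and width; no parameters
  defp apply_layer({:max_pool, p}, {h, w, c}), do: {{div(h, p), div(w, p), c}, 0}
  defp apply_layer(:flatten, {h, w, c}), do: {{h * w * c}, 0}
  # Dense layer: one weight per input-output pair plus one bias per output
  defp apply_layer({:dense, units}, {n}), do: {{units}, n * units + units}
  # Activations such as ReLU or softmax keep the shape
  defp apply_layer(:activation, shape), do: {shape, 0}
end

layers = [
  {:conv, 32, 3}, :activation, {:max_pool, 2},
  {:conv, 64, 3}, :activation, {:max_pool, 2},
  :flatten, {:dense, 128}, :activation, {:dense, 10}, :activation
]

rows = ConvShapes.trace({28, 28, 1}, layers)
rows |> Enum.map(fn {_layer, _shape, params} -> params end) |> Enum.sum()
# 421642
```

Under these assumptions 401,536 of the 421,642 parameters sit in the first dense layer: flattening the 7×7×64 feature map yields 3,136 inputs, each connected to 128 units, while the two convolutions need fewer than 19,000 weights combined.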

Explorer: Data Processing and Preparation

Explorer is a library for working with tabular data (series and dataframes), filling a role similar to Python's pandas; by default it is backed by the Rust Polars library. It integrates seamlessly with Nx, making it easy to convert data into tensors.

Key Features of Explorer:

- DataFrame API: Powerful interface for data manipulation.

- Filtering and grouping: Efficient handling of large datasets.

- Loading/saving: Support for CSV, Parquet, NDJSON, Arrow IPC, and other formats.

- Visualization: Works alongside VegaLite and Kino in Livebook for charts and interactive tables.

Example of data processing:

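```elixir
# filter/mutate are macros, so the module must be required
require Explorer.DataFrame, as: DF
alias Explorer.Series

# Loading and processing data
df =
  DF.from_csv!("titanic.csv")
  |> DF.filter(age > 18)
  |> DF.select(["survived", "age", "sex", "fare"])
  # Encode the text column "sex" as a boolean so it can become a number
  |> DF.mutate(is_male: sex == "male")

# Bucket fares with a plain Elixir function
# (Series.transform/2 runs element by element in Elixir: convenient but slow)
fare_category =
  Series.transform(df["fare"], fn
    fare when fare < 20 -> "low"
    fare when fare < 50 -> "medium"
    _ -> "high"
  end)

df = DF.put(df, "fare_category", fare_category)

# Converting to tensors for ML: one column per feature, stacked side by side
features =
  Nx.stack(
    [Series.to_tensor(df["age"]), Series.to_tensor(df["is_male"]), Series.to_tensor(df["fare"])],
    axis: 1
  )

labels = Series.to_tensor(df["survived"])
```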

Bumblebee: Pre-trained Models for Practical Use

Bumblebee provides pre-trained models and tools for deploying them, simplifying the use of state-of-the-art models without training from scratch.

Key Features:

- Pre-trained models: BERT, GPT-2, ResNet, CLIP, Stable Diffusion, and more.

- Built-in pipelines: Ready-made solutions for common tasks.

- Hugging Face integration: Loads models directly from the Hugging Face Hub, provided their architecture is implemented in Bumblebee.

- Optimized inference: Efficient execution on various hardware.

Example for sentiment analysis:

```elixir
{:ok, model_info} = Bumblebee.load_model({:hf, "distilbert-base-uncased-finetuned-sst-2-english"})
{:ok, tokenizer} = Bumblebee.load_tokenizer({:hf, "distilbert-base-uncased-finetuned-sst-2-english"})

# Sentiment analysis is exposed as a text classification serving
serving =
  Bumblebee.Text.text_classification(model_info, tokenizer,
    top_k: 1,
    compile: [batch_size: 1, sequence_length: 128],
    defn_options: [compiler: EXLA]
  )

Nx.Serving.run(serving, "I love Elixir, it's an amazing language!")
# => %{predictions: [%{label: "POSITIVE", score: 0.998}]}
```

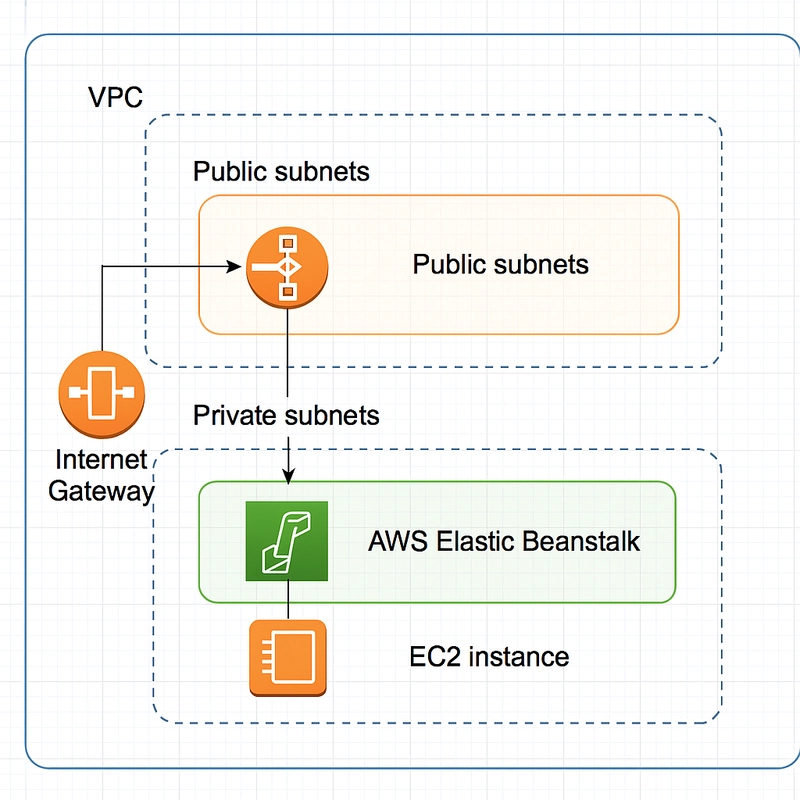

Nx.Serving: Deploying Models in Production

Nx.Serving is a tool for efficiently deploying ML models as services. It offers:

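- Request batching: Concurrent requests are automatically grouped into batches (tuned with batch_size and batch_timeout) for efficient execution.

- Partitioning and distribution: A serving can run one partition per available device and accept requests from other nodes in a cluster.

- Supervision: A serving is an ordinary OTP process that lives in your application's supervision tree.

- Monitoring: Execution emits Telemetry events for tracking performance.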

Example of an image classification service:

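```elixir
{:ok, model_info} = Bumblebee.load_model({:hf, "microsoft/resnet-50"})
{:ok, featurizer} = Bumblebee.load_featurizer({:hf, "microsoft/resnet-50"})

serving =
  Bumblebee.Vision.image_classification(
    model_info,
    featurizer,
    # Compile ahead of time for batches of up to 8 images
    compile: [batch_size: 8],
    # EXLA compiles for CPU or GPU, depending on how it was built
    defn_options: [compiler: EXLA]
  )

# Running as an OTP process (normally placed in a supervision tree);
# concurrent batched_run calls are grouped into batches of up to 8
{:ok, _pid} =
  Nx.Serving.start_link(serving: serving, name: ImageClassifier, batch_size: 8, batch_timeout: 100)

# Usage: decode the image into a {height, width, channels} tensor (stb_image package)
image = "cat.jpg" |> StbImage.read_file!() |> StbImage.to_nx()
Nx.Serving.batched_run(ImageClassifier, image)
```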

Livebook: Interactive Development and Experimentation

Livebook is an interactive environment for developing and documenting Elixir code, ideal for ML experiments. It provides:

- Jupyter-like interface: Interactive code cells.

- Built-in visualization: Charts, graphs, tables.

- Integration with ML libraries: Kino widgets and Smart cells for working with Nx, Axon, Explorer, and Bumblebee.

- Collaboration: Share and collaborate on notebooks.

- GPU execution: Hardware acceleration support.

Livebook simplifies learning, experimentation, and documentation for Elixir ML projects:

```elixir
# Example Livebook cell
Mix.install([
  {:nx, "~> 0.5"},
  {:axon, "~> 0.5"},
  {:explorer, "~> 0.5"},
  {:kino, "~> 0.8"}
])

# Interactive hyperparameter input
form =
  Kino.Control.form(
    [
      learning_rate: Kino.Input.text("Learning rate", default: "0.001"),
      epochs: Kino.Input.range("Epochs", min: 1, max: 100, step: 1, default: 10)
    ],
    submit: "Train model"
  )
```

Scholar: Traditional Machine Learning Algorithms

Scholar extends the Elixir ML ecosystem with classical algorithms, often better suited for certain tasks than deep learning:

- Linear models: Regression, SVM, logistic regression.

- Decision trees: Not part of Scholar itself; gradient-boosted trees are available through the separate EXGBoost library.

- Clustering: K-means, DBSCAN.

- Dimensionality reduction: PCA, t-SNE.

- Statistical methods: Bayesian models, density estimation.

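Example of linear regression:

```elixir
alias Scholar.Linear.LinearRegression

# Data preparation: the targets follow y = x1 + 2 * x2 + 3
x = Nx.tensor([[1.0, 1.0], [1.0, 2.0], [2.0, 2.0], [2.0, 3.0]])
y = Nx.tensor([6.0, 8.0, 9.0, 11.0])

# Model training
model = LinearRegression.fit(x, y)

# Prediction for [3.0, 5.0]: 3 + 2 * 5 + 3 = 16
LinearRegression.predict(model, Nx.tensor([[3.0, 5.0]]))
# => #Nx.Tensor<
#      f32[1]
#      [16.0]
#    >
# (the last digits may differ slightly due to floating-point rounding)
```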

Practical Application: Integration with Phoenix

It's worth noting how seamlessly ML models integrate with the Phoenix web framework, enabling powerful intelligent web applications:

```elixir
# In lib/my_app/application.ex, start the serving once, under supervision.
# MyApp.ML.sentiment_serving/0 is a hypothetical helper that builds the
# serving with Bumblebee.Text.text_classification/3 (using top_k: 1).
#
#   children = [
#     {Nx.Serving, serving: MyApp.ML.sentiment_serving(), name: SentimentAnalyzer, batch_size: 4},
#     MyAppWeb.Endpoint
#   ]

defmodule MyAppWeb.MLController do
  use MyAppWeb, :controller

  def analyze(conn, %{"text" => text}) do
    # Each request already runs in its own process; batched_run blocks it
    # while Nx.Serving groups concurrent requests into a single batch
    case Nx.Serving.batched_run(SentimentAnalyzer, text) do
      %{predictions: [%{label: label, score: score} | _]} ->
        json(conn, %{sentiment: label, confidence: score})

      _ ->
        conn
        |> put_status(422)
        |> json(%{error: "Analysis failed"})
    end
  end
end
```

This integration makes it easy to create APIs for text analysis, image classification, recommendation systems, and other ML applications while leveraging BEAM's advantages for horizontal scaling and high availability.

The ML ecosystem in Elixir offers a balanced combination of high-level tools and low-level control, allowing developers to choose the right abstraction level for their tasks. While not as extensive as the Python ecosystem, Elixir's unique advantages make it attractive for certain machine learning scenarios, particularly those involving high-load systems and real-time data processing.

Model Training and Evaluation Example

```elixir
# Assumes train_images, train_labels, test_images and test_labels are already
# loaded as Nx tensors (e.g. MNIST): images of shape {n, 28, 28}, labels {n}
train_images = train_images |> Nx.reshape({60000, 784}) |> Nx.divide(255.0)
test_images = test_images |> Nx.reshape({10000, 784}) |> Nx.divide(255.0)

# One-hot encode labels for categorical cross-entropy: {n} -> {n, 10}
one_hot = fn labels -> Nx.equal(Nx.new_axis(labels, -1), Nx.iota({1, 10})) end
train_labels = one_hot.(train_labels)
test_labels = one_hot.(test_labels)

# Creating data batches of 32 {images, labels} pairs
batches = Stream.zip(Nx.to_batched(train_images, 32), Nx.to_batched(train_labels, 32))
test_data = Stream.zip(Nx.to_batched(test_images, 32), Nx.to_batched(test_labels, 32))

# Model definition with dropout regularization
model =
  Axon.input("input", shape: {nil, 784})
  |> Axon.dense(256, activation: :relu)
  |> Axon.dropout(rate: 0.4)
  |> Axon.dense(128, activation: :relu)
  |> Axon.dropout(rate: 0.3)
  |> Axon.dense(10, activation: :softmax)

# Training with validation and early stopping
# (Polaris provides the optimizers used by recent Axon versions)
trained_model_state =
  model
  |> Axon.Loop.trainer(:categorical_cross_entropy, Polaris.Optimizers.adam(learning_rate: 0.001))
  |> Axon.Loop.metric(:accuracy)
  |> Axon.Loop.validate(model, test_data)
  |> Axon.Loop.early_stop("validation_accuracy", mode: :max, patience: 5)
  |> Axon.Loop.run(batches, %{}, epochs: 20, compiler: EXLA)

# Model evaluation
eval_results =
  model
  |> Axon.Loop.evaluator()
  |> Axon.Loop.metric(:accuracy)
  |> Axon.Loop.run(test_data, trained_model_state, compiler: EXLA)
```
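The training loop above uses patience-based early stopping (patience: 5). The sketch below shows the idea on a plain list of per-epoch validation losses, assuming lower is better; it illustrates the rule rather than reproducing Axon, whose exact details (for example, when the counter resets) may differ. The EarlyStop module is hypothetical.

```elixir
defmodule EarlyStop do
  # Returns {stop_epoch, best_epoch} (1-based): training halts once the
  # monitored value has failed to improve for `patience` consecutive epochs.
  def stop_epoch(values, patience) do
    values
    |> Enum.with_index(1)
    |> Enum.reduce_while({nil, nil, 0}, fn {value, epoch}, {best, best_epoch, waited} ->
      cond do
        # New best value: remember it and reset the waiting counter
        best == nil or value < best -> {:cont, {value, epoch, 0}}
        # Out of patience: stop at this epoch
        waited + 1 >= patience -> {:halt, {:stopped, epoch, best_epoch}}
        # No improvement yet, keep waiting
        true -> {:cont, {best, best_epoch, waited + 1}}
      end
    end)
    |> case do
      {:stopped, epoch, best_epoch} -> {epoch, best_epoch}
      {_best, best_epoch, _waited} -> {length(values), best_epoch}
    end
  end
end

EarlyStop.stop_epoch([0.9, 0.7, 0.6, 0.65, 0.62, 0.61, 0.7], 3)
# {6, 3}
```

The best weights come from epoch 3, and three non-improving epochs later training stops, saving the remaining compute. Keeping the parameters from the best epoch, not the last one, is what makes the technique a guard against overfitting.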

Comparison with Python Tools

Elixir for ML doesn't aim to replace Python completely but rather complements the existing ecosystem:

| Criterion | Elixir (Nx/Axon) | Python (TensorFlow/PyTorch) |
|---|---|---|
| Ecosystem Maturity | Emerging | Mature |
| Performance | Strong for concurrent, distributed workloads; GPU compute via EXLA | Highly optimized for GPU/TPU |
| Hardware Support | CPU/GPU (via EXLA), limited TPU | Extensive (CUDA, TPU, ROCm) |
| Concurrency | Built-in (BEAM VM) | Library-dependent |
| Available Models | Limited (Bumblebee) | Vast model repositories |
| Web Integration | Native (Phoenix LiveView) | Via Flask/FastAPI/Django |
| Deployment | Simple (Mix releases + OTP) | Often a separate service (e.g., Docker/K8s) |
| Community | Growing | Massive |
| Documentation | High-quality (HexDocs) | Extensive but fragmented |
| Production Use | BEAM at scale (WhatsApp, Discord); ML adoption still early | Industry leader (Google, Meta) |

The best strategy often involves a hybrid approach: training models in Python and integrating them into Elixir systems for inference.

Advantages of Elixir for ML

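- Distributed Computing: BEAM clustering makes it straightforward to spread work across nodes; for example, Nx.Serving can route inference requests to servings on other nodes.

- Real-time Processing: Thanks to lightweight processes and OTP, Elixir excels at streaming data processing (e.g., for IoT).

- Reliability: Supervision trees restart failed processes, so models can run in production with minimal downtime.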

Limitations and Challenges

Despite rapid development, Elixir's ML ecosystem has some limitations:

- Lack of certain specialized algorithms and optimization methods

- Lower performance for very large models compared to optimized Python libraries

- Limited TPU support

- Less documentation and fewer learning resources

- Not all modern architectures (e.g., some transformers) have efficient implementations

Practical Applications

When to use Elixir for ML:

- For integrating ML into existing Elixir/Phoenix applications

- In systems requiring high fault tolerance and scalability

- For real-time data processing and online learning

- In distributed systems where concurrency matters

When Python is preferable:

- For research and prototyping

- When working with very large models (e.g., LLMs)

- When specialized algorithms only available in Python are needed

- With tight deadlines requiring ready-made solutions

The Future of Neural Networks in Elixir

The Elixir ML ecosystem is young but promising. Community efforts focus on:

- Improving hardware acceleration integration (GPU/TPU)

- Expanding the set of pre-trained models in Bumblebee

- Enhancing data processing tools (Explorer, Scholar)

Conclusion

The ML ecosystem in Elixir is growing rapidly thanks to companies like Dashbit and developer contributions. For those wanting to dive deeper, we recommend:

- Official Nx Documentation

- Axon GitHub Repository

- Train a Neural Network in Minutes with Elixir & Axon

- A Practical Guide to Machine Learning in Elixir - Chris Grainger

- Livebook as an ML Experimentation Tool

From the Author

Thank you for reading! I hope this article helped you understand Elixir's potential in machine learning and inspired you to experiment with this powerful technology combination.

The Elixir ML ecosystem is evolving, and I welcome discussion of any questions, suggestions, or corrections. If you spot inaccuracies or have interesting additions, please share them in the comments. Constructive feedback is always valuable.
