Developing a WebRTC SFU library in Rust

If you're working with WebRTC, you've probably heard of Selective Forwarding Units (SFUs). There are solid open-source SFUs out there: mediasoup, LiveKit, and Jitsi, to name a few.

Motivation

webrtc-rs is a WebRTC protocol implementation in Rust. Its repository includes some examples, one of which is an SFU, but you still need to write a lot of code to build a practical SFU server. In addition, the existing repositories that implement SFU servers on top of webrtc-rs weren't directly usable for my needs.

Introducing Rheomesh: A Rust SFU SDK

https://github.com/h3poteto/rheomesh

I developed a new SFU library in Rust for building your own WebRTC SFU servers. A key design principle was separating SFU-related logic from the signaling protocol. You always need some signaling logic to connect client browsers to your SFU server. I often use WebSocket for signaling, but you can choose other protocols, such as gRPC or MQTT.

Key features

The library provides:

- A Rust SDK for SFU server development

- A JavaScript library to communicate with the SFU server

- Relay support

It supports:

- Audio and video streaming

- DataChannel

- Simulcast

Scalable Video Coding (SVC) is only partially supported. Currently, Rheomesh supports temporal layer filtering but not spatial layer filtering, due to patent concerns.
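To make temporal layer filtering concrete, here is a minimal sketch for VP8, whose RTP payload descriptor (RFC 7741) carries the temporal ID directly. The function names and the `max_tid` parameter are hypothetical and are not Rheomesh's API. The idea is simply that the SFU drops packets whose temporal ID is higher than the layer a subscriber selected.

```rust
/// Extracts the temporal layer ID (TID) from a VP8 RTP payload descriptor
/// (RFC 7741, section 4.2). Returns None when the packet carries no TID.
fn vp8_temporal_id(payload: &[u8]) -> Option<u8> {
    let first = *payload.first()?;
    // X bit: an extension byte follows.
    if first & 0x80 == 0 {
        return None;
    }
    let ext = *payload.get(1)?;
    let mut idx = 2;
    // I bit: PictureID is present, 1 byte, or 2 bytes when its M bit is set.
    if ext & 0x80 != 0 {
        let picture_id = *payload.get(idx)?;
        idx += if picture_id & 0x80 != 0 { 2 } else { 1 };
    }
    // L bit: TL0PICIDX is present (1 byte).
    if ext & 0x40 != 0 {
        idx += 1;
    }
    // T bit: the TID field of the next byte is valid.
    if ext & 0x20 == 0 {
        return None;
    }
    let tid_byte = *payload.get(idx)?;
    // TID is the top two bits of that byte.
    Some(tid_byte >> 6)
}

/// Hypothetical filter: forward a packet only if its temporal layer is at or
/// below the layer the subscriber selected. Packets without a TID are forwarded.
fn should_forward(payload: &[u8], max_tid: u8) -> bool {
    match vp8_temporal_id(payload) {
        Some(tid) => tid <= max_tid,
        None => true,
    }
}
```

Note that dropping packets leaves gaps in the RTP sequence numbers, so a real SFU also has to rewrite them before forwarding. That part is omitted here.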

An interesting technical challenge during development was implementing a Dependency Descriptor parser. While webrtc-rs provides temporal layer parsing for VP8 and VP9, it lacks support for AV1 and H264, which use Dependency Descriptors for temporal and spatial layer representation.
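As a small illustration of where such a parser starts: according to the AV1 RTP payload specification, every Dependency Descriptor begins with three bytes of mandatory fields. The sketch below reads only those fields. The struct and function names are hypothetical, and this is not Rheomesh's parser. Resolving the actual temporal and spatial IDs additionally requires the template dependency structure, which is left out.

```rust
/// Mandatory fields at the start of a Dependency Descriptor RTP header
/// extension. Names are illustrative, not taken from Rheomesh.
#[derive(Debug, PartialEq)]
struct MandatoryFields {
    start_of_frame: bool,
    end_of_frame: bool,
    /// 6-bit ID of the frame dependency template this packet refers to.
    template_id: u8,
    /// 16-bit frame number, big-endian on the wire.
    frame_number: u16,
}

/// Parses the first three bytes of the extension payload.
/// Returns None if the payload is too short.
fn parse_mandatory_fields(ext: &[u8]) -> Option<MandatoryFields> {
    if ext.len() < 3 {
        return None;
    }
    Some(MandatoryFields {
        // Bits are read most-significant first.
        start_of_frame: ext[0] & 0x80 != 0,
        end_of_frame: ext[0] & 0x40 != 0,
        template_id: ext[0] & 0x3F,
        frame_number: u16::from_be_bytes([ext[1], ext[2]]),
    })
}
```

The template ID is the link to the layer information: the temporal and spatial IDs are stored per template in the dependency structure, so a full parser looks them up from there.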

Getting started

This library doesn't include a WebSocket server, so implement one first and call Rheomesh methods from it.

Server-side

https://github.com/h3poteto/rheomesh/blob/master/sfu/README.md

First, create a worker and a router. A router holds multiple transports, and transports belonging to the same router can send media to and receive media from each other. You can think of a router as a meeting room.

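    use rheomesh::config::MediaConfig;
    // Assumption: WebRTCTransportConfig lives in rheomesh::config next to MediaConfig.
    use rheomesh::config::WebRTCTransportConfig;
    use rheomesh::router::Router;
    use webrtc::ice_transport::ice_server::RTCIceServer;

    async fn new() {
        // Router-level media configuration.
        let config = MediaConfig::default();
        let router = Router::new(config);

        // Per-transport WebRTC configuration, including the STUN server used for ICE.
        let mut config = WebRTCTransportConfig::default();
        config.configuration.ice_servers = vec![RTCIceServer {
            urls: vec!["stun:stun.l.google.com:19302".to_owned()],
            ..Default::default()
        }];

        let publish_transport = router.create_publish_transport(config.clone()).await;
        let subscribe_transport = router.create_subscribe_transport(config.clone()).await;
    }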
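The "meeting room" analogy suggests keeping one router per room on the server. Here is a minimal sketch of such a registry. It is generic over the router handle type `R` so that it compiles without Rheomesh, and `RoomRegistry` and its methods are hypothetical names, not part of the library.

```rust
use std::collections::HashMap;

/// Keeps one router per room so that peers joining the same room share it.
/// `R` stands in for whatever router handle your server uses.
struct RoomRegistry<R> {
    rooms: HashMap<String, R>,
}

impl<R: Clone> RoomRegistry<R> {
    fn new() -> Self {
        Self { rooms: HashMap::new() }
    }

    /// Returns the router for `room_id`, creating it with `create` on first use.
    fn get_or_create(&mut self, room_id: &str, create: impl FnOnce() -> R) -> R {
        self.rooms
            .entry(room_id.to_owned())
            .or_insert_with(create)
            .clone()
    }

    /// Removes the room's router, for example when the last peer leaves.
    fn remove(&mut self, room_id: &str) -> Option<R> {
        self.rooms.remove(room_id)
    }
}
```

In an async WebSocket server the registry would typically sit behind a mutex and be shared between connection handlers.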

Please call Rheomesh methods in your WebSocket handler.

Regarding publishers:

    publish_transport
        .on_ice_candidate(Box::new(move |candidate| {
            let init = candidate.to_json().expect("failed to parse candidate");
            // Send `init` message to the client. The client has to call `addIceCandidate` method with this parameter.
        }))
        .await;

    // When you receive RTCIceCandidateInit message from the client.
    let _ = publish_transport
        .add_ice_candidate(candidate)
        .await
        .expect("failed to add ICE candidate");

    // When you receive offer message from the client.
    let answer = publish_transport
        .get_answer(offer)
        .await
        .expect("failed to connect publish_transport");
    // Send `answer` message to the client. The client has to call `setAnswer` method.

    // Publish
    let publisher = publish_transport.publish(track_id).await;

Regarding subscribers:

    subscribe_transport
        .on_ice_candidate(Box::new(move |candidate| {
            let init = candidate.to_json().expect("failed to parse candidate");
            // Send `init` message to the client. The client has to call `addIceCandidate` method with this parameter.
        }))
        .await;

    subscribe_transport
        .on_negotiation_needed(Box::new(move |offer| {
            // Send `offer` message to the client. The client has to call `setOffer` method.
        }))
        .await;

    // When you receive RTCIceCandidateInit message from the client.
    let _ = subscribe_transport
        .add_ice_candidate(candidate)
        .await
        .expect("failed to add ICE candidate");

    // Subscribe
    let (subscriber, offer) = subscribe_transport
        .subscribe(track_id)
        .await
        .expect("failed to connect subscribe_transport");
    // Send `offer` message to the client. The client has to call `setOffer` method.

    // When you receive answer message from the client.
    let _ = subscribe_transport
        .set_answer(answer)
        .await
        .expect("failed to set answer");
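Because signaling is left to you, it can help to write down which messages the channel has to carry. The following is a sketch of the client-to-server messages implied by the server-side snippets above, with a mapping to the transport method each one triggers. The enum, its variants, and `rheomesh_call` are hypothetical. Only the method names in the strings come from the snippets.

```rust
/// Messages the client sends over the signaling channel in the flow above.
/// SDP and ICE payloads are kept as opaque strings, so the same type works
/// over WebSocket, gRPC, or MQTT. All names here are hypothetical.
#[derive(Debug, Clone, PartialEq)]
enum ClientMessage {
    PublisherOffer { sdp: String },
    PublisherIce { candidate: String },
    Publish { track_id: String },
    SubscriberIce { candidate: String },
    Subscribe { track_id: String },
    SubscriberAnswer { sdp: String },
}

/// Names the call from the snippets above that a handler would make for
/// each message. A real handler would invoke the method instead.
fn rheomesh_call(msg: &ClientMessage) -> &'static str {
    match msg {
        ClientMessage::PublisherOffer { .. } => "publish_transport.get_answer",
        ClientMessage::PublisherIce { .. } => "publish_transport.add_ice_candidate",
        ClientMessage::Publish { .. } => "publish_transport.publish",
        ClientMessage::SubscriberIce { .. } => "subscribe_transport.add_ice_candidate",
        ClientMessage::Subscribe { .. } => "subscribe_transport.subscribe",
        ClientMessage::SubscriberAnswer { .. } => "subscribe_transport.set_answer",
    }
}
```

In the other direction the server sends ICE candidates, the publisher's answer, and the subscriber's offer, which can be modeled by a second enum in the same way.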

Client-side

https://github.com/h3poteto/rheomesh/blob/master/client/README.md

First, initialize the transports.

    import { PublishTransport, SubscribeTransport } from 'rheomesh'

    const peerConnectionConfig: RTCConfiguration = {
      iceServers: [{ urls: "stun:stun.l.google.com:19302" }],
    }

    const publishTransport = new PublishTransport(peerConnectionConfig)
    const subscribeTransport = new SubscribeTransport(peerConnectionConfig)

Regarding publishers:

    publishTransport.on("icecandidate", (candidate) => {
      // Send `candidate` to the server. The server has to call `add_ice_candidate` method with this parameter.
    })

    // When you receive candidateInit message from the server.
    publishTransport.addIceCandidate(candidateInit)

    // Publish a track.
    const stream = await navigator.mediaDevices.getDisplayMedia({
      video: true,
      audio: false,
    })
    const offer = await publishTransport.publish(stream)
    // Send `offer` to the server. The server has to call `get_answer` method with this parameter.

    // When you receive answer message from the server.
    publishTransport.setAnswer(answer)

Regarding subscribers:

    subscribeTransport.on("icecandidate", (candidate) => {
      // Send `candidate` to the server. The server has to call `add_ice_candidate` method with this parameter.
    })

    // When you receive candidateInit message from the server.
    subscribeTransport.addIceCandidate(candidateInit)

    // When you receive offer message from the server.
    subscribeTransport.setOffer(offer).then((answer) => {
      // Send `answer` to the server. The server has to call `set_answer` method with this parameter.
    })

    // Subscribe to a track.
    subscribeTransport.subscribe(publisherId).then((track) => {
      const stream = new MediaStream([track])
      remoteVideo.srcObject = stream
    })

Examples

I prepared an example server. Since this flow is complicated, please refer to these examples.

https://github.com/h3poteto/rheomesh/blob/master/sfu/examples/media_server.rs

The corresponding client-side JavaScript code is here.

https://github.com/h3poteto/rheomesh/blob/master/client/example/multiple/src/pages/room.tsx

The Relay Mechanism: A Unique Approach to Load Balancing

One of the library's key features is its relay mechanism, a method for distributing load across SFU servers. I call it relay, but there doesn't seem to be a fixed name for it. In libraries like mediasoup, this concept is often called a pipe.

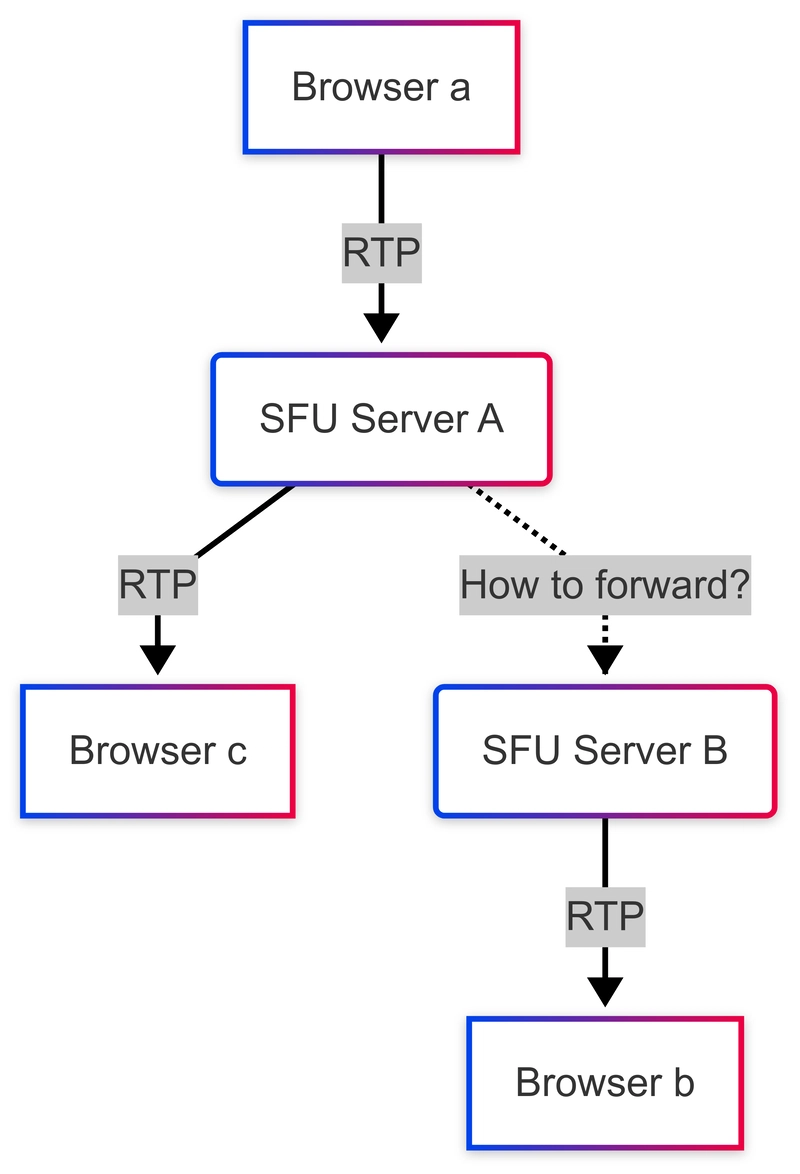

Usually, an SFU server does not support clustering or load balancing. An SFU server is just one of the peers in the WebRTC stack, so splitting it into multiple instances isn't natively supported. As a result, performance is inherently limited by the server's specs, and ensuring fault tolerance by running multiple instances is also challenging.

How Relay Works

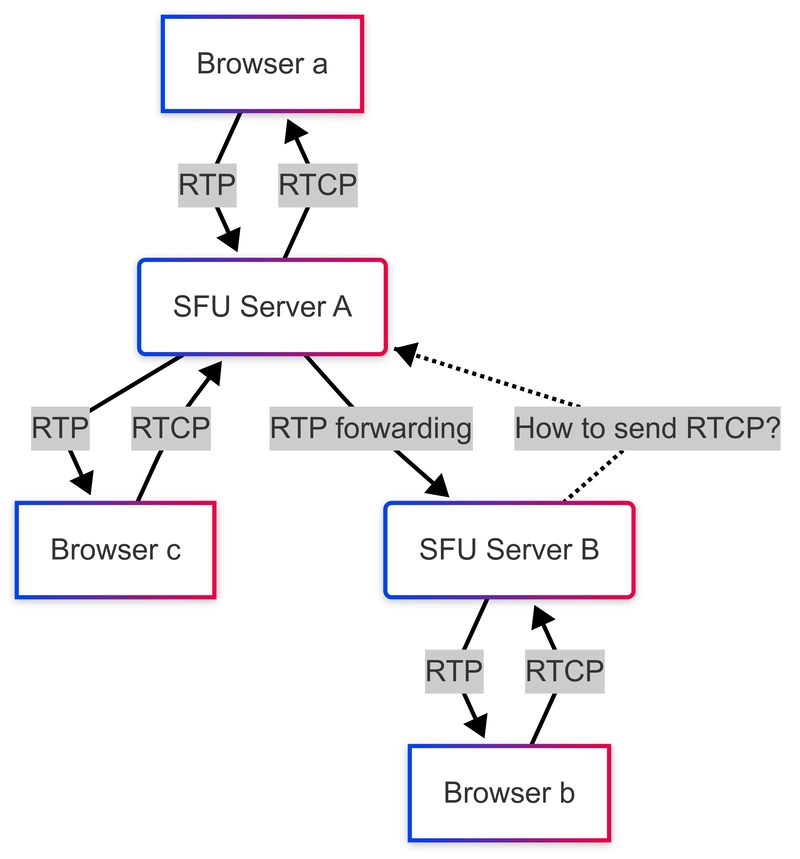

Typically, RTP packets received from client a by server A can only be viewed by clients connected to server A (client c). The relay mechanism allows these packets to be forwarded to clients connected to server B, effectively enabling cross-server streaming.

Current Implementation

For now, I’ve built a simple prototype that transfers RTP packets from server A to server B using UDP. However, this communication does not utilize the WebRTC stack. It’s just a direct transfer of RTP packets over UDP. Of course, I’ve implemented logic to ensure that the source and destination can be identified, but it's not using DTLS, and the receiving side is just a basic UDP server. This means that RTP packets for various destinations are all coming in through the same UDP server. The performance implications of this approach are still unknown.
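To illustrate what "a direct transfer of RTP packets over UDP" with an identifiable source and destination can look like, here is a minimal sketch. The wire format (a 2-byte length, the track ID, then the raw RTP packet) and all function names are assumptions made for this example. This is not Rheomesh's actual relay format.

```rust
use std::net::UdpSocket;

/// Prefixes an RTP packet with the track ID so the receiving server can tell
/// which stream it belongs to. Hypothetical framing: [u16 len][track ID][RTP].
fn encode_relay_packet(track_id: &str, rtp: &[u8]) -> Vec<u8> {
    let id = track_id.as_bytes();
    assert!(id.len() <= u16::MAX as usize, "track ID too long");
    let mut buf = Vec::with_capacity(2 + id.len() + rtp.len());
    buf.extend_from_slice(&(id.len() as u16).to_be_bytes());
    buf.extend_from_slice(id);
    buf.extend_from_slice(rtp);
    buf
}

/// Splits a received datagram back into (track ID, RTP packet).
/// Returns None if the datagram is truncated or the ID is not UTF-8.
fn decode_relay_packet(datagram: &[u8]) -> Option<(&str, &[u8])> {
    let len_bytes = datagram.get(..2)?;
    let id_len = u16::from_be_bytes([len_bytes[0], len_bytes[1]]) as usize;
    let id = datagram.get(2..2 + id_len)?;
    let rtp = datagram.get(2 + id_len..)?;
    Some((std::str::from_utf8(id).ok()?, rtp))
}

/// Sends one framed packet from "server A" to "server B" over loopback and
/// returns what server B decoded. Both sockets are plain UDP, without DTLS.
fn relay_once(track_id: &str, rtp: &[u8]) -> std::io::Result<(String, Vec<u8>)> {
    let server_b = UdpSocket::bind("127.0.0.1:0")?;
    let server_a = UdpSocket::bind("127.0.0.1:0")?;
    server_a.send_to(&encode_relay_packet(track_id, rtp), server_b.local_addr()?)?;

    let mut buf = [0u8; 1500];
    let (n, _from) = server_b.recv_from(&mut buf)?;
    let (id, payload) = decode_relay_packet(&buf[..n])
        .ok_or_else(|| std::io::Error::new(std::io::ErrorKind::InvalidData, "bad frame"))?;
    Ok((id.to_owned(), payload.to_vec()))
}
```

Since every relayed stream arrives on the same socket, the receiver has to look up the track ID for each datagram, which is where the open performance question above comes from.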

Issue: RTCP feedback

RTCP is a protocol where the receiving side sends feedback to the sending side, and in most WebRTC implementations, it flows from the receiver to the sender. Whether or not to handle it is up to the implementation, but it will be sent regardless. However, as mentioned earlier, the relay servers in the current setup are only forwarding RTP packets, so RTCP, which involves reverse-direction communication, is not being handled.

For server A, since client a is directly connected to it, RTCP packets can simply be forwarded there. However, for server B, the current architecture does not account for communication in the direction of server B -> server A, meaning there's no way to send RTCP feedback back to the original sender. If I need to implement this, it would essentially require bidirectional communication. At that point, if I’m going to establish bidirectional communication over UDP anyway, I might as well switch to WebRTC, which would simplify the process.

Because of this, I might change this approach in the future.
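If RTCP were ever carried between servers on the same UDP socket as RTP, the receiving side would first have to tell the two apart. RFC 5761 describes the usual rule for multiplexed RTP and RTCP: look at the second byte of the packet. The sketch below applies that rule. `PacketKind` and `classify` are hypothetical names, and nothing like this exists in the current relay.

```rust
#[derive(Debug, PartialEq)]
enum PacketKind {
    Rtp,
    Rtcp,
    Unknown,
}

/// Classifies a datagram as RTP or RTCP following the RFC 5761 convention.
fn classify(datagram: &[u8]) -> PacketKind {
    // Both RTP and RTCP carry version 2 in the top two bits of the first byte.
    if datagram.len() < 2 || datagram[0] >> 6 != 2 {
        return PacketKind::Unknown;
    }
    // RTCP packet types 192..=223 occupy the second byte. RTP payload types
    // are chosen so that marker bit plus payload type never lands in that range.
    match datagram[1] {
        192..=223 => PacketKind::Rtcp,
        _ => PacketKind::Rtp,
    }
}
```

For example, a Picture Loss Indication is sent as RTCP packet type 206, so it would be classified as `Rtcp` and could then be routed back toward the original sender.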

Challenges and Future Improvements

I suspect that making this server-to-server relay WebRTC-based would result in a more stable implementation. However, there are several concerns.

The first is signaling—server-to-server communication requires its own signaling mechanism, separate from client-server signaling. This raises the question of whether signaling should be built into the library itself. I haven’t fully settled on an answer yet, but if I were to use something, gRPC seems like a viable option.

Another concern is port usage. When WebRTC communicates over UDP, it consumes a port, and typically that port isn't reused. This means that every time a server receives RTP packets from a client, it consumes a port. If another client connects to the same server, another port is consumed. UDP port numbers are 16 bits wide, so at most 65,535 ports exist per IP address, and many lower-numbered ports are reserved for other purposes. This puts a hard limit on the number of users that can be supported. Moreover, if server-to-server RTP relaying also consumes ports, the number of ports used can increase significantly. For example, if a server receives a single video stream and relays it to 10 other servers, it would use 1 port for receiving and 10 ports for relaying. On top of that, additional ports are required for clients receiving the stream. This becomes a serious issue.
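The arithmetic behind this concern can be written down as a rough sketch. It assumes one UDP port per peer connection, as described above, and treats ports 0 to 1023 as unavailable. The function names are made up for this example, and the real usable range on a given host is usually smaller.

```rust
/// Highest UDP port number (port numbers are 16-bit).
const MAX_PORT: u32 = 65_535;
/// Ports 0..=1023 are the well-known range and are excluded here.
const RESERVED_PORTS: u32 = 1_024;

/// Ports one published stream consumes on a server under the
/// one-port-per-peer assumption: one to receive it, one per relay
/// target, and one per local subscriber.
fn ports_per_stream(relay_targets: u32, local_subscribers: u32) -> u32 {
    1 + relay_targets + local_subscribers
}

/// Rough upper bound on concurrent streams with that fan-out.
fn max_streams(relay_targets: u32, local_subscribers: u32) -> u32 {
    let usable = MAX_PORT + 1 - RESERVED_PORTS; // ports 1024..=65535
    usable / ports_per_stream(relay_targets, local_subscribers)
}
```

With the numbers from the example, `ports_per_stream(10, 0)` is 11, which caps a server at `max_streams(10, 0)` = 5864 such streams before any local subscriber is counted. Adding 20 local subscribers per stream lowers the bound to 2081.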

Performance

I haven't measured performance in a production environment, but it currently works properly on my local machine, so it is at least usable.

The only way to check the performance is to prepare many clients and stream simultaneously. I plan to try this at some point. However, if you use this library and have any performance issues, I would appreciate it if you could report them to me. I will improve it.

Why I use Rust

First of all, I simply enjoy writing Rust these days. Its ownership model provides memory safety guarantees that extend to parallel processing. An SFU requires a lot of parallel processing, but it also needs fast single-threaded performance for tasks such as receiving and sending RTP packets. I thought Rust would be a good fit for these requirements.

Conclusion

Developing an SFU library from scratch has been an interesting challenge. By leveraging Rust’s strong memory safety and concurrency features, I’ve been able to build a system that efficiently processes RTP packets while keeping SFU-related logic separate from signaling.

While the current implementation works well locally, significant improvements are needed:

- Enhanced relay mechanism

- Comprehensive performance testing

- E2E tests

- Recording feature

I welcome feedback and contributions from the community. If you're interested in WebRTC SFU development in Rust, I'd love to hear your thoughts!
