Data Compression, Types and Techniques in Big Data

Cover Photo by Tim Mossholder on Unsplash

Introduction

This article will discuss compression in the Big Data context, covering the types and methods of compression. I will also highlight why and when each type and method should be used.

Diving in

What is compression?

In everyday English, compression means reducing something so that it occupies less space. In Computer Science, compression is the process of reducing data to a smaller size. Data, in this case, could be text, audio, video files, etc.; think of it as anything you store on your computer's hard drive, represented in different formats. More technically, compression is the process of encoding data so that it uses fewer bits.

There are multiple reasons to compress data. The most common and intuitive one is to save storage space. The other reasons follow from the data being smaller. The benefits of working with smaller data include:

Quicker Data Transmission Time: Compressed data are smaller in size and take less time to be transmitted from source to destination.

Reduced bandwidth consumption: This is closely linked to quicker data transmission. Compressed data uses less network bandwidth, so more useful data can be moved in the same amount of time (higher effective throughput) and transfers complete sooner.

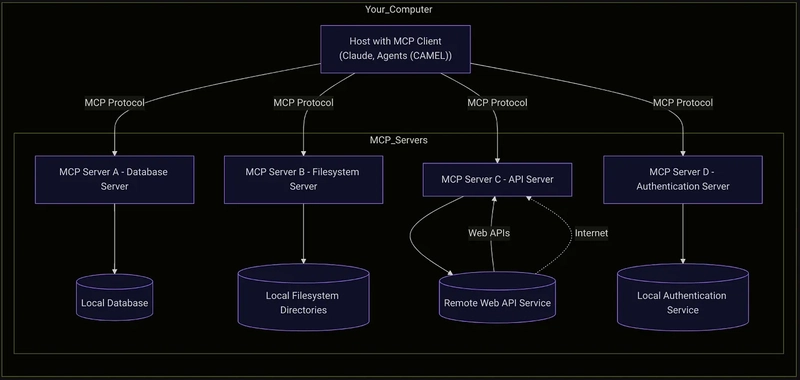

Improved performance for digital systems that rely heavily on data: Systems that process large volumes of data can use compression to reduce how much data must be read from storage or moved between machines, which often improves overall performance. Note that this is system-specific and depends on using an appropriate compression technique; compression techniques are discussed later in this article.

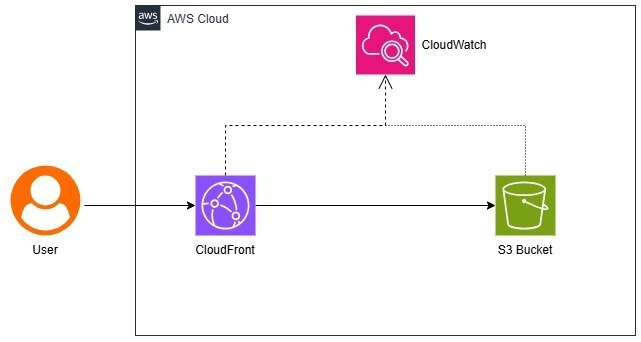

Cost Efficiency: Cloud services charge for data storage, so using less storage reduces costs, especially in Big Data systems where data volumes are large.

Other reasons for compression depend on the technique and format used. Some compression formats, such as ZIP and 7z archives, can also encrypt the compressed data, adding a layer of security on top of the reasons discussed earlier. Additionally, using widely supported compression formats keeps data compatible with external systems and makes integration with them easier.

The reasons for compression listed above also read as its benefits. However, compression is not without trade-offs. A common one is the need to decompress the data before it can be used, which costs CPU time and memory and may be a concern for resource-constrained systems. Other trade-offs depend on the compression technique and the type of data involved.
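To make the transmission benefit and the decompression trade-off concrete, here is a rough back-of-the-envelope model in Python. It is a sketch under simplifying assumptions: compression, transfer and decompression happen one after another (real systems often stream and overlap them), and the function name `transfer_time` and all sizes and speeds are hypothetical, not measurements of any real codec.

```python
def transfer_time(size_mb: float, bandwidth_mb_s: float, ratio: float = 1.0,
                  compress_mb_s: float = float("inf"),
                  decompress_mb_s: float = float("inf")) -> float:
    """Rough seconds to compress, send and decompress `size_mb` of data.

    Hypothetical model: `ratio` is original size / compressed size
    (1.0 means no compression); compress/decompress speeds are in MB of
    uncompressed data per second, and infinity means the step is free.
    The three steps are assumed to run one after another.
    """
    compressed_mb = size_mb / ratio
    return (size_mb / compress_mb_s            # time spent compressing
            + compressed_mb / bandwidth_mb_s   # time on the network
            + size_mb / decompress_mb_s)       # time spent decompressing


# Hypothetical numbers: 1,000 MB sent over a 100 MB/s link.
print(transfer_time(1000, 100))                              # 10.0 (no compression)
print(transfer_time(1000, 100, ratio=4, compress_mb_s=500,
                    decompress_mb_s=1000))                   # 5.5 (fast codec)
print(transfer_time(1000, 100, ratio=5, compress_mb_s=50,
                    decompress_mb_s=200))                    # 27.0 (slow codec)
```

In this made-up scenario, the codec with the better compression ratio is the slowest end to end, which is exactly the kind of trade-off to weigh when choosing a technique.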

In this article, "systems" refers to digital systems that use data and can take advantage of compression techniques. The word is used loosely and should be interpreted in the context of the section where it appears.

Compression Types

To discuss the different techniques used to compress data, I will first split compression into two main categories, lossy and lossless, and then discuss the techniques relevant to each.

As the names suggest, lossy compression techniques do not preserve the full fidelity of the data. Simply put, some data is discarded, but not enough to make what the data represents unrecognisable. As a result, lossy compression can achieve much higher compression than lossless compression, which is introduced shortly.

A characteristic of lossy compression is that it is irreversible: given the compressed file, one cannot restore the raw data with its original fidelity. Lossy compression suits certain files and formats and is typically used for images, audio and video. For instance, JPEG is a lossy image format, and when saving a JPEG image the creator or editor can choose how much loss to introduce.
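Real lossy codecs are far more sophisticated, but the core idea of throwing information away can be sketched with uniform quantization, the kind of step JPEG applies to transformed image coefficients rather than to raw values. The toy Python example below (hypothetical function names and sample values) stores each value in [0, 1] as one of four bin indices, which fits in 2 bits instead of a 64-bit float, and reconstructs it as the bin's midpoint.

```python
def quantize(samples: list[float], levels: int) -> list[int]:
    """Map each sample in [0, 1] to one of `levels` equal-width bins."""
    return [min(int(x * levels), levels - 1) for x in samples]


def dequantize(indices: list[int], levels: int) -> list[float]:
    """Reconstruct each sample as the midpoint of its bin (an approximation)."""
    return [(i + 0.5) / levels for i in indices]


samples = [0.12, 0.57, 0.93, 0.31, 0.58]   # hypothetical signal values
indices = quantize(samples, levels=4)      # only these indices are stored
restored = dequantize(indices, levels=4)

print(indices)    # [0, 2, 3, 1, 2]
print(restored)   # [0.125, 0.625, 0.875, 0.375, 0.625]
```

Both 0.57 and 0.58 come back as 0.625, so nothing in the stored indices can tell them apart; that discarded detail is why lossy compression is irreversible. Using more levels reduces the error at the cost of more bits per value.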

On the other hand, lossless compression is reversible: all data is preserved during compression and restored fully during decompression. This makes lossless compression suitable for text and other data where every bit matters, and in the data warehouse and lakehouse world it is effectively the only relevant type. Some audio formats (FLAC and ALAC) and image formats (GIF, PNG, etc.) also use this compression type.
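Python's standard-library `zlib` module offers a quick way to see a lossless round trip. It implements DEFLATE, which combines a Lempel-Ziv (LZ77) dictionary method with Huffman coding; the repetitive input below is an illustrative assumption chosen so that it compresses well.

```python
import zlib

original = b"AAAAABBCDDD" * 1000          # 11,000 bytes of highly repetitive data
compressed = zlib.compress(original, 9)   # level 9 = best compression, slowest
restored = zlib.decompress(compressed)

print(len(original), "->", len(compressed), "bytes")
assert restored == original               # lossless: every byte is recovered
```

The exact compressed size depends on the zlib version and compression level, so the sketch prints it rather than hard-coding a number.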

Choosing a method

There is no single best compression method; the right choice depends on the case at hand. For example, a data engineer in the finance industry working with tabular data would tend to use lossless compression, because any lost data would undermine accurate reporting. On the other hand, lossy compression could be the way to go when optimizing an image-heavy web page: compressing the images makes the page lighter and faster to load. It is therefore crucial to assess which compression method best aligns with the business requirements.

Compression Techniques

This section covers only the common compression techniques for both lossy and lossless compression and is not exhaustive. Furthermore, the techniques discussed may have variations, proposed in different research, that enhance their performance.

Lossless compression techniques

Three common lossless techniques are Run-Length Encoding (RLE), Huffman Coding and Lempel-Ziv-Welch (LZW) coding.

Run-Length Encoding: RLE replaces each sequence of repeated values (a run) with a single copy of the value and the number of times it repeats. It is effective when the data contains long runs of repeated values. Tabular datasets whose fields (dimensions) are sorted from low to high cardinality also benefit from RLE, because this ordering produces long runs in the leading fields.
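A minimal Python sketch of RLE on a string is shown below. It uses the X(n) notation from this article purely for readability (real formats store the counts in binary), and the function names are hypothetical.

```python
import re
from itertools import groupby


def rle_encode(text: str) -> list[tuple[str, int]]:
    """Collapse each run of identical characters into (character, run length)."""
    return [(char, len(list(run))) for char, run in groupby(text)]


def rle_format(runs: list[tuple[str, int]]) -> str:
    """Render runs in the readable X(n) notation."""
    return "".join(f"{char}({n})" for char, n in runs)


def rle_parse(encoded: str) -> list[tuple[str, int]]:
    """Parse X(n) notation back into (character, run length) pairs."""
    return [(char, int(n)) for char, n in re.findall(r"(.)\((\d+)\)", encoded)]


def rle_decode(runs: list[tuple[str, int]]) -> str:
    """Expand each (character, run length) pair back into the original run."""
    return "".join(char * n for char, n in runs)


encoded = rle_format(rle_encode("AAAAABBCDDD"))
print(encoded)                                    # A(5)B(2)C(1)D(3)
assert rle_decode(rle_parse(encoded)) == "AAAAABBCDDD"
```

Because every run costs a value plus a count, data without runs (such as ABCD, which becomes A(1)B(1)C(1)D(1)) actually grows under RLE, which is why the technique suits long runs of repeated data.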

For example, take the string AAAAABBCDDD. RLE compresses it to A(5)B(2)C(1)D(3). For a more practical example, consider the table in the image below.

Figure 1 - Before RLE. Note that the cardinality of the fields increases from left to right.

Figure 2 - After RLE

Because RLE depends on runs of repeated values and, in the table example, on the cardinality and sort order of the data, the Mouse values in the item column cannot be compressed to just Mouse (3): the preceding column splits them into the groups IT, Mouse and HR, Mouse. Some file formats support RLE, such as the bitmap formats TIFF and BMP. Parquet files also support RLE, which makes it very useful in modern data lakehouses built on object storage such as S3 or GCS.
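The effect of sort order can be checked by counting the runs in each column, since each run becomes one value-count pair when every column is RLE-encoded separately, as in columnar formats. The table in the figures is an image, so the rows below are hypothetical; `dept` has the lowest cardinality and `order_id` the highest.

```python
from itertools import groupby


def count_runs(values) -> int:
    """Number of runs of consecutive equal values (one RLE entry per run)."""
    return sum(1 for _ in groupby(values))


def runs_per_column(rows) -> list[int]:
    """Run count of each column when every column is RLE-encoded separately."""
    return [count_runs(column) for column in zip(*rows)]


# Hypothetical rows: (dept, item, order_id), from low to high cardinality.
rows = [
    ("HR", "Mouse", 101), ("IT", "Laptop", 102), ("IT", "Mouse", 103),
    ("HR", "Laptop", 104), ("IT", "Mouse", 105), ("HR", "Mouse", 106),
    ("IT", "Laptop", 107), ("IT", "Mouse", 108),
]

low_to_high = sorted(rows)                       # dept, then item, then order_id
by_order_id = sorted(rows, key=lambda r: r[2])   # highest-cardinality field first

print(runs_per_column(low_to_high))   # [2, 4, 8] -> 14 runs in total
print(runs_per_column(by_order_id))   # [6, 7, 8] -> 21 runs in total
```

Even in the low-to-high ordering, the item column has four runs rather than two, because each department starts a new group of items; this is the same splitting effect described for the Mouse values.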

Huffman Coding: Huffman Coding is based on a statistical model that assigns variable-length codes to values in the raw data according to how frequently they occur. This model is represented as a Huffman tree, which is a binary tree, and the tree is used to derive a Huffman code for each value in the raw data. The algorithm assigns the shortest codes to the most frequent values.

Let's take the same data used in the RLE example, AAAAABBCDDD. The corresponding Huffman tree looks like this:

Huffman Tree

From the tree, we can see that the letter A is represented by 0 and D by 10. Compared with B (111) and C (110), A and D are represented by fewer bits because they occur more frequently; the Huffman algorithm gives frequent values shorter codes by design. The resulting compressed data is 00000111111110101010: 20 bits, compared with 88 bits for the 11 characters stored as 8-bit ASCII (not counting the space needed to store the tree itself).
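The tree-building step can be sketched in Python with a priority queue: repeatedly merge the two least frequent nodes, prefixing 0 to the codes on one side and 1 on the other. This is a minimal sketch, not a production encoder. When frequencies tie, different tie-breaking rules give different (equally short) codes; the rule assumed here breaks ties by creation order, which happens to reproduce the codes from the tree above.

```python
import heapq
from collections import Counter
from itertools import count


def huffman_codes(text: str) -> dict[str, str]:
    """Build a prefix-free code table from the symbol frequencies in `text`."""
    order = count()  # tie-breaker so equal frequencies never compare dicts
    # Each heap entry: (frequency, creation order, {symbol: partial code})
    heap = [(freq, next(order), {sym: ""}) for sym, freq in Counter(text).items()]
    heapq.heapify(heap)
    if len(heap) == 1:                  # a single distinct symbol still needs 1 bit
        return {sym: "0" for sym in heap[0][2]}
    while len(heap) > 1:
        freq_a, _, left = heapq.heappop(heap)    # least frequent node
        freq_b, _, right = heapq.heappop(heap)   # next least frequent node
        merged = {sym: "0" + code for sym, code in left.items()}
        merged.update({sym: "1" + code for sym, code in right.items()})
        heapq.heappush(heap, (freq_a + freq_b, next(order), merged))
    return heap[0][2]


text = "AAAAABBCDDD"
codes = huffman_codes(text)
encoded = "".join(codes[ch] for ch in text)
print(codes)                        # {'A': '0', 'D': '10', 'C': '110', 'B': '111'}
print(encoded, len(encoded), "bits")  # 00000111111110101010 20 bits
```

Whatever the tie-breaking rule, A always gets a 1-bit code, D a 2-bit code and B and C 3-bit codes here, so the encoded length stays at 20 bits.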

Huffman Coding follows the prefix rule: no code may be a prefix of any other code. For example, a valid Huffman code cannot represent C as 00 and D as 000, because C's code is a prefix of D's. This property is what lets a decoder read the bit stream from left to right without any separators between codes.
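The prefix rule can be checked mechanically, and it is what makes greedy left-to-right decoding unambiguous. The sketch below (hypothetical helper names) checks the invalid C: 00 / D: 000 pair and decodes the bit string from the AAAAABBCDDD example using the codes read off the tree.

```python
from itertools import permutations


def is_prefix_free(codes: dict[str, str]) -> bool:
    """True if no code is a prefix of (or equal to) another symbol's code."""
    return not any(b.startswith(a) for a, b in permutations(codes.values(), 2))


def huffman_decode(bits: str, codes: dict[str, str]) -> str:
    """Read bits left to right, emitting a symbol as soon as a code matches."""
    if not is_prefix_free(codes):
        raise ValueError("codes violate the prefix rule; decoding is ambiguous")
    lookup = {code: sym for sym, code in codes.items()}
    decoded, current = [], ""
    for bit in bits:
        current += bit
        if current in lookup:   # safe to stop here: no longer code starts with it
            decoded.append(lookup[current])
            current = ""
    if current:
        raise ValueError("trailing bits do not form a complete code")
    return "".join(decoded)


tree_codes = {"A": "0", "D": "10", "C": "110", "B": "111"}
print(is_prefix_free({"C": "00", "D": "000"}))             # False
print(huffman_decode("00000111111110101010", tree_codes))  # AAAAABBCDDD
```

With C: 00 and D: 000, a decoder that has just read 00 cannot know whether it has seen a C or the start of a D, which is exactly the ambiguity the prefix rule rules out.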

To see this in action, the Computer Science Field Guide has a Huffman Tree Generator you could play with.

Lempel–Ziv–Welch Coding: LZW is named after its creators, Abraham Lempel, Jacob Ziv and Terry Welch. Welch published it in 1984 as an improvement on Lempel and Ziv's LZ78 algorithm.
