Creating a Scalable ETL Pipeline with S3, Lambda, and AWS Glue

This article shows you how to use AWS Glue, AWS Lambda, and Amazon S3 to automate ETL processes.

Introduction:

Modern companies run on data, and managing it effectively depends on Extract, Transform, Load (ETL) pipelines. Whether you're working on analytics, reporting, or machine learning, a well-organized ETL process ensures that raw data is clean, structured, and ready to use.

The problem is that ETL processes can become a bottleneck without automation. Processing data manually slows everything down, raises costs, and makes scaling difficult.

To solve this, I built an ETL pipeline with AWS Glue, AWS Lambda, and Amazon S3. This fully serverless, automated setup scales to large data volumes with minimal human involvement.

By the end of this guide, you will have a working ETL pipeline that can:

✔ Extract data from a database or API and organize it into a consistent structure.

✔ Load it into Amazon S3 for analytics or further processing.

Why AWS Glue, Lambda, and S3?

There are several ways to build an ETL pipeline, but I chose AWS Glue, AWS Lambda, and S3 for a few important reasons:

AWS Glue: The Transformation Engine

Built on Apache Spark, AWS Glue is a fully managed, serverless ETL service.

- Can scale automatically with data volume.

- Supports several data formats, including JSON, CSV, Parquet, and ORC.

- Maintains a Data Catalog that simplifies schema management and querying.

AWS Lambda: The Automation Trigger

AWS Lambda is a serverless compute service that runs code in response to events.

✔ Fetches data from databases or APIs and writes it to S3.

✔ Starts AWS Glue jobs on demand.

✔ Runs only when needed, which keeps costs down.

Amazon S3: The Data Lake

Amazon S3 stores both the raw and the processed data.

✔ Stores large datasets at low cost.

✔ Integrates directly with Amazon Redshift and AWS Glue.

✔ Supports both structured and unstructured data.

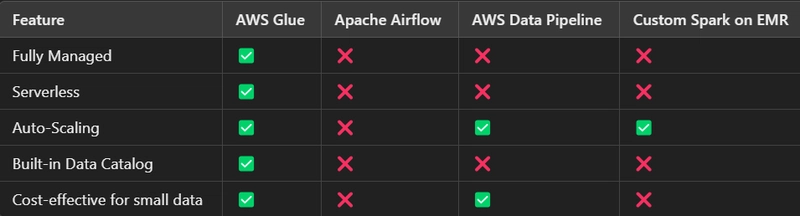

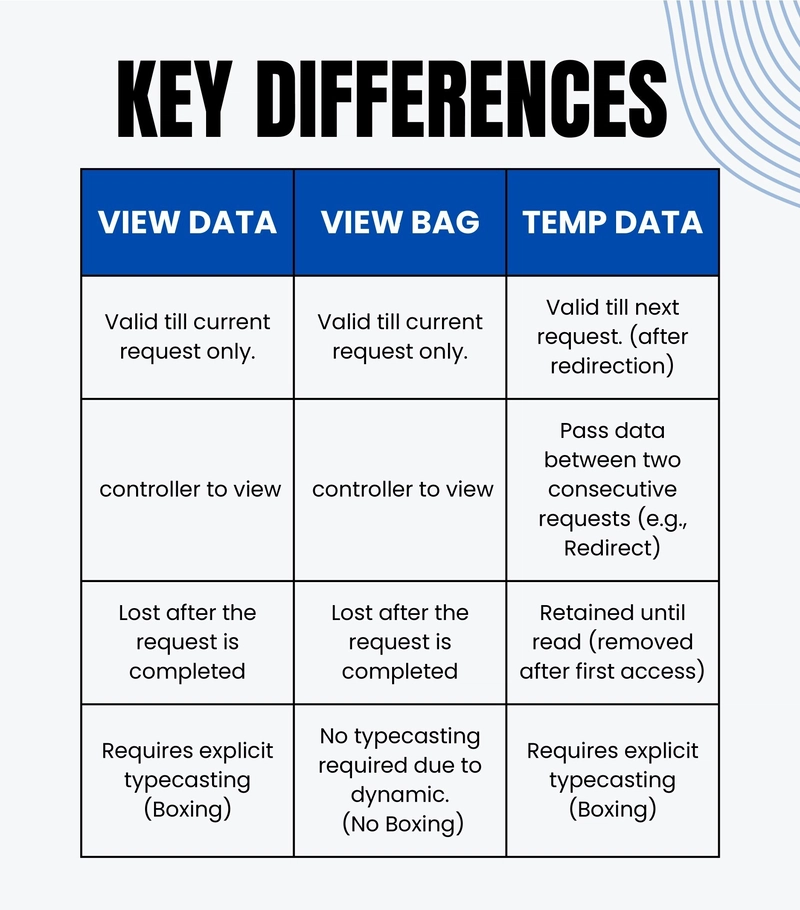

How AWS Glue compares with other ETL tools:

Pipeline Architecture Overview:

The pipeline consists of three steps:

- AWS Lambda extracts raw data from an API and stores it in S3.

- AWS Glue cleans, transforms, and enriches the data.

- The processed data is written back to S3 in Parquet format for efficient querying.

Step 1: Configuring AWS S3 for Data Storage

Amazon S3 provides the pipeline's central storage. To set it up:

- Open the Amazon S3 console at https://s3.console.aws.amazon.com/s3/home.

- Click Create bucket and enter a globally unique name (e.g., my-etl-data-lake).

- Choose a Region close to your data sources, for example us-east-1.

- Keep the default settings and click Create bucket.
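The same bucket can be created from code instead of the console. Below is a minimal boto3 sketch, assuming AWS credentials are already configured; the helper name `create_bucket` and its optional `s3_client` parameter (used to pass in a stub for testing) are illustrative, not part of the original setup. The one quirk it handles is that us-east-1 must not be passed as a `LocationConstraint`, while every other Region requires one.

```python
def create_bucket(bucket_name: str, region: str, s3_client=None) -> dict:
    """Create an S3 bucket in the given Region and return the API response."""
    if s3_client is None:
        # Imported lazily so the helper can be exercised with a stub client
        import boto3

        s3_client = boto3.client("s3", region_name=region)

    params = {"Bucket": bucket_name}
    # us-east-1 is the default location and is rejected as a LocationConstraint;
    # all other Regions must be stated explicitly.
    if region != "us-east-1":
        params["CreateBucketConfiguration"] = {"LocationConstraint": region}
    return s3_client.create_bucket(**params)
```

For example, `create_bucket("my-etl-data-lake", "us-east-1")` creates the bucket used throughout this guide, provided the name is still available.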

Step 2: Extracting Data with AWS Lambda

AWS Lambda fetches data from the API and stores it in S3.

2.1 Create a Lambda Function:

Open the AWS Lambda console (https://console.aws.amazon.com/lambda/home).

Click Create function and choose Author from scratch.

Enter a function name (e.g., etl_data_extractor).

Select a currently supported Python 3 runtime, such as Python 3.12 (Python 3.8 is deprecated in Lambda).

Under Permissions, select Create a new role with basic Lambda permissions. This role can only write logs to CloudWatch, so it also needs write access to the S3 bucket.

Click Create function.
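The extractor's execution role needs permission to write objects into the bucket. Here is a minimal sketch that builds a least-privilege inline policy for it, assuming raw data lives under the `raw/` prefix of `my-etl-data-lake`; the helper name `extractor_policy` is hypothetical. Attach the printed JSON to the function's execution role as an inline policy.

```python
import json


def extractor_policy(bucket: str, prefix: str = "raw/") -> dict:
    """Build an IAM policy that only allows writing objects under one prefix."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "WriteRawObjects",
                "Effect": "Allow",
                "Action": ["s3:PutObject"],
                # Object-level ARN: only keys under the prefix are writable
                "Resource": [f"arn:aws:s3:::{bucket}/{prefix}*"],
            }
        ],
    }


print(json.dumps(extractor_policy("my-etl-data-lake"), indent=2))
```

Scoping the resource to `raw/*` means a bug in the extractor cannot overwrite the processed Parquet output.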

2.2 Write the Extraction Code:

Replace the default function code with:

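```python
import json
import urllib.request
from urllib.error import HTTPError

import boto3

s3_client = boto3.client("s3")
API_URL = "https://api.example.com/data"


def lambda_handler(event, context):
    # Fetch with the standard library: requests is not bundled with the
    # Lambda Python runtime and would need a layer or deployment package.
    try:
        with urllib.request.urlopen(API_URL, timeout=10) as response:
            data = json.loads(response.read())
    except HTTPError as err:
        # HTTP error statuses (4xx/5xx) raise HTTPError
        return {"statusCode": err.code, "body": "Failed to fetch data"}

    # Store the raw payload in S3
    s3_client.put_object(
        Bucket="my-etl-data-lake",
        Key="raw/data.json",
        Body=json.dumps(data),
        ContentType="application/json",
    )
    return {"statusCode": 200, "body": "Data extracted successfully"}
```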
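Writing every run to `raw/data.json` overwrites the previous extract. One option, sketched here as an assumption rather than part of the original pipeline, is a date-partitioned key using the common Hive-style `year=/month=/day=` layout; the helper name `raw_object_key` is hypothetical. Pass its result as `Key` in `put_object`. With nested prefixes like these, make sure the Glue reader sets `"recurse": True` in its `connection_options` so it picks up files in subfolders.

```python
from datetime import datetime, timezone
from typing import Optional


def raw_object_key(now: Optional[datetime] = None, prefix: str = "raw") -> str:
    """Return a date-partitioned S3 key for one extraction run."""
    now = now or datetime.now(timezone.utc)
    # e.g. raw/year=2024/month=03/day=05/data_140709.json
    return (
        f"{prefix}/year={now:%Y}/month={now:%m}/day={now:%d}/"
        f"data_{now:%H%M%S}.json"
    )
```

In the handler, this becomes `Key=raw_object_key()`. Partitioning the raw zone this way also prepares the data for the partitioning suggested under Next Steps.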

Step 3: Transforming Data with AWS Glue

AWS Glue converts the raw JSON into a structured, typed dataset.

3.1 Create an AWS Glue Job:

Open the AWS Glue console (https://console.aws.amazon.com/glue/home).

Click Jobs > Add Job.

Enter a job name (e.g., etl_transformation).

Choose to author a new script yourself.

Select an IAM role with read and write access to the S3 bucket.

Click Create.
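If you prefer to define the job in code, a minimal boto3 sketch follows. It assumes the transformation script from 3.2 has been uploaded to S3. The role ARN and script path in the usage line are hypothetical placeholders, and the Glue version and worker settings are illustrative choices, not requirements.

```python
def create_glue_job(role_arn: str, script_location: str, glue_client=None) -> str:
    """Create the etl_transformation Glue job and return its name."""
    if glue_client is None:
        # Imported lazily so the helper can be exercised with a stub client
        import boto3

        glue_client = boto3.client("glue")

    response = glue_client.create_job(
        Name="etl_transformation",
        Role=role_arn,  # IAM role with read/write access to the bucket
        Command={
            "Name": "glueetl",  # Spark ETL job type
            "ScriptLocation": script_location,
            "PythonVersion": "3",
        },
        GlueVersion="4.0",
        WorkerType="G.1X",
        NumberOfWorkers=2,
    )
    return response["Name"]
```

Usage (hypothetical values): `create_glue_job("arn:aws:iam::123456789012:role/GlueETLRole", "s3://my-etl-data-lake/scripts/etl_transformation.py")`.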

3.2 Create the AWS Glue Transformation Script:

Replace the default script with:

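```python
import sys

from awsglue.context import GlueContext
from awsglue.job import Job
from awsglue.transforms import ApplyMapping
from awsglue.utils import getResolvedOptions
from pyspark.context import SparkContext

# Standard Glue job setup: resolve the job name and initialise job bookkeeping
args = getResolvedOptions(sys.argv, ["JOB_NAME"])
sc = SparkContext()
glueContext = GlueContext(sc)
spark = glueContext.spark_session
job = Job(glueContext)
job.init(args["JOB_NAME"], args)

# Read raw JSON from S3 (recurse also picks up files in nested prefixes)
raw_data = glueContext.create_dynamic_frame.from_options(
    connection_type="s3",
    connection_options={"paths": ["s3://my-etl-data-lake/raw/"], "recurse": True},
    format="json",
)

# Keep, rename and cast only the mapped fields
transformed_data = ApplyMapping.apply(
    frame=raw_data,
    mappings=[
        ("id", "string", "id", "string"),
        ("name", "string", "name", "string"),
        ("age", "int", "age", "int"),
    ],
)

# Write transformed data back to S3 as Parquet
glueContext.write_dynamic_frame.from_options(
    frame=transformed_data,
    connection_type="s3",
    connection_options={"path": "s3://my-etl-data-lake/processed/"},
    format="parquet",
)

job.commit()
```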
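To make the mapping tuples concrete, here is a plain-Python illustration (not Glue code) of roughly what the mapping above does to a single record: each tuple is (source field, source type, target field, target type), mapped fields are kept, renamed and cast, and unmapped fields are dropped. The helper `apply_mapping` and its simplified cast table are hypothetical; Glue's real type conversion rules are richer than this.

```python
from typing import Any, Dict, List, Tuple

# (source field, source type, target field, target type)
Mapping = Tuple[str, str, str, str]

MAPPINGS: List[Mapping] = [
    ("id", "string", "id", "string"),
    ("name", "string", "name", "string"),
    ("age", "int", "age", "int"),
]

# Deliberately simplified casts for illustration only
CASTS = {"string": str, "int": int}


def apply_mapping(record: Dict[str, Any], mappings: List[Mapping] = MAPPINGS) -> Dict[str, Any]:
    """Keep, rename and cast mapped fields; drop everything else."""
    out: Dict[str, Any] = {}
    for source, _source_type, target, target_type in mappings:
        value = record.get(source)
        if value is not None:
            out[target] = CASTS[target_type](value)
    return out


print(apply_mapping({"id": 7, "name": "Ada", "age": "36", "email": "ada@example.com"}))
# → {'id': '7', 'name': 'Ada', 'age': 36}
```

The `email` field disappears because it has no mapping, which is why every column you want in the Parquet output must appear in the mappings list.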

Step 4: Automating Execution

Use a Lambda function to start the AWS Glue job on demand (its execution role needs the glue:StartJobRun permission):

```python
import boto3

glue_client = boto3.client("glue")


def lambda_handler(event, context):
    # Start a new run of the Glue job created in Step 3
    response = glue_client.start_job_run(JobName="etl_transformation")
    return {
        "statusCode": 200,
        "body": f"Glue ETL job triggered: {response['JobRunId']}",
    }
```
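The handler above runs only when something invokes it. To start the transformation whenever new raw data lands, one option (an assumption, not part of the original setup) is to subscribe a Lambda function to the bucket's object-created events, filtered to the `raw/` prefix so that the Parquet files Glue writes to `processed/` do not trigger further runs. In this minimal sketch, the `--source_path` job argument is hypothetical; the Glue script would read it with `getResolvedOptions(sys.argv, ["JOB_NAME", "source_path"])`. Glue jobs allow one concurrent run by default, so bursts of uploads may need a higher MaxConcurrentRuns setting.

```python
from urllib.parse import unquote_plus

GLUE_JOB_NAME = "etl_transformation"


def lambda_handler(event, context, glue_client=None):
    """Start one Glue job run per record in an S3 object-created event."""
    if glue_client is None:
        # Imported lazily so the handler can be exercised with a stub client
        import boto3

        glue_client = boto3.client("glue")

    run_ids = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 events (spaces become '+')
        key = unquote_plus(record["s3"]["object"]["key"])
        response = glue_client.start_job_run(
            JobName=GLUE_JOB_NAME,
            # Hypothetical argument telling the job which object to process
            Arguments={"--source_path": f"s3://{bucket}/{key}"},
        )
        run_ids.append(response["JobRunId"])
    return {"statusCode": 200, "body": f"Started Glue runs: {run_ids}"}
```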

Final Thoughts:

You now have a cost-effective, scalable, fully automated, serverless ETL pipeline. Using AWS Glue for transformation, Lambda for automation, and S3 as the data lake minimizes manual work and improves reliability.

Next Steps:

✔ Load analytics-ready data into Amazon Redshift.

✔ Partition data in S3 to improve Glue performance.

✔ Use Lambda retries to add fault tolerance.
