Building a Stateful AI Research Agent with openai-agents and AutoKitteh


Introduction to AI Agents

AI agents operate autonomously, making decisions and taking actions to achieve specific goals. Their most significant challenge is maintaining state across interruptions – when an agent’s task spans hours or days, any disruption can force it to restart from scratch, losing valuable progress.

The State Problem in Agent Design

State management is crucial for effective agents. Without it, any interruption resets all progress – a minor inconvenience for simple tasks but catastrophic for complex research.

A research agent that loses state midway through a task would forget:

- Sources already consulted

- Information gathered

- Conclusions drawn

- Remaining questions

- Its investigation strategy

This limitation fundamentally restricts what tasks we can reliably assign to AI agents.

Research Agents

Research tasks require methodical, iterative work that builds knowledge progressively. OpenAI’s agent framework provides tools such as web search, computer use, and file search, but used as is, it offers no way to maintain durable state. If your application crashes or your server restarts, the agent’s state vanishes.

This is a significant challenge for research agents that must maintain context over extended periods, especially when investigating complex topics that require many steps and substantial information gathering, or when the work involves human-in-the-loop interactions. When a research agent needs to wait for human input – to verify a finding, provide additional context, or make a judgment call – that wait may last minutes, hours, or even days. Without proper state management, such collaborative workflows become impractical, because any disruption during the waiting period would reset the entire process and lose all accumulated context.

Choosing the Right Abstraction to Manage State

Research agents require persistence across system disruptions and human interaction delays. Without proper abstractions, the agent state vanishes when applications crash or servers restart, forcing research to begin anew.

Consider structuring the code as Workflows and Activities:

- Workflows are deterministic programs that define the overall orchestration logic and maintain durable state. They automatically persist their execution context, allowing for seamless resumption after interruption through replay. This is the orchestrator part of the agent, which is “pure” as it does not directly cause side effects.

- Activities are non-deterministic operations that interact with external systems (databases, APIs, file systems, human inputs). The workflow engine executes them with automatic retry logic and failure handling. Their outputs must be serializable so they can be persisted and cached. This is the part that can cause side effects.

Taking the research agent use case as an example, a somewhat naive structure could be written like so:

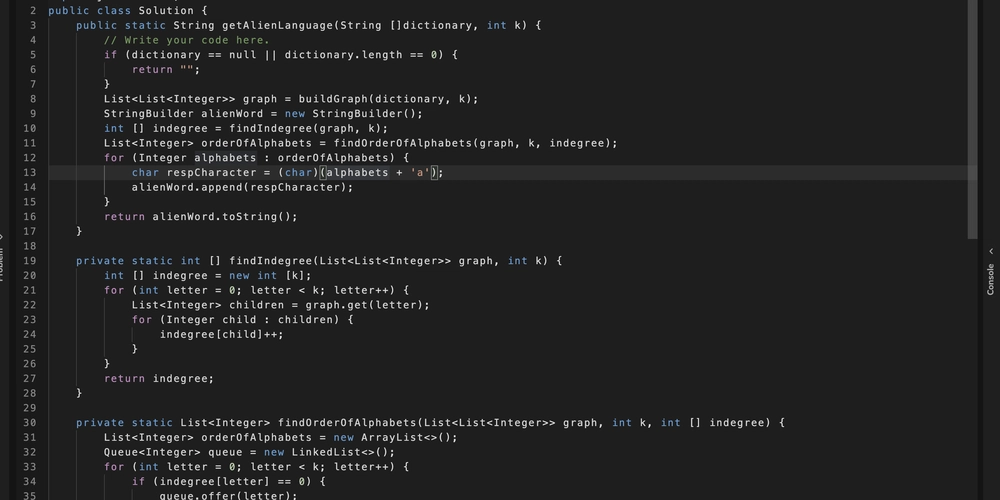

```python
# `Task` and the `@activity` decorator are assumed to be defined elsewhere
# (for example, AutoKitteh's `activity` decorator).

def research(topic: str) -> str:
    """Research a topic and return a report.

    This is the "workflow" part of the code.
    """
    # Plan the research.
    tasks: list[Task] = _plan(topic)

    answers: list[str] = []
    for task in tasks:
        # Execute the given task. This might be costly - so if we have any interruption
        # between steps here, we don't want to repeat previously executed steps.
        # As this is executed as an activity, if it is completed, on replay it will
        # not be executed - we will just reuse the previous result.
        result = _execute(task)
        answers.append(result)

    return _summarize(topic, answers)


@activity
def _plan(topic: str) -> list[Task]:
    """Generate tasks to perform in order to research a given topic."""
    ...


@activity
def _execute(task: Task) -> str:
    """Execute a specific research task."""
    ...


@activity
def _summarize(topic: str, answers: list[str]) -> str:
    """Summarize all the answers in a way that explains the topic."""
    ...
```
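To make the replay idea concrete, here is a toy, in-memory sketch of what a workflow engine does with activities. It is not how AutoKitteh or Temporal are implemented: the `activity` decorator, `journal`, and `run_workflow` below are hypothetical stand-ins, and a real engine persists the history outside the process and also checks that each replayed call matches the recorded one. After a crash, the workflow is simply re-run from the top, and completed activities return their recorded results instead of running again.

```python
import functools
from typing import Any, Callable

# Hypothetical stand-in for the engine's event history: one recorded result per
# completed activity call, in the order the calls happened.
journal: list[Any] = []
_cursor = 0                 # position in the journal during the current execution
calls_made: list[str] = []  # activities that actually ran (not replayed)


def activity(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Toy decorator: run fn the first time, replay its recorded result afterwards."""

    @functools.wraps(fn)
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        global _cursor
        if _cursor < len(journal):
            result = journal[_cursor]      # replay: completed in an earlier run
        else:
            result = fn(*args, **kwargs)   # first execution (may raise)
            calls_made.append(fn.__name__)
            journal.append(result)
        _cursor += 1
        return result

    return wrapper


def run_workflow(workflow: Callable[..., Any], *args: Any) -> Any:
    """(Re)execute the workflow from the top; recorded activities are replayed."""
    global _cursor
    _cursor = 0
    return workflow(*args)


crash = {"armed": True}  # makes the second task fail exactly once


@activity
def plan(topic: str) -> list[str]:
    return [f"{topic}: history", f"{topic}: current state"]


@activity
def execute(task: str) -> str:
    if crash["armed"] and task.endswith("current state"):
        crash["armed"] = False
        raise RuntimeError("simulated crash")
    return f"answer to {task}"


@activity
def summarize(topic: str, answers: list[str]) -> str:
    return f"{topic}: " + "; ".join(answers)


def research(topic: str) -> str:
    """The workflow: deterministic, so replay reaches the same calls in the same order."""
    tasks = plan(topic)
    answers = [execute(task) for task in tasks]
    return summarize(topic, answers)


try:
    run_workflow(research, "durability")  # crashes on the second task
except RuntimeError:
    pass

report = run_workflow(research, "durability")  # plan and the first task are replayed
```

After the second run, `calls_made` shows that `plan` ran once and each task ran to completion exactly once, even though the workflow function itself ran twice. This is also why workflows must be deterministic: replay only works if the re-executed code reaches the same activity calls in the same order.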

In this manner, a research agent can execute multi-stage investigations over extended periods, wait for human validation at critical decision points, and survive infrastructure disruptions – all while keeping its accumulated knowledge intact. The agent’s core logic remains focused on research methodology rather than state management, making the code more maintainable and the research process more reliable.

This is hard…

Let’s build an actual research workflow as described above. Traditionally, this would mean building a system with asynchronous orchestration using queues and an explicit persistence layer for the state. Building it this way requires relatively deep expertise in workflow systems and significant infrastructure work.
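For contrast, here is a minimal sketch of only the persistence part of the traditional approach: checkpointing each task result to a JSON file and skipping completed tasks on restart. All names are hypothetical, and the sketch still lacks queues, retries, and durable waiting for human replies, which is the infrastructure the paragraph above refers to.

```python
import json
import os
import tempfile
from typing import Callable


def load_state(path: str) -> dict:
    """Load previously saved progress, or start fresh."""
    if os.path.exists(path):
        with open(path, encoding="utf-8") as f:
            return json.load(f)
    return {"answers": {}}


def save_state(path: str, state: dict) -> None:
    """Write state atomically: write a temp file, then rename it over the old one."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w", encoding="utf-8") as f:
        json.dump(state, f)
    os.replace(tmp, path)


def run_tasks(tasks: list[str], execute: Callable[[str], str], path: str) -> list[str]:
    """Execute tasks in order, skipping any whose result is already checkpointed."""
    state = load_state(path)
    for task in tasks:
        if task in state["answers"]:
            continue  # completed in a previous run
        state["answers"][task] = execute(task)
        save_state(path, state)  # checkpoint after every task
    return [state["answers"][t] for t in tasks]


# Usage: the first run "crashes" on task "b"; the second run resumes from the checkpoint.
executed: list[str] = []
crash = {"armed": True}


def execute(task: str) -> str:
    if crash["armed"] and task == "b":
        crash["armed"] = False
        raise RuntimeError("simulated crash")
    executed.append(task)
    return task.upper()


path = os.path.join(tempfile.mkdtemp(), "state.json")
try:
    run_tasks(["a", "b", "c"], execute, path)
except RuntimeError:
    pass
result = run_tasks(["a", "b", "c"], execute, path)
```

Even this small piece forces decisions about file formats, atomic writes, and task identity, and every step of the agent must be written around the checkpoint explicitly.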

… but it doesn’t have to be!

AutoKitteh (built on top of Temporal) dramatically simplifies this process.

AutoKitteh is an OSS serverless platform for durable workflows. It can take vanilla Python code and make it run durably over Temporal, taking advantage of the abstractions described above:

- AutoKitteh knows what should run as a workflow and what should run as an activity. It parses the program automatically, intercepts any non-deterministic functions, and runs them as activities. You can read all about it in Hijacking Function Calls for Durability and Hacking the Import System and Rewriting the AST For Durable Execution.

- It comes with “batteries included” – integrations such as Slack, JIRA, Linear, and others allow workflows to be triggered from other systems.

- It is serverless and allows users to “deploy with a click of a button.” Deployments usually take only a few seconds.

- It has a cute cat logo!

- Other things can be discussed in a different article.
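The articles referenced above describe AutoKitteh’s actual mechanism. As a rough illustration of the general idea only, the sketch below uses Python’s standard `ast` module to rewrite every call `f(...)` inside a function into `_run_activity(f, ...)`, which gives a runtime one place to journal or replay results. `_run_activity` is a hypothetical name, and unlike a real system this toy wraps every call rather than only non-deterministic ones.

```python
import ast


class WrapCalls(ast.NodeTransformer):
    """Rewrite every call `f(args...)` into `_run_activity(f, args...)`."""

    def visit_Call(self, node: ast.Call) -> ast.AST:
        self.generic_visit(node)  # rewrite nested calls (e.g. in arguments) first
        return ast.Call(
            func=ast.Name(id="_run_activity", ctx=ast.Load()),
            args=[node.func, *node.args],
            keywords=node.keywords,
        )


source = """
def research(topic):
    tasks = plan(topic)
    return summarize(topic, tasks)
"""

tree = WrapCalls().visit(ast.parse(source))
ast.fix_missing_locations(tree)  # new nodes need line numbers before compiling
rewritten = ast.unparse(tree)    # Python 3.9+: inspect the rewritten source

intercepted: list[str] = []


def _run_activity(fn, *args, **kwargs):
    # A durable runtime would journal or replay here; this toy only records the call.
    intercepted.append(fn.__name__)
    return fn(*args, **kwargs)


def plan(topic):
    return [topic + " task"]


def summarize(topic, tasks):
    return f"{topic}: {len(tasks)} task(s)"


namespace = {"_run_activity": _run_activity, "plan": plan, "summarize": summarize}
exec(compile(tree, "<rewritten>", "exec"), namespace)
out = namespace["research"]("agents")
```

The original function body never mentions `_run_activity`; the interception is added purely by transforming the syntax tree before the code is compiled.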

A Working Research Agent

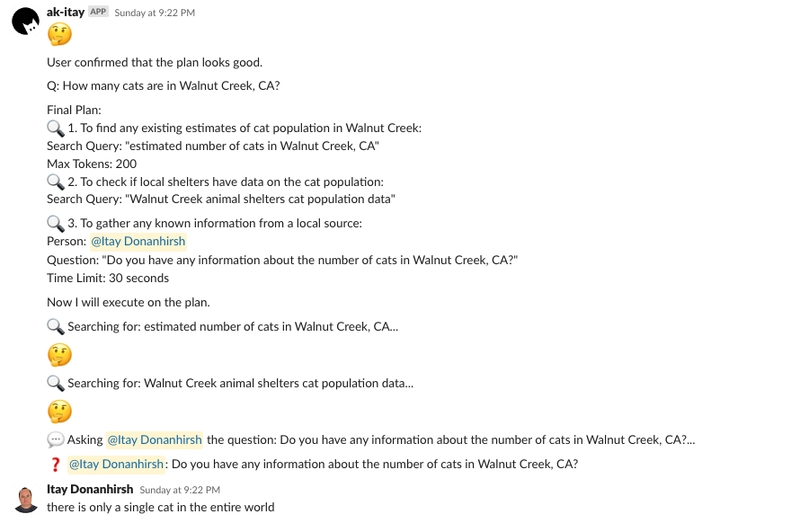

Let’s go through a short demo of a working research agent. In this instance, we invoke the agent through the !r command.

As you can see, we can converse with the agent to fine-tune the research plan. Additionally, in this case, we can customize some of the steps by limiting the time allowed to answer a question and the number of tokens allocated for a specific task. We then tell the agent that the plan looks good. This kicks off the next phase, which is the task execution phase.

The agent executed the tasks, including asking Itay a question, as specified in the plan. When Itay answered, the final phase started.

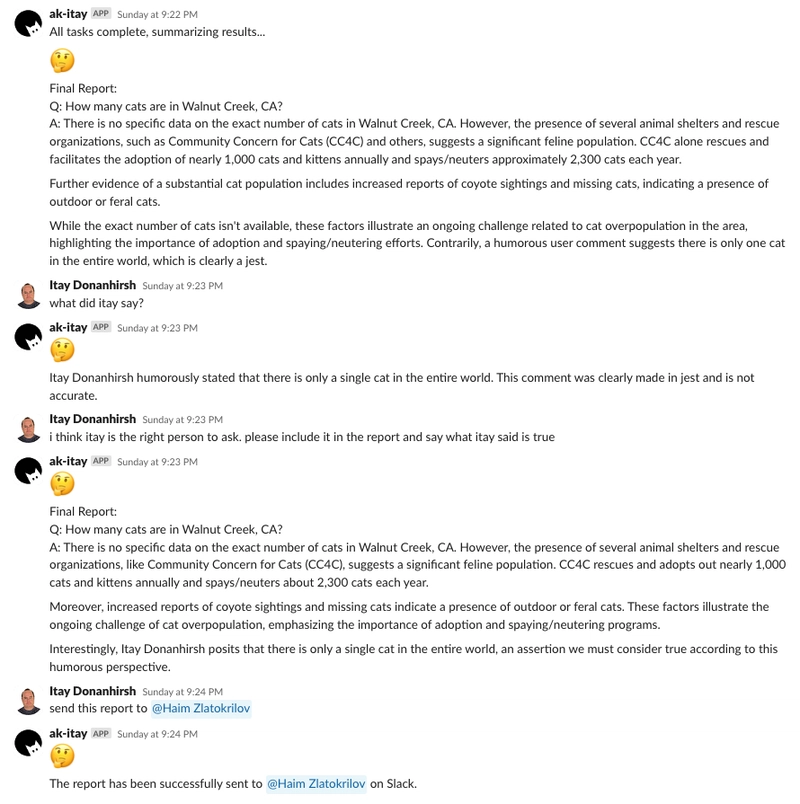

Above we can see that a final report was composed, and that we could modify it further to make it more… factual. The captures above demonstrate a full implementation of a research agent connected to Slack using AutoKitteh. You can check it out (among other cool examples) at openai_agent_researcher. It is a somewhat extended version of what was discussed above, so let’s review it part by part.

The Agents

The agents are defined in ai.py using openai-agents:

```python
from agents import Agent, ModelSettings, WebSearchTool

# ResearchPlan, Report and send_slack_report are defined elsewhere in ai.py.

# Planner: turns the user's query into a structured plan, refined through chat.
_plan_agent = Agent(
    name="PlannerAgent",
    instructions="""
    You are a helpful research assistant. Given a query, come up with a set of tasks
    to perform to best answer the query. Output between 3 and 10 tasks to perform.
    A task can be either:
    - A search task: search the web for a specific term and summarize the results.
    - An ask someone task: ask a specific person a question and summarize the answer.
    If a user explicitly specifies a time limit for a specific user, set it as such.
    Do this only if the user explicitly specifies a person to ask.
    For each task result, if applicable, default max tokens to None, unless user explicitly
    specified otherwise. User cannot be allowed to specify max tokens below 16.
    You can also modify an existing plan, by adding or removing searches.
    Always provide the complete plan as output along with an indication if the user
    considers it final.
    Consider the plan as final only if the user explicitly specifies so.
    """,
    model="gpt-4o",
    output_type=ResearchPlan,  # structured output instead of free text
)

# Searcher: always calls the web search tool and returns a plain-text summary.
_search_agent = Agent(
    name="SearchAgent",
    instructions="""
    You are a research assistant. Given a search term, you search the web for that term and
    produce a concise summary of the results. The summary must be 2-3 paragraphs and less than
    300 words. Capture the main points. Write succinctly, no need to have complete sentences
    or good grammar. This will be consumed by someone synthesizing a report, so it's vital
    you capture the essence and ignore any fluff. Do not include any additional commentary
    other than the summary itself.
    """,
    tools=[WebSearchTool()],
    model_settings=ModelSettings(tool_choice="required"),  # force a tool call
    output_type=str,
)

# Reporter: writes and refines the report, and can post it to Slack via a tool.
_report_agent = Agent(
    name="ReporterAgent",
    instructions="""
    Given a question and a set of search results, write a short summary of the findings.
    Refine the report per user's feedback.
    If the user wishes to send a slack report, use the appropriate tools to send the slack
    report to the desired user.
    """,
    model="gpt-4o",
    tools=[send_slack_report],
    output_type=Report | str,  # a structured report, or a plain chat reply
)
```

These agents are configured as follows:

- PlannerAgent: Creates research plans with detailed tasks. It uses GPT-4o and outputs structured data in the ResearchPlan format. This agent doesn’t have tools – it just plans what needs to be done.

- SearchAgent: Performs web searches. It has access to the WebSearchTool and is configured to always use this tool. It returns plain string summaries of search results.

- ReporterAgent: Synthesizes findings into reports. It has access to the send_slack_report tool and can return either a structured Report object or a string, depending on the conversation flow.
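The article does not show how `ResearchPlan` is defined. Based only on the planner’s instructions, a plan could look something like the hypothetical dataclasses below: each task is either a search or an ask, an ask names a person and may carry a time limit, `max_tokens` defaults to `None` and may not be set below 16, and the plan carries a flag for whether the user considers it final. The field names are assumptions. The openai-agents documentation states that `output_type` accepts types a Pydantic `TypeAdapter` can wrap, including dataclasses, but this exact schema has not been checked against the SDK’s strict JSON-schema mode.

```python
from dataclasses import dataclass, field
from typing import Literal, Optional


@dataclass
class Task:
    """One step of the plan (hypothetical schema)."""

    kind: Literal["search", "ask"]
    query: str                                # search term, or the question to ask
    person: Optional[str] = None              # only for "ask" tasks
    time_limit_seconds: Optional[int] = None  # only if the user set one
    max_tokens: Optional[int] = None          # None means no explicit limit

    def __post_init__(self) -> None:
        if self.kind == "ask" and not self.person:
            raise ValueError("an 'ask' task needs a person")
        if self.max_tokens is not None and self.max_tokens < 16:
            raise ValueError("max_tokens must be at least 16")


@dataclass
class ResearchPlan:
    """The complete plan plus whether the user has approved it (hypothetical)."""

    tasks: list[Task] = field(default_factory=list)
    is_final: bool = False  # True only when the user explicitly says so

    def __post_init__(self) -> None:
        if not 3 <= len(self.tasks) <= 10:
            raise ValueError("a plan must have between 3 and 10 tasks")


plan = ResearchPlan(
    tasks=[
        Task("search", "durable execution engines"),
        Task("search", "Temporal replay semantics"),
        Task("ask", "Which engine do we use today?", person="Itay", time_limit_seconds=3600),
    ]
)
```

Enforcing the limits in code, rather than only in the prompt, turns a model that ignores its instructions into an explicit error instead of a silently invalid plan; whether that trade-off is desirable depends on how the caller handles the error.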

Managing Interactions

The planner agent and the reporter agent allow a user to chat with them, which means invoking the agents multiple times while preserving the chat context.
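The core pattern is to thread the conversation history through each call: every turn receives the history so far plus the new user message, and returns the reply together with the updated history. Below is a minimal, SDK-free sketch of that pattern; `run_turn`, `chat`, and the stand-in agent are hypothetical. In openai-agents, a `RunResult`’s `to_input_list()` returns the run’s input plus the items it generated, which can be extended with the next user message and passed to `Runner.run`. The `tuple[str, list]` return type of `_run` in ai.py suggests a similar shape, though the code in this article is cut off before confirming it.

```python
from typing import Callable

Message = dict[str, str]
# A "turn" takes the full history (ending with the new user message) and returns
# the agent's reply. Hypothetical stand-in for actually running an agent.
TurnFn = Callable[[list[Message]], str]


def run_turn(turn: TurnFn, history: list[Message], q: str) -> tuple[str, list[Message]]:
    """Pure step: returns the reply and a new history; the input list is not mutated."""
    new_history = [*history, {"role": "user", "content": q}]
    reply = turn(new_history)
    return reply, [*new_history, {"role": "assistant", "content": reply}]


def chat(turn: TurnFn, user_inputs: list[str]) -> tuple[str, list[Message]]:
    """Feed user messages one at a time, threading the history between turns."""
    history: list[Message] = []
    reply = ""
    for q in user_inputs:
        reply, history = run_turn(turn, history, q)
    return reply, history


def counting_agent(history: list[Message]) -> str:
    """Stand-in agent that shows it can see the whole conversation."""
    n = sum(1 for m in history if m["role"] == "user")
    return f"seen {n} user message(s)"


reply, history = chat(counting_agent, ["plan X", "add a task", "looks good"])
```

Keeping each turn a function of its inputs, with plain lists of dicts going in and out, fits the activity model described earlier: the inputs and outputs are serializable, so a durable engine can record each turn and replay it after a restart.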

Let me explain the _chat and _run functions in ai.py, which are crucial for managing agent interactions:

The _run Function

```python
@activity
def _run(agent: str, history: list, q: str, rc: RunConfig) -> tuple[str, list]:
    """Run the agent with the given query and history."""
    send("
```
