Building My Own Google (Sort of)

Okay, so picture this: I had this grand vision, right? Like, “I’m gonna build a search engine!” Cue dramatic music and maybe a montage of me looking intensely at a computer screen. The initial thought was to conquer the whole internet — crawl every website, index every page, the whole shebang. Basically, build my own Google. Then reality hit me like a ton of digital bricks. Crawling the entire web? Yeah, that’s a tad bit ambitious for a weekend project (or even a few years, let’s be honest). Plus, I didn’t want to waste my whole weekend crawling the net — I wanted to do the good stuff and implement the algorithms. But then I looked at my own hard drive, and BAM! Inspiration struck. My friend have this ridiculously huge collection of documents, mostly research papers in PDF format iny my hard-drive. It’s like my own little Library of Alexandria, except instead of papyrus scrolls, it’s endless PDFs. And let me tell you, trying to find one specific paper in that digital jungle is a special kind of torture. So, I thought, “Forget the internet for now! I’ll build a search engine just for these document!” Think of it as going from wanting to build a spaceship to deciding to soup up your car first. Still cool, just a slightly smaller scale (for now!). This way, I wouldn’t have to worry about all that pesky crawling of the web. My data was already there, just waiting to be unleashed. So, that’s how this whole crazy adventure started — my quest to build a search engine . Let’s see how it went! Step 1: Extracting Text From My Mountain of PDFs Alright, first things first. My documents were mostly PDFs, and PDFs can be sneaky little things. Some of them are just text-based (easy peasy), but others are basically glorified images of text. You know, the kind where Ctrl+F is as useless as a chocolate teapot. So, I needed two strategies: For normal PDFs — Just grab the text like a civilized human being. For scanned/image-based PDFs — Time to bring in some OCR magic. Extracting Text from Normal PDFs This part was relatively painless. I used pdf-parse, a nice little JavaScript library that lets you extract text from normal PDFs without breaking a sweat. import fs from 'fs'; import pdfParse from 'pdf-parse'; async function extractTextFromPDF(pdfPath) { const dataBuffer = fs.readFileSync(pdfPath); const data = await pdfParse(dataBuffer); return data.text; } Run this, and BOOM — instant text from your PDF. Tackling Scanned PDFs with OCR (Optical Character Recognition) I had a simple mission: extract images from PDFs. Should be easy, right? Wrong. I scoured npm for a decent library, but nada. So, that night, I did the unthinkable — I went behind JavaScript’s back (forgive me) and turned to Python. One small problem: I don’t actually know much Python. But you know who does? Mr. GPTee. With a little searching, I stumbled upon PyMuPDF and got the images out. Blissfully unaware of the headaches I’d soon face juggling two languages and inter-process communication, I charged ahead, convinced nothing could go wrong. Step 1: Extract images from the PDF. import fitz # PyMuPDF import sys import os def extract_images(pdf_path, output_dir): doc = fitz.open(pdf_path) for i in range(len(doc)): for img in doc.get_page_images(i): xref = img[0] image = doc.extract_image(xref) img_bytes = image["image"] img_ext = image["ext"] img_filename = f"{output_dir}/image_{i+1}_{xref}.{img_ext}" with open(img_filename, "wb") as img_file: img_file.write(img_bytes) print(f"Saved ${img_filename}") if __name__ == "__main__": pdf_path = sys.argv[1] output_dir = sys.argv[2] os.makedirs(output_dir, exist_ok=True) extract_images(pdf_path, output_dir) // Function to execute Python command export async function executePythonCommand(command, args = []) { try { const fullCommand = `${path.resolve( VIRTUAL_PYTHON_EXE )} ${command} ${args.join(' ')}`; coloredLog(fullCommand); const { stdout, stderr } = await exec(fullCommand); if (stderr) { throw new Error(stderr); } return stdout; } catch (error) { console.error(`Error executing command: ${error.message}`); } } Step 2: Run OCR on those extracted images. Now, for OCR, I stumbled upon a pretty awesome project with an even more awesome name — Tesseract.js. I mean, how could I not check it out? This guy could read text from images, so I figured it was exactly what I needed. import { createWorker } from 'tesseract.js'; import path from 'path'; import fs from 'fs'; import { exec } from 'child_process'; const TESERACT_PATH = 'tessdata'; const TEMP_IMAGE_DIR = 'temp_images'; // Directory for extracted images async function getTextFromImages(tempImageDir: string) { const worker = await createWorker('eng', 1, { langPath: path.resolve(TESERACT_PATH), }); const files = fs.readdirSync(TEMP_IMAGE_DIR); /

Okay, so picture this: I had this grand vision, right? Like, “I’m gonna build a search engine!” Cue dramatic music and maybe a montage of me looking intensely at a computer screen. The initial thought was to conquer the whole internet — crawl every website, index every page, the whole shebang. Basically, build my own Google.

Then reality hit me like a ton of digital bricks. Crawling the entire web? Yeah, that’s a tad bit ambitious for a weekend project (or even a few years, let’s be honest). Plus, I didn’t want to waste my whole weekend crawling the net — I wanted to do the good stuff and implement the algorithms. But then I looked at my own hard drive, and BAM! Inspiration struck. My friend have this ridiculously huge collection of documents, mostly research papers in PDF format iny my hard-drive. It’s like my own little Library of Alexandria, except instead of papyrus scrolls, it’s endless PDFs. And let me tell you, trying to find one specific paper in that digital jungle is a special kind of torture.

So, I thought, “Forget the internet for now! I’ll build a search engine just for these document!” Think of it as going from wanting to build a spaceship to deciding to soup up your car first. Still cool, just a slightly smaller scale (for now!). This way, I wouldn’t have to worry about all that pesky crawling of the web. My data was already there, just waiting to be unleashed. So, that’s how this whole crazy adventure started — my quest to build a search engine . Let’s see how it went!

Step 1: Extracting Text From My Mountain of PDFs

Alright, first things first. My documents were mostly PDFs, and PDFs can be sneaky little things. Some of them are just text-based (easy peasy), but others are basically glorified images of text. You know, the kind where Ctrl+F is as useless as a chocolate teapot.

So, I needed two strategies:

For normal PDFs — Just grab the text like a civilized human being.

For scanned/image-based PDFs — Time to bring in some OCR magic.

Extracting Text from Normal PDFs

This part was relatively painless. I used pdf-parse, a nice little JavaScript library that lets you extract text from normal PDFs without breaking a sweat.

import fs from 'fs';

import pdfParse from 'pdf-parse';

async function extractTextFromPDF(pdfPath) {

const dataBuffer = fs.readFileSync(pdfPath);

const data = await pdfParse(dataBuffer);

return data.text;

}

Run this, and BOOM — instant text from your PDF.

Tackling Scanned PDFs with OCR (Optical Character Recognition)

I had a simple mission: extract images from PDFs. Should be easy, right? Wrong. I scoured npm for a decent library, but nada. So, that night, I did the unthinkable — I went behind JavaScript’s back (forgive me) and turned to Python. One small problem: I don’t actually know much Python. But you know who does? Mr. GPTee. With a little searching, I stumbled upon PyMuPDF and got the images out. Blissfully unaware of the headaches I’d soon face juggling two languages and inter-process communication, I charged ahead, convinced nothing could go wrong.

Step 1: Extract images from the PDF.

import fitz # PyMuPDF

import sys

import os

def extract_images(pdf_path, output_dir):

doc = fitz.open(pdf_path)

for i in range(len(doc)):

for img in doc.get_page_images(i):

xref = img[0]

image = doc.extract_image(xref)

img_bytes = image["image"]

img_ext = image["ext"]

img_filename = f"{output_dir}/image_{i+1}_{xref}.{img_ext}"

with open(img_filename, "wb") as img_file:

img_file.write(img_bytes)

print(f"Saved ${img_filename}")

if __name__ == "__main__":

pdf_path = sys.argv[1]

output_dir = sys.argv[2]

os.makedirs(output_dir, exist_ok=True)

extract_images(pdf_path, output_dir)

// Function to execute Python command

export async function executePythonCommand(command, args = []) {

try {

const fullCommand = `${path.resolve(

VIRTUAL_PYTHON_EXE

)} ${command} ${args.join(' ')}`;

coloredLog(fullCommand);

const { stdout, stderr } = await exec(fullCommand);

if (stderr) {

throw new Error(stderr);

}

return stdout;

} catch (error) {

console.error(`Error executing command: ${error.message}`);

}

}

Step 2: Run OCR on those extracted images.

Now, for OCR, I stumbled upon a pretty awesome project with an even more awesome name — Tesseract.js. I mean, how could I not check it out? This guy could read text from images, so I figured it was exactly what I needed.

import { createWorker } from 'tesseract.js';

import path from 'path';

import fs from 'fs';

import { exec } from 'child_process';

const TESERACT_PATH = 'tessdata';

const TEMP_IMAGE_DIR = 'temp_images'; // Directory for extracted images

async function getTextFromImages(tempImageDir: string) {

const worker = await createWorker('eng', 1, {

langPath: path.resolve(TESERACT_PATH),

});

const files = fs.readdirSync(TEMP_IMAGE_DIR);

// Process OCR in parallel for all images

const ocrPromises = files.map((file) =>

processOcrForImage(worker, path.resolve(TEMP_IMAGE_DIR, file))

);

const ocrResults = await Promise.all(ocrPromises);

await worker.terminate();

return ocrResults.filter((res) => res).join('\n');

}

async function processOcrForImage(worker: any, imgPath: string) {

try {

coloredLog(`Extracting text from ${imgPath} using OCR`);

const {

data: { text },

} = await worker.recognize(imgPath);

return text;

} catch (error: any) {

console.error(`Error processing OCR for ${imgPath}:${error.message}`);

return [];

} finally {

// Cleanup temporary image

if (fs.existsSync(imgPath)) {

fs.unlinkSync(imgPath);

}

}

}

Now, every word from those PDFs — whether originally text or an image — is extracted and ready to be searched.

Step 2: Cleaning Up the Chaos (Auto-Suggest & Lemmatization)

Now that I had all this raw text, it was a mess. Duplicate words, random typos, different forms of the same word (e.g., “running” vs. “run”). Enter Natural Language Processing (NLP).

I did three things:

Stopword Removal — Remove “the”, “and”, “of” — basically words that don’t help with searching.

Lemmatization — Convert words to their root form (e.g., “cars” → “car”).

OCR Auto-Suggestion — Fix misspelled words (because no OCR is perfect!).

export function getFilteredTokens(tokens, allowAlphaNumeric = false) {

const stopwordData =

fs.readFileSync(path.resolve(STOP_WORDS_TEXT), 'utf8');

const stopwords = stopwordData.split(/\r?\n/);

const filteredTokens = tokens.filter(

(token) =>

token && allowAlphaNumeric

? true

: /^[a-zA-Z]+$/.test(token) &&

!stopwords.includes(token) &&

token.length > 2

); // Remove empty, alphanumeric and stopwords tokens

return filteredTokens;

}

Using spaCy & a spell checker, I cleaned up the text:

I noticed something interesting — spaCy’s lemmatizer works way better when you feed it an entire chunk of text as context(which makes sense), while SpellChecker didn’t have much effect with or without context, performing best when given one word at a time .And hence we can do both in same loop, so I decided to first lemmatize the whole text and then, for each lemmatized token, if it originally came from OCR and lemmatizing had no effect (i.e., it remained the same), I autosuggested a better alternative. And, for good measure, I lemmatized those suggestions again to keep them conssitent.

import sys

import spacy

from spellchecker import SpellChecker

def process_text(input_file, output_file, auto_suggest, append):

try {

spell = SpellChecker()

nlp = spacy.load("en_core_web_lg")

# Read input text

with open(input_file, "r", encoding="utf-8") as infile:

text = infile.read().replace("\n\n", " ").replace("\n", " ").lower()

doc = nlp(text)

write_mode = "a" if append == 'true' else "w"

with open(output_file, write_mode, encoding="utf-8") as outfile:

for token in doc:

if len(token.text) <= 2: # Skip short and non-alphanumeric words

continue

lemma = token.lemma_

if token.text == lemma and auto_suggest == 'true': # If unchanged, apply auto_suggest

suggestion = spell.correction(token.text)

if suggestion:

lemma = nlp(suggestion)[0].lemma_ # Re-lemmatize the suggested word

outfile.write(f"{lemma}\n")

print(token,lemma)

print("Processing completed successfully.")

except Exception as e:

print(f"Error during processing: ${e}")

if __name__ == "__main__":

if len(sys.argv) != 5:

print("Usage: python process_text.py ")

sys.exit(1)

input_file = sys.argv[1]

output_file = sys.argv[2]

auto_suggest = sys.argv[3]

append = sys.argv[4]

process_text(input_file, output_file, auto_suggest, append)

Now, every word from those PDFs — whether originally text or an image — is extracted and ready to be searched.

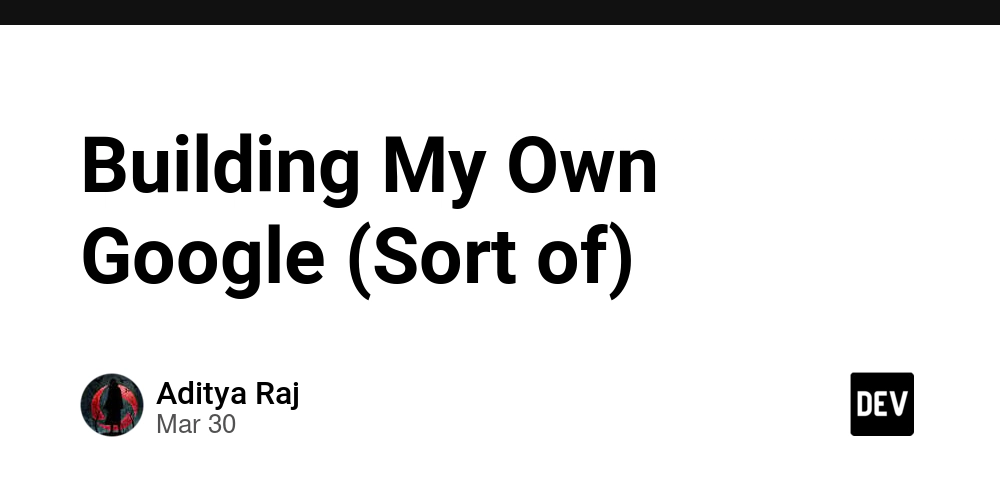

Step 3: Indexing My Documents

Okay, now that I’ve got my text all cleaned up, it’s time to talk about how to make it searchable. This is where indexing comes in, and then I found out about the Inverted Index, which is basically what it sounds like: rather than storing the tokens each document has, we store which documents contain a given token.So basically we will have something like:

token1 : [doc1,doc2];

token2 : [doc2,doc3,doc4];

…

Where to Store This Magical Index?

Now, the question is, where do we keep this inverted index? We could store it in a giant file, but then we’d have to read through the whole file every time we want to search for something. To make that file a bit less of a headache and more of a speed demon, we’d have to implement a structure similar to a B-tree or B+ tree. And guess what? That’s precisely what databases do under the hood — they’re basically pre-built for this kind of organized chaos.

Now, the problem with relational databases is that they like things too nice and organized, which often means breaking data into multiple tables (normalization). It’s like they’re the Monica Geller, Dwight Schrute,Raymond Holt of databases. They’re super efficient, but sometimes, you just want to throw all your stuff in one pile and find it later, you know?

For example, we can’t just store “word1 -> doc1, doc2, doc3” in a single cell of a table. A relational database would throw a fit and insist we split it into a few tables:

Tokens Table: This table stores all the unique words (tokens) we’ve found in our documents. Each word gets a unique ID.

Inverted Index Table: This table stores the relationships between words and documents. It says which word (using the ID from the Tokens table) appears in which document (using the ID from the Reports table), and how often?

Here’s what that might look like in code (using a database schema):

import { sequelize, DataTypes } from '@/database/db';

export const Report = sequelize.define(

'Report',

{

reportId: {

type: DataTypes.INTEGER,

autoIncrement: true,

primaryKey: true,

field: 'REPORT_ID',

},

title: {

type: DataTypes.STRING,

field: 'TITLE',

},

documentFilePath: {

type: DataTypes.STRING,

field: 'DOCUMENT_FILE_PATH',

},

wordCount: {

type: DataTypes.INTEGER,

field: 'WORD_COUNT',

},

},

{

tableName: 'DSE_REPORT',

underscored: true,

}

);

export const Token = sequelize.define(

'Token',

{

tokenId: {

type: DataTypes.INTEGER,

autoIncrement: true,

primaryKey: true,

field: 'TOKEN_ID',

},

token: {

type: DataTypes.STRING,

allowNull: false,

field: 'TOKEN',

},

corpusFrequency: {

type: DataTypes.INTEGER,

allowNull: false,

field: 'CORPUS_FREQUENCY',

},

numberOfReport: {

type: DataTypes.INTEGER,

allowNull: false,

field: 'NUMBER_OF_REPORT',

},

},

{

tableName: 'DSE_TOKEN',

underscored: true,

}

);

export const InvertedIndex = sequelize.define(

'InvertedIndex',

{

id: { type: DataTypes.INTEGER, autoIncrement: true, primaryKey: true },

frequency: {

type: DataTypes.INTEGER,

allowNull: false,

field: 'FREQUENCY',

},

tokenId: {

type: DataTypes.INTEGER,

field: 'TOKEN_ID',

},

reportId: {

type: DataTypes.INTEGER,

field: 'REPORT_ID',

},

},

{

tableName: 'DSE_INVERTED_INDEX',

underscored: true,

}

);

Maybe, I could’ve gone with a NoSQL database, but I already had my SQL database up and running. So, I decided to stick to it. It’s like already owning a gaming PC — no need to buy a PlayStation just to play the same game, right?

Now that the database models are designed, it’s time to roll up our sleeves and get this data into the tables. It’s not too complicated, but there are a few gotchas to keep in mind. Here’s the code:

export async function saveTokens(tokens: any, reportId: any, totalWords: any) {

const transaction = await sequelize.transaction(); // Start transaction

try {

await Report.update(

{ wordCount: totalWords },

{ where: { reportId }, transaction }

);

const tokenList = Object.entries(tokens);

// Get existing tokens in batches

const savedExistingTokens = await findTokensInBatches(

tokenList,

transaction

);

const newTokens: { token: string; frequency: unknown }[] = [];

const existingTokens: any[] = [];

tokenList.forEach(([token, frequency]) => {

const existingToken = savedExistingTokens.find(

(t: { token: string }) => t.token === token

);

if (existingToken) {

existingTokens.push({

token,

frequency,

...existingToken,

});

} else {

newTokens.push({ token, frequency });

}

});

// Insert new tokens

if (newTokens.length) {

const savedTokens = await bulkCreateTokens(

newTokens.map(({ token, frequency }) => ({

token,

corpusFrequency: frequency,

numberOfReport: 1,

})),

transaction

);

await bulkCreateInvertedIndex(

savedTokens.map(({ tokenId, corpusFrequency }: any) => ({

frequency: corpusFrequency,

reportId,

tokenId,

})),

transaction

);

}

// Update existing tokens

await bulkUpdateTokens(existingTokens, transaction);

// Insert inverted index entries

await bulkCreateInvertedIndex(

existingTokens.map(({ tokenId, frequency }) => ({

frequency: frequency,

reportId,

tokenId,

})),

transaction

);

await transaction.commit(); // Commit transaction if everything succeeds

} catch (error: any) {

await transaction.rollback(); // Rollback on failure

console.error('Transaction failed, rolling back:', error.message);

throw error;

}

}

/**

* Find tokens in batches to avoid Oracle's 1000-item IN() limit.

*/

export async function findTokensInBatches(tokenList, transaction) {

let existingTokens = [];

for (let i = 0; i < tokenList.length; i += BATCH_SIZE) {

const batch = tokenList.slice(i, i + BATCH_SIZE);

const tokensBatchDB = await Token.findAll({

where: { token: batch.map((t) => t[0]) },

transaction,

});

const tokensBatch = JSON.parse(JSON.stringify(tokensBatchDB));

existingTokens = existingTokens.concat(tokensBatch);

}

return existingTokens;

}

/**

* Bulk create tokens in batches

*/

export async function bulkCreateTokens(newTokens, transaction) {

let savedTokens = [];

for (let i = 0; i < newTokens.length; i += BATCH_SIZE) {

const newSavedTokensDB = await Token.bulkCreate(

newTokens.slice(i, i + BATCH_SIZE),

{ transaction }

);

const newSavedTokens = JSON.parse(JSON.stringify(newSavedTokensDB));

savedTokens = savedTokens.concat(newSavedTokens);

}

return savedTokens;

}

/**

* Bulk update existing tokens using CASE WHEN to increment corpus frequency.

*/

export async function bulkUpdateTokens(existingTokens, transaction) {

if (existingTokens.length === 0) return;

for (let i = 0; i < existingTokens.length; i += BATCH_SIZE) {

const batch = existingTokens.slice(i, i + BATCH_SIZE);

const tokenIds = batch.map(({ tokenId }) => tokenId).join(', ');

const updateCorpusSQL = `

UPDATE DSE_TOKEN

SET CORPUS_FREQUENCY = CASE

${batch

.map(

({ tokenId, frequency }) =>

`WHEN TOKEN_ID = ${tokenId} THEN CORPUS_FREQUENCY + ${frequency}`

)

.join(' ')}

END

WHERE TOKEN_ID IN (${tokenIds});

`;

const updateReportSQL = `

UPDATE DSE_TOKEN

SET NUMBER_OF_REPORT = NUMBER_OF_REPORT + 1

WHERE TOKEN_ID IN (${tokenIds});

`;

await sequelize.query(updateCorpusSQL, {

type: sequelize.QueryTypes.UPDATE,

transaction,

});

await sequelize.query(updateReportSQL, {

type: sequelize.QueryTypes.UPDATE,

transaction,

});

}

}

/**

* Bulk insert inverted index entries in batches

*/

export async function bulkCreateInvertedIndex(indexEntries, transaction) {

for (let i = 0; i < indexEntries.length; i += BATCH_SIZE) {

await InvertedIndex.bulkCreate(indexEntries.slice(i, i + BATCH_SIZE), {

transaction,

});

}

}

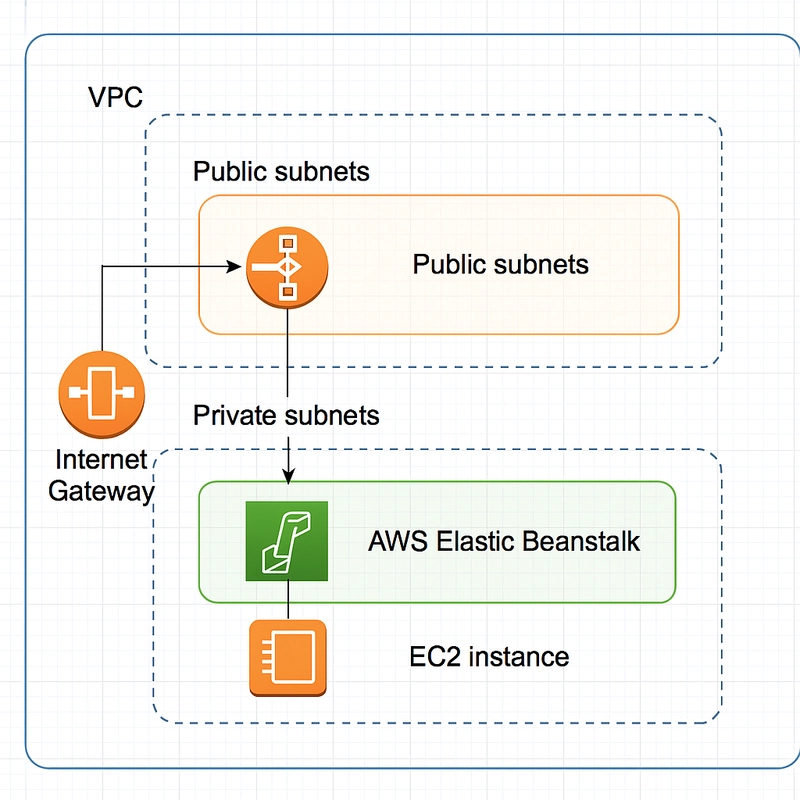

Step 4: Searching the Index

Now that we’ve indexed our documents, it’s time to bring the search function to life. Since most of the heavy lifting happened during indexing, the search function itself is relatively straightforward — just a bit of query cleaning (lowercasing, removing stopwords, etc.). Also, since we lemmatized during indexing, we need to lemmatize the query too.

To do this, we need to pass parameters between our JavaScript code and the Python script we used earlier. Now, we could use files again, but come on — reading and writing files just to process a tiny 6–7 token query feels like starting a whole ass war over a childhood crush. So, I explored few other methods:

Semaphores & Shared Memory — Fancy, but gave me cryptic errors that felt like the system was gaslighting me.

Standard Output (stdout) — I have this approach before but it is not quite reliable (imagine trying to have a serious conversation by shouting across a crowded room).

Message Queues — A solid option, but felt overkill for tiny queries.

Sockets — Great if I wanted to set up a whole networking operation, but that’s exactly what I was trying to avoid.

I’ve always wanted to use semaphores in high-level languages like JS and Python!” But, well… turns out, the system had other plans. It threw some weird errors that I definitely planned to debug (and then completely forgot about). So, in true “I’ll-fix-it-later” fashion, I went back to files.

Since I have to update my search function later anyway for ranking, I thought I would try it then, but now, while writing this article, I remember that forgot about it completly.

Also, for now, I did a quite simple ranking i.e just find the documents with maximum query tokens and in case of a tie, use the sum of the frequency of those tokens in the document to settle, as shown in the code below. We will do Ranking in a bit, I promise it’ll be more sophisticated than this.Definitely.Probably.Maybe

Search Results

export async function search(query: string) {

try {

const start = performance.now();

const random = (Math.random() + 1).toString(36).substring(7); // use session id

const queryInputFile = path.resolve(

`${TEMP_SEARCH_DIR}/${random}/input-search-text.txt`

);

const queryLemmatizedFile = path.resolve(

`${TEMP_SEARCH_DIR}/${random}/lemmatized-search-text.txt`

);

writeTextToFile(query, queryInputFile);

const lemmatizedScriptFilePath = path.resolve(LEMMATIZER_SCRIPT_PATH);

await runLemmatizer(

lemmatizedScriptFilePath,

queryInputFile,

queryLemmatizedFile

);

const lemmatizedTokens = readWordsFromFile(queryInputFile)[0]?.split(" ");

if (lemmatizedTokens.length === 0) {

return [];

}

const filteredTokens = getFilteredTokens(lemmatizedTokens, true);

const lowerCaseTokens = filteredTokens.map((token: string) =>

token

.split("")

.map((char) => (/[a-zA-Z]/.test(char) ? char.toLowerCase() : char))

.join("")

);

const reportListDB: any = await Report.findAll({

include: [

{

model: Token,

where: {

token: lowerCaseTokens,

},

},

],

});

const reportList = await JSON.parse(JSON.stringify(reportListDB));

const end = performance.now();

return {

queryTokenList: lowerCaseTokens,

timeTaken: ((end - start) / 1000).toFixed(4),

reportList: reportList.sort(

(a: any, b: any) =>

b.Tokens.length - a.Tokens.length ||

b.Tokens.reduce(

(x: any, y: any) => x + y.InvertedIndex.frequency,

0

) -

a.Tokens.reduce(

(x: any, y: any) => x + y.InvertedIndex.frequency,

0

)

),

};

// console.log(util.inspect(reportList, false, null, true));

} catch (e) {

console.error(e);

throw e; // Propagate the error to the API route

} finally {

if (fs.existsSync(TEMP_SEARCH_DIR)) {

fs.rmSync(TEMP_SEARCH_DIR, { recursive: true, force: true });

}

}

}

{

queryTokenList: [ 'octamax', 'catalyst' ,'petroleum' ],

timeTaken: '2.6069',

reportList: [

{

reportId: 217,

title: 'Pis Report On Development Of Integrated Reactor Model For Octamax Process',

documentName: 'TR-20-009.pdf',

wordCount: 3638

},

{

reportId: 457,

title: 'Analysis of Damaged Outlet Collector of Reactor-2 in Octamax Unit, MR',

documentName: 'TR-22-077.pdf',

wordCount: 1785

},

{

reportId: 415,

title: 'Residual life assessment of octamax catalyst using solid state NMR and other techniques',

documentName: 'TR-22-035.pdf',

wordCount: 522

},

{

reportId: 150,

title: 'Characterization and quantification of various alchols in Octmax products',

documentName: 'TR-19-044.pdf',

wordCount: 627

},

{

reportId: 362,

title: 'Analysis on sheared pump shaft (401-P-03A/B) in Octamax unit, Mathura Refinery ',

documentName: 'TR-21-072.pdf',

wordCount: 1283

},

{

reportId: 332,

title: 'Development of High Octane Fuels',

documentName: 'TR-21-042.pdf',

wordCount: 1051

},

... 1088 more items

]

}

I was very very pleased with the results. The speed was impressive, especially considering the scale: I’d uploaded around 2100 files, resulting in a Token table with roughly 200,000 unique entries and Inverted Index table with about 1.2 million rows.

Step 5 : Ranking the results

Our ranking algorithm worked on a simple principle — the more tokens from the search query a report contained, the higher it ranked. Reports with all the tokens took the top spots. If multiple reports had the same number of matching tokens, the one with the highest total frequency of those tokens ranked higher. A better alternative would be TF-IDF, which prioritizes word significance over raw frequency.

But I didn’t just want to rank only on the basis of number of query tokens matched.I wanted to surface reports that were genuinely similar to the top results(one with all or at least a threshold of token matches). Think of it like a good Netflix recommendation — Don’t just throw me any movie with “detective” in the title — give me recommendations for true Sherlock Holmes-level detective stories.

And then, there was one last algorithm — perhaps the most intriguing one I wanted to implement. I first encountered it in college, thanks to an exceptional professor, and it’s one of the rare techniques that uses randomness elegantly. Enter Locality Sensitive Hashing (LSH) with random hyperplanes — a beautifully chaotic yet efficient way to group similar documents.

Locality Sensitive Hashing (LSH) with Random Hyperplanes

To find similar reports efficiently, we can use a technique called Locality Sensitive Hashing (LSH). LSH helps us group similar items (in our case, reports) together, so we don’t have to compare every single report to every other report. To read more about this visit Locality Sensitive Hashing

Here’s how it works with random hyperplanes in brief:

1. Representing Reports as Vectors

Every report in our system is made up of words. Some words appear frequently, some rarely, and many don’t appear at all. To make this machine-comparable, we convert each report into a vector.

Imagine we have a dictionary of N = 50,000 unique words (every word seen across all documents or in a standard dictionary).

Now, for each report :

- If a word is present in the report, its corresponding vector position gets a 1.

- If the word is absent, it gets a 0.

- Alternatively, we can use word frequency instead of binary 0/1 to create a weighted vector.

If your dictionary has 50,000 unique words, each report would be a 50,000-dimensional vector. This is huge, but LSH helps us handle it.

The key insight to note here is the reports which are related to each other (have more words in common) will lie close to each other in this multi dimensional space.

2. Random Hyperplanes

A hyperplane is like a line that divides a 2D plane. In higher dimensions, it’s a similar concept that divides the space.

Each hyperplane “slices” through our vector space randomly, grouping reports on one side or the other. The key insight is that similar vectors are more likely to fall on the same side of the same hyperplanes.

Why not fixed? The key issue with fixed partitioning is that it can create highly imbalanced buckets, where some partitions end up overcrowded with vectors, while others are nearly empty. This happens because a fixed, predetermined grid doesn’t adapt to the actual distribution of data — it imposes artificial boundaries that may not make sense for the dataset.

Why random? Random hyperplanes divide the space in a way that ensures each bucket has a roughly equal number of document vectors. This randomness gives us a higher probability that each partition will contain a balanced mix of documents, preventing highly skewed clusters.

3. Dot Product for Side Determination

In 2D space, a line can be represented using the equation: ax+by=c

This equation defines a straight line that divides the 2D plane into two halves. Now, for any given point (x₁, y₁), we can determine which side of the line it lies on by computing: ax1+by1−c

- If the result is positive, the point lies on one side of the line.

- If the result is negative, the point lies on the other side.

- If it’s zero, the point is exactly on the line.

Extending to Higher Dimensions (Hyperplanes in N-Dimensional Space)

A hyperplane in a higher-dimensional space follows the same principle. In N-dimensions, a hyperplane is represented by an equation of the form: w1x1+w2x2+…+wnxn=0

Here: w1,w2,…,wn are the weights (or coefficients) defining the hyperplane and x1,x2,…,xn are the coordinates of the document vector.

For a given document vector V represented as: V=(v1,v2,…,vn)

We compute the dot product of the vector with the hyperplane:

w⋅V=w1v1+w2v2+…+wnvn

- If the result is positive, the document lies on one side of the hyperplane.

- If the result is negative, it lies on the other side.

- If it’s zero, the point is exactly on the hyperplane.

By repeating this with multiple random hyperplanes, we can encode each document’s position in space as a binary hash, where each bit represents which side of a hyperplane the document falls on.

4. Generating Hashes

We create a binary hash for each report.

For each hyperplane:

- If the report vector is on one side, we assign a “1” to the hash.

- If the report vector is on the other side or lie on the plane, we assign a “0” to the hash.

- For example, if we have 1000 hyperplanes, each report will be represented by a 1000-bit hash. How This Helps in Search (Using Hashes Instead of Full Vectors) After processing, every report is represented as a hash of N bits (e.g., 1000 hyperplanes) instead of a huge 50,000-dimensional vector or the text comparison of around (2000–5000 words) per report.

Hash comparisons are ultra-fast because they use simple bitwise operations.

Instead of comparing every vector with every other vector (which is computationally expensive), we only compare hashes, which is lightning fast.

Reports that share a high number of matching hash bits are very likely to be similar in content.

By reducing the storage size and comparison time, LSH makes it practical to search for similar reports in massive datasets.

`function generateRandomHyperplanes(numVectors: number, dimensions: number) {

const randomVectors = [];

for (let i = 0; i < numVectors; i++) {

const hyperplane = Array.from(

{ length: dimensions },

() => Math.random() - 0.5

);

// Normalize the vector

const magnitude = Math.sqrt(hyperplane.reduce((sum, x) => sum + x ** 2, 0));

const normalizedHyperplane = hyperplane.map((x) => x / magnitude);

randomVectors.push(normalizedHyperplane);

}

return randomVectors;

}

function createVector(dictionary: any, pdfWords: any, weighted = false) {

let pdfWordFrequency = null;

if (weighted) {

pdfWordFrequency = pdfWords.reduce((acc: any, word: any) => {

acc[word] = (acc[word] || 0) + 1;

return acc;

}, {});

}

return dictionary.map((dictWord: any) =>

weighted ? pdfWordFrequency[dictWord] || 0 : pdfWords.includes(dictWord) ? 1 : 0

);

}

function hashPdfVector(filePath: string, pdfVector: number[]) {

const hyperplanes = JSON.parse(fs.readFileSync(filePath, "utf8"));

if (!Array.isArray(hyperplanes) || hyperplanes.length === 0) {

throw new Error("Hyperplanes must be a non-empty array.");

}

return hyperplanes.map((hyperplane) => {

const dotProduct = pdfVector.reduce(

(sum, value, index) => sum + value * hyperplane[index],

0

);

return dotProduct >= 0 ? 1 : 0;

});

}`

Generating hashes is a computationally intensive task, but fortunately, we only need to compute them once per report during the indexing phase. Once generated, these hashes are stored in the Report table. When a search query is executed, instead of comparing every document from scratch, we first retrieve the top matches based on keyword relevance. Then, to surface even more related reports, we leverage Hamming distance — a simple yet effective way to measure similarity between binary hashes. By setting a reasonable threshold, we can efficiently find documents with closely matching hashes, ensuring that similar reports appear together, making the search results more insightful and connected.

Wrapping It All Up

This article has already grown quite big, so let’s wrap it up for now.

And there you have it — my journey from wanting to conquer the entire internet to building a personal search engine for my mountain of PDFs. Along the way, I wrestled with OCR, dabbled in lemmatization, wrangled an inverted index, and even flirted with Locality Sensitive Hashing. It wasn’t always smooth sailing but in the end, I built something that actually works.

Is it as powerful as Google? Of course not. There’s still plenty of room for improvement — better ranking algorithms, handling more file formats, making the UI sleeker — but for now, my personal Library of Alexandria is tamed.

Thanks for sticking around till the end!

![[The AI Show Episode 142]: ChatGPT’s New Image Generator, Studio Ghibli Craze and Backlash, Gemini 2.5, OpenAI Academy, 4o Updates, Vibe Marketing & xAI Acquires X](https://www.marketingaiinstitute.com/hubfs/ep%20142%20cover.png)

![[DEALS] The Premium Learn to Code Certification Bundle (97% off) & Other Deals Up To 98% Off – Offers End Soon!](https://www.javacodegeeks.com/wp-content/uploads/2012/12/jcg-logo.jpg)

![From drop-out to software architect with Jason Lengstorf [Podcast #167]](https://cdn.hashnode.com/res/hashnode/image/upload/v1743796461357/f3d19cd7-e6f5-4d7c-8bfc-eb974bc8da68.png?#)

.png?#)

_Christophe_Coat_Alamy.jpg?#)

(1).webp?#)

![Apple Considers Delaying Smart Home Hub Until 2026 [Gurman]](https://www.iclarified.com/images/news/96946/96946/96946-640.jpg)

![iPhone 17 Pro Won't Feature Two-Toned Back [Gurman]](https://www.iclarified.com/images/news/96944/96944/96944-640.jpg)

![Tariffs Threaten Apple's $999 iPhone Price Point in the U.S. [Gurman]](https://www.iclarified.com/images/news/96943/96943/96943-640.jpg)