Build Chain-of-Thought From Scratch - Tutorial for Dummies

Ever ask an AI a tricky question and get a hilariously wrong answer? Turns out, AI needs to learn how to "think step-by-step" too! This guide shows you how to build that thinking process from scratch, explained simply with code using the tiny PocketFlow framework.

We've all seen it. You ask an AI assistant to solve a multi-step math problem, plan a complex trip, or tackle a logic puzzle, and... facepalm. The answer is confidently wrong, completely misses the point, or sounds like it just guessed. Why does this happen even with super-smart AI?

Often, it's because the AI tries to jump straight to the answer without "thinking it through." When we face tough problems, we break them down, figure out the steps, maybe jot down some notes, and work towards the solution piece by piece. Good news: we can teach AI to do the same!

This step-by-step reasoning is called Chain-of-Thought (CoT), and it's a huge deal in AI right now. It's a big part of why the latest cutting-edge models like OpenAI's o1, DeepSeek-R1, Google's Gemini 2.5 Pro, and Anthropic's Claude 3.7 are all emphasizing better reasoning and planning abilities. They know that thinking is key to truly smart AI.

Instead of just talking about it, we're going to build a basic Chain-of-Thought system ourselves, from scratch! In this beginner-friendly tutorial, you'll learn:

- What Chain-of-Thought really means (in simple terms).

- How a basic CoT loop works using code.

- How to make an AI plan, execute steps, and even evaluate its own work.

We'll use PocketFlow – a super-simple, 100-line Python framework. Forget complicated frameworks that hide everything! PocketFlow lets you see exactly how the thinking process is built, making it perfect for understanding the core ideas from the ground up. Let's teach our AI to show its work!

Chain-of-Thought: How AI Learns to Show Its Work

So, what exactly is this "Chain-of-Thought" or CoT? Think of it like teaching an AI to solve problems like a meticulous detective solving a complex case, rather than just making a wild guess.

Here's how the AI tackles a problem using Chain-of-Thought:

1. Understand the Case: First, the AI looks carefully at the problem you gave it – just like a detective studying the initial crime scene and available clues.

2. Make an Initial Plan: Based on the problem, the AI drafts a high-level plan – a list of steps it thinks it needs to take.

   Detective: "Okay, first I need to interview the witness, then check the alibi, then analyze the fingerprints."

3. Execute One Step: The AI focuses on just the first pending step in its plan and does the work required for that step.

   Detective: Conducts the first witness interview.

4. Analyze the Results (Self-Critique!): After completing the step, the AI looks at the result. Did it work? Does the information make sense? Is it leading towards the solution? This evaluation step is crucial.

   Detective: "Hmm, the witness seemed nervous. Does their story match the known facts?"

5. Update the Case File & Plan: The AI records the results of the step (like adding notes to a case file) and updates its plan. It marks the step as done, adds any new findings, and, crucially, might change or add future steps based on the evaluation.

   Detective: "Okay, interview done. Add 'Verify witness alibi' to the plan. And maybe 'Re-interview later if alibi doesn't check out'."

6. Repeat Until Solved: The AI loops back to Step 3, executing the next step on its updated plan, analyzing, and refining until the entire plan is complete and the problem is solved.

The Core Idea: Instead of one giant leap, Chain-of-Thought breaks problem-solving into a cycle of Plan -> Execute -> Evaluate -> Update.
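To make the cycle concrete before any LLM is involved, here is a toy sketch in plain Python. It is an illustration under simplifying assumptions, not part of PocketFlow: the plan is hard-coded, the "work" is ordinary arithmetic, and the evaluation is a trivial sanity check. The function name `run_cot_loop` and the step dictionaries are made up for this example; in the real system the LLM writes, checks, and revises the plan.

```python
import math


def run_cot_loop():
    """Toy Plan -> Execute -> Evaluate -> Update loop for (15 + 5) * 3 / 2 (no LLM)."""
    # Plan: a hard-coded list of steps; each "op" turns the previous value into a new one
    plan = [
        {"description": "Calculate parentheses (15 + 5)", "op": lambda _: 15 + 5, "status": "Pending"},
        {"description": "Multiply result by 3", "op": lambda x: x * 3, "status": "Pending"},
        {"description": "Divide result by 2", "op": lambda x: x / 2, "status": "Pending"},
    ]
    history = []  # the "case file": one note per completed step
    value = None

    while True:
        pending = [step for step in plan if step["status"] == "Pending"]
        if not pending:  # nothing left to do: the problem is solved
            break
        step = pending[0]  # Execute: only the first pending step
        result = step["op"](value)
        # Evaluate: a very simple self-check that the step produced a usable number
        ok = isinstance(result, (int, float)) and math.isfinite(result)
        # Update: record the result and mark the step in the plan
        step["status"] = "Done" if ok else "Failed"
        step["result"] = result
        history.append(f"{step['description']} -> {result}")
        if not ok:  # a real system would revise the plan here instead of stopping
            break
        value = result

    return value, history


answer, steps = run_cot_loop()
for note in steps:
    print(note)
print("Answer:", answer)  # Answer: 30.0
```

Note that this toy never changes its own plan; adding, fixing, or reordering steps is exactly the part we will hand to the LLM.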

PocketFlow: The Tiny Toolkit We Need

Alright, so we understand the idea behind Chain-of-Thought – making the AI act like a detective, step-by-step. But how do we actually build this process in code without getting lost in a giant, confusing mess?

This is where PocketFlow comes in. Forget massive AI frameworks that feel like trying to learn rocket science overnight. PocketFlow is the opposite: it's a brilliantly simple tool, exactly 100 lines of Python code, designed to make building AI workflows crystal clear.

Think of PocketFlow like setting up a mini automated assembly line for our AI's thinking process:

- Shared Store (The Central Conveyor Belt): This is just a standard Python dictionary (e.g., shared = {}). It holds all the information – the problem, the plan, the history of thoughts – and carries it between steps. Every step can read from and write to this shared dictionary.

# Our 'conveyor belt' starts with the problem
shared = {"problem": "Calculate (15 + 5) * 3 / 2", "thoughts": []}

- Node (A Worker Station): This is a Python class representing a single task or stage on our assembly line. For CoT, we'll mostly use just one main worker station node. Each node has a standard way of working defined by three core methods:

class Node:
    def __init__(self):
        self.successors = {}  # action string -> next node

    def prep(self, shared):
        pass  # read what this station needs from the shared store

    def exec(self, prep_res):
        pass  # do the station's main work

    def post(self, shared, prep_res, exec_res):
        pass  # write results back and return the next action

    def _exec(self, prep_res):
        return self.exec(prep_res)  # simplified: just delegates to exec()

    def run(self, shared):
        p = self.prep(shared)
        e = self._exec(p)
        return self.post(shared, p, e)

Here's what those methods do:

  - prep: Gets the station ready by pulling necessary data from the shared store (conveyor belt).
  - exec: Performs the station's main job using the prepared data. This is where our CoT node will call the LLM.
  - post: Takes the result, updates the shared store, and signals which station should run next (or if the process should loop or end).

- Flow (The Assembly Line Manager): This is the controller that directs the shared data from one Node to the next based on the signals returned by the post method. You define the connections between stations.

class Flow(Node):
    def __init__(self, start):
        super().__init__()
        self.start = start  # the first node to run

    def _orch(self, shared, params=None):
        curr = self.start
        while curr:
            action = curr.run(shared)           # run one node, get its signal
            curr = curr.successors.get(action)  # follow that edge; None means stop

    def run(self, shared):
        return self._orch(shared)  # simplified: a Flow runs by orchestrating its nodes

Connecting nodes is super intuitive:

# Define our main thinking station
thinker_node = ChainOfThoughtNode()

# Define the connections for looping and ending
thinker_node - "continue" >> thinker_node  # Loop back on "continue"
thinker_node - "end" >> None               # Stop on "end" (or go to a final node)

# Create the flow manager, starting with our thinker node
flow = Flow(start=thinker_node)

# Run the assembly line!
flow.run(shared)
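The `node - "action" >> next_node` syntax is not built into Python; it relies on operator overloading. Below is a minimal sketch of one way it can work, assuming `__sub__` on the node and `__rshift__` on a small helper object. The helper class name `_Transition` is made up for illustration, and PocketFlow's own source may differ in the details. Because `-` binds more tightly than `>>` in Python, `a - "continue" >> b` is evaluated as `(a - "continue") >> b`.

```python
class _Transition:
    """Remembers (source node, action) until `>>` supplies the target node."""

    def __init__(self, src, action):
        self.src = src
        self.action = action

    def __rshift__(self, target):
        # Record the edge: when src returns `action`, go to `target` (None means stop)
        self.src.successors[self.action] = target
        return target


class Node:
    def __init__(self):
        self.successors = {}  # action string -> next node (or None)

    def __sub__(self, action):
        # `node - "continue"` starts an edge labeled "continue"
        if not isinstance(action, str):
            raise TypeError("action must be a string")
        return _Transition(self, action)


thinker_node = Node()
thinker_node - "continue" >> thinker_node  # parsed as (thinker_node - "continue") >> thinker_node
thinker_node - "end" >> None
# 'continue' now maps to the node itself and 'end' maps to None
print(thinker_node.successors)
```

After these two lines, routing is just a dictionary lookup: the Flow calls `successors.get(action)` with whatever string `post` returned.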

Why PocketFlow Rocks for This: Its simplicity and clear structure (Node, Flow, Shared Store) make it incredibly easy to visualize and build even sophisticated processes like our Chain-of-Thought loop, RAG systems, or complex agent behaviors, without getting bogged down in framework complexity. You see exactly how the data flows and how the logic works.

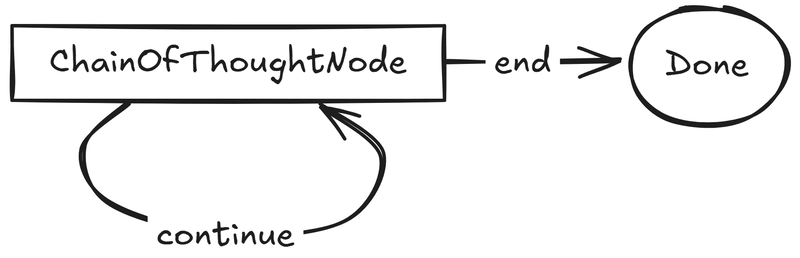

Chain-of-Thought: It's Just a Loop!

Now, here's the really neat part about implementing Chain-of-Thought with PocketFlow, something often obscured by complex frameworks:

The entire step-by-step thinking process can be handled by one single Node looping back onto itself.

Forget needing a complicated chain of different nodes for planning, executing, evaluating, etc. We can build this intelligent behavior using an incredibly simple graph structure.

That's it! This simple graph structure powers our CoT engine:

- One Core Node (ChainOfThoughtNode): This is our "thinker station". It contains all the logic for interacting with the LLM, managing the plan, and evaluating steps.

- One Loop Edge ("continue"): This arrow goes from the node back to itself. When the node finishes a thinking cycle and decides more work is needed, it sends the "continue" signal.

- One Exit Edge ("end"): This arrow points away (potentially to None or a final reporting node). When the node determines the problem is solved, it sends the "end" signal.

- PocketFlow's Role: The Flow manager simply follows these arrows. It runs the ChainOfThoughtNode, gets the signal ("continue" or "end") from its post method, and routes execution accordingly based on the connections we defined (thinker_node - "continue" >> thinker_node).
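Here is a tiny demonstration that a single node looping on itself is enough. It uses simplified, hypothetical `Node` and `Flow` classes modeled on the snippets earlier in this post, plus a `CountdownNode` that stands in for `ChainOfThoughtNode`: instead of calling an LLM, it just counts down and signals "end" at zero. The edges are set by writing to `successors` directly, which is what the `-` / `>>` syntax is assumed to do under the hood.

```python
class Node:
    def __init__(self):
        self.successors = {}  # action string -> next node (None = stop)

    def prep(self, shared):
        return None

    def exec(self, prep_res):
        return None

    def post(self, shared, prep_res, exec_res):
        return None

    def run(self, shared):
        p = self.prep(shared)
        e = self.exec(p)
        return self.post(shared, p, e)


class Flow:
    def __init__(self, start):
        self.start = start

    def run(self, shared):
        curr = self.start
        while curr:
            action = curr.run(shared)           # one full prep -> exec -> post cycle
            curr = curr.successors.get(action)  # follow the edge named by the signal


class CountdownNode(Node):
    """Stand-in for ChainOfThoughtNode: 'thinks' until no steps are left."""

    def prep(self, shared):
        return shared["steps_left"]

    def exec(self, steps_left):
        return steps_left - 1

    def post(self, shared, prep_res, exec_res):
        shared["steps_left"] = exec_res
        shared["log"].append(f"cycle done, {exec_res} left")
        return "continue" if exec_res > 0 else "end"


node = CountdownNode()
node.successors["continue"] = node  # the loop edge
node.successors["end"] = None       # the exit edge

shared = {"steps_left": 3, "log": []}
Flow(start=node).run(shared)
print(shared["log"])  # ['cycle done, 2 left', 'cycle done, 1 left', 'cycle done, 0 left']
```

The Flow never "knows" it is running a loop; it simply keeps following whichever edge the node names, and the same node happens to be on the other end.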

No complex state machines, no hidden magic – just one node whose internal logic decides whether to continue the loop or finish. The sophisticated, step-by-step reasoning emerges from the combination of:

- The intelligence packed inside the ChainOfThoughtNode (specifically, how it uses the LLM in its exec step).

- This ultra-simple looping graph structure enabled by PocketFlow.

Let's look inside that ChainOfThoughtNode to see how it makes the loop work.

Inside the Thinker Node: The Prep-Think-Update Cycle

Our ChainOfThoughtNode is where the step-by-step magic happens. Each time PocketFlow runs this node (which is every loop cycle), it executes a simple three-part process defined by its prep, exec, and post methods. Let's walk through this cycle using our example problem: Calculate (15 + 5) * 3 / 2. We'll show simplified Python code for each step first, then explain it.

1. prep: Gathering the Ingredients

First, the node needs to grab the necessary information from the shared dictionary (our central data store) to know the current state of the problem.

def prep(self, shared):
    problem = shared.get("problem")
    thoughts_history = shared.get("thoughts", [])
    # Helper to get the latest plan from the history
    current_plan = self.get_latest_plan(thoughts_history)
    return {"problem": problem, "history": thoughts_history, "plan": current_plan}

- Explanation: This prep method acts like getting ingredients before cooking. It looks into the shared dictionary and pulls out:
  - The original problem statement.
  - The thoughts history: A list containing the results (thinking, plan) from all previous cycles. It starts empty.
  - The current_plan: Usually found within the last entry in the thoughts history. If the history is empty, the plan is initially None.

- Example (Start of Loop 1): shared is {"problem": "Calculate (15 + 5) * 3 / 2", "thoughts": []}. prep returns {"problem": "...", "history": [], "plan": None}.

- Example (Start of Loop 2): shared now contains the result of Loop 1, like {"thoughts": [{"thinking": "...", "planning": [...], ...}]}. prep returns {"problem": "...", "history": [thought1], "plan": plan_from_thought1}.
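The prep code relies on a `get_latest_plan` helper that isn't shown. Here is a minimal sketch of what it might look like, written as plain functions (no `self`) so it runs on its own; the helper body is an assumption, not the cookbook's code. It walks the history backwards so the newest plan wins, and falls back to an older plan if the latest thought happens to lack one.

```python
def get_latest_plan(thoughts_history):
    """Hypothetical helper: return the newest 'planning' list in the history, or None."""
    for thought in reversed(thoughts_history):
        plan = thought.get("planning")
        if plan:
            return plan
    return None


def prep(shared):
    """Standalone version of the prep() method shown above (no `self`)."""
    history = shared.get("thoughts", [])
    return {"problem": shared.get("problem"), "history": history, "plan": get_latest_plan(history)}


# Start of Loop 1: no thoughts yet, so no plan
loop1 = prep({"problem": "Calculate (15 + 5) * 3 / 2", "thoughts": []})
print(loop1["plan"])  # None

# Start of Loop 2: the plan comes from Thought 1
thought1 = {
    "thinking": "Calculate 15 + 5 = 20.",
    "planning": [
        {"description": "Calculate 15 + 5", "status": "Done", "result": 20},
        {"description": "Multiply result by 3", "status": "Pending"},
    ],
    "next_thought_needed": True,
}
loop2 = prep({"problem": "Calculate (15 + 5) * 3 / 2", "thoughts": [thought1]})
print(loop2["plan"][0]["result"])  # 20
```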

2. exec: The Core Thinking - Prompting the LLM

This is the main event where we ask the LLM (AI Brain) to perform one cycle of Chain-of-Thought reasoning. The key is the prompt we send.

import yaml  # PyYAML, used to parse the LLM's YAML reply

def exec(self, prep_res):
    # prep_res holds the context from prep()
    problem = prep_res["problem"]
    history_text = self.format_history(prep_res["history"])  # Make history readable
    plan_text = self.format_plan(prep_res["plan"])  # Make plan readable

    # --- Construct the Prompt ---
    prompt = f"""
You are solving a problem step-by-step.

Problem: {problem}

History (Previous Steps):
{history_text}

Current Plan:
{plan_text}

Your Task Now:
1. Evaluate the LATEST step in History (if any). State if correct/incorrect.
2. Execute the NEXT 'Pending' step in the Current Plan. Show your work.
3. Update the FULL plan (mark step 'Done', add 'result', fix if needed).
4. Decide if the overall problem is solved (`next_thought_needed: true/false`).

Output ONLY in YAML format:
```yaml
thinking: |
  Evaluation:
planning:
  # The FULL updated plan list
  - description: ...
    status: ...
    result: ...
next_thought_needed:
```
"""

    # --- Call LLM and Parse ---
    llm_response_text = call_llm(prompt)  # Your function that calls the LLM API
    # extract_yaml() pulls the YAML block out of the reply; safe_load parses it
    structured_output = yaml.safe_load(self.extract_yaml(llm_response_text))
    return structured_output

- Explanation: The exec method orchestrates the LLM interaction:
  - It takes the problem, history, and plan provided by prep.
  - It formats the history and plan into readable text.
  - It builds the prompt. Notice how the prompt explicitly tells the LLM the CoT steps: Evaluate -> Execute -> Update Plan -> Decide Completion. It also specifies the required YAML output format.
  - It calls the LLM with this detailed prompt.
  - It receives the LLM's response and parses the structured YAML part into a Python dictionary (e.g., {'thinking': '...', 'planning': [...], 'next_thought_needed': True}).

- Example Prompt Built (Start of Loop 2):

You are solving a problem step-by-step.
Problem: Calculate (15 + 5) * 3 / 2
History (Previous Steps):
Thought 1:
thinking: |
  Evaluation: N/A
  Calculate 15 + 5 = 20.
planning: [...] # Plan with step 1 Done
Current Plan:
- {'description': 'Calculate 15 + 5', 'status': 'Done', 'result': 20}
- {'description': 'Multiply result by 3', 'status': 'Pending'}
# ... other steps ...
Your Task Now:
1. Evaluate the LATEST step...
2. Execute the NEXT 'Pending' step... ('Multiply result by 3')
# ... etc ...
Output ONLY in YAML format:
# ...
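The exec method also leans on three helpers that aren't shown: `extract_yaml`, `format_plan`, and `format_history`. Below is a minimal, hypothetical sketch of each, using only the standard library; the exact formatting choices are assumptions. `format_plan` renders one `- {...}` line per step to mirror the example prompt, and parsing the extracted text is still left to `yaml.safe_load`, as in exec(). The three-backtick marker is built as a string so the regex works without a literal fence in the source.

```python
import re

FENCE = "`" * 3  # three backticks


def extract_yaml(llm_text):
    """Hypothetical helper: body of the first fenced yaml block, else the whole reply."""
    pattern = re.escape(FENCE + "yaml") + r"\s*\n(.*?)" + re.escape(FENCE)
    match = re.search(pattern, llm_text, re.DOTALL)
    return (match.group(1) if match else llm_text).strip()


def format_plan(plan):
    """Hypothetical helper: one '- {...}' line per step, like the example prompt."""
    if not plan:
        return "(no plan yet)"
    return "\n".join(f"- {step}" for step in plan)


def format_history(history):
    """Hypothetical helper: number each earlier thought and show its reasoning."""
    if not history:
        return "(no previous steps)"
    return "\n".join(
        f"Thought {i}:\n{thought.get('thinking', '').strip()}"
        for i, thought in enumerate(history, start=1)
    )


reply = (
    "Here is my step:\n"
    + FENCE + "yaml\n"
    + "thinking: |\n  Evaluation: N/A\n  Calculate 15 + 5 = 20.\n"
    + "next_thought_needed: true\n"
    + FENCE + "\n"
)
print(extract_yaml(reply))
print(format_plan([{"description": "Calculate 15 + 5", "status": "Done", "result": 20}]))
```

Falling back to the whole reply when no fenced block is found is a forgiving choice; a stricter version could raise an error instead and let the node retry.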

3. post: Saving Progress & Signaling the Loop

After the exec step gets the LLM's response, post saves the progress and tells PocketFlow whether to continue looping or stop.

def post(self, shared, prep_res, exec_res):
    # exec_res is the structured dict from exec(), like
    # {'thinking': ..., 'planning': ..., 'next_thought_needed': ...}
    if "thoughts" not in shared:
        shared["thoughts"] = []
    shared["thoughts"].append(exec_res)  # Add the latest thought cycle results to history

    # Decide the signal based on the LLM's decision
    if exec_res.get("next_thought_needed", True):
        signal = "continue"
    else:
        # Optional: extract the final answer for convenience
        shared["final_answer"] = self.find_final_result(exec_res.get("planning", []))
        signal = "end"

    return signal  # Return "continue" or "end" to PocketFlow

- Explanation: The post method is the cleanup and signaling step:
  - It takes the dictionary returned by exec (containing the LLM's latest thinking, planning, and next_thought_needed flag).
  - It appends this entire dictionary to the thoughts list in the shared dictionary, building our step-by-step record.
  - It checks the next_thought_needed flag.
  - It returns the string "continue" if more steps are needed, or "end" if the LLM indicated the plan is complete.

- Example (End of Loop 2): exec_res contains {'next_thought_needed': True, ...}. post appends this to shared['thoughts'] and returns "continue".

- Example (End of Final Loop): exec_res contains {'next_thought_needed': False, ...}. post appends this, maybe saves the final answer, and returns "end".
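The `find_final_result` helper used in post isn't shown either. Here is one hypothetical way to write it, together with a standalone version of post's logic (no `self`) so the pair can be run directly. The rule "take the result of the last Done step that has one" is an assumption that fits the plans in this tutorial.

```python
def find_final_result(planning):
    """Hypothetical helper: result of the last 'Done' step that recorded one, else None."""
    for step in reversed(planning):
        if step.get("status") == "Done" and "result" in step:
            return step["result"]
    return None


def post(shared, exec_res):
    """Standalone version of post(): save the thought, then pick the next action."""
    shared.setdefault("thoughts", []).append(exec_res)
    # Defaulting to True keeps looping if the LLM forgets the flag
    if exec_res.get("next_thought_needed", True):
        return "continue"
    shared["final_answer"] = find_final_result(exec_res.get("planning", []))
    return "end"


shared = {"problem": "Calculate (15 + 5) * 3 / 2", "thoughts": []}
final_thought = {
    "thinking": "All calculation steps are done. The final result is 30.",
    "planning": [
        {"description": "Divide result by 2", "status": "Done", "result": 30},
        {"description": "State final answer", "status": "Done", "result": 30},
    ],
    "next_thought_needed": False,
}
print(post(shared, final_thought), shared["final_answer"])  # end 30
```

One consequence of the `True` default: a model that never sets `next_thought_needed: false` would loop forever, so in practice you may also want to stop after a fixed number of cycles.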

Making it Loop: The PocketFlow Flow

So, the ChainOfThoughtNode completes one cycle (prep -> exec -> post) and returns a signal ("continue" or "end"). But how does the looping actually happen? That's managed by the PocketFlow Flow object, which acts like our assembly line manager.

Here's how we set it up in code:

# 1. Create an instance of our thinker node
thinker_node = ChainOfThoughtNode()

# 2. Define the connections (the workflow rules)
# If the node signals "continue", loop back to itself
thinker_node - "continue" >> thinker_node
# If the node signals "end", stop the flow (or go to a final node)
thinker_node - "end" >> None

# 3. Create the Flow manager, telling it where to start
cot_flow = Flow(start=thinker_node)

# Now we can run the process
# Remember 'shared' holds our initial problem and accumulates thoughts
shared = {"problem": "Calculate (15 + 5) * 3 / 2", "thoughts": []}
cot_flow.run(shared)

# After run() finishes, shared["final_answer"] (if set in post)
# and shared["thoughts"] will contain the full process history.

This combination of the node's internal logic (producing the signal in post) and the Flow's simple routing based on those signals creates the powerful Chain-of-Thought loop.

See It In Action: Solving a Problem Step-by-Step

Let's watch our Chain-of-Thought loop tackle a problem. For illustration, we'll use a simple multi-step arithmetic calculation: Calculate (15 + 5) * 3 / 2.

Note: While this example is simple, this exact looping structure can handle much more complex reasoning, including problems like the Jane Street die-rolling probability puzzle often used in quant interviews. To see the detailed output for that challenging problem, check out the Chain-of-Thought Cookbook example on GitHub.

Here’s a summary of what happens inside the loop when the Flow runs, focusing on the LLM's output and highlighting the plan changes:

Loop 1 / Thought 1: Handling the Parentheses

- LLM Task: Create initial plan, execute step 1.

- LLM Output (YAML, full plan shown):

thinking: |
  Evaluation: N/A (First step)
  Problem is (15 + 5) * 3 / 2. Handle parentheses first.
  Calculate 15 + 5 = 20.
planning:
  - {'description': 'Calculate parentheses (15 + 5)', 'status': 'Done', 'result': 20}  # <-- Step Executed & Updated
  - {'description': 'Multiply result by 3', 'status': 'Pending'}
  - {'description': 'Divide result by 2', 'status': 'Pending'}
  - {'description': 'State final answer', 'status': 'Pending'}
next_thought_needed: true

- post Result: Saves Thought 1. Returns "continue".

Loop 2 / Thought 2: Multiplication

- LLM Task: Evaluate Thought 1, execute step 2.

- LLM Output (YAML, full plan shown):

thinking: |
  Evaluation: Correct. Addition 15 + 5 = 20 was right.
  Next: Multiply previous result (20) by 3.
  Calculate 20 * 3 = 60.
planning:
  - {'description': 'Calculate parentheses (15 + 5)', 'status': 'Done', 'result': 20}
  - {'description': 'Multiply result by 3', 'status': 'Done', 'result': 60}  # <-- Step Executed & Updated
  - {'description': 'Divide result by 2', 'status': 'Pending'}
  - {'description': 'State final answer', 'status': 'Pending'}
next_thought_needed: true

- post Result: Saves Thought 2. Returns "continue".

Loop 3 / Thought 3: Division

- LLM Task: Evaluate Thought 2, execute step 3.

- LLM Output (YAML, full plan shown):

thinking: |
  Evaluation: Correct. Multiplication 20 * 3 = 60 was right.
  Next: Divide previous result (60) by 2.
  Calculate 60 / 2 = 30.
planning:
  - {'description': 'Calculate parentheses (15 + 5)', 'status': 'Done', 'result': 20}
  - {'description': 'Multiply result by 3', 'status': 'Done', 'result': 60}
  - {'description': 'Divide result by 2', 'status': 'Done', 'result': 30}  # <-- Step Executed & Updated
  - {'description': 'State final answer', 'status': 'Pending'}
next_thought_needed: true

- post Result: Saves Thought 3. Returns "continue".

Loop 4 / Thought 4: Conclusion

- LLM Task: Evaluate Thought 3, execute step 4 (Conclusion).

- LLM Output (YAML, full plan shown):

thinking: |
  Evaluation: Correct. Division 60 / 2 = 30 was right.
  All calculation steps are done. The final result is 30.
planning:
  - {'description': 'Calculate parentheses (15 + 5)', 'status': 'Done', 'result': 20}
  - {'description': 'Multiply result by 3', 'status': 'Done', 'result': 60}
  - {'description': 'Divide result by 2', 'status': 'Done', 'result': 30}
  - {'description': 'State final answer', 'status': 'Done', 'result': 30}  # <-- Step Executed & Updated
next_thought_needed: false

- post Result: Saves Thought 4. Saves 30 to shared['final_answer']. Returns "end". The Flow stops.
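To see the whole loop run end to end without an API key, here is a self-contained sketch that replays the walkthrough above. The LLM is replaced by a hypothetical `scripted_llm` function that returns already-parsed dictionaries mirroring the four YAML outputs, so prompt building and YAML parsing are skipped. `Node`, `Flow`, and the `successors` wiring are simplified stand-ins for PocketFlow, not its actual source.

```python
# Hypothetical scripted "LLM": after cycle k (0-based), steps 0..k are Done.
STEPS = ["Calculate parentheses (15 + 5)", "Multiply result by 3",
         "Divide result by 2", "State final answer"]
RESULTS = [20, 60, 30, 30]


def scripted_llm(cycle):
    planning = []
    for i, description in enumerate(STEPS):
        if i <= cycle:
            planning.append({"description": description, "status": "Done", "result": RESULTS[i]})
        else:
            planning.append({"description": description, "status": "Pending"})
    return {
        "thinking": f"Executed: {STEPS[cycle]}",
        "planning": planning,
        "next_thought_needed": cycle < len(STEPS) - 1,  # false only after the last step
    }


class Node:
    def __init__(self):
        self.successors = {}  # action string -> next node (None = stop)

    def run(self, shared):
        p = self.prep(shared)
        e = self.exec(p)
        return self.post(shared, p, e)


class Flow:
    def __init__(self, start):
        self.start = start

    def run(self, shared):
        curr = self.start
        while curr:
            action = curr.run(shared)
            curr = curr.successors.get(action)


class ChainOfThoughtNode(Node):
    def prep(self, shared):
        history = shared.get("thoughts", [])
        plan = history[-1]["planning"] if history else None
        return {"problem": shared["problem"], "history": history, "plan": plan}

    def exec(self, prep_res):
        # A real node would build the prompt from prep_res and call the LLM here.
        return scripted_llm(cycle=len(prep_res["history"]))

    def post(self, shared, prep_res, exec_res):
        shared.setdefault("thoughts", []).append(exec_res)
        if exec_res.get("next_thought_needed", True):
            return "continue"
        done = [s for s in exec_res["planning"] if s["status"] == "Done" and "result" in s]
        shared["final_answer"] = done[-1]["result"] if done else None
        return "end"


thinker_node = ChainOfThoughtNode()
thinker_node.successors["continue"] = thinker_node  # loop edge
thinker_node.successors["end"] = None               # exit edge

shared = {"problem": "Calculate (15 + 5) * 3 / 2", "thoughts": []}
Flow(start=thinker_node).run(shared)
for i, thought in enumerate(shared["thoughts"], start=1):
    print(f"Thought {i}: {thought['thinking']}")
print("Final answer:", shared["final_answer"])  # Final answer: 30
```

Swapping `scripted_llm` for a real prompt-plus-`call_llm` step is the only change needed to turn this replay into a live run; the loop mechanics stay the same.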

Beyond Our Loop: When AI Learns How to Think

The method we built orchestrates thinking from the outside. However, some cutting-edge AI models are being developed to handle complex reasoning more autonomously, essentially building the "thinking" process internally. Examples include models explicitly trained for reasoning, such as OpenAI's o1, DeepSeek-R1, Google's Gemini 2.5 Pro, and Anthropic's Claude 3.7.

A key concept powering some of these advanced models is the powerful synergy between step-by-step Thinking and automated Learning (like Reinforcement Learning). Think about learning a complex skill yourself – like mastering a new board game strategy or solving a tough math problem. You learn best not just by trying random things, but by:

- Thinking through your steps and plan.

- Seeing the outcome.

- Reflecting on which parts of your thinking led to success or failure.

That "thinking hard" part makes the learning stick.

AI Models are Learning This Too (e.g., DeepSeek-R1's Approach):

- Generate Thinking: The AI attempts to solve a problem by generating its internal reasoning or "thinking" steps.

- Produce Answer: Based on its thinking, it comes up with a final answer.

- Check Outcome: An automated system checks if the final answer is actually correct.

- Get Reward: If the answer is correct, the AI gets a positive "reward" signal.

- Learn & Improve: This reward signal teaches the AI! It updates its internal parameters to make it more likely to use the successful thinking patterns again next time.

This cycle of think -> check answer -> get reward -> learn helps the AI become genuinely better at reasoning over time. (For the nitty-gritty details on how DeepSeek-R1 does this with techniques like GRPO, check out their research paper!)
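The "Check Outcome" and "Get Reward" steps are simple enough to sketch. This is a toy illustration, not DeepSeek's code: `outcome_reward` is a hypothetical exact-match rule, and `group_relative_advantages` applies the group-normalization idea behind GRPO, where several attempts at the same question are sampled and each attempt's reward is compared with the group's mean and standard deviation. The epsilon value and the sample answers are made up, and the "Learn & Improve" step (the actual model update) is not shown.

```python
import statistics


def outcome_reward(model_answer, reference_answer):
    """Rule-based check: 1.0 if the final answer matches the reference, else 0.0."""
    try:
        return 1.0 if abs(float(model_answer) - float(reference_answer)) < 1e-9 else 0.0
    except (TypeError, ValueError):
        return 0.0  # an answer we cannot parse earns no reward


def group_relative_advantages(rewards, eps=1e-8):
    """Compare each attempt with its group: (reward - group mean) / (group std + eps)."""
    mean = statistics.mean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


# Four sampled attempts at (15 + 5) * 3 / 2 (made-up answers for illustration)
answers = ["30", "30.0", "45", "thirty"]
rewards = [outcome_reward(a, 30) for a in answers]
advantages = group_relative_advantages(rewards)
print(rewards)                            # [1.0, 1.0, 0.0, 0.0]
print([round(a, 3) for a in advantages])  # [1.0, 1.0, -1.0, -1.0]
```

Attempts that beat their group's average get a positive advantage and are reinforced; the rest get a negative one, which is how correct final answers end up rewarding the thinking that produced them.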

Conclusion: You've Taught an AI to Think Step-by-Step!

Now you know the secret behind making AI tackle complex problems: Chain-of-Thought is just a structured thinking loop!

- Plan: Create or review the step-by-step plan.

- Evaluate: Check the result of the previous step for correctness.

- Execute: Perform the next single step in the plan.

- Update: Record the results and refine the plan based on the evaluation and execution.

- Loop (or End): Decide whether to repeat the cycle or finish.

The "thinking" – evaluating, executing, planning, and deciding – happens within the LLM, guided by the prompt we construct in our exec method. The looping itself is effortlessly handled by PocketFlow's simple graph structure and the continue / end signals from our post method. Everything else is just organizing the information!

Next time you hear about advanced AI reasoning, remember this simple Evaluate -> Execute -> Update loop. You now have the fundamental building block to understand how AI can "show its work" and solve problems piece by piece.

Armed with this knowledge, you're ready to explore more complex reasoning systems!

Want to see the full code and try the challenging Jane Street quant interview question? Check out the PocketFlow Chain-of-Thought Cookbook on GitHub!
