Build an AI-Powered Chatbot with Amazon Lex, Bedrock, S3, and RAG

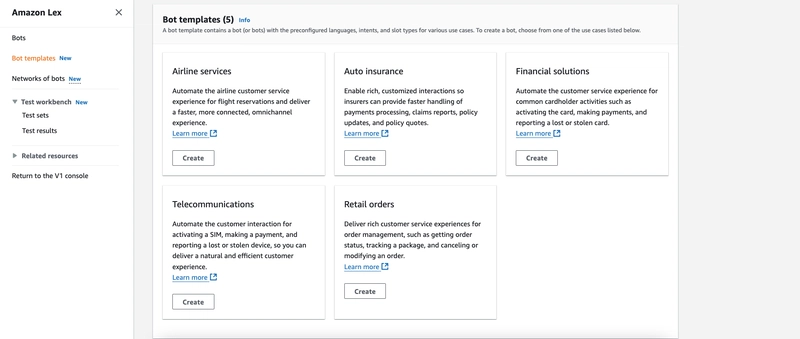

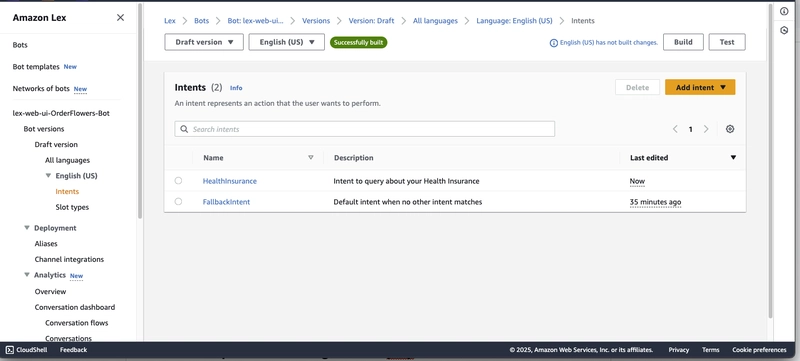

In today's fast-paced digital world, AI-powered chatbots are transforming the way businesses interact with customers. From personalized shopping assistants to 24/7 enterprise and customer support agents, conversational AI is now a critical component of modern applications. In this tutorial, we dive deep into building a smart and scalable chatbot using Amazon Lex, Amazon Bedrock, Amazon S3, and Retrieval-Augmented Generation (RAG) — combining the best of AWS services to deliver real-time, intelligent conversations.

We kick things off with a quick overview of Conversational AI, exploring why it’s a game-changer across industries. AI chatbots streamline customer interactions, reduce operational costs, and improve user satisfaction by offering instant, accurate, and human-like responses. With growing expectations for smarter digital interfaces, the need for robust, scalable chatbot architectures has never been greater.

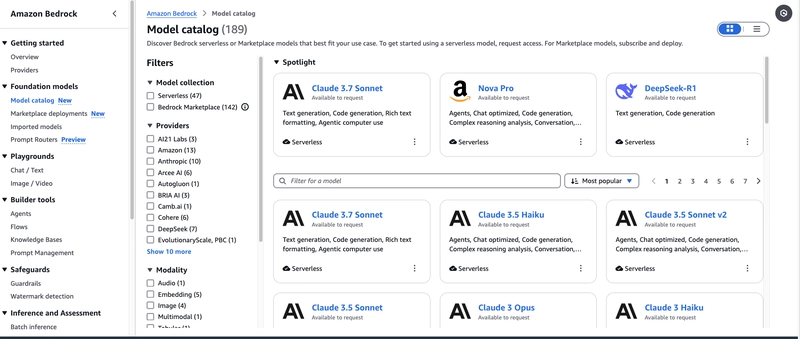

Next, we introduce Amazon Lex and Amazon Bedrock, two powerful services that make it easier than ever to build and deploy AI applications. Lex provides natural language understanding, allowing users to interact with applications using voice and text, while Bedrock gives developers easy access to foundation models from providers like Anthropic, Cohere, Meta, and Amazon itself, all without managing infrastructure.

One of the core strengths of this demo is the integration of Retrieval-Augmented Generation (RAG) with Amazon S3. Instead of relying on pre-trained knowledge alone, RAG enhances chatbot responses by dynamically retrieving relevant information from a custom knowledge base stored in S3 — like documents, FAQs, or policy files. This makes the chatbot smarter and context-aware, offering precise answers even in domain-specific use cases.
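To make the retrieve-then-generate idea concrete, here is a deliberately simplified, pure-Python sketch of the RAG pattern. It is not how Amazon Bedrock implements it. A managed Bedrock knowledge base splits your S3 documents into chunks, embeds them with an embedding model, and searches a vector store. This toy version instead scores chunks by keyword overlap. The function names and the sample policy snippets are made up for illustration.

```python
import re
from collections import Counter

# Made-up policy snippets standing in for documents stored in S3.
SAMPLE_DOCS = [
    "Health plan: covers hospital stays, surgery, and prescription drugs.",
    "Car insurance claims: report the accident promptly and upload photos.",
    "Renewal: policies renew annually and you receive a reminder before expiry.",
]


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; a crude stand-in for an embedding model."""
    return re.findall(r"[a-z0-9]+", text.lower())


def chunk(doc: str, size: int = 40) -> list[str]:
    """Split a document into chunks of at most `size` words."""
    words = doc.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def overlap(query: str, passage: str) -> int:
    """Count query terms that also appear in the passage (with multiplicity)."""
    q, p = Counter(tokenize(query)), Counter(tokenize(passage))
    return sum(min(count, p[term]) for term, count in q.items())


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Retrieval step: return up to k chunks with the highest keyword overlap."""
    ranked = sorted(chunks, key=lambda c: overlap(query, c), reverse=True)
    return [c for c in ranked[:k] if overlap(query, c) > 0]


def build_prompt(query: str, passages: list[str]) -> str:
    """Augmentation step: prepend the retrieved passages to the question."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below. If it does not contain the answer, say so.\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )
```

The prompt returned by `build_prompt` is what would be sent to a foundation model for the generation step. Replacing keyword overlap with embeddings and a vector index is the part a Bedrock knowledge base manages for you.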

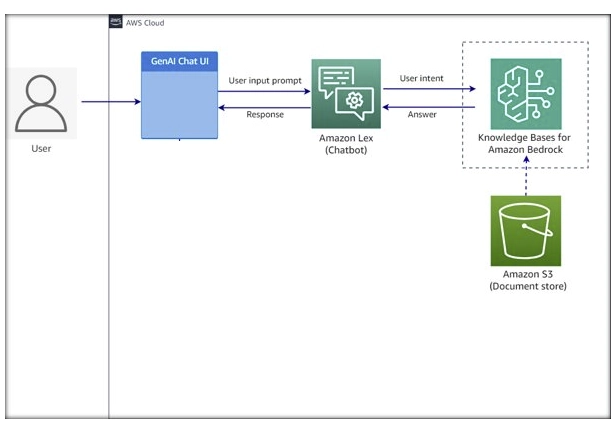

The demo walks you through the complete architecture — from user interaction with Amazon Lex to querying S3 documents using Bedrock-powered RAG, and returning contextual responses. You’ll learn how to wire these services together, manage access securely, and ensure smooth information flow across components. A detailed, step-by-step build section showcases how to configure intents, store documents in S3, and bring it all together into a working chatbot.
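One common way to wire Lex to Bedrock is a Lambda function configured as the intent's fulfillment code hook. The sketch below assumes a Lex V2 bot, a Bedrock knowledge base that already indexes your S3 bucket, and a Lambda role allowed to call the Bedrock Agent Runtime. It forwards the user's utterance to `retrieve_and_generate` and returns the answer in the Lex V2 response format. The environment variable names are hypothetical, and the optional `client` parameter exists only so the handler can be exercised without AWS.

```python
import os

# Hypothetical environment variable names; configure them on your Lambda function.
KNOWLEDGE_BASE_ID = os.environ.get("KNOWLEDGE_BASE_ID", "YOUR_KB_ID")
MODEL_ARN = os.environ.get("MODEL_ARN", "YOUR_MODEL_ARN")

_client = None


def _bedrock_client():
    """Create the Bedrock Agent Runtime client once per Lambda execution environment."""
    global _client
    if _client is None:
        import boto3  # lazy import keeps the pure helpers testable without AWS
        _client = boto3.client("bedrock-agent-runtime")
    return _client


def ask_knowledge_base(question: str, client) -> str:
    """Retrieve relevant S3-backed passages and generate a grounded answer."""
    response = client.retrieve_and_generate(
        input={"text": question},
        retrieveAndGenerateConfiguration={
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": KNOWLEDGE_BASE_ID,
                "modelArn": MODEL_ARN,
            },
        },
    )
    return response["output"]["text"]


def close_intent(intent: dict, message: str) -> dict:
    """Build a Lex V2 response that marks the intent fulfilled and replies in plain text."""
    return {
        "sessionState": {
            "dialogAction": {"type": "Close"},
            "intent": {**intent, "state": "Fulfilled"},
        },
        "messages": [{"contentType": "PlainText", "content": message}],
    }


def lambda_handler(event, context, client=None):
    """Lex V2 fulfillment code hook: answer the user's utterance with RAG."""
    question = event["inputTranscript"]
    answer = ask_knowledge_base(question, client or _bedrock_client())
    return close_intent(event["sessionState"]["intent"], answer)
```

Creating the boto3 client lazily at module scope lets warm Lambda invocations reuse it rather than building a new client per request.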

In the live demo, we show the chatbot in action, answering real-time queries based on content stored in S3. Whether it’s a customer asking about insurance policy details or an employee looking for an HR policy, the bot responds quickly with relevant, readable information — no hard-coded responses, just intelligent retrieval backed by AI.

Security and scalability are crucial in production applications, so we cover best practices including encryption, IAM roles, API rate limiting, and cost control. You’ll leave with a practical understanding of how to build a chatbot that’s not only smart but also enterprise-ready.
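Rate limiting can be enforced at several layers. As one illustration, here is an application-level token bucket that caps how often a single chat session can trigger a Bedrock call, which also bounds per-user cost. This is a hypothetical helper, not an AWS API. Lambda keeps this in-memory state only within one execution environment, so a production setup would more likely use a shared store or managed throttling (for example, API Gateway usage plans) in front of the bot.

```python
import time


class TokenBucket:
    """Allow on average `rate` requests per second, with bursts of up to `capacity`."""

    def __init__(self, rate: float, capacity: int, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)  # start full so an initial burst is allowed
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


_buckets: dict[str, TokenBucket] = {}


def allow_request(session_id: str, rate: float = 0.5, capacity: int = 3) -> bool:
    """Hypothetical per-session limiter: check this before calling Bedrock."""
    if session_id not in _buckets:
        _buckets[session_id] = TokenBucket(rate, capacity)
    return _buckets[session_id].allow()
```

The injectable `clock` makes the bucket deterministic to test; when `allow_request` returns `False`, the bot can reply with a "please try again shortly" message instead of invoking a model.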

Finally, we explore real-world use cases for this architecture. From customer support bots that fetch answers from product manuals, to internal Q&A assistants that help employees navigate company policies, the possibilities are endless.

Use Case: AI-Powered Insurance Chatbot with Amazon Lex, Bedrock, S3, and RAG

Problem Statement:

Insurance companies deal with a high volume of customer queries related to policy details, claims processing, coverage, and premium calculations. Traditional customer support teams face challenges in providing instant, accurate, and personalized responses. Customers often struggle to find relevant information in lengthy insurance policy documents.

Solution:

Build an AI-powered insurance chatbot using Amazon Lex, Amazon Bedrock, Amazon S3, and Retrieval-Augmented Generation (RAG) to provide real-time, context-aware, and personalized responses to customer queries.

Implementation Steps:

Customer Interaction via Amazon Lex

Customers interact with the chatbot through the company website, mobile app, or WhatsApp support.

Example queries:

“What does my health insurance cover?”

“How do I file a car insurance claim?”

“What is the process for policy renewal?”

“How much is my premium for this year?”
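On the client side (a website or mobile app back end, for instance), each customer message is sent to the Lex bot. A minimal sketch using the Lex V2 runtime's `recognize_text` operation via boto3 might look like this. The bot ID, alias ID, and locale are placeholders for your own bot's values, and `ask_bot` is a hypothetical helper name.

```python
def ask_bot(text: str, session_id: str, client, bot_id: str, bot_alias_id: str,
            locale_id: str = "en_US") -> str:
    """Send one user utterance to a Lex V2 bot and return its reply text."""
    response = client.recognize_text(
        botId=bot_id,
        botAliasId=bot_alias_id,
        localeId=locale_id,
        sessionId=session_id,  # reuse the same ID to keep conversation context
        text=text,
    )
    # Lex can return zero or more messages; join the text ones for display.
    return "\n".join(m["content"] for m in response.get("messages", []) if "content" in m)


# Example wiring (placeholders; requires AWS credentials):
# import boto3
# lex = boto3.client("lexv2-runtime")
# print(ask_bot("How do I file a car insurance claim?", "customer-42", lex,
#               bot_id="YOUR_BOT_ID", bot_alias_id="YOUR_ALIAS_ID"))
```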

RAG-Based Knowledge Retrieval from Amazon S3

Insurance policy documents, FAQs, and claim guidelines are stored in Amazon S3.

Retrieval-Augmented Generation (RAG) retrieves the passages most relevant to each question from the latest policy documents, so responses reflect current policy details rather than only the model's pre-trained knowledge.
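If you want more control than a single retrieve-and-generate call, for example to log which documents supported an answer, the Bedrock Agent Runtime `retrieve` operation returns the matching passages on their own. A sketch, assuming an existing knowledge base ID and a boto3 `bedrock-agent-runtime` client; `retrieve_passages` is a hypothetical helper name.

```python
def retrieve_passages(question: str, kb_id: str, client,
                      top_k: int = 5) -> list[tuple[str, str]]:
    """Return (passage_text, source_uri) pairs for the top_k most relevant chunks."""
    response = client.retrieve(
        knowledgeBaseId=kb_id,
        retrievalQuery={"text": question},
        retrievalConfiguration={"vectorSearchConfiguration": {"numberOfResults": top_k}},
    )
    passages = []
    for result in response["retrievalResults"]:
        # For S3 data sources, the location carries the source object's URI.
        uri = result.get("location", {}).get("s3Location", {}).get("uri", "unknown")
        passages.append((result["content"]["text"], uri))
    return passages


# Example wiring (placeholder ID; requires AWS credentials):
# import boto3
# runtime = boto3.client("bedrock-agent-runtime")
# for text, uri in retrieve_passages("What is the process for policy renewal?",
#                                    "YOUR_KB_ID", runtime):
#     print(uri, text[:80])
```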

AI-Powered Response Generation via Amazon Bedrock

Amazon Bedrock processes customer queries and generates human-like, contextual responses based on the retrieved information.

The chatbot simplifies complex insurance jargon into easy-to-understand answers.
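When you orchestrate retrieval and generation yourself, generation is a separate call. The sketch below uses the Bedrock Runtime `converse` API with a system prompt that asks for plain-language answers, which is one way to get the jargon-simplifying behavior described above. The prompt wording, inference settings, and the `(text, source)` passage format are assumptions, and `model_id` should be a model you have enabled in Bedrock.

```python
SYSTEM_PROMPT = (
    "You are a helpful insurance assistant. Answer only from the policy excerpts provided. "
    "Explain insurance terms in plain language, and say so if the excerpts do not answer the question."
)


def generate_answer(question: str, passages: list[tuple[str, str]], client, model_id: str) -> str:
    """Ask a Bedrock model to answer the question using the retrieved passages."""
    context = "\n\n".join(f"[{i}] {text}" for i, (text, _source) in enumerate(passages, start=1))
    response = client.converse(
        modelId=model_id,
        system=[{"text": SYSTEM_PROMPT}],
        messages=[{
            "role": "user",
            "content": [{"text": f"Policy excerpts:\n{context}\n\nQuestion: {question}"}],
        }],
        inferenceConfig={"maxTokens": 512, "temperature": 0.2},  # low temperature for factual answers
    )
    return response["output"]["message"]["content"][0]["text"]


# Example wiring (placeholder model ID; requires AWS credentials):
# import boto3
# runtime = boto3.client("bedrock-runtime")
# print(generate_answer("What does my health insurance cover?", passages, runtime, "YOUR_MODEL_ID"))
```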

Customers can also submit claims directly through the chatbot, reducing the need for phone support.
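Claim submission fits Lex's slot-filling model: a claim intent collects the details it needs, and a Lambda code hook decides whether to ask for another slot or hand the completed claim to a back-end system. Lex can also prompt for required slots on its own; the sketch below shows the code-hook route using the Lex V2 event and response shapes. The intent name, slot names, prompts, and the `submit_claim` callback are all hypothetical.

```python
# Hypothetical slots for a "FileClaim" intent, in the order they are requested.
REQUIRED_SLOTS = ["PolicyNumber", "IncidentDate", "IncidentDescription"]
PROMPTS = {
    "PolicyNumber": "What is your policy number?",
    "IncidentDate": "When did the incident happen?",
    "IncidentDescription": "Briefly, what happened?",
}


def slot_value(intent: dict, name: str):
    """Return a Lex V2 slot's interpreted value, or None if it is not filled yet."""
    slot = (intent.get("slots") or {}).get(name)
    if not slot or not slot.get("value"):
        return None
    return slot["value"].get("interpretedValue")


def reply(intent: dict, dialog_action: dict, text: str) -> dict:
    """Wrap a dialog action and a plain-text message in a Lex V2 response."""
    return {
        "sessionState": {"dialogAction": dialog_action, "intent": intent},
        "messages": [{"contentType": "PlainText", "content": text}],
    }


def claim_handler(event, context=None, submit_claim=None):
    """Dialog/fulfillment code hook for the hypothetical FileClaim intent."""
    intent = event["sessionState"]["intent"]
    for name in REQUIRED_SLOTS:
        if slot_value(intent, name) is None:
            # Ask for the first missing detail.
            return reply(intent, {"type": "ElicitSlot", "slotToElicit": name}, PROMPTS[name])
    claim = {name: slot_value(intent, name) for name in REQUIRED_SLOTS}
    # submit_claim stands in for your claims system and should return a reference.
    reference = submit_claim(claim) if submit_claim else "PENDING"
    return reply(
        {**intent, "state": "Fulfilled"},
        {"type": "Close"},
        f"Thanks, your claim has been submitted. Reference: {reference}.",
    )
```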

If you're looking to build AI-powered chatbots that go beyond generic responses and offer real value through contextual intelligence, this demo is your blueprint.
