AWS Glue Interview Questions (and Answers) [Updated 2025]


![AWS Glue Interview Questions (and Answers) [Updated 2025]](https://media2.dev.to/dynamic/image/width%3D1000,height%3D500,fit%3Dcover,gravity%3Dauto,format%3Dauto/https:%2F%2Fdev-to-uploads.s3.amazonaws.com%2Fuploads%2Farticles%2F5wg5cxmzut1lp2lneogs.png)

Navigating the technical interview process for data engineering, ETL development, or cloud architect roles on AWS? You absolutely need to be prepared for AWS Glue interview questions. As AWS's premier serverless data integration service, Glue is fundamental for building scalable data pipelines, data lakes, and enabling analytics. Interviewers use AWS Glue interview questions to assess your grasp of its architecture, components, practical applications, and how it integrates within the broader AWS ecosystem.

Feeling the pressure? You've come to the right place. This guide compiles an extensive set of AWS Glue interview questions, from foundational concepts to advanced troubleshooting and design patterns. We cover the Glue Data Catalog, Crawlers, ETL Jobs (Spark & Python Shell), DynamicFrames, Glue Studio, DataBrew, workflows, security best practices, performance optimization, and more. Let's equip you to confidently tackle the AWS Glue interview questions thrown your way!

Understanding the Fundamentals: Core AWS Glue Interview Questions

Every AWS Glue interview starts with the basics. Ensure you have a strong foundation.

1. What is AWS Glue in simple terms?

A: AWS Glue is a fully managed, serverless ETL (Extract, Transform, Load) service on AWS. It helps users discover data across various sources, transform it, and prepare it for analytics, machine learning, or application development without managing any infrastructure. Think of it as the "glue" connecting different data stores and processing frameworks on AWS.

2. What are the primary components of the AWS Glue service?

The key components are:

- AWS Glue Data Catalog: A central metadata repository (Hive metastore compatible).

- AWS Glue Crawlers: Automate schema discovery and Data Catalog population.

- AWS Glue ETL Jobs: Execute data transformation scripts (using Apache Spark or Python Shell).

- AWS Glue Studio: A visual interface for creating, running, and monitoring ETL jobs.

- AWS Glue DataBrew: A visual tool for data cleaning and normalization (no code).

- AWS Glue Schema Registry: Manages and enforces schemas for streaming data.

- Workflows & Triggers: Orchestrate complex ETL pipelines.

- Development Endpoints: Interactive development environments.

- Job Bookmarks: Track processed data to avoid duplication.

- AWS Glue Data Quality: Define and evaluate data quality rules.

3. Explain why AWS Glue is considered "serverless." What are the benefits?

AWS Glue is serverless because users don't need to provision, configure, manage, or scale servers or clusters. AWS handles the underlying infrastructure, patching, and scaling automatically based on job requirements.

Benefits of AWS Glue:

- No Infrastructure Management: Focus on ETL logic, not servers.

- Automatic Scaling: AWS allocates the workers for each run, and with Glue Auto Scaling enabled (Glue 3.0 and later) the number of workers adjusts to the workload.

- Pay-per-Use: Cost-effective, as you only pay for the compute time consumed while your jobs run.

- High Availability: Managed service provides inherent availability.

4. What is a DPU (Data Processing Unit) in AWS Glue? How does it relate to job performance and cost?

A DPU (Data Processing Unit) is the unit of compute capacity for AWS Glue Spark ETL jobs. It represents a relative measure of processing power, comprising vCPU, memory, and disk resources.

- Standard Worker: 1 DPU = 4 vCPU, 16 GB RAM, 64 GB Disk.

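- G.1X Worker: 1 DPU per worker (4 vCPU, 16 GB RAM), with one executor per worker.

- G.2X Worker: 2 DPUs per worker (8 vCPU, 32 GB RAM), so each executor has twice the memory of G.1X.

- G.4X and G.8X: 4 and 8 DPUs per worker (16 vCPU/64 GB RAM and 32 vCPU/128 GB RAM). Memory per DPU stays the same; what grows is the memory and CPU available to each executor.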

- Python Shell Jobs: Use fractional DPUs (either 1/16 DPU or 1 DPU).

- Performance & Cost: More DPUs generally mean faster job execution but higher cost. Choosing the right number and type of DPUs is key for balancing performance and budget.
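The cost side of this trade-off is simple arithmetic, sketched below. The worker-to-DPU mapping follows the AWS worker-type documentation; the price of 0.44 USD per DPU-hour is an assumed placeholder (rates vary by region and over time), and minimum billing durations are ignored.

```python
# DPUs contributed by one worker of each type.
DPU_PER_WORKER = {"G.1X": 1, "G.2X": 2, "G.4X": 4, "G.8X": 8}


def estimate_job_cost(worker_type, num_workers, runtime_minutes,
                      price_per_dpu_hour=0.44):
    """Estimate the cost in USD of one Spark job run.

    price_per_dpu_hour is an assumed placeholder, not an official rate.
    """
    dpus = DPU_PER_WORKER[worker_type] * num_workers
    dpu_hours = dpus * runtime_minutes / 60
    return round(dpu_hours * price_per_dpu_hour, 4)


# 10 DPUs for 30 minutes versus 20 DPUs for 15 minutes: same DPU-hours.
small = estimate_job_cost("G.1X", 10, 30)
large = estimate_job_cost("G.2X", 10, 15)
print(small, large)
```

Under these assumptions, doubling capacity costs nothing extra only if it actually halves the runtime, which is why measuring a job at two sizes is usually more informative than guessing.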

5. What business problems does AWS Glue aim to solve?

Glue addresses challenges like:

- The complexity and cost of setting up and managing traditional ETL infrastructure.

- The time-consuming process of discovering, cataloging, and understanding data across disparate sources.

- Scaling ETL workloads efficiently.

- Integrating data from various AWS services (S3, RDS, Redshift) and on-premises sources.

- Enabling both code-based and visual ETL development.

AWS Glue Data Catalog Interview Questions

The Data Catalog is central – expect detailed questions.

1. Describe the AWS Glue Data Catalog and its purpose.

The AWS Glue Data Catalog is a fully managed, persistent metadata repository. It acts as a central index for all your data assets, regardless of their physical location (S3, RDS, Redshift, DynamoDB, etc.). It stores table definitions, schemas, partition information, data locations, and other attributes. Its primary purpose is to make data discoverable, queryable, and usable by various AWS analytics services like AWS Glue ETL jobs, Amazon Athena, Amazon Redshift Spectrum, Amazon EMR, and AWS Lake Formation. It's compatible with the Apache Hive metastore API.

2. What is the difference between a Database and a Table in the Glue Data Catalog?

- Database: A logical grouping or namespace for tables within the Data Catalog. It helps organize related table definitions but doesn't represent a physical database instance.

- Table: Represents the metadata definition for a specific dataset. It includes the schema (column names, types), data location (e.g., S3 path), data format (CSV, JSON, Parquet), partition keys, and other properties.

3. How is partitioning information stored and used in the Glue Data Catalog?

When a table is partitioned (e.g., by date on S3 like s3://bucket/data/year=2024/month=03/day=15/), the partition keys (year, month, day) are defined in the table metadata. The actual partition values and their corresponding S3 locations are stored as separate partition metadata entries linked to the table. Services like Athena and Glue ETL jobs use this information for partition pruning – scanning only relevant partitions based on query filters (e.g., WHERE year=2024 AND month=03), drastically improving performance and reducing costs.
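To make partition pruning concrete, here is a small pure-Python sketch of the idea. It is only an illustration of the concept, not how Athena or Glue implement it, and the bucket paths are hypothetical.

```python
def parse_partitions(s3_path):
    """Extract Hive-style key=value segments from an S3 path."""
    parts = {}
    for segment in s3_path.rstrip("/").split("/"):
        if "=" in segment:
            key, value = segment.split("=", 1)
            parts[key] = value
    return parts


def prune(paths, **wanted):
    """Keep only paths whose partition values match every requested filter."""
    return [
        p for p in paths
        if all(parse_partitions(p).get(k) == v for k, v in wanted.items())
    ]


paths = [
    "s3://bucket/data/year=2024/month=03/day=15/",
    "s3://bucket/data/year=2024/month=03/day=16/",
    "s3://bucket/data/year=2024/month=04/day=01/",
    "s3://bucket/data/year=2023/month=03/day=15/",
]

# Equivalent in spirit to WHERE year=2024 AND month=03
selected = prune(paths, year="2024", month="03")
print(selected)
```

Only the matching prefixes are listed and read; the other partitions are never scanned, which is where the performance and cost savings come from.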

4. Can the Glue Data Catalog be used by services other than Glue ETL? Give examples.

Absolutely. The Data Catalog is designed for broad integration:

- Amazon Athena: Uses the Data Catalog to run interactive SQL queries directly on data in S3.

- Amazon Redshift Spectrum: Enables Redshift queries to access data in S3 via tables defined in the Data Catalog.

- Amazon EMR: EMR clusters can use the Glue Data Catalog as an external Hive metastore.

- AWS Lake Formation: Leverages the Data Catalog to manage fine-grained permissions on data lakes.

- Amazon SageMaker Data Wrangler: Can use the Data Catalog to discover and import datasets.

5. How does the Glue Data Catalog handle schema evolution? What are the best practices?

The Catalog stores the current schema. Handling evolution involves:

- Crawler Configuration: Set crawler options to update table definitions or create new ones upon detecting schema changes.

- ETL Job Logic: Write resilient ETL scripts (using DynamicFrames' flexibility or error handling in Spark) to accommodate schema drift (new/missing columns, type changes).

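- Schema Versioning: The Data Catalog keeps earlier versions of a table definition when the table is updated (retrievable through the GetTableVersions API), so you can inspect or compare previous schemas. Rolling back is a manual step, and Lake Formation's governance features can add control over who may change table definitions.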

- Glue Schema Registry: Use for streaming data sources to enforce compatibility rules (BACKWARD, FORWARD, FULL, NONE).

- Best Practice: Design for evolution. Use flexible formats like Parquet. Configure crawlers appropriately. Write robust ETL code. Use the Schema Registry for streams.

AWS Glue Crawlers Interview Questions

Crawlers automate metadata management – understand their function and limitations.

1. What is the role of an AWS Glue Crawler?

An AWS Glue Crawler connects to a specified data store (S3, JDBC, DynamoDB, etc.), scans the data, automatically infers the schema and format, and then creates or updates table definitions (metadata) in the AWS Glue Data Catalog. They automate the often tedious task of cataloging data assets.

2. List some common data sources supported by Glue Crawlers.

- Amazon S3 (CSV, JSON, Parquet, ORC, Avro, Grok logs, XML, etc.)

- Amazon RDS (MySQL, PostgreSQL, Oracle, SQL Server, MariaDB, Aurora)

- Amazon Redshift

- Amazon DynamoDB

- JDBC-accessible databases (requires network connectivity, often via Glue Connections)

- MongoDB Atlas & Amazon DocumentDB

- Delta Lake tables (on S3)

- Apache Hudi tables (on S3)

- Apache Iceberg tables (on S3)

3. How does a Crawler handle different file formats within the same S3 path?

By default, a crawler assumes a consistent format within the path it's crawling to define a single table. If multiple formats exist (e.g., CSV and JSON files mixed), it might:

- Pick one format based on its classifiers and sampling.

- Fail to create a coherent table definition.

- Best Practice: Organize data by format in separate S3 prefixes and potentially run separate crawlers or use include/exclude patterns carefully.

4. What are Classifiers in AWS Glue? Differentiate between built-in and custom classifiers.

Classifiers determine the data format and schema.

- Built-in Classifiers: Pre-defined logic for common formats (CSV, JSON, Parquet, ORC, Avro, web logs, etc.). Glue uses these by default.

- Custom Classifiers: User-defined logic for formats not covered by built-in ones. You create them using:

- Grok patterns: For line-based log files or unstructured text.

- XML: Using specific XML tags to define structure.

- JSON: Using JSON paths to define structure.

- CSV: Using a custom delimiter, quote symbol, and header options.

- Priority: Custom classifiers are evaluated before built-in ones.

5. Explain the configuration options for a Crawler regarding schema updates.

When a crawler runs again and detects changes, you can configure its behavior:

- Update the table definition (add new columns): Modifies the existing table schema. Default behavior for schema additions.

- Update all new and existing partitions with metadata from the table: Ensures partitions inherit table properties.

- Ignore the change and don't update the table in the data catalog: Prevents schema updates (use with caution).

- Update the table definition (deprecate dropped columns): Marks columns no longer detected as deprecated rather than removing them.

- How should AWS Glue handle deleted objects in the data store?: Options include deleting tables/partitions, marking them as deprecated, or ignoring deletions.
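When crawlers are created through the API rather than the console, these choices map to the `SchemaChangePolicy` field of the CreateCrawler request. The sketch below only assembles the request dictionary; the crawler name, role ARN, database, and bucket are hypothetical placeholders.

```python
VALID_UPDATE = {"UPDATE_IN_DATABASE", "LOG"}
VALID_DELETE = {"DELETE_FROM_DATABASE", "DEPRECATE_IN_DATABASE", "LOG"}


def build_crawler_request(name, role_arn, database, s3_path,
                          update_behavior="UPDATE_IN_DATABASE",
                          delete_behavior="DEPRECATE_IN_DATABASE"):
    """Assemble keyword arguments for the Glue CreateCrawler API."""
    if update_behavior not in VALID_UPDATE:
        raise ValueError(f"unknown update behavior: {update_behavior}")
    if delete_behavior not in VALID_DELETE:
        raise ValueError(f"unknown delete behavior: {delete_behavior}")
    return {
        "Name": name,
        "Role": role_arn,
        "DatabaseName": database,
        "Targets": {"S3Targets": [{"Path": s3_path}]},
        "SchemaChangePolicy": {
            "UpdateBehavior": update_behavior,  # LOG = record the change, leave the table as is
            "DeleteBehavior": delete_behavior,  # how to treat objects that disappeared
        },
    }


request = build_crawler_request(
    name="example-crawler",                                      # hypothetical
    role_arn="arn:aws:iam::123456789012:role/ExampleGlueRole",   # hypothetical
    database="example_db",                                       # hypothetical
    s3_path="s3://example-bucket/csv-data/",                     # hypothetical
    update_behavior="LOG",
)
print(request["SchemaChangePolicy"])
```

With boto3 installed and credentials configured, the dictionary could be passed as `boto3.client("glue").create_crawler(**request)`. Validating the enum values locally simply surfaces typos before the API call does.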

6. How can you optimize the performance and cost of running Glue Crawlers?

- Be Specific: Use specific Include Paths instead of broad bucket paths.

- Exclude Patterns: Exclude temporary files, logs, empty directories, or irrelevant prefixes.

- Incremental Crawls: Use S3 event notifications or specified crawl paths for faster runs on frequently updated data (less effective for deep historical partitions).

- Schedule Wisely: Run crawlers only as often as needed based on data arrival frequency.

- Sampling: Crawlers sample files to infer schema. For highly diverse schemas within partitions, you might need to disable sampling (increases runtime and cost but improves accuracy). Default sampling works well for most cases.

- File Size: Many small files (<128MB) are less efficient to crawl than fewer larger files.

AWS Glue ETL Jobs: The Transformation Engine

This is where the core ETL logic resides. Expect in-depth questions on Spark, Python Shell, and DynamicFrames.

1. What are the different types of ETL jobs available in AWS Glue? When would you use each?

- Apache Spark: The most common type. Uses a distributed Spark environment (PySpark or Scala) for large-scale data processing, complex transformations, joins, and aggregations. Use When: Processing large datasets (>GBs/TBs), need distributed computing power, complex transformations, joining large tables.

- Python Shell: Runs a single Python script on a smaller instance (fractional or 1 DPU). Good for lightweight tasks, scripts relying heavily on specific Python libraries not easily usable in Spark, or orchestrating other services. Use When: Small datasets, simple transformations, tasks involving non-Spark libraries (e.g., specific API calls, file manipulation), running short utility scripts.

- Ray: (Newer) A distributed Python framework often used for ML workloads integrated with data pipelines. Use When: Scaling Python-based ML inference/preprocessing or other general-purpose Python parallel computing tasks within an ETL workflow.

2. Explain AWS Glue DynamicFrames. How do they differ from Apache Spark DataFrames?

DynamicFrames are an AWS Glue abstraction built on top of Spark DataFrames, specifically designed for ETL.

- Key Difference: A DynamicFrame represents a collection of DynamicRecords. Each DynamicRecord is self-describing, meaning schema variations between records are possible. A Spark DataFrame enforces a single, rigid schema across all rows.

- Advantages of DynamicFrames:

- Schema Flexibility: Natively handles inconsistent schemas, missing fields, or varying data types within the same logical column (using the Choice type).

- ETL-Specific Transformations: Offers built-in transformations optimized for cleaning and preparing messy data (e.g., ResolveChoice, Relationalize, Unbox, ApplyMapping).

- When to Use Which: Use DynamicFrames early in the pipeline when reading semi-structured or potentially dirty data, or when using Glue-specific transforms. Convert to Spark DataFrames (.toDF()) for complex Spark SQL operations, ML library integrations, or when performance with a fixed schema is critical. Convert back (DynamicFrame.fromDF()) if needed.

3. What is the purpose of the ResolveChoice transformation in Glue?

When a DynamicFrame column contains multiple data types (e.g., a column is sometimes a string, sometimes an integer), Glue represents this using a Choice type. The ResolveChoice transformation allows you to resolve this ambiguity by specifying how to handle the different types, for example:

- cast:<type>: Attempt to cast all values to a specific type (e.g., cast:long).

- make_struct: Create a struct containing a field for each possible type.

- project:<type>: Project all values to one of the possible types (e.g., project:string), so the output column has a single type.

- make_cols: Create separate columns for each observed type (e.g., columnA_int and columnA_string).
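The effect of two of these actions can be illustrated on plain Python dictionaries. This is an emulation for intuition only, not Glue's implementation: the records and the `price` column are made up, and the handling of uncastable values (set to `None`) is an assumption of the sketch.

```python
def resolve_choice(records, column, action):
    """Emulate the cast:long and make_cols actions on plain dict records."""
    out = []
    for rec in records:
        rec = dict(rec)  # copy so the input list is left untouched
        value = rec.get(column)
        if action == "cast:long":
            try:
                rec[column] = int(value)
            except (TypeError, ValueError):
                rec[column] = None  # assumption: uncastable values become null
        elif action == "make_cols":
            rec.pop(column, None)
            if value is not None:
                # Sketch only distinguishes strings from integers.
                type_name = "string" if isinstance(value, str) else "int"
                rec[f"{column}_{type_name}"] = value
        else:
            raise ValueError(f"unsupported action: {action}")
        out.append(rec)
    return out


records = [
    {"id": 1, "price": 10},
    {"id": 2, "price": "25"},
    {"id": 3, "price": "n/a"},
]
as_long = resolve_choice(records, "price", "cast:long")
as_cols = resolve_choice(records, "price", "make_cols")
print(as_long)
print(as_cols)
```

In an actual Glue job the equivalent call would be along the lines of `dyf.resolveChoice(specs=[("price", "cast:long")])` on a DynamicFrame.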

4. Explain the ApplyMapping transformation.

ApplyMapping is one of the most fundamental and frequently used transformations for DynamicFrames. It allows you to:

- Select specific columns from the source.

- Rename columns.

- Change the data type (cast) of columns.

- Reorder columns to match a target schema.

- It takes a mapping list [("source_col", "source_type", "target_col", "target_type")] as input.
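As a rough illustration of what such a mapping list does, the following sketch applies one to plain dictionaries. It emulates the select, rename, cast, and reorder behavior only; the column names are hypothetical and the set of supported types is deliberately tiny.

```python
# Minimal type table for the sketch; Glue supports many more types.
CASTS = {"string": str, "int": int, "long": int, "double": float}


def apply_mapping(records, mappings):
    """Emulate ApplyMapping on dict records.

    Fields not named in the mappings are dropped, and output fields
    appear in the order the mappings list them.
    """
    result = []
    for rec in records:
        row = {}
        for source_col, _source_type, target_col, target_type in mappings:
            value = rec.get(source_col)
            row[target_col] = None if value is None else CASTS[target_type](value)
        result.append(row)
    return result


mappings = [
    ("order_id", "string", "id", "long"),
    ("amt", "string", "amount", "double"),
]
rows = apply_mapping([{"order_id": "42", "amt": "19.90", "debug": "x"}], mappings)
print(rows)
```

In a Glue script the same `mappings` list would be passed to `ApplyMapping.apply(frame=dyf, mappings=mappings)`.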

5. What are AWS Glue Job Bookmarks? How do they work, and what are their limitations?

Job Bookmarks are an AWS Glue feature that helps process only new data added to a source since the last successful job run, preventing reprocessing of the same data.

- How they work: Glue tracks state information (like file modification times, etags for S3; checkpoint values for JDBC) for the sources defined in the job. When enabled, the job script uses this stored state to filter the input DynamicFrame, processing only data newer than the last recorded state. Upon successful completion, Glue updates the bookmark state.

- Use Cases: Incremental ETL from S3 (time-based partitions), databases (using checkpoint columns).

- Limitations:

- Requires source data to be immutable or reliably indicate newness (e.g., new S3 partitions/files, increasing IDs/timestamps in DB). Modifying already processed files might not trigger reprocessing unless their metadata changes appropriately.

- Transformations within the script can sometimes interfere with bookmarking logic if not handled carefully (though Glue is generally robust).

- Not suitable if you need to reprocess historical data (bookmarks must be paused or reset).

- Requires careful setup for complex JDBC sources.
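The bookmark idea can be pictured as a stored high-water mark. The toy model below is not Glue's implementation: it keeps the latest modification time in a local JSON file and saves it only after processing succeeds. In real Glue scripts, bookmarks rely on a `transformation_ctx` on each source and on calling `job.commit()` at the end of the script.

```python
import json
import os
import tempfile


def load_bookmark(path):
    """Return the stored high-water mark, or 0 if no run has completed yet."""
    if not os.path.exists(path):
        return 0
    with open(path) as fh:
        return json.load(fh)["last_modified"]


def run_incremental(files, bookmark_path, process):
    """Process only files newer than the bookmark.

    files is a list of (key, last_modified) tuples.
    """
    watermark = load_bookmark(bookmark_path)
    new_files = [f for f in files if f[1] > watermark]
    for key, _modified in new_files:
        process(key)
    if new_files:
        # State is written only after every file was processed.
        with open(bookmark_path, "w") as fh:
            json.dump({"last_modified": max(m for _k, m in new_files)}, fh)
    return [key for key, _modified in new_files]


state = os.path.join(tempfile.mkdtemp(), "bookmark.json")
handled = []
first = run_incremental([("a.csv", 100), ("b.csv", 200)], state, handled.append)
second = run_incremental(
    [("a.csv", 100), ("b.csv", 200), ("c.csv", 300)], state, handled.append
)
# A file that arrives late with an older timestamp is silently skipped.
third = run_incremental([("late.csv", 150)], state, handled.append)
print(first, second, third)
```

The third run shows the first limitation in miniature: data that does not look newer than the stored state is never picked up.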

6. How do you handle errors and bad records in an AWS Glue ETL job?

Several strategies:

- Filtering: Use Filter transformations (DynamicFrame or DataFrame) to remove rows based on quality checks (e.g., filter = Filter.apply(frame = ..., f = lambda x: x["required_field"] is not None)).

- Error Handling in Code: Use try-except blocks within UDFs or complex mapping logic.

- ResolveChoice / Unbox: Handle data type inconsistencies gracefully.

- AWS Glue Data Quality: Define data quality rules (DQDL) as part of your job. Configure actions like failing the job, stopping the job, or logging metrics/results to CloudWatch or S3 if rules fail.

- Quarantine Bad Records: Modify the script to identify bad records based on criteria and write them to a separate "error" location (e.g., a different S3 prefix) for later inspection, while allowing good records to proceed.

- Job Metrics/Logs: Monitor CloudWatch logs and Glue job metrics for errors.
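The quarantine pattern boils down to splitting records into two outputs. Here is a minimal sketch on plain dictionaries; the field names and the `_error` column are hypothetical choices for illustration.

```python
def split_records(records, required_fields):
    """Separate records into (good, bad) based on required fields.

    Bad records get an extra _error field describing what was missing.
    """
    good, bad = [], []
    for rec in records:
        missing = [f for f in required_fields if rec.get(f) in (None, "")]
        if missing:
            bad.append({**rec, "_error": "missing: " + ", ".join(missing)})
        else:
            good.append(rec)
    return good, bad


records = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": ""},
    {"id": None, "email": "c@example.com"},
]
good, bad = split_records(records, ["id", "email"])
print(len(good), len(bad))
```

In a Glue job the same split could be expressed as two `Filter.apply` calls with complementary predicates, each result written to its own S3 prefix.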

7. How can you pass parameters (e.g., S3 input/output paths, date filters) into a Glue job script?

Use Job Parameters. Define key-value pairs in the Glue Job configuration (Console, CLI, CloudFormation). Inside the PySpark script, use the getResolvedOptions function from awsglue.utils:

```python
import sys

from awsglue.context import GlueContext
from awsglue.utils import getResolvedOptions
from pyspark.context import SparkContext

# Resolve the job parameters defined in the job configuration
args = getResolvedOptions(sys.argv, ['JOB_NAME', 'S3_INPUT_PATH', 'S3_OUTPUT_PATH', 'PROCESS_DATE'])

print(f"Processing data from: {args['S3_INPUT_PATH']}")
print(f"Writing data to: {args['S3_OUTPUT_PATH']}")
process_date = args['PROCESS_DATE']

# Use parameters in your script logic
glueContext = GlueContext(SparkContext.getOrCreate())
input_dyf = glueContext.create_dynamic_frame.from_options(
    connection_type="s3",
    connection_options={"paths": [args['S3_INPUT_PATH']]},
    format="parquet",
)

# ... rest of script
```
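On the calling side, job arguments are supplied with a leading `--` on each key, while `getResolvedOptions` takes the names without it. A small helper, sketched here with hypothetical bucket names and job name, makes that convention explicit:

```python
def to_job_arguments(params):
    """Prefix each key with '--', the form Glue expects for job arguments."""
    return {f"--{key}": str(value) for key, value in params.items()}


arguments = to_job_arguments({
    "S3_INPUT_PATH": "s3://example-input/raw/",       # hypothetical bucket
    "S3_OUTPUT_PATH": "s3://example-output/clean/",   # hypothetical bucket
    "PROCESS_DATE": "2024-03-15",
})
print(arguments)

# With boto3 available, the dictionary could be passed as:
# boto3.client("glue").start_job_run(JobName="example-job", Arguments=arguments)
```

Values are converted to strings because job arguments are passed as string key-value pairs.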

8. When should you use a Glue Development Endpoint?

Use a Development Endpoint for interactive development and debugging of Glue ETL scripts. It provisions a network environment (connected to your VPC, Data Catalog, S3) where you can connect a notebook (Zeppelin, SageMaker) or use SSH. This allows you to run script snippets, inspect DynamicFrames/DataFrames, test transformations, and iterate much faster than repeatedly running full jobs. Be ready to mention that development endpoints only support older Glue versions and that AWS now recommends Glue interactive sessions for this kind of work.

9. Can you use external Python or JAR libraries in your Glue jobs? How?

Yes.

Python (PySpark/Python Shell): Provide .zip or .whl files containing your pure Python libraries or packages with C dependencies (ensure compatibility with Glue's environment - Amazon Linux 2, specific Python/library versions). Specify the S3 paths in the job's Python library path (--extra-py-files job parameter). For packages installable via pip, you can use the --additional-python-modules parameter (e.g., s3fs==2023.12.2,pyarrow==14.0).

Scala/Java (Spark): Provide .jar files containing your compiled code and dependencies. Specify the S3 paths in the job's Dependent JARs path (--extra-jars job parameter).

Best Practice: Package dependencies carefully. Test compatibility in a Development Endpoint. Use --additional-python-modules for pip-installable packages where possible as it's often simpler.

AWS Glue Studio & DataBrew Questions

Understand the visual tools and their place in the ecosystem.

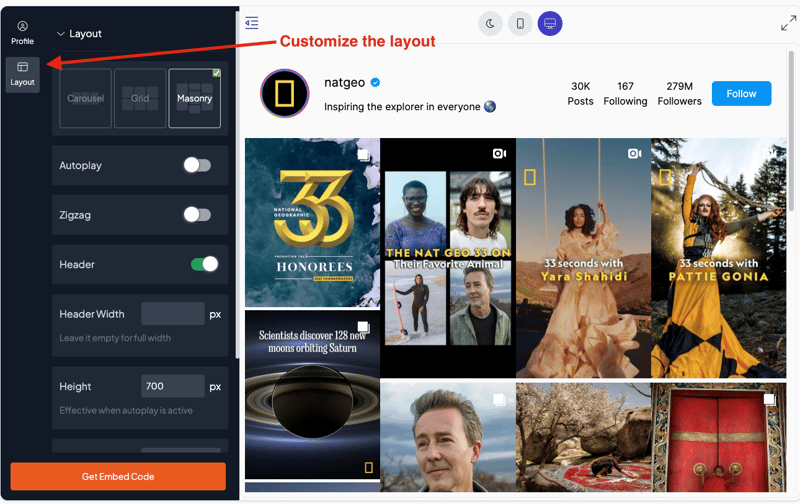

1. What is AWS Glue Studio, and what are its advantages?

A: AWS Glue Studio is a graphical user interface for creating, running, and monitoring AWS Glue ETL jobs. It provides a visual, drag-and-drop canvas.

Advantages:

Ease of Use: Lowers the barrier to entry for ETL development.

Rapid Prototyping: Quickly build standard ETL flows.

Visualization: Clearly shows the data lineage and transformation steps.

Code Generation: Automatically generates PySpark or Scala code for the visual flow.

Integration: Seamlessly integrates with sources/targets defined in the Data Catalog.

2. Can you customize the code generated by Glue Studio?

A: Yes. While Glue Studio is primarily visual, you can:

Edit the Script: Switch to the script editor view and directly modify the auto-generated PySpark/Scala code.

Custom Transform Node: Add a "Custom Transform" node in the visual editor and provide your own Python or Scala code snippet to be inserted at that point in the flow.

3. What is AWS Glue DataBrew? How does it differ from Glue Studio/ETL?

A: AWS Glue DataBrew is a visual data preparation tool aimed at data analysts and data scientists. It allows users to clean, normalize, profile, and transform data using over 250 pre-built transformations via a point-and-click interface without writing code.

Differences:

Target User: DataBrew -> Analysts/Scientists; Glue Studio/ETL -> ETL Developers/Data Engineers.

Focus: DataBrew -> Data preparation & cleaning; Glue Studio/ETL -> Broader data integration, complex transforms, large-scale processing.

Coding: DataBrew -> No-code; Glue Studio -> Visual/Low-code (generates code); Glue ETL -> Code-centric (Python/Scala).

Output: DataBrew "Recipes" typically output cleaned datasets to S3 for analysis; Glue ETL jobs are often part of larger pipelines loading warehouses, lakes, etc.

Scale: Glue ETL (Spark) is built for larger scale than typical DataBrew use cases.

4. Can Glue DataBrew use the AWS Glue Data Catalog?

A: Yes, DataBrew is integrated with the Glue Data Catalog. You can easily create DataBrew "Datasets" directly from tables defined in your Data Catalog, leveraging the metadata discovered by Crawlers or defined manually. It can also read directly from S3, Redshift, RDS, etc.

Advanced AWS Glue Interview Questions

Expect questions on building robust, secure, and efficient pipelines.

1. Explain AWS Glue Triggers and the different types available.

A: AWS Glue Triggers are used to start Glue jobs and crawlers automatically. Types include:

Scheduled: Run based on a cron-like schedule (e.g., daily at 2 AM).

On-Demand: Started manually via Console, CLI, or SDK.

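Conditional: Fires when one or more watched jobs or crawlers reach specified states. With a single condition this creates a simple dependency (e.g., start Job B after Job A succeeds); with several conditions combined by ALL or ANY logic it expresses joins (e.g., start Job D only after both Job B and Job C succeed).

EventBridge Event: Starts a workflow in response to Amazon EventBridge events, such as S3 object arrivals, optionally batching events before firing.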
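The "Job D after both Job B and Job C succeed" case can be written as a CreateTrigger request. The sketch below only builds the request dictionary; the trigger and job names are hypothetical.

```python
def build_conditional_trigger(name, watched_jobs, job_to_start, logical="AND"):
    """Assemble keyword arguments for a conditional Glue trigger.

    logical is "AND" (all conditions must hold) or "ANY" (one is enough).
    """
    if logical not in ("AND", "ANY"):
        raise ValueError(f"unknown logical operator: {logical}")
    return {
        "Name": name,
        "Type": "CONDITIONAL",
        "Predicate": {
            "Logical": logical,
            "Conditions": [
                {"LogicalOperator": "EQUALS", "JobName": job, "State": "SUCCEEDED"}
                for job in watched_jobs
            ],
        },
        "Actions": [{"JobName": job_to_start}],
        "StartOnCreation": True,
    }


trigger = build_conditional_trigger(
    name="start-job-d",                 # hypothetical names throughout
    watched_jobs=["job-b", "job-c"],
    job_to_start="job-d",
)
print(trigger["Predicate"]["Logical"], len(trigger["Predicate"]["Conditions"]))
```

With boto3 configured, this could be submitted as `boto3.client("glue").create_trigger(**trigger)`.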

2. What are AWS Glue Workflows? How do they help manage complex ETL processes?

A: AWS Glue Workflows provide a way to author, visualize, run, and monitor complex ETL pipelines involving multiple jobs, crawlers, and triggers. They allow you to define dependencies and execution logic graphically.

Benefits:

Orchestration: Manage sequences and parallel execution of ETL tasks.

Visualization: Provides a clear diagram of the entire pipeline flow.

State Management: Tracks the state of the entire workflow and its components.

Parameter Sharing: Pass parameters between entities within the workflow.

Error Handling: Define conditional paths based on success/failure states.

3. How do you implement security best practices for AWS Glue jobs?

A: Key security measures include:

IAM Roles & Least Privilege: Create specific IAM roles for Glue jobs granting only the necessary permissions (e.g., specific S3 buckets/prefixes, Data Catalog resources, KMS keys, Secrets Manager secrets). Avoid overly permissive policies.

Security Configurations: Define encryption settings within Glue:

S3 Encryption (SSE-S3 or SSE-KMS with customer-managed keys).

CloudWatch Logs Encryption.

Job Bookmark Encryption.

Network Security:

Run jobs within a VPC using Glue Connections (JDBC, MongoDB) to securely access private resources (RDS, Redshift, EC2).

Use VPC Endpoints (Gateway for S3/DynamoDB, Interface for Glue API, CloudWatch, KMS, Secrets Manager) to keep traffic within the AWS network.

Configure Security Groups and Network ACLs appropriately.

Secrets Management: Store database credentials or API keys securely in AWS Secrets Manager and reference them from Glue Connections or retrieve them within the job script using the assigned IAM role.

AWS Lake Formation: Use Lake Formation for fine-grained access control (column-level, row-level, tag-based) on Data Catalog resources, which Glue jobs can respect.

4. Your Glue Spark job is running slowly. What steps would you take to troubleshoot and optimize its performance?

A: A systematic approach:

- Monitor Metrics: Check CloudWatch Metrics for the job (CPU utilization, memory usage per executor, shuffle read/write, execution duration, driver health). Check Spark UI (if enabled or via history server) for stage/task durations, bottlenecks, data skew.

- Check Resource Allocation: Increase DPUs: Add more workers (scale out). Start with doubling and observe. Change Worker Type: If executors run out of memory (OOM errors), move to a larger worker type (G.2X, G.4X, G.8X) so that each executor gets more memory.

- Optimize Code: Filter Early: Apply Filter transformations or WHERE clauses as early as possible to reduce data volume. Select Necessary Columns: Use select() or ApplyMapping to drop unused columns early. Avoid UDFs: Prefer built-in Spark/Glue functions over Python/Scala UDFs where possible, as they are often less optimized. Efficient Joins/Aggregations: Review join strategies, check for data skew (use techniques like salting if needed), optimize aggregation logic. Caching: Cache (.cache() or .persist()) intermediate DataFrames/DynamicFrames if they are reused multiple times. Use with caution due to memory implications.

- Optimize Data Format & Layout: Use Columnar Formats: Read/write data using optimized columnar formats like Apache Parquet or ORC. Partitioning: Ensure source data (especially on S3) is effectively partitioned. Use partition predicates (push_down_predicate in Glue options or WHERE clauses on partition columns) to enable partition pruning. File Size: Avoid the "small files problem." Aim for optimally sized files (e.g., 128MB - 1GB). Use techniques like coalesce or repartition before writing, and use Glue's file grouping options (groupFiles, groupSize) when reading many small files from S3.

- Check Source/Target Performance: Ensure the source database or target system isn't the bottleneck.

- Job Bookmarks: Ensure bookmarks are enabled and working correctly for incremental loads to avoid reprocessing.

5. What is data skew in Spark, and how can it impact Glue jobs? How might you address it?

A: Data Skew occurs when data for certain keys is disproportionately large compared to others during operations like joins or group-by aggregations. This leads to a few tasks taking much longer than others, bottlenecking the entire stage and job.

Impact: Slow job performance, potential executor failures (OOM).

Addressing Skew:

Salting: Add a random prefix/suffix to skewed keys before joining/grouping, then aggregate/remove the salt later. This distributes the skewed data across more partitions/tasks.

Repartition/Coalesce: Strategically repartition data before skewed operations (though this involves a shuffle).

Use Skew-Aware Optimizations: Some frameworks have built-in skew handling (e.g., Spark 3's Adaptive Query Execution can sometimes help with join skew).

Isolate Skewed Keys: Process the highly skewed keys separately from the rest of the data if feasible.
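To make the salting idea concrete, here is a small plain-Python simulation rather than Spark code. It assumes a hypothetical dataset where one key holds 90% of the rows, uses `zlib.crc32` as a stand-in for a hash partitioner, and performs the two-stage aggregation (aggregate on the salted key, then strip the salt and combine). All names and sizes are illustrative.

```python
import random
import zlib
from collections import Counter

NUM_PARTITIONS = 8   # stand-in for the number of shuffle partitions
SALT_BUCKETS = 8     # how many pieces each key is split into


def partition_for(key: str) -> int:
    """Deterministic stand-in for a hash partitioner."""
    return zlib.crc32(key.encode("utf-8")) % NUM_PARTITIONS


def add_salt(key: str, rng: random.Random) -> str:
    """Append a random bucket number so one key spreads over several partitions."""
    return f"{key}#{rng.randrange(SALT_BUCKETS)}"


def remove_salt(salted_key: str) -> str:
    return salted_key.rsplit("#", 1)[0]


# Hypothetical skewed input: one key carries 90% of the rows.
rows = [("hot_customer", 1)] * 9000 + [(f"customer_{i}", 1) for i in range(1000)]
rng = random.Random(42)

# Without salting, every row of the hot key lands in the same partition.
plain_load = Counter(partition_for(key) for key, _ in rows)

# Stage 1: aggregate on the salted key.
salted_load = Counter()
partial_sums = Counter()
for key, amount in rows:
    salted_key = add_salt(key, rng)
    salted_load[partition_for(salted_key)] += 1
    partial_sums[salted_key] += amount

# Stage 2: strip the salt and combine the partial results.
totals = Counter()
for salted_key, subtotal in partial_sums.items():
    totals[remove_salt(salted_key)] += subtotal

print("largest partition without salt:", max(plain_load.values()))
print("largest partition with salt:   ", max(salted_load.values()))
```

The final totals are unchanged, while the largest partition shrinks because the hot key is no longer routed to a single task. In Spark the same pattern costs an extra aggregation stage, so it is worth applying only to operations that are actually skewed.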

6. How do you monitor Glue jobs? What tools would you use?

A:

AWS Glue Console: Provides job run history, status, basic metrics, links to logs, and workflow monitoring.

Amazon CloudWatch: The primary monitoring tool.

Logs: Detailed driver and executor logs are sent to CloudWatch Logs. Essential for debugging errors.

Metrics: Glue publishes various metrics (elapsed time, DPU usage, memory profile, shuffle metrics, bytes read/written, bookmark progress, Data Quality rule outcomes) to CloudWatch. Set alarms on key metrics (e.g., failure count, long duration).

AWS Cost Explorer: Track DPU-hours and associated costs.

Spark UI: When enabled for the job (viewed through the Glue console or a Spark history server), it provides detailed insights into Spark stages, tasks, execution times, shuffles, storage, and environment. Crucial for deep performance analysis.

AWS CloudTrail: Log API calls made to the Glue service for auditing and security.

AWS Glue Data Quality: View results and metrics from data quality rule evaluations.
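As a complement to CloudWatch alarms, a simple check over job run records can flag failures and unusually long runs. The sketch below assumes records shaped like the `JobRuns` entries returned by the Glue `GetJobRuns` API (fields `Id`, `JobRunState`, `ExecutionTime` in seconds); the sample records, the one-hour threshold, and the function name are hypothetical.

```python
from typing import Dict, List

# Job run states treated as failures in this sketch.
FAILED_STATES = {"FAILED", "TIMEOUT", "ERROR"}


def runs_needing_attention(job_runs: List[Dict], max_seconds: int) -> List[str]:
    """Return one message per run that failed or exceeded max_seconds."""
    flagged = []
    for run in job_runs:
        state = run.get("JobRunState")
        seconds = run.get("ExecutionTime", 0)
        if state in FAILED_STATES:
            flagged.append(f"{run['Id']}: {state}")
        elif seconds > max_seconds:
            flagged.append(f"{run['Id']}: ran {seconds}s (limit {max_seconds}s)")
    return flagged


# Hypothetical records; in practice they could come from
# boto3.client("glue").get_job_runs(JobName="...")["JobRuns"].
sample_runs = [
    {"Id": "jr_1", "JobRunState": "SUCCEEDED", "ExecutionTime": 900},
    {"Id": "jr_2", "JobRunState": "FAILED", "ExecutionTime": 120},
    {"Id": "jr_3", "JobRunState": "SUCCEEDED", "ExecutionTime": 5400},
]

flagged = runs_needing_attention(sample_runs, max_seconds=3600)
for message in flagged:
    print(message)
```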

Scenario-Based AWS Glue Interview Questions

These test your ability to apply knowledge in practical situations.

1. Describe how you would design a Glue ETL pipeline to process daily CSV files dropped into an S3 bucket, enrich them with data from an RDS database, and write the output as partitioned Parquet files back to S3.

- 1. Trigger: Use a Scheduled Trigger to run the pipeline daily, or an S3 Event Trigger (via EventBridge/Lambda) if near real-time processing is needed upon file arrival (though batching might be better).

- 2. Crawler (Optional but Recommended): Have a Glue Crawler run periodically (or triggered) on the input S3 path (s3://input-bucket/csv-data/) to keep the Glue Data Catalog updated with the CSV schema.

- 3. Glue ETL Job (Spark):

- Source 1 (S3): Read the daily CSV data from S3 using the Data Catalog table (glueContext.create_dynamic_frame.from_catalog(...)). Use Job Bookmarks (enabled) to process only new files/partitions. Apply necessary cleaning/casting using ApplyMapping.

- Source 2 (RDS): Define a Glue Connection to the RDS database. Read the enrichment data using glueContext.create_dynamic_frame.from_options(connection_type="mysql", connection_options={"useConnectionProperties": "true", "connectionName": "my-rds-connection", "dbtable": "enrichment_table"}), choosing the connection type that matches the database engine (for example "mysql" or "postgresql"). Select only needed columns.

- Transform (Join): Convert DynamicFrames to Spark DataFrames (.toDF()) if needed for easier joining. Perform a join operation between the CSV data and the RDS enrichment data based on a common key. Handle potential nulls post-join.

- Transform (Partitioning Column): Add a new column for partitioning (e.g., processing_date).

- Target (S3): Write the resulting DataFrame/DynamicFrame to the output S3 location (s3://output-bucket/parquet-data/) using the Parquet format. Specify the partitionKeys option in the sink (e.g., partitionKeys=["processing_date"]). Consider using enableUpdateCatalog and updateBehavior options in the sink to automatically update the Data Catalog with the output table definition/partitions.

- 4. Workflow (Optional): If multiple steps or dependencies exist (e.g., pre-processing job, post-processing validation), orchestrate them using an AWS Glue Workflow.

- 5. Monitoring: Set up CloudWatch Alarms for job failures or long durations.
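The join, null-handling, and partitioning steps in this design can be illustrated without a Spark cluster. The following plain-Python stand-in is only a sketch of the logic, not a Glue script: the CSV content, the `CUSTOMERS` lookup (representing the RDS enrichment table), the column names, and the function names are all hypothetical, while the output prefix and `processing_date` key come from the design above.

```python
import csv
import io
from collections import defaultdict

# Hypothetical daily CSV drop.
DAILY_CSV = """order_id,customer_id,amount
1,c1,10.50
2,c2,7.25
3,c9,3.00
"""

# Stand-in for the enrichment table read from RDS (hypothetical columns).
CUSTOMERS = {"c1": {"segment": "retail"}, "c2": {"segment": "wholesale"}}


def enrich(csv_text, customers, processing_date):
    """Left-join CSV rows to the lookup and add the partition column."""
    rows = []
    for record in csv.DictReader(io.StringIO(csv_text)):
        match = customers.get(record["customer_id"])
        rows.append({
            "order_id": int(record["order_id"]),
            "customer_id": record["customer_id"],
            "amount": float(record["amount"]),
            # Handle rows with no match instead of leaving a null.
            "segment": match["segment"] if match else "unknown",
            "processing_date": processing_date,
        })
    return rows


def group_by_partition(rows, partition_key, prefix):
    """Group rows under Hive-style key=value prefixes, as partitionKeys would."""
    layout = defaultdict(list)
    for row in rows:
        layout[f"{prefix}{partition_key}={row[partition_key]}/"].append(row)
    return dict(layout)


enriched = enrich(DAILY_CSV, CUSTOMERS, "2025-04-21")
layout = group_by_partition(enriched, "processing_date", "s3://output-bucket/parquet-data/")
for path, partition_rows in layout.items():
    print(path, len(partition_rows))
```

In the real job the join would run on Spark DataFrames and the sink would create the `processing_date=...` prefixes itself; the point here is the shape of the data at each step.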

2. When would you choose AWS Glue over Amazon EMR for an ETL task? When would EMR be a better choice?

Choose AWS Glue When:

- You prefer a serverless, fully managed experience (no cluster management).

- Workloads are event-driven or scheduled, often intermittent.

- Primary need is ETL and data integration using Spark or Python Shell.

- You want tight integration with the Glue Data Catalog, Studio, DataBrew.

- Development team is comfortable with Glue's abstractions (DynamicFrames) or wants a visual interface (Studio).

- Cost optimization for idle time is critical (pay-per-job execution).

Choose Amazon EMR When:

- You need full control over the cluster environment (specific Hadoop/Spark versions, custom bootstrap actions, instance types, OS level access).

- You need to run other Hadoop ecosystem applications (Hive, Presto, HBase, Flink, Hudi/Iceberg with deeper integrations).

- You have long-running, persistent clusters for ad-hoc queries or applications.

- You need specific instance types not available directly in Glue (e.g., GPU instances for Spark ML).

- You have existing complex EMR workflows and expertise.

- You expect potentially lower cost for very high utilization, long-running jobs compared to Glue's DPU-hour billing (requires careful cost analysis).
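The cost trade-off in the last point comes down to simple arithmetic. The sketch below compares a pay-per-run model with an always-on cluster; every price and size in it is a hypothetical placeholder chosen for illustration, not a quoted AWS rate, and `price_per_node_hour` stands for whatever all-in hourly cost applies to a cluster node.

```python
import math


def glue_monthly_cost(dpus, minutes_per_run, runs_per_month, price_per_dpu_hour):
    """Pay-per-run model: cost accrues only while jobs execute."""
    return dpus * (minutes_per_run / 60) * runs_per_month * price_per_dpu_hour


def cluster_monthly_cost(nodes, hours_provisioned, price_per_node_hour):
    """Always-on model: cost accrues for every provisioned hour."""
    return nodes * hours_provisioned * price_per_node_hour


# Placeholder inputs (assumptions, not real prices).
DPU_PRICE = 0.44
NODE_PRICE = 0.50
HOURS_IN_MONTH = 24 * 30

intermittent = glue_monthly_cost(10, 30, 30, DPU_PRICE)     # 30 minutes a day
near_constant = glue_monthly_cost(10, 1380, 30, DPU_PRICE)  # 23 hours a day
cluster = cluster_monthly_cost(5, HOURS_IN_MONTH, NODE_PRICE)

print(f"intermittent pay-per-run:  {intermittent:.2f}")
print(f"near-constant pay-per-run: {near_constant:.2f}")
print(f"always-on cluster:         {cluster:.2f}")
```

With these placeholder numbers the pay-per-run model wins for the intermittent workload and loses for the near-constant one, which is the pattern the comparison above describes. Real decisions need actual prices and measured runtimes.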

3. How does AWS Lake Formation interact with AWS Glue?

Lake Formation builds upon Glue components to provide centralized governance and fine-grained access control for data lakes:

- Data Catalog: Lake Formation uses the Glue Data Catalog as its underlying metadata repository.

- Permissions: Instead of just IAM, Lake Formation allows defining permissions (SELECT, INSERT, ALTER, etc.) on Databases, Tables, and even Columns for specific IAM users/roles or SAML/AD users.

- Credentials Vending: Glue ETL jobs (and Athena, Redshift Spectrum) can assume roles governed by Lake Formation, receiving temporary credentials scoped down by Lake Formation permissions when accessing data.

- Crawlers: Lake Formation can manage crawler roles and register data locations.

- Blueprints/Workflows: Lake Formation provides templates (Blueprints) that often use Glue workflows and jobs to ingest data.

- In essence: Glue provides the ETL engine and metadata store; Lake Formation provides the security and governance layer on top.

4. You need to process a stream of data from Kinesis Data Streams and store it in S3 partitioned by arrival time. Can Glue handle this? How?

Yes, AWS Glue supports streaming ETL jobs.

- Source: Define the Kinesis Data Stream as the source in your Glue streaming job script using glueContext.create_data_frame.from_options(...) or glueContext.create_dynamic_frame.from_options(...) with connection_type = "kinesis". Specify the stream name, starting position, etc.

- Transformations: Apply necessary transformations to the streaming DataFrame/DynamicFrame (e.g., parsing JSON, simple filtering, adding timestamps). Windowing operations are also possible.

- Target (Sink): Write each micro-batch to S3 with glueContext.write_dynamic_frame.from_options(...), typically from inside a batch function passed to glueContext.forEachBatch(...).

- Partitioning: Add columns representing year, month, day, hour based on event timestamps or processing time within your transformation logic. Specify these columns in the partitionKeys option of the S3 sink.

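- Checkpointing: Glue streaming jobs rely on Spark Structured Streaming checkpoints to track processed positions within the Kinesis stream, which provides fault tolerance. You supply the checkpoint location (typically an S3 path) in the job options.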

- Data Catalog Integration: You can configure the sink to update the Glue Data Catalog with new partitions as they are written.
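The partitioning step above amounts to deriving year, month, day, and hour values from a timestamp and using them as Hive-style path components. A minimal sketch in plain Python, assuming UTC-based partitions; the function names and the bucket prefix are hypothetical.

```python
from datetime import datetime, timezone


def arrival_partition(ts: datetime) -> dict:
    """Derive zero-padded partition values from a timezone-aware timestamp."""
    utc = ts.astimezone(timezone.utc)
    return {
        "year": f"{utc.year:04d}",
        "month": f"{utc.month:02d}",
        "day": f"{utc.day:02d}",
        "hour": f"{utc.hour:02d}",
    }


def partition_path(prefix: str, values: dict) -> str:
    """Build the key=value/ prefix that a partitioned S3 sink would produce."""
    return prefix + "".join(f"{key}={value}/" for key, value in values.items())


arrived = datetime(2025, 4, 21, 9, 5, tzinfo=timezone.utc)
values = arrival_partition(arrived)
path = partition_path("s3://output-bucket/stream/", values)
print(path)
```

In the streaming job these four values would be added as columns to each micro-batch and listed in `partitionKeys`, so the sink creates the prefixes itself. Zero-padding keeps the prefixes sortable as strings.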

5. Your Glue job fails intermittently with "ExecutorLostFailure" or OutOfMemoryError (OOM). What are common causes and solutions?

Causes:

- Insufficient Executor Memory: Default memory per executor might not be enough for your data size or transformations (e.g., large shuffles, complex UDFs, caching large datasets).

- Data Skew: One or a few tasks process significantly more data than others, overwhelming their assigned executor's memory.

- Inefficient Code: Operations like collecting large datasets to the driver, inefficient UDFs, or very wide transformations.

- Large Shuffles: Operations like groupBy, join, repartition can require significant memory for shuffling data between executors.

- Incorrect Worker Type: Using a small worker type (Standard or G.1X) when the workload needs more memory per executor (G.2X or larger).

Solutions:

- Increase DPU Count: Provides more executors and distributes work, potentially reducing memory pressure on individual executors (if not caused by skew).

- Switch to a Larger Worker Type (G.2X or above): Provides significantly more RAM per executor. Often the most direct solution for OOM.

- Optimize Code: Refactor joins/aggregations, avoid collecting large data to the driver, optimize UDFs, filter/select early.

- Address Data Skew: Implement techniques like salting (see the data skew question above).

- Increase Spark Executor Memory: (Less common in Glue, as worker types are the primary control) You can sometimes pass Spark config like --conf spark.executor.memory=... but changing Worker Type is preferred.

- Tune Shuffle Partitions: Adjust spark.sql.shuffle.partitions if default (200) is inappropriate for your data size/cluster size.

- Review Spark UI: Analyze failing stages/tasks in the Spark UI (if available) to pinpoint the exact operation causing the OOM.
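For the shuffle-partition tuning point, a rough starting value can be estimated from the shuffle volume and the number of executor cores. The helper below is a rule-of-thumb sketch, not an official formula: the 128 MB target per partition and the rounding to a multiple of the core count are assumptions, and `suggest_shuffle_partitions` is a hypothetical name.

```python
import math

DEFAULT_TARGET_BYTES = 128 * 1024 ** 2  # assumed target of about 128 MB per partition


def suggest_shuffle_partitions(shuffle_bytes, total_cores,
                               target_partition_bytes=DEFAULT_TARGET_BYTES):
    """Suggest a starting value for spark.sql.shuffle.partitions.

    Uses enough partitions to keep each near the target size, never fewer
    than the number of cores, rounded up to a multiple of the core count
    so that the last wave of tasks keeps every core busy.
    """
    by_size = math.ceil(shuffle_bytes / target_partition_bytes)
    wanted = max(by_size, total_cores)
    return math.ceil(wanted / total_cores) * total_cores


GIB = 1024 ** 3
print(suggest_shuffle_partitions(100 * GIB, total_cores=40))
print(suggest_shuffle_partitions(1 * GIB, total_cores=40))
```

The shuffle volume for a stage can be read from the Spark UI. Treat the result as a first guess and adjust it after observing task durations and spill metrics.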

Tips for Answering AWS Glue Interview Questions

- Be Specific: Don't just define a term; explain its purpose and context within Glue.

- Use Keywords: Naturally incorporate terms like "serverless," "Data Catalog," "Crawler," "ETL Job," "DynamicFrame," "DPU," "performance," "security," etc.

- Provide Examples: Illustrate concepts with brief, practical examples (e.g., S3 partitioning, join scenario).

- Structure Your Answers: Start with a concise definition, elaborate on key aspects, and mention benefits or trade-offs.

- Connect Components: Show you understand how different Glue components (Crawler -> Data Catalog -> ETL Job -> Trigger) work together.

- Acknowledge Trade-offs: Discuss pros and cons (e.g., DynamicFrames vs. DataFrames, Glue vs. EMR).

- Mention Best Practices: Incorporate security, performance, and cost optimization best practices into your answers.

- Be Honest: If you don't know an answer, admit it, but perhaps explain how you would approach finding the solution or relate it to a concept you do know.

Conclusion

Mastering AWS Glue interview questions is crucial for anyone aiming for roles involving data processing and analytics on AWS. From understanding the serverless nature and core components like the Data Catalog and Crawlers, to diving deep into ETL job development with Spark, DynamicFrames, performance tuning, and security – a thorough preparation is key.

This guide has provided an extensive list of questions and answers covering the breadth and depth of AWS Glue. By studying these AWS Glue interview questions, understanding the underlying concepts, and practicing articulating your knowledge, you'll significantly boost your confidence and increase your chances of success in your next technical interview. Good luck!
