Apple M1+ web developers are cheating. You should do that, too


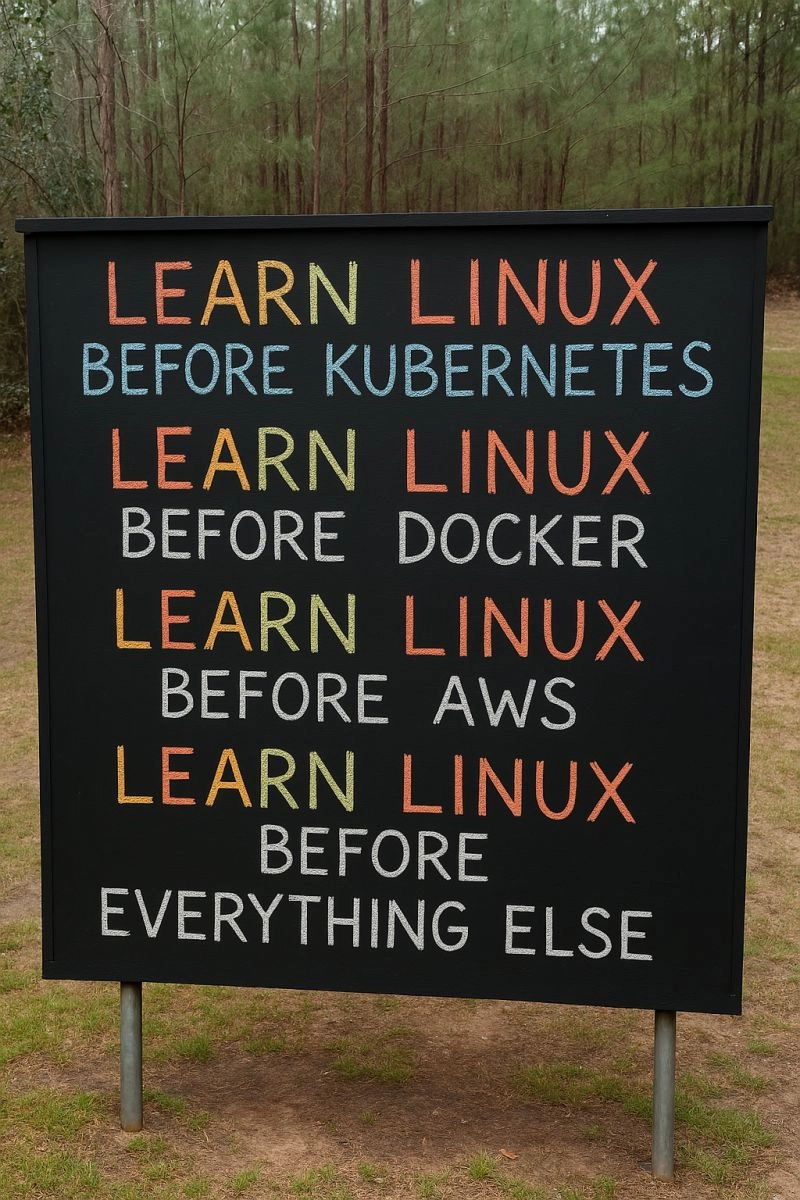

Web development in certain communities (e.g. React, Next, Angular) has nearly reached the level of bloat of Java, yet another example of humans never learning the lessons of the past.

Recently someone highlighted how developers working on an M1/M2/M3/M4 Mac can happily run the workloads required by Next.js, VSCode, React and most webapps built with them, just to name a few, without feeling much pressure, even on a machine equipped with a bare 16GiB of RAM.

On other machines, take a random Linux box, for instance, there is no way on earth you can keep more than a few Chrome tabs, VSCode and Next.js running at the same time without constantly running out of memory and having them crash catastrophically.

So, what's the deal? There must be some rational explanation to all that, right?

Apple M chips introduced something called UMA (Unified Memory Architecture), which places the RAM in the same package as the CPU and the GPU and lets both share it, so depending on the workload it can serve a graphics pipeline, crypto mining, AI or general-purpose computation.

That's OK, clever and all fine, though nothing actually new, and not enough to explain how bloated and leaking applications and webapps can work acceptably on a 16GiB machine.

Turns out, macOS employs other tricks to mitigate the situation. Most notably... memory compression!

Aha! That could actually explain a number of things.

If an app keeps leaking mostly identical objects in memory, compression may actually take you a very long way.
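To get a feel for why this matters, here is a rough sketch comparing how well repetitive data compresses against random data. It uses gzip purely as a stand-in because it is installed almost everywhere; macOS and zram use their own in-kernel compressors, and the JSON-like payload is made up for illustration.

```shell
# 1 MiB made of the same small "object" repeated over and over (hypothetical payload)
repetitive_bytes=$(awk -v obj='{"id":1,"name":"leaked object"}' \
  'BEGIN { for (i = 0; i < 32768; i++) print obj }' | gzip -c | wc -c | tr -d '[:space:]')

# 1 MiB of random bytes, which should barely compress at all
random_bytes=$(head -c 1048576 /dev/urandom | gzip -c | wc -c | tr -d '[:space:]')

echo "repetitive: 1048576 -> ${repetitive_bytes} bytes"
echo "random:     1048576 -> ${random_bytes} bytes"
```

Real leaked heaps sit somewhere between these two extremes, so treat the numbers as an upper and lower bound rather than a prediction.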

What else? Aggressive swapping is the other one.

This one used to be bad practice back in the day, when early SSDs were very sensitive to excessive writes.

Long story short

Ok, so, got the idea? Let's put it into practice on Linux, using the following:

- Memory compression

- Aggressive swapping

Memory Compression

The biggest deal if you want to firefight memory leaks is compression. zram is a kernel module that creates a compressed block device backed by RAM; used as a swap device, it is where the compression magic happens.

Let's assume zram is compiled for your kernel as a module and available for use (if not, you're just a chat away with your favourite AI to build it, enable it, etc., or drop a comment below).

For a start, you can manually switch it on with a simple sudo modprobe zram

Then, create and activate your new pseudo-swapfile:

# Create the virtual device itself

sudo zramctl /dev/zram0 --size 4G --algo zstd

# Set it up as a swapfile

sudo mkswap /dev/zram0

# Activate swapping on it

sudo swapon -p 100 /dev/zram0
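Once the device has been in use for a while, you can check how much compression is actually buying you. The sketch below assumes the device is /dev/zram0 and relies on the first two fields of /sys/block/zram0/mm_stat (original data size and compressed data size, in bytes); zram_ratio is a helper name made up for this example.

```shell
# Print original/compressed as a ratio, or "n/a" if nothing is stored yet.
# $1 = orig_data_size, $2 = compr_data_size (both in bytes)
zram_ratio() {
  LC_ALL=C awk -v o="$1" -v c="$2" \
    'BEGIN { if (c > 0) printf "%.2f\n", o / c; else print "n/a" }'
}

# Only query the device if it exists on this machine
if [ -r /sys/block/zram0/mm_stat ]; then
  read -r orig compr _ < /sys/block/zram0/mm_stat
  echo "zram0 compression ratio: $(zram_ratio "$orig" "$compr")"
fi
```

Running zramctl with no arguments shows similar figures in a table, if you prefer not to parse sysfs yourself.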

In the code above, we used zstd as a compression algorithm, which comes with a good compression efficiency at the slight expense of CPU (choice driven by how bad these memory leaking systems are).

Choose yours according to your needs. For a list of the compression algorithms available to you via zram, run cat /sys/block/zram0/comp_algorithm.
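That file lists every supported algorithm on a single line and marks the active one with square brackets. A small sketch to pull out just the active one follows; active_algo is a hypothetical helper name, and the sample line in the usage note is only an illustration of the format.

```shell
# Read a comp_algorithm line on stdin and print the bracketed (active) entry
active_algo() {
  sed -n 's/.*\[\(.*\)\].*/\1/p'
}

# Only query the device if it exists on this machine
if [ -r /sys/block/zram0/comp_algorithm ]; then
  echo "active algorithm: $(active_algo < /sys/block/zram0/comp_algorithm)"
fi
```

For example, feeding it the line "lzo lzo-rle lz4 [zstd]" prints zstd.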

To persist your changes after reboot, run the following:

# autoload the zram module on start

echo "zram" | sudo tee -a /etc/modules

# automatically create a virtual swap file in memory after the module is loaded

echo "KERNEL==\"zram0\", ATTR{comp_algorithm}=\"zstd\", ATTR{disksize}=\"4G\", ACTION==\"add\", RUN+=\"/sbin/mkswap /dev/zram0\"" | sudo tee /etc/udev/rules.d/99-zram.rules

# automatically start using this new virtual swap file after reboot, with the same priority as above

echo "/sbin/swapon -p 100 /dev/zram0" | sudo tee -a /etc/rc.local

swapon -p 100 raises the priority of this pseudo-swapfile over any other swap you may have, to make sure Linux tries to swap here first. Note that on systemd-based distributions /etc/rc.local only runs if it exists and is executable.
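To confirm that the priority took effect, you can list the active swap areas ordered by priority. This is a minimal sketch that parses /proc/swaps, whose fifth column is the priority; swap_by_priority is a name invented for this example.

```shell
# Read /proc/swaps-style input on stdin, skip the header line,
# and print "priority device" with the highest priority first
swap_by_priority() {
  awk 'NR > 1 { print $5, $1 }' | sort -rn
}

# Only read the file on systems that provide it
if [ -r /proc/swaps ]; then
  swap_by_priority < /proc/swaps
fi
```

If everything worked, /dev/zram0 should show up on the first line with priority 100, above any disk-backed swap.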

"Traditional" Swap

Linux distributions may install with either a swap partition or a swapfile out of the box. A swap partition might be more efficient, as there's no file-system layer in the middle. Whether the difference is noticeable I don't know, but a partition puts a hard limit on the swap size, whereas a swapfile can be resized or recreated at will. Also, given it's a partition, you'd always end up writing to the same region of your SSD, which might not be ideal.

A simple way to create a swapfile in your file system is the following:

# Preallocate a 10G file

sudo fallocate -l 10G /swapfile

# Clean its contents

sudo dd if=/dev/zero of=/swapfile bs=1M count=10240

# Make it secure

sudo chmod 600 /swapfile

# Set it up as a swapfile

sudo mkswap /swapfile

# Activate it now

sudo swapon /swapfile

# Auto-activate it after reboot

echo "/swapfile swap swap defaults 0 0" | sudo tee -a /etc/fstab

swapon --show displays the current status of your swap devices and files.
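If you would rather have a single number to keep an eye on, the sketch below works out the percentage of swap in use from the SwapTotal and SwapFree lines of /proc/meminfo. The helper name swap_used_percent is made up for this example.

```shell
# Read /proc/meminfo-style input on stdin and print the share of swap
# currently in use as a whole percentage (0 when no swap is configured)
swap_used_percent() {
  awk '/^SwapTotal:/ { total = $2 }
       /^SwapFree:/  { free = $2 }
       END { if (total > 0) printf "%d\n", (total - free) * 100 / total; else print 0 }'
}

# Only read the file on systems that provide it
if [ -r /proc/meminfo ]; then
  echo "swap in use: $(swap_used_percent < /proc/meminfo)%"
fi
```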

Swappiness, etc

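One last thing you should add is the following settings. vm.swappiness is not a RAM usage percentage: it tells the kernel how willing it should be to swap out application memory rather than drop file caches (the default is 60). Raising it to 80 makes Linux push idle pages to swap, and therefore into zram, earlier, for a decent balance between usable and... usable:

# Make Linux more willing to swap out idle application memory

echo "vm.swappiness=80" | sudo tee -a /etc/sysctl.conf

# Below the default of 100 the kernel prefers to keep its directory and inode caches; pick a value above 100 if you want to bite into FS caches instead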

echo "vm.vfs_cache_pressure=50" | sudo tee -a /etc/sysctl.conf
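Settings appended to /etc/sysctl.conf are only read at boot unless you reload them. The sketch below shows how you might reload and then check the live values; show_vm_setting is a hypothetical helper, and the reload line is left commented out because it needs root.

```shell
# Reload /etc/sysctl.conf without rebooting (uncomment to run; needs root)
# sudo sysctl -p

# Print the live value of a vm.* setting, or "unavailable" where /proc lacks it
show_vm_setting() {
  setting_file="/proc/sys/vm/$1"
  if [ -r "$setting_file" ]; then
    printf 'vm.%s=%s\n' "$1" "$(cat "$setting_file")"
  else
    printf 'vm.%s=unavailable\n' "$1"
  fi
}

show_vm_setting swappiness
show_vm_setting vfs_cache_pressure
```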

Results

Don't expect a holy grail here. Memory leaks are memory leaks. As some applications will keep allocating memory forever, at some point you'll hit a hard limit anyway, just slowly enough to buy you time to get some work done.

Philosophical considerations

Now that you have a "solution" to the "problem", is it ethical to keep using and supporting a development ecosystem that's devastated by bloat and memory leaks and burns unacceptable amounts of energy for basic webapps, let alone complex ones, even if moving away from it may take a little extra effort?

Leave a comment below to share your thoughts.
