Understanding Load Balancers: Concept, Use Cases & Practical Application

In today's digital landscape, where applications and services must handle millions of requests per second, ensuring availability, reliability, and efficient traffic distribution is crucial. This is where Load Balancers come into play. A Load Balancer acts as an intelligent traffic manager, distributing network or application traffic across multiple servers to optimize resource utilization, maximize throughput, and minimize response time. This article explores the concept, types, functionality, use cases, and a step-by-step approach to setting up a load balancer.

What is a Load Balancer?

A Load Balancer is a networking solution that distributes incoming traffic across multiple servers to ensure high availability, scalability, and reliability of applications. It acts as an intermediary between users and backend servers, preventing any single server from becoming overwhelmed. Load Balancers operate at different layers of the OSI model, typically at:

- Layer 4 (Transport Layer): Directs traffic based on IP and TCP/UDP information.

- Layer 7 (Application Layer): Routes traffic based on application-level data (HTTP headers, cookies, etc.).

Let's break down the networking side first.

- Role in Network Traffic Management: A load balancer sits between clients and backend servers, acting as an intermediary that routes network traffic efficiently. It ensures that no single server is overwhelmed, improving network performance and reliability.

- Works at Network Layers (OSI Model): As mentioned above, load balancers operate at Layer 4 and Layer 7.

- Uses Network Protocols: Load balancers handle requests using protocols such as TCP, UDP, HTTP, and HTTPS. These protocols are fundamental to how devices communicate over a network.

- Integral Part of Network Infrastructure: Load balancers are deployed within a network architecture to ensure high availability, redundancy, and security. They interact with firewalls, DNS, and backend servers, making them a crucial part of modern network infrastructure.

A load balancer is responsible for directing and managing network traffic at different layers of the OSI model. In AWS, Elastic Load Balancing (ELB) plays this role within a Virtual Private Cloud (VPC) setup, ensuring scalable and fault-tolerant network operations.

How Load Balancers Work

When a client makes a request, the Load Balancer:

- Receives the request and determines the best server to handle it, acting as an intermediary between users and backend servers.

- Distributes the request based on a configured algorithm:

  - Round Robin: Assigns requests sequentially to servers.

  - Least Connections: Directs traffic to the server with the fewest active connections.

  - IP Hashing: Routes users to the same backend based on their IP.

- Performs Health Checks: Continuously monitors the health of servers and reroutes traffic if one fails.

- Implements SSL/TLS Termination: Decrypts HTTPS requests before forwarding them to backend servers.

- Uses Sticky Sessions (Session Persistence): Ensures a user remains connected to the same server when needed.

- Caches Content (some load balancers only): Some load balancers and reverse proxies can cache responses to improve response time and reduce backend load. The AWS ALB does not cache content itself; on AWS, caching is usually handled by placing Amazon CloudFront in front of the load balancer.

For instance, global e-commerce marketplaces such as Temu and Jumia use load balancers to distribute millions of requests across multiple backend services. During peak shopping seasons, their infrastructure scales dynamically to keep response times fast for users worldwide.
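The three routing algorithms listed above can be sketched in a few lines of shell. This is a toy illustration, not how ELB is implemented: the backend names, the function names, and the use of `cksum` as the hash are all assumptions made for the example.

```shell
#!/bin/sh
# Toy versions of three routing algorithms.
# The backend names below are hypothetical.
BACKENDS="server-1 server-2 server-3"

# Round robin: request number N (0, 1, 2, ...) goes to backend N mod count.
round_robin() {
    n=$1
    set -- $BACKENDS            # positional parameters now hold the backends
    shift $(( n % $# ))
    echo "$1"
}

# Least connections: pick the backend with the fewest active connections.
# Arguments are name:count pairs, e.g. "server-1:12". Ties go to the first.
least_connections() {
    best_name=""
    best_count=""
    for pair in "$@"; do
        name=${pair%%:*}
        count=${pair##*:}
        if [ -z "$best_count" ] || [ "$count" -lt "$best_count" ]; then
            best_name=$name
            best_count=$count
        fi
    done
    echo "$best_name"
}

# IP hashing: hash the client IP so the same client always maps to the
# same backend (cksum is used here only because it is widely available).
ip_hash() {
    hash=$(printf '%s' "$1" | cksum | cut -d ' ' -f 1)
    set -- $BACKENDS
    shift $(( hash % $# ))
    echo "$1"
}

# Four consecutive requests wrap around the three backends:
# prints server-1, server-2, server-3, server-1 (one per line).
for i in 0 1 2 3; do
    round_robin "$i"
done
```

For example, `least_connections server-1:12 server-2:3 server-3:7` prints `server-2`, and calling `ip_hash` twice with the same address always prints the same backend.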

Why Use a Load Balancer?

- High Availability & Fault Tolerance – Automatically redirects traffic when a server becomes unhealthy: if one server fails, traffic is rerouted to the healthy servers.

- Improved Performance – Balances the workload across multiple instances, reducing response time.

- Scalability – Servers can be added to handle traffic spikes or removed to reduce costs during low demand, and the load balancer spreads traffic across whatever instances are currently registered.

- Security Enhancement – Acts as a protective layer, preventing direct access to backend servers.

Use Cases of AWS Load Balancers

AWS Load Balancers are used in various scenarios, including:

- Web Applications: Distributes HTTP/HTTPS traffic among multiple EC2 instances.

- Database Load Balancing: Can spread read connections across database replicas over TCP (typically with an NLB).

- Auto Scaling Integration: Works with Auto Scaling groups, which adjust the number of backend instances based on demand and register them with the load balancer.

- Microservices and Containers: Routes traffic to containers running on Amazon ECS or Kubernetes.

- Security and Compliance: Keeps backend server IPs from being exposed directly and, combined with services such as AWS Shield and WAF, helps mitigate DDoS attacks.

Types of AWS Load Balancers

This article covers three types of AWS Load Balancers:

- Application Load Balancer (ALB) – Operates at OSI layer 7, the application layer. Best for HTTP/HTTPS traffic; it suits application architectures and supports advanced routing features.

- Network Load Balancer (NLB) – Operates at OSI layer 4, the transport layer. Best for handling millions of requests per second over low-latency TCP/UDP connections; also used for TLS offloading, UDP, and static IP addresses.

- Classic Load Balancer (CLB) – Operates at OSI layers 4 and 7, the transport and application layers. It is the previous generation and is used only if upgrading to the other load balancers is not feasible.

Load Balancer Components

- Listener: Defines how the Load Balancer accepts traffic (e.g., HTTP, HTTPS, TCP).

- Target Group: A collection of registered backend instances.

- Targets: EC2 instances, Lambda functions, IP addresses, or containers.

- Rules: Control request routing using host-based or path-based rules (for ALB).

Step-by-Step Guide to Setting Up an AWS Load Balancer

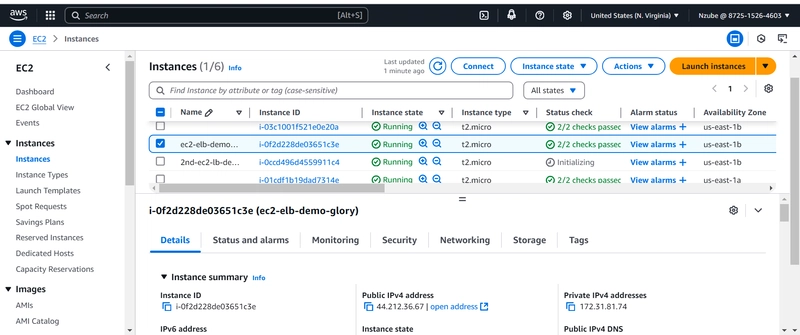

Step 1: Launch Two EC2 Instances (the steps and configuration from my console are shown in the screenshots)

- Launch two EC2 instances with Amazon Linux or Ubuntu.

- Assign them to the same security group.

- Attach a User Data script to install a web server automatically.

Example User Data for Instance 1:

#!/bin/bash

sudo yum update -y

sudo yum install -y httpd

sudo systemctl start httpd

sudo systemctl enable httpd

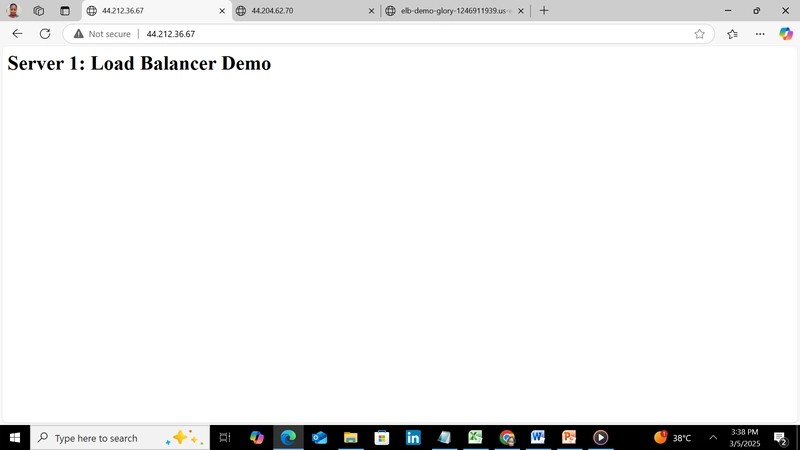

echo "Server 1: Load Balancer Demo

" > /var/www/html/index.html

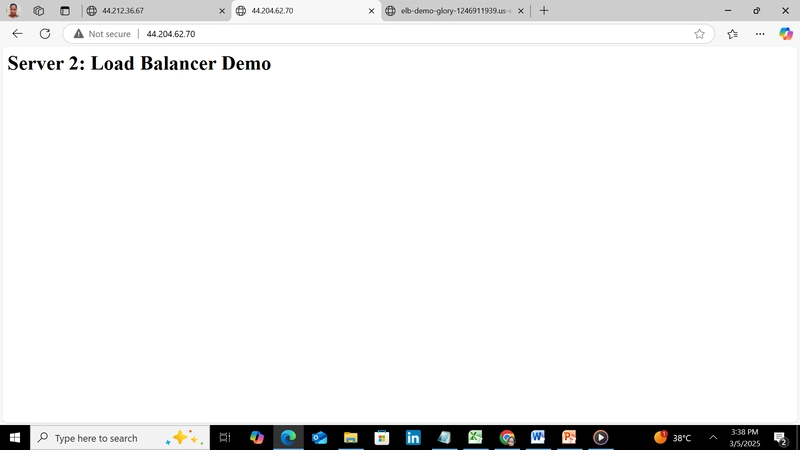

Example User Data for Instance 2:

#!/bin/bash

sudo yum update -y

sudo yum install -y httpd

sudo systemctl start httpd

sudo systemctl enable httpd

echo "Server 2: Load Balancer Demo" > /var/www/html/index.html

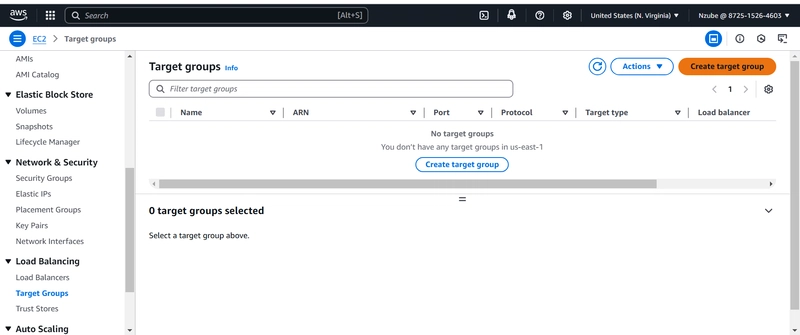

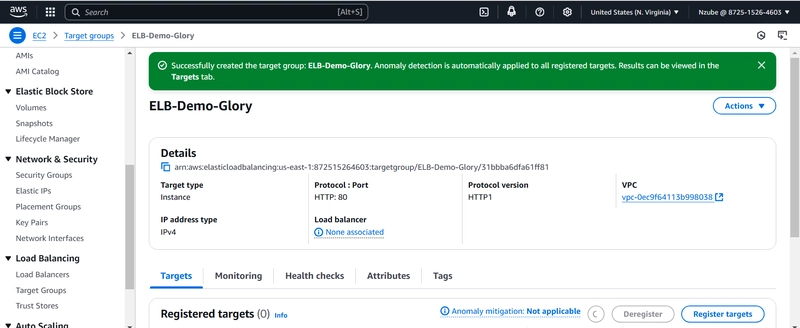

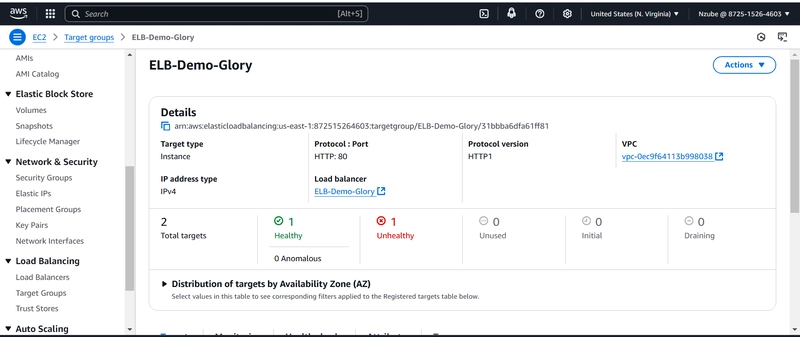

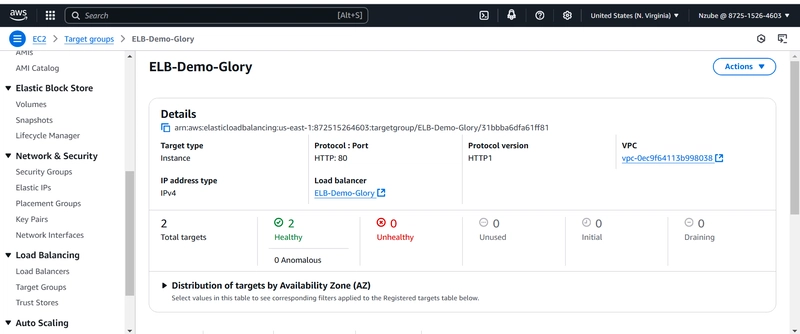

Step 2: Configure Target Group

- Go to Target Groups and create a new one.

- Register the previously launched EC2 instances.

- Define a Health Check path (e.g., /index.html).

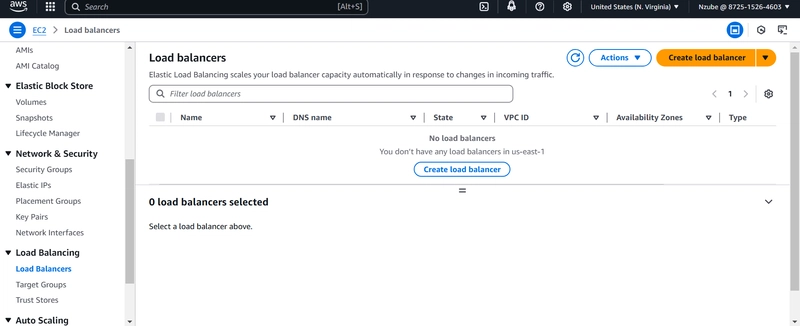

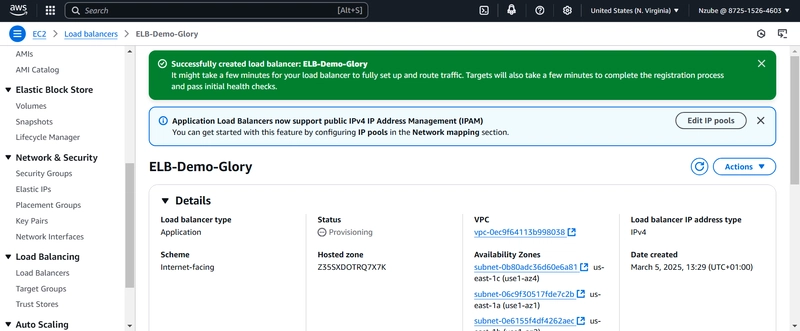

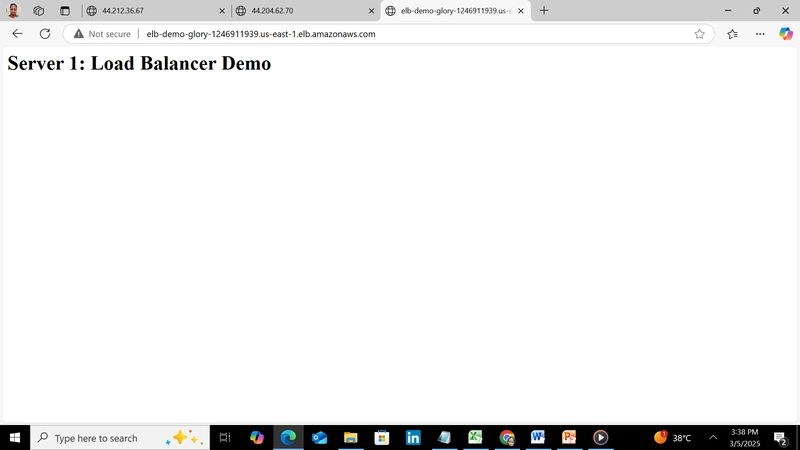
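The same step can also be done from the command line instead of the console. The sketch below wraps two AWS CLI commands (`aws elbv2 create-target-group` and `aws elbv2 register-targets`) in helper functions; the function names, the `AWS_CMD` dry-run switch, and every name or ID you pass in are assumptions for illustration, not part of the original walkthrough.

```shell
#!/bin/sh
# Sketch of Step 2 with the AWS CLI.
# Set AWS_CMD="echo aws" for a dry run that prints each command
# instead of calling AWS (AWS_CMD is a convention of this example).
AWS_CMD="${AWS_CMD:-aws}"

# create_target_group NAME VPC_ID
# Creates an HTTP:80 target group for EC2 instances and uses
# /index.html as the health check path, as in the console steps.
create_target_group() {
    $AWS_CMD elbv2 create-target-group \
        --name "$1" \
        --protocol HTTP --port 80 \
        --vpc-id "$2" \
        --target-type instance \
        --health-check-path /index.html
}

# register_instances TARGET_GROUP_ARN INSTANCE_ID...
# Registers one or more EC2 instances with the target group.
register_instances() {
    tg_arn=$1
    shift
    targets=""
    for id in "$@"; do
        targets="$targets Id=$id"
    done
    # $targets is left unquoted on purpose so each Id=... is its own argument
    $AWS_CMD elbv2 register-targets --target-group-arn "$tg_arn" --targets $targets
}
```

Calling `create_target_group` prints JSON that includes the new target group's ARN, which is the value `register_instances` expects as its first argument.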

Step 3: Create an AWS Load Balancer

- Go to AWS Management Console > EC2 > Load Balancers.

- Choose Application Load Balancer (ALB).

- Set a Listener (HTTP/HTTPS).

- Select at least two availability zones for high availability.

Step 4: Attach the Target Group to the Load Balancer

- Navigate to Listeners in your Load Balancer settings.

- Forward traffic to the Target Group created earlier.

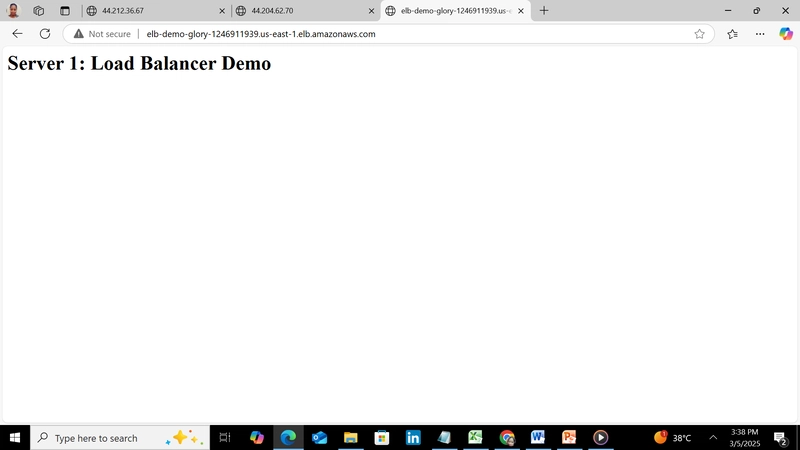

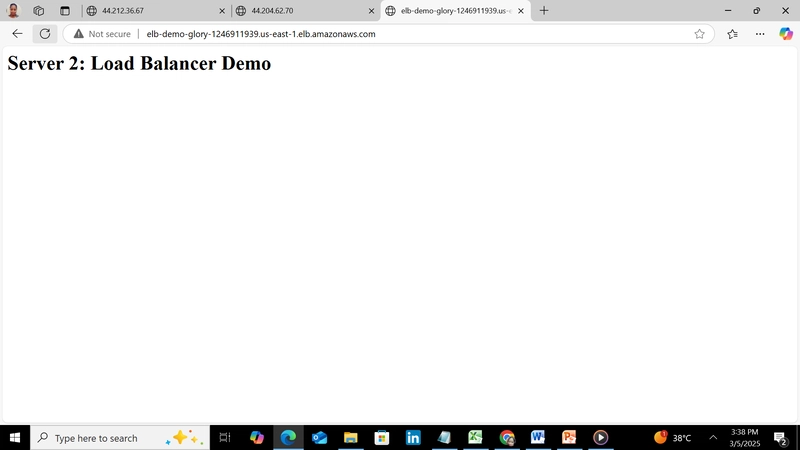

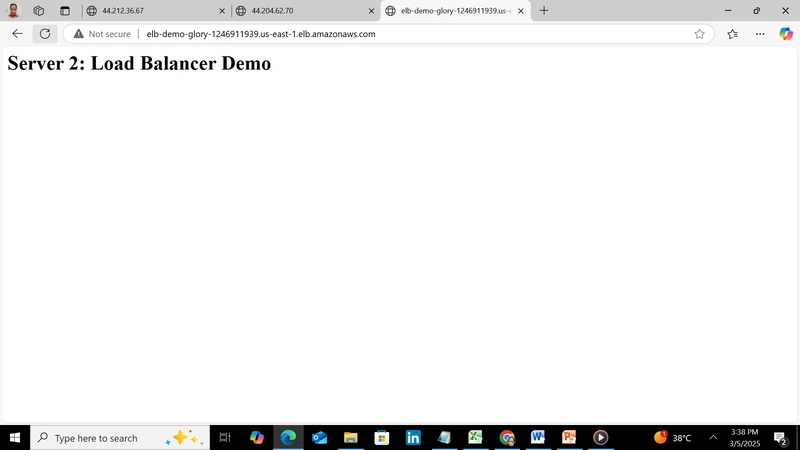

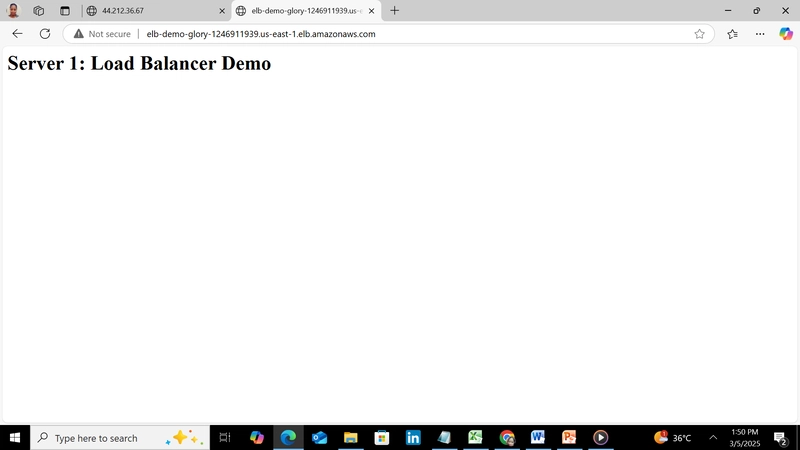
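Steps 3 and 4 have CLI equivalents as well. This is a minimal sketch assuming an internet-facing ALB with a plain HTTP listener on port 80; the helper names and the `AWS_CMD` dry-run switch are made up for the example, and the subnet, security group, and ARN values are whatever your own account provides.

```shell
#!/bin/sh
# Sketch of Steps 3 and 4 with the AWS CLI.
# Set AWS_CMD="echo aws" to print the commands instead of running them.
AWS_CMD="${AWS_CMD:-aws}"

# create_alb NAME SECURITY_GROUP_ID SUBNET_ID SUBNET_ID [SUBNET_ID...]
# Refuses to run with fewer than two subnets, mirroring the
# "at least two availability zones" requirement from Step 3.
create_alb() {
    name=$1
    sg=$2
    shift 2
    if [ "$#" -lt 2 ]; then
        echo "create_alb: pass at least two subnet IDs (in different AZs)" >&2
        return 1
    fi
    $AWS_CMD elbv2 create-load-balancer \
        --name "$name" \
        --type application \
        --scheme internet-facing \
        --security-groups "$sg" \
        --subnets "$@"
}

# forward_http LOAD_BALANCER_ARN TARGET_GROUP_ARN
# Adds an HTTP:80 listener whose default action forwards to the target group.
forward_http() {
    $AWS_CMD elbv2 create-listener \
        --load-balancer-arn "$1" \
        --protocol HTTP --port 80 \
        --default-actions "Type=forward,TargetGroupArn=$2"
}
```

The check only counts subnets; it cannot tell whether they are actually in different availability zones, so that still has to be verified by hand.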

Step 5: Test the Load Balancer

- Review the Load Balancer settings and create it.

- Copy the DNS name of the Load Balancer.

- Open a browser and visit http://your-load-balancer-dns-name, and refresh multiple times to see different responses from the two instances (demonstrating traffic distribution).

- Traffic should alternate between Server 1 and Server 2. Mine read Server 1: Load Balancer Demo, Server 2: Load Balancer Demo
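Instead of refreshing the browser by hand, the same check can be scripted. The sketch below fetches the page several times with `curl` and counts how often each response body appears; the function names `hit` and `tally` are made up for this example, and the URL is the placeholder from the step above.

```shell
#!/bin/sh
# hit URL N: request URL N times and print each response body.
hit() {
    url=$1
    n=$2
    i=0
    while [ "$i" -lt "$n" ]; do
        curl -s "$url"
        i=$((i + 1))
    done
}

# tally: read response bodies (one per line) on stdin and print
# "COUNT BODY" for each distinct body.
tally() {
    sort | uniq -c | sed 's/^ *//'
}

# Usage (replace the placeholder with your Load Balancer's DNS name):
#   hit "http://your-load-balancer-dns-name/" 10 | tally
```

With both targets healthy, the tally should list both "Server 1: Load Balancer Demo" and "Server 2: Load Balancer Demo" with roughly equal counts.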

NB: After creating your instances, copy each instance's public IP address and open it in a browser to confirm the web server is running before you test through the Load Balancer.

Step 6: Monitor and Optimize

- Use AWS CloudWatch to monitor performance metrics.

- Enable Auto Scaling to dynamically adjust instance count.

Health Check & Key Considerations When Creating a Load Balancer in AWS

Setting up a Load Balancer (LB) in AWS requires careful configuration to ensure proper traffic distribution, high availability, and fault tolerance. If misconfigured, issues such as unhealthy targets, uneven traffic distribution, or instances not registering can occur. Below are crucial aspects to consider:

1. Selecting the Right Load Balancer Type

AWS provides different types of Load Balancers:

- Application Load Balancer (ALB) – Best for HTTP/HTTPS traffic, routes based on URLs, headers, or cookies.

- Network Load Balancer (NLB) – Best for high-performance TCP/UDP traffic, handles millions of requests per second.

- Classic Load Balancer (CLB) – Older generation, supports both HTTP and TCP but lacks advanced features.

Use case example: If you are hosting a website, use an ALB. If handling financial transactions or gaming servers, use an NLB for low latency.

2. Configuring the Network Mapping

Your Load Balancer and EC2 instances must be properly mapped to ensure smooth communication:

- VPC & Subnet Selection: The Load Balancer must be deployed in the same VPC as your EC2 instances.

- Availability Zones (AZs): Distribute instances across multiple AZs to ensure high availability.

Subnet Requirements:

- ALB requires subnets in at least two different AZs (public subnets for an internet-facing load balancer).

- NLB can work with one or more subnets but benefits from multiple AZs.

If the Load Balancer is not enabled in the AZ where an instance runs, that instance will not receive traffic properly.

3. Security Groups and Firewalls

Misconfigured security groups can cause instances to appear unhealthy or fail to receive traffic.

For example:

Load Balancer Security Group:

- Allow inbound traffic on port 80 (HTTP) or port 443 (HTTPS).

- Allow requests from anywhere (0.0.0.0/0) or a specific trusted IP range.

- Allow outbound traffic to the instances on the application and health check ports.

Instance Security Group:

- Allow inbound traffic on the application port (e.g., 80, 443, 8080) from the Load Balancer's security group (not 0.0.0.0/0).

- No matching outbound rule is needed for replies: security groups are stateful, so responses to the Load Balancer are allowed automatically.

Incorrect security group rules can cause "unhealthy target" errors, meaning your Load Balancer can't reach your instances.

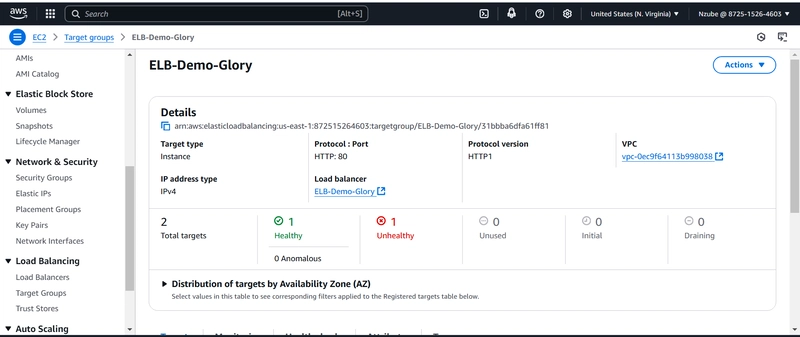

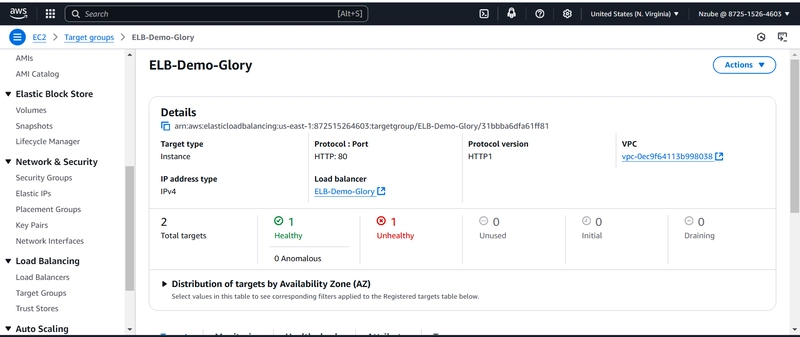
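The instance-side rule described above (allow the application port only from the Load Balancer's security group) can be expressed with `aws ec2 authorize-security-group-ingress` and its `--source-group` option. The helper name, the `AWS_CMD` dry-run switch, and the default of port 80 are assumptions for this sketch.

```shell
#!/bin/sh
# Set AWS_CMD="echo aws" to print the command instead of running it.
AWS_CMD="${AWS_CMD:-aws}"

# allow_from_lb INSTANCE_SG_ID LB_SG_ID [PORT]
# Opens PORT (default 80) on the instance security group, but only for
# traffic whose source is the load balancer's security group.
allow_from_lb() {
    $AWS_CMD ec2 authorize-security-group-ingress \
        --group-id "$1" \
        --protocol tcp \
        --port "${3:-80}" \
        --source-group "$2"
}
```

Referencing the security group rather than an IP range keeps the rule valid even when the Load Balancer's IP addresses change.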

4. Target Group & Health Checks

The target group ensures that the Load Balancer correctly forwards traffic to healthy instances.

From the image below, you can see that my target group initially showed one unhealthy instance out of two total targets. After troubleshooting, I discovered the issue was due to a Subnet and Availability Zone mismatch. I reconfigured the settings, ensuring proper alignment between the load balancer, subnets, and availability zones, which resolved the issue and restored proper functionality.

- Target Type: Choose "Instance" if routing to EC2 or "IP" for specific IP addresses.

- Health Check Configuration:

  - Port & Protocol: This should match your instance's application (e.g., HTTP:80).

  - Path: Default is /, but if your app runs elsewhere (/status, /health), update it.

  - Thresholds: Set appropriate intervals and success thresholds to determine health status.

If the health check fails, your instance won’t receive traffic! You may need to troubleshoot by checking instance logs or security settings.
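To make the role of thresholds concrete, here is a toy simulation of how consecutive check results flip a target between healthy and unhealthy. It is a simplified model, not the exact ELB state machine: the function name is invented, the target is assumed to start out healthy, and the threshold values in the usage note are example numbers rather than AWS defaults.

```shell
#!/bin/sh
# health_state HEALTHY_THRESHOLD UNHEALTHY_THRESHOLD RESULT...
# Each RESULT is "ok" or "fail", in the order the checks ran.
# A target becomes unhealthy after UNHEALTHY_THRESHOLD consecutive
# failures and healthy again after HEALTHY_THRESHOLD consecutive successes.
health_state() {
    healthy_after=$1
    unhealthy_after=$2
    shift 2
    state=healthy            # simplifying assumption: start out healthy
    ok_streak=0
    fail_streak=0
    for result in "$@"; do
        if [ "$result" = ok ]; then
            ok_streak=$((ok_streak + 1))
            fail_streak=0
            if [ "$ok_streak" -ge "$healthy_after" ]; then
                state=healthy
            fi
        else
            fail_streak=$((fail_streak + 1))
            ok_streak=0
            if [ "$fail_streak" -ge "$unhealthy_after" ]; then
                state=unhealthy
            fi
        fi
    done
    echo "$state"
}
```

With thresholds of 3 successes and 2 failures, `health_state 3 2 ok fail fail` prints `unhealthy`, while `health_state 3 2 ok fail ok fail` prints `healthy` because the failures are never consecutive.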

5. DNS & Domain Name Mapping

AWS Load Balancers do not have static IPs (except NLB), so you should use:

- AWS Route 53: Map your domain to the Load Balancer's DNS name.

- CNAME Record: If using a custom domain, configure a CNAME to point to the Load Balancer.

If only one instance receives traffic, check whether your DNS resolution points to the correct Load Balancer and if your health checks are correctly configured.

6. Logging & Monitoring

Enable monitoring tools to detect and fix issues early:

- AWS CloudWatch: Monitor request counts, latency, and unhealthy hosts.

- Access Logs: Store detailed logs of incoming requests in Amazon S3.

- AWS X-Ray: Helps trace request flow and detect delays.

If your Load Balancer is unresponsive, check CloudWatch metrics to identify traffic spikes, high latency, or instance failures.

7. Auto Scaling Considerations

To handle varying traffic loads, integrate your Load Balancer with Auto Scaling Groups (ASG):

- The ASG automatically launches new EC2 instances when traffic increases.

- Make sure new instances register with the target group upon creation.

Without auto-scaling, your instances may overload during traffic spikes, causing performance issues.
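One way to make sure new instances register automatically is to attach the target group to the Auto Scaling group, which the AWS CLI exposes as `aws autoscaling attach-load-balancer-target-groups`. The helper name and the `AWS_CMD` dry-run switch below are assumptions for this sketch; the group name and ARN are your own.

```shell
#!/bin/sh
# Set AWS_CMD="echo aws" to print the command instead of running it.
AWS_CMD="${AWS_CMD:-aws}"

# attach_target_group ASG_NAME TARGET_GROUP_ARN
# After this, instances launched by the Auto Scaling group are
# registered with the target group without manual steps.
attach_target_group() {
    $AWS_CMD autoscaling attach-load-balancer-target-groups \
        --auto-scaling-group-name "$1" \
        --target-group-arns "$2"
}
```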

Practical Example: Troubleshooting Unhealthy Instances

Problem: Only one of the two servers is receiving traffic because the other target is marked "unhealthy".

For instance, only one of my instances was showing as healthy, because of a subnet and Availability Zone mismatch.

Steps to Fix:

- Check Target Group Health:

  - Go to EC2 Dashboard → Target Groups → Targets and check the health status.

  - If an instance is marked unhealthy, hover over it to see the reason.

- Verify Health Check Settings:

  - Ensure the health check path (e.g., /health) returns a 200 OK response.

  - Use curl or a browser to test http://INSTANCE-IP/health.

- Check Security Groups & Network ACLs:

  - Make sure instances allow traffic from the Load Balancer’s security group.

  - Verify inbound rules allow traffic on ports 80/443.

- Review Subnet & AZ Mappings:

  - Ensure the Load Balancer is enabled in every AZ where your instances run.

  - If your instance is in us-east-1a, ensure the LB also has a subnet in us-east-1a.

- Check Instance Application Logs
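The first two checks can also be run from a terminal. This sketch uses `aws elbv2 describe-target-health` to list each target's state and reason, and `curl` to print only the HTTP status code of the health check path; the helper names and the `AWS_CMD` dry-run switch are assumptions for the example.

```shell
#!/bin/sh
# Set AWS_CMD="echo aws" to print the command instead of running it.
AWS_CMD="${AWS_CMD:-aws}"

# show_target_health TARGET_GROUP_ARN
# Prints one line per target: instance ID, health state, and the
# reason code AWS reports for an unhealthy target.
show_target_health() {
    $AWS_CMD elbv2 describe-target-health \
        --target-group-arn "$1" \
        --query 'TargetHealthDescriptions[].[Target.Id,TargetHealth.State,TargetHealth.Reason]' \
        --output text
}

# http_status URL
# Prints only the HTTP status code, e.g. 200 when the health check
# path responds correctly. Gives up after 5 seconds.
http_status() {
    curl -s -o /dev/null -w '%{http_code}' --max-time 5 "$1"
}

# Usage (INSTANCE-IP is the placeholder from the steps above):
#   http_status "http://INSTANCE-IP/health"
```

A status other than 200 from `http_status` points at the application or the health check path, while a timeout usually points at security groups or network settings.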

After troubleshooting, refresh the AWS Console and verify both instances are now receiving traffic.

Both instances are now properly registered and passed health checks. The load balancer should now be distributing traffic evenly between the two targets.


Setting up an AWS Load Balancer correctly requires configuring subnets, security groups, health checks, DNS settings, and target groups. If any of these are misconfigured, issues like unhealthy targets, partial traffic distribution, or inaccessible servers may occur. By carefully aligning your Load Balancer with your EC2 instances, you ensure high availability, fault tolerance, and efficient load distribution for your application.

Benefits of Using AWS Load Balancer for E-commerce Marketplace

- Scalability – Automatically scales to handle high traffic during shopping events.

- High Availability – Minimizes downtime by rerouting traffic away from unhealthy instances.

- Security – Integrates with AWS Shield and WAF to help mitigate DDoS attacks.

- Performance Optimization – Reduces latency by spreading requests across healthy servers so that no single server is overloaded.

Conclusion

Load Balancers are a crucial component in modern IT infrastructures. Whether you are running a simple web application or a large-scale cloud deployment, implementing a proper load balancing strategy is essential for maintaining optimal performance, security, availability, and scalability. Large marketplaces like Temu rely on load balancing to distribute massive traffic efficiently and keep the shopping experience smooth. By following this practical setup, you can implement load balancing in your AWS environment.

Criticism and observations are highly welcome! I am still learning and practicing, so please feel free to share your suggestions and feedback on anything you noticed right or wrong in this article to help me improve. Thank you!
