The Synthetic Data Paradox: Are We Surrendering to Automatic Thinking?

Look around. Everything we consume today—from news to technical knowledge—is mediated by artificial intelligence. We don’t stop to think about it, but every Google query, every line of code Copilot suggests, every article we read on a digital platform has something in common: AI has played a role in the process. And here’s the unsettling question: Are we evolving with AI, or are we simply letting it think for us?

We live in a world where immediacy is the new norm. Learning any discipline used to require effort—researching, making mistakes, questioning. Now, a simple ChatGPT query delivers a structured answer in seconds. But are we truly understanding what we consume, or are we just accepting it without question?

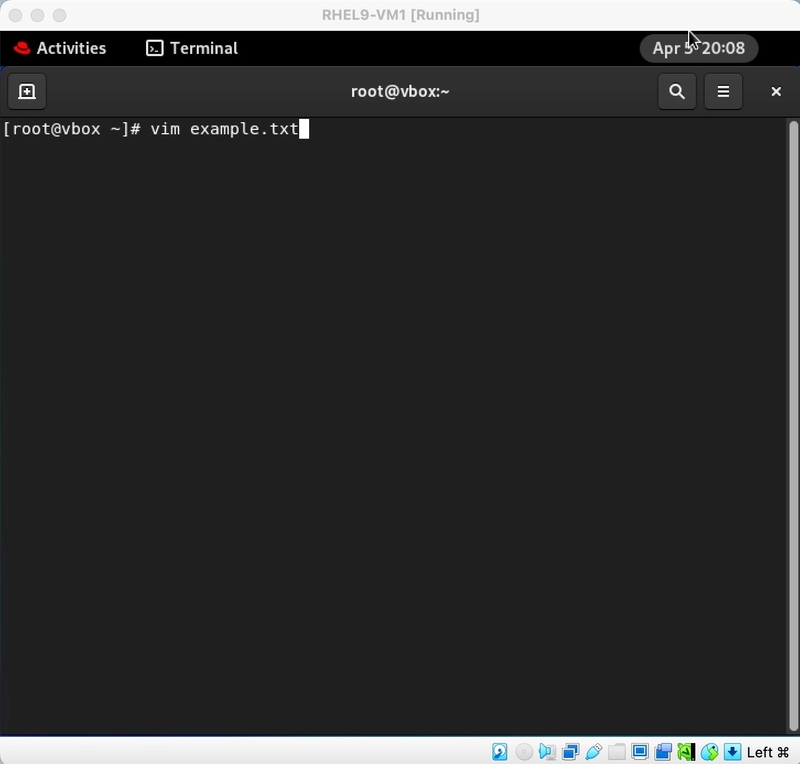

This isn’t a theoretical problem; it’s happening right now. Developers copy AI-generated code without verifying it, trusting blindly that "it must be right." But what if it’s not? Designers use AI tools to generate interfaces without asking if they’re actually usable. Journalists publish AI-generated articles without checking if the data is accurate.
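To make the developer case concrete, here is a minimal sketch of what "verifying" a suggestion can look like. Everything in it is hypothetical: `suggested_median` stands in for a plausible-looking AI suggestion, `reviewed_median` for the version a developer arrives at after reading it critically, and `failing_cases` is an illustrative helper, not part of any real tool.

```python
def suggested_median(values):
    # Hypothetical AI suggestion: reads fine at a glance, but for an
    # even number of values it returns the upper middle element
    # instead of the average of the two middle elements.
    ordered = sorted(values)
    return ordered[len(ordered) // 2]


def reviewed_median(values):
    # Version written after actually reviewing the suggestion.
    ordered = sorted(values)
    mid = len(ordered) // 2
    if len(ordered) % 2:
        return ordered[mid]
    return (ordered[mid - 1] + ordered[mid]) / 2


def failing_cases(func, cases):
    # Return every (input, expected) pair for which func gives a different result.
    return [(args, expected) for args, expected in cases if func(args) != expected]


# A handful of cases chosen by a human, including the even-length edge case.
CASES = [([3, 1, 2], 2), ([4, 1, 3, 2], 2.5), ([7], 7)]

if __name__ == "__main__":
    print("suggested:", failing_cases(suggested_median, CASES))
    print("reviewed: ", failing_cases(reviewed_median, CASES))
```

The suggested version passes two of the three checks, which is exactly why blind trust is tempting: the bug only shows up when someone bothers to ask about the case the code did not obviously handle.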

Journalism is a prime example of this issue. According to Reuters, 30% of digital news is already AI-generated. Bloomberg and The Washington Post use automated systems to draft content. But if AI starts feeding on its own content without human intervention, what happens to diversity of thought? How long until we stop questioning sources simply because everything comes in the same tone, the same words, the same invisible bias?

This is where synthetic data comes in. Gartner predicted that by 2024, 60% of the data used in AI projects would be synthetically generated. In practice, this means AI is increasingly learning from information that AI itself produced. It sounds efficient, but it’s dangerously cyclical. An Oxford study found that AI models lose 38% accuracy after just three training cycles on synthetic data. In other words, AI could be reinforcing its own mistakes without anyone noticing.
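The cyclical risk can be illustrated with a toy simulation. This is only a sketch under strong assumptions, not a reproduction of the Oxford study or its figures: the "model" is just a normal distribution fitted to a small sample, and each new generation is trained exclusively on samples drawn from the previous fit. The function name and its parameters are invented for illustration.

```python
import random
import statistics


def simulate_recursive_training(generations=200, sample_size=10, seed=0):
    """Toy model: each generation is 'trained' only on the previous one's output.

    Returns the fitted standard deviation at every generation, starting
    with the fit to the original (real) data.
    """
    rng = random.Random(seed)

    # Generation 0: "real" data drawn from a standard normal distribution.
    data = [rng.gauss(0.0, 1.0) for _ in range(sample_size)]

    spreads = []
    for _ in range(generations + 1):
        # "Train" the model: estimate mean and spread from the current data.
        mu = statistics.fmean(data)
        sigma = statistics.pstdev(data)
        spreads.append(sigma)

        # The next generation sees only synthetic samples from this fit.
        data = [rng.gauss(mu, sigma) for _ in range(sample_size)]

    return spreads


if __name__ == "__main__":
    spreads = simulate_recursive_training()
    for generation in (0, 10, 50, 100, 200):
        print(f"generation {generation:3d}: fitted spread = {spreads[generation]:.6f}")
```

In this setup the fitted spread tends to shrink generation after generation, because every refit loses a little of the original variety and nothing from outside the loop ever restores it. Real models are far more complex, but the mechanism is the same one the paragraph above describes: errors and blind spots get fed back in as if they were ground truth.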

So let’s go back to the original question, in sharper terms: Are we really training AI, or are we just handing over control?

- When a developer uses Copilot to write code, do they review and understand it, or just copy and run it without hesitation?

- When a company uses AI to filter résumés, does anyone check if the model is unfairly rejecting valuable candidates due to invisible biases?

- When a content creator relies on AI to write articles, do they verify the information, or do they publish it blindly, assuming "AI knows what it’s doing"?

The real danger isn’t that AI will replace us—it’s that we’ll get used to questioning nothing. AI has no critical thinking; it only imitates patterns and repeats what it finds. If we stop asking questions, if we accept any answer without verifying, understanding, or challenging it, then AI won’t need to replace us. We will have made ourselves irrelevant.

The solution isn’t to reject AI but to use it responsibly. It’s not enough to ask for answers—we must understand, challenge, and refine them. It’s not enough to accept AI’s decisions—we must analyze, confront, and improve them. Critical thinking is the only real advantage we have over machines. If we lose it, then yes, AI will have won.
