The 3 Best Python Frameworks To Build UIs for AI Apps

Python offers various packages and frameworks for building interactive, production-ready AI app interfaces, including chat UIs. This article compares three of the most popular: Gradio, Streamlit, and Chainlit.

AI Chat UIs: Overview

Chat UIs for AI applications provide the front-end interface for interacting with AI assistants, local and cloud-based large language models (LLMs), and agentic workflows. As in popular AI apps such as Grok for iOS, Le Chat, and ChatGPT on the web and mobile, these UIs let users send and receive text, images, audio, or video (multimodal input and output).

Developers can also use chat SDKs and ready-made AI UI components from Stream to assemble interactive chatbots and assistants. One advantage of this approach is that the components are available for popular platforms such as iOS, Android, Flutter, React, React Native, and Node.js, and can be implemented with minimal effort by following the available code samples and demo apps.

How To Build AI Apps in Python

Developers can use several model provider APIs when building AI apps. Popular options include OpenAI, Anthropic, Mistral, xAI (Grok), and DeepSeek. Developers typically build AI solutions and agents on top of these APIs with frameworks such as LlamaIndex, LangChain, PydanticAI, Embedchain, LangGraph, and CrewAI. The command line works well for testing and debugging these applications.

However, you need an intuitive interface to interact with the underlying AI functionality, perform specific tasks, test the app with real users, or share it with the world for feedback. Additionally, a Python AI app may need to connect to external services to access knowledge bases, call tools that execute tasks, monitor performance, and so on.

Why Use Python Libraries For AI Chat UIs?

Creating UIs for an AI app involves many considerations, such as text generation style, response streaming, output structuring, and how all the user-AI interactions combine into a coherent experience. Depending on the platform you choose for your AI app's front end, you get numerous built-in features and AI chat components out of the box.

- Output streaming: Implementing streaming of an LLM's response from scratch is complex. Although most model APIs can stream tokens, the UI still has to render them incrementally; most UI-building platforms make this easy to enable unless you prefer a custom streaming implementation.

- Chatbot animations: Some of these libraries give you chatbot response animations, such as typing and "thinking" indicators, for free.

- Feedback: They make it quick to collect user feedback that you can use to improve the project.

- Monitor and visualize performance: Chainlit, for example, integrates with the playground of Literal AI, an AI observability service, to observe the performance of the underlying LLMs, manage prompts, and view analytics.

- Easy to test: These libraries make it easy to test AI apps and demo them to others.
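Streaming in these libraries usually comes down to a Python generator. Gradio, for example, re-renders the bot message each time a generator handler yields, so the handler yields the growing partial reply, while Streamlit's st.write_stream consumes a generator of chunks and concatenates them itself. The framework-agnostic sketch below illustrates both shapes; fake_token_stream is a hypothetical stand-in for a model provider's streaming API, not a real library call.

```python
from typing import Iterator


def fake_token_stream(text: str) -> Iterator[str]:
    """Hypothetical stand-in for an LLM streaming API: yields one word at a time."""
    for i, word in enumerate(text.split()):
        # Re-insert the space that split() removed, except before the first word
        yield word if i == 0 else " " + word


def accumulate(tokens: Iterator[str]) -> Iterator[str]:
    """Yield the growing partial reply, the shape a Gradio chat handler yields."""
    partial = ""
    for token in tokens:
        partial += token
        yield partial


if __name__ == "__main__":
    # Each printed line is one UI refresh in a Gradio-style chat
    for snapshot in accumulate(fake_token_stream("Streaming makes replies feel faster")):
        print(snapshot)
```

The raw chunks from fake_token_stream are what a chunk-based API such as st.write_stream expects, whereas accumulate produces the cumulative snapshots a Gradio handler would yield.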

AI Chat UI Builders and Use Cases

You can build the front end of AI apps for many use cases, depending on the app's purpose. Generally, the AI chat UI you want to build will fall into one or more of the following categories.

- Multimodal AI: Apps in this category involve audio-text-to-text, image-text-to-text, visual question answering, document question answering, video-text-to-text, visual document retrieval, and similar tasks.

- Computer vision: In this category, you can build AI apps for image classification in a chat messaging app, video classification in a conferencing app like Zoom, and text-to-video generation.

- Natural Language Processing: Developers can build UIs for summarization in a video calling app, translation in a chat app, and AI chatbots for question answering and text generation.

- Audio: In this category, you can create front-end UIs for text-to-speech, text-to-audio, automatic speech recognition for video conferencing, audio classification for voice messaging, and voice activity detection apps.

Tools For Building AI Chat UIs in Python

Depending on a project's objectives, there are several ways and UI styles to build AI apps. For example, a multimodal AI app that lets users send text, voice, and image inputs may look quite different from one that only supports voice cloning. For Python-based AI projects, you can build the front end with frameworks like Gradio, Streamlit, and Chainlit. Other Python frameworks exist for building web and data apps, but they are beyond the scope of this article.

Our primary focus is on creating front-end interfaces for AI apps. Each tool has its strengths and limitations, so the following sections discuss them with sample code and implementation examples to help you pick the right framework for your next AI app.

1. Gradio: Build UIs for Testing and Deploying AI Apps

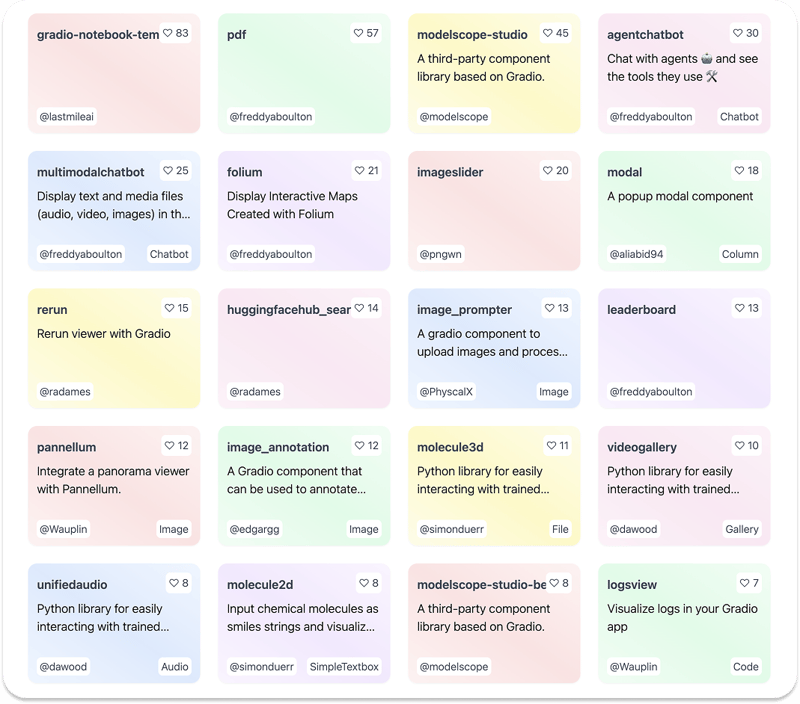

Gradio is an open-source Python library from Hugging Face that allows developers to create UIs for LLMs, agents, and real-time AI voice and video applications. It provides a fast, low-code way to test and share AI applications through a web interface and suits a wide range of AI use cases.

- Easy to start: You can set up a fully functioning interface for an AI app with just a few lines of Python code.

- Showcase and share: Easily embed UIs in Jupyter Notebook and Google Colab, or share them with others through a temporary public link.

- Deploy to production: You can permanently host the UI of a Gradio-powered AI project on Hugging Face Spaces.
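To illustrate how little code a Gradio app needs, here is a minimal sketch of a standard gr.Interface with one text input and one text output. The greet function is a hypothetical placeholder for a real model call. Gradio is imported inside build_demo only so that greet can be tested without Gradio installed; in a normal app you would import it at the top of the file.

```python
def greet(name: str) -> str:
    """Hypothetical placeholder model: swap in a call to your LLM or classifier."""
    return f"Hello, {name}!"


def build_demo():
    """Wrap greet() in a standard (input -> output) Gradio interface."""
    import gradio as gr  # deferred import so greet() is testable without Gradio

    return gr.Interface(
        fn=greet,        # function called on every submit
        inputs="text",   # shorthand for a gr.Textbox input
        outputs="text",  # shorthand for a gr.Textbox output
        title="Minimal Gradio demo",
    )
```

Running build_demo().launch() serves the app locally; passing share=True additionally asks Gradio to create a temporary public link you can send to testers.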

Key Features and Benefits of Gradio

Gradio is one of the most widely used Python libraries for building front ends for machine learning (ML) applications. Its popularity stems from feature-rich components and building blocks for constructing AI chat interfaces with minimal effort. The following are Gradio's key features.

- Custom components: Create your own components as UI libraries and integrate them into your Gradio-powered AI apps. Custom components can be published as Python packages and shared with the developer community.

- Agent UI: Easily create a Gradio interface for agents that perform tasks such as text-to-image generation, using Hugging Face's transformers.agents.

- Support for leading AI building platforms: Gradio integrates well with leading Python frameworks for building AI and agent applications, such as LangChain, LlamaIndex, and Embedchain.

- Native intermediate thoughts: Use the built-in support for displaying chain-of-thought (CoT) steps to build interfaces and demos for projects that use reasoning LLMs.

- Support for different AI app use cases: An essential quality of a UI framework for AI apps is its ability to handle different use cases. Gradio's gradio.Interface class supports four kinds of interfaces. Standard interfaces have separate inputs and outputs, which suits image classifiers or speech-to-text apps. Output-only interfaces, such as image generation, take no input. Input-only interfaces suit, for example, an LLM app that stores uploaded images in an external database. Unified interfaces use the same component for input and output, so the output overwrites the input; a UI that autocompletes text or code is a good example.

- Custom interfaces: Gradio's Blocks API lets you quickly implement bespoke UIs for AI projects.

- Chatbot UI: Use Gradio's high-level abstraction gr.ChatInterface to quickly build chatbot and assistant interfaces.
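As a sketch of the gr.ChatInterface abstraction, the example below wires a hypothetical echo handler into a chat UI. ChatInterface calls the handler with the new message and the chat history; because this handler is a generator, each yielded string replaces the bot's partial reply, which produces a streaming effect. The handler name, reply text, and title are illustrative assumptions.

```python
import time
from typing import Iterator


def echo_stream(message: str, history: list) -> Iterator[str]:
    """Hypothetical handler: streams the user's message back one character at a time."""
    reply = f"You said: {message}"
    for end in range(1, len(reply) + 1):
        time.sleep(0.01)   # simulate per-token latency
        yield reply[:end]  # Gradio replaces the partial bot message on every yield


def build_chat():
    """Wrap echo_stream() in Gradio's high-level chat UI."""
    import gradio as gr  # deferred import so echo_stream() is testable without Gradio

    return gr.ChatInterface(fn=echo_stream, title="Echo bot")
```

Calling build_chat().launch() starts the chat app; replacing echo_stream with a function that streams from a real model is all it takes to turn it into an assistant.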

Gradio Installation and Quickstart

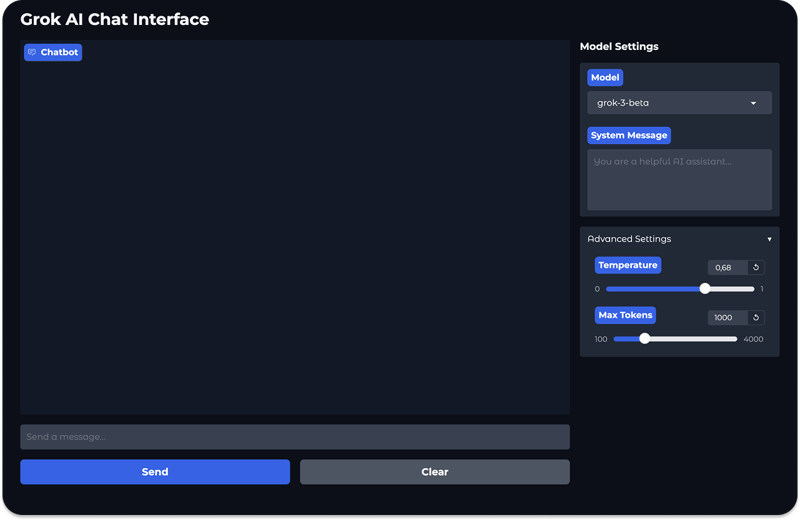

This section uses Gradio's building blocks to create the chatbot UI in the image above. First, in your favorite IDE, set up a Python virtual environment and install the dependencies with the following commands.

```bash
python -m venv venv
source venv/bin/activate
# python-dotenv provides the load_dotenv() call used in grok_ui.py
pip install gradio python-dotenv
```

Next, create a Python file named grok_ui.py and add the following sample code.

```python
import gradio as gr
import os
from dotenv import load_dotenv

# Load environment variables from .env file
load_dotenv()

# Function to handle chat interactions
def chat_with_grok(message, history, system_message, model_name, temperature, max_tokens):
    # In a real implementation, this would call the Grok API
    # For now, we'll just echo the inputs to demonstrate the UI is working
    bot_message = f"You selected model: {model_name}\nSystem message: {system_message}\nTemperature: {temperature}\nMax tokens: {max_tokens}\n\nYour message: {message}"
    return bot_message

# Create the Gradio interface
with gr.Blocks(theme=gr.themes.Soft(primary_hue="blue")) as demo:
    gr.Markdown("# Grok AI Chat Interface")

    with gr.Row():
        with gr.Column(scale=3):
            # Main chat interface
            chatbot = gr.Chatbot(
                height=600,
                show_copy_button=True,
                avatar_images=("
```
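The chat_with_grok function above only echoes its inputs. Below is a minimal sketch of a streaming version with the same parameters, assuming xAI's OpenAI-compatible endpoint at https://api.x.ai/v1, the openai Python package (pip install openai), and an XAI_API_KEY entry in your .env file. The environment variable name and the build_messages helper are our own assumptions, model_name must be a model ID xAI actually serves, and how the yielded text reaches the chatbot depends on the rest of the UI code.

```python
import os
from typing import Iterator

XAI_BASE_URL = "https://api.x.ai/v1"  # assumption: xAI's OpenAI-compatible endpoint


def build_messages(message: str, history: list, system_message: str) -> list[dict]:
    """Hypothetical helper: convert Gradio chat history into OpenAI-style messages.

    Accepts role/content dicts (Gradio's "messages" format) and
    [user, assistant] pairs (the older "tuples" format).
    """
    messages = [{"role": "system", "content": system_message}] if system_message else []
    for item in history:
        if isinstance(item, dict):
            messages.append({"role": item["role"], "content": item["content"]})
        else:
            user_text, bot_text = item
            if user_text:
                messages.append({"role": "user", "content": user_text})
            if bot_text:
                messages.append({"role": "assistant", "content": bot_text})
    messages.append({"role": "user", "content": message})
    return messages


def chat_with_grok(message, history, system_message, model_name, temperature, max_tokens) -> Iterator[str]:
    """Stream a Grok reply, yielding the growing partial response."""
    from openai import OpenAI  # deferred so build_messages() is testable without openai

    # XAI_API_KEY is a hypothetical variable name loaded from .env by load_dotenv()
    client = OpenAI(api_key=os.environ["XAI_API_KEY"], base_url=XAI_BASE_URL)
    stream = client.chat.completions.create(
        model=model_name,
        messages=build_messages(message, history, system_message),
        temperature=temperature,
        max_tokens=int(max_tokens),  # sliders may return floats
        stream=True,
    )
    partial = ""
    for chunk in stream:
        # Some chunks (e.g. the final one) carry no text delta
        if chunk.choices and chunk.choices[0].delta.content:
            partial += chunk.choices[0].delta.content
            yield partial
```

Keeping the history conversion in a separate pure function makes it easy to unit-test without network access or an API key.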
