LLAMA 4: Meta's New AI Models Break Context Length Records and Challenge Industry Leaders

LLAMA 4: Meta's New AI Models Break Context Length Records and Challenge Industry Leaders Llama 4: Leading Multimodal Intelligence - Newest model suite offering unrivaled speed and efficiency Meta has surprised the AI community with its unexpected release of LLAMA 4, the latest generation of its open-source large language models. With groundbreaking features like a 10-million token context window and mixture-of-experts architecture, these models are poised to transform how developers and businesses leverage AI. Let's dive into what makes LLAMA 4 significant and how it compares to other leading AI models. The LLAMA 4 Family: Three Powerful Models As shown in the image above, Meta has introduced three models in the LLAMA 4 lineup, each with distinct capabilities: LLAMA 4 Scout - The smallest model with 17 billion active parameters (109 billion total) featuring an industry-leading 10 million token context window. Despite its relatively small size, it outperforms models like Gemma 3 and Gemini 2.0 Flash across multiple benchmarks. Optimized for inference speed. LLAMA 4 Maverick - A 400 billion parameter model (17 billion active) with 128 experts, offering a 1 million token context window and native multimodal capabilities. Benchmarks place it second only to Gemini 2.5 Pro Experimental, outperforming GPT-4 and Claude 3.5. LLAMA 4 Behemoth - An enormous 2 trillion parameter model (288 billion active) with 16 experts that's still in training. Described as "The most intelligent teacher model for distillation," preliminary benchmarks suggest it will outperform Claude Sonnet 3.7, Gemini 2.0 Pro, and GPT-4.5, particularly in coding and reasoning tasks. Game-Changing Context Length The most revolutionary aspect of LLAMA 4 is Scout's unprecedented 10 million token context window - approximately 7,500 pages of text in a single prompt. For perspective: You could input the entire works of Shakespeare, Lord of the Rings, and Harry Potter series simultaneously It can process millions of lines of code in a single request It can analyze over 20 hours of video footage This is a massive leap from LLAMA 3's 128,000 token context window and far exceeds what competitors offer. Even the most advanced models from OpenAI and Anthropic typically max out at 128,000 tokens, while Gemini models reach 1 million. Mixture of Experts: The Secret Sauce LLAMA 4's efficiency comes from its "mixture of experts" (MoE) architecture. Unlike traditional LLMs where every token activates all parameters, MoE models use a selective approach: Each token activates only the parameters it needs For example, LLAMA 4 Maverick has 400 billion total parameters but only uses 17 billion active parameters per token The token passes through a shared expert and just one of 128 specialized experts The result is comparable intelligence to a much larger model at a fraction of the computational cost This architecture enables LLAMA 4 Maverick to run on a single H100 GPU, making it significantly more accessible for developers and businesses while reducing costs and latency. Multimodal Capabilities All LLAMA 4 models are natively multimodal, able to process: Text Images Video (in the open-source versions) They were trained with a wide variety of images and video frames, giving them broad visual understanding, including temporal activities and related images. This multimodal approach positions LLAMA 4 to handle complex tasks requiring cross-modal reasoning. Performance Against Competitors LLAMA 4 Maverick is particularly impressive in benchmarks: Price-performance ratio much better than GPT-4 Best-in-class for image reasoning and understanding Outperforms GPT-4 on multilingual capability Achieves 69.8 on GPQA Diamond compared to GPT-4's 53.6 Ranked first on LM Arena alongside Gemini 2.5 Pro with an ELO score around 420 LLAMA 4 Scout also shows remarkable results across benchmarks, outperforming previous generation models and competitors like Mistral and Gemma in most categories. Practical Applications The massive context window of LLAMA 4 Scout opens up new possibilities: Multi-document summarization Parsing extensive user activities Advanced personalization Reasoning over vast codebases Video content analysis Some experts suggest this could potentially eliminate the need for retrieval-augmented generation (RAG) in many use cases, as the model can directly process enormous amounts of information without external retrieval systems. Accessibility and Pricing Both Scout and Maverick are available on platforms like Groq with competitive pricing: Scout: 11¢ per million input tokens, 34¢ per million output tokens Maverick: 50¢ per million input tokens, 77¢ per million output tokens At 460+ tokens per second on Groq, Scout also offers impressive inference speed. You can download the models directly from the official Llama website. The Open Source Adv

LLAMA 4: Meta's New AI Models Break Context Length Records and Challenge Industry Leaders

Llama 4: Leading Multimodal Intelligence - Newest model suite offering unrivaled speed and efficiency

Meta has surprised the AI community with its unexpected release of LLAMA 4, the latest generation of its open-source large language models. With groundbreaking features like a 10-million token context window and mixture-of-experts architecture, these models are poised to transform how developers and businesses leverage AI. Let's dive into what makes LLAMA 4 significant and how it compares to other leading AI models.

The LLAMA 4 Family: Three Powerful Models

As shown in the image above, Meta has introduced three models in the LLAMA 4 lineup, each with distinct capabilities:

LLAMA 4 Scout - The smallest model with 17 billion active parameters (109 billion total) featuring an industry-leading 10 million token context window. Despite its relatively small size, it outperforms models like Gemma 3 and Gemini 2.0 Flash across multiple benchmarks. Optimized for inference speed.

LLAMA 4 Maverick - A 400 billion parameter model (17 billion active) with 128 experts, offering a 1 million token context window and native multimodal capabilities. Benchmarks place it second only to Gemini 2.5 Pro Experimental, outperforming GPT-4 and Claude 3.5.

LLAMA 4 Behemoth - An enormous 2 trillion parameter model (288 billion active) with 16 experts that's still in training. Described as "The most intelligent teacher model for distillation," preliminary benchmarks suggest it will outperform Claude Sonnet 3.7, Gemini 2.0 Pro, and GPT-4.5, particularly in coding and reasoning tasks.

Game-Changing Context Length

The most revolutionary aspect of LLAMA 4 is Scout's unprecedented 10 million token context window - approximately 7,500 pages of text in a single prompt. For perspective:

- You could input the entire works of Shakespeare, Lord of the Rings, and Harry Potter series simultaneously

- It can process millions of lines of code in a single request

- It can analyze over 20 hours of video footage

This is a massive leap from LLAMA 3's 128,000 token context window and far exceeds what competitors offer. Even the most advanced models from OpenAI and Anthropic typically max out at 128,000 tokens, while Gemini models reach 1 million.

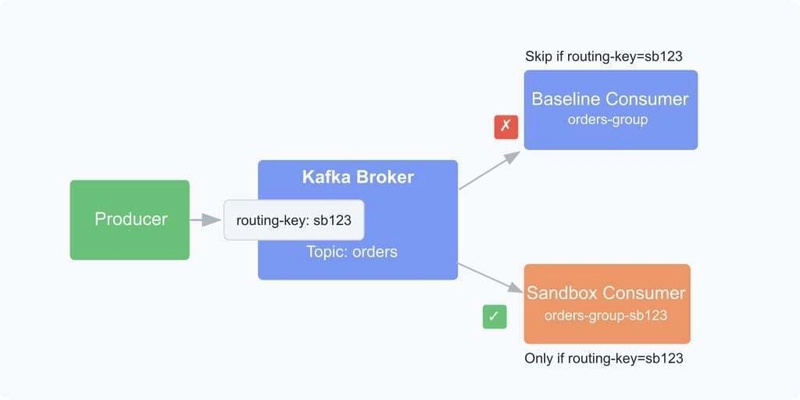

Mixture of Experts: The Secret Sauce

LLAMA 4's efficiency comes from its "mixture of experts" (MoE) architecture. Unlike traditional LLMs where every token activates all parameters, MoE models use a selective approach:

- Each token activates only the parameters it needs

- For example, LLAMA 4 Maverick has 400 billion total parameters but only uses 17 billion active parameters per token

- The token passes through a shared expert and just one of 128 specialized experts

- The result is comparable intelligence to a much larger model at a fraction of the computational cost

This architecture enables LLAMA 4 Maverick to run on a single H100 GPU, making it significantly more accessible for developers and businesses while reducing costs and latency.

Multimodal Capabilities

All LLAMA 4 models are natively multimodal, able to process:

- Text

- Images

- Video (in the open-source versions)

They were trained with a wide variety of images and video frames, giving them broad visual understanding, including temporal activities and related images. This multimodal approach positions LLAMA 4 to handle complex tasks requiring cross-modal reasoning.

Performance Against Competitors

LLAMA 4 Maverick is particularly impressive in benchmarks:

- Price-performance ratio much better than GPT-4

- Best-in-class for image reasoning and understanding

- Outperforms GPT-4 on multilingual capability

- Achieves 69.8 on GPQA Diamond compared to GPT-4's 53.6

- Ranked first on LM Arena alongside Gemini 2.5 Pro with an ELO score around 420

LLAMA 4 Scout also shows remarkable results across benchmarks, outperforming previous generation models and competitors like Mistral and Gemma in most categories.

Practical Applications

The massive context window of LLAMA 4 Scout opens up new possibilities:

- Multi-document summarization

- Parsing extensive user activities

- Advanced personalization

- Reasoning over vast codebases

- Video content analysis

Some experts suggest this could potentially eliminate the need for retrieval-augmented generation (RAG) in many use cases, as the model can directly process enormous amounts of information without external retrieval systems.

Accessibility and Pricing

Both Scout and Maverick are available on platforms like Groq with competitive pricing:

- Scout: 11¢ per million input tokens, 34¢ per million output tokens

- Maverick: 50¢ per million input tokens, 77¢ per million output tokens

At 460+ tokens per second on Groq, Scout also offers impressive inference speed.

You can download the models directly from the official Llama website.

The Open Source Advantage (With Caveats)

While Meta describes LLAMA 4 as open source, there are some limitations:

- Applications with over 700 million users need permission to use the models

- Users must acknowledge "built with LLAMA" on their websites

- Download requires completing a form on Hugging Face

These limitations aside, the open-source nature of LLAMA 4 brings several benefits:

- Local deployment for those with sufficient hardware

- Ability to run on specialized hardware for faster inference

- Lower costs compared to API-based models

- Freedom to build and customize without API usage fees

What This Means for Developers

For developers and AI engineers, LLAMA 4 represents a significant advancement:

Efficient Local Deployment: The mixture of experts architecture makes running powerful models locally more feasible than ever before.

Extended Context Applications: The 10 million token context enables entirely new applications that previously weren't possible.

Competitive Alternative: With performance rivaling proprietary models at lower costs, LLAMA 4 provides a viable alternative to GPT-4 and Claude.

Multilingual Improvement: With 10x more multilingual tokens than LLAMA 3, these models perform better for non-English use cases.

Looking Forward

Meta has indicated that these models are just the beginning of the LLAMA 4 collection. We can expect:

- Improved versions of current models

- The official release of LLAMA 4 Behemoth (currently in preview according to the image)

- Potential specialized variants for specific domains

With each generation of LLAMA models improving rapidly, the gap between open-source and proprietary models continues to narrow, giving developers more powerful and accessible tools to build AI-powered applications.

LLAMA 4 represents another leap forward in AI model capabilities, particularly in context length and efficiency. As these models become available to developers worldwide, we can expect a new wave of applications that leverage extended context to solve problems previously considered impractical for AI. Whether you're building complex multimodal systems or simply looking for powerful, cost-effective AI models, LLAMA 4 brings exciting possibilities to the AI ecosystem.

For more information and to access the models, visit the Llama website.

![[The AI Show Episode 142]: ChatGPT’s New Image Generator, Studio Ghibli Craze and Backlash, Gemini 2.5, OpenAI Academy, 4o Updates, Vibe Marketing & xAI Acquires X](https://www.marketingaiinstitute.com/hubfs/ep%20142%20cover.png)

![[DEALS] The Premium Learn to Code Certification Bundle (97% off) & Other Deals Up To 98% Off – Offers End Soon!](https://www.javacodegeeks.com/wp-content/uploads/2012/12/jcg-logo.jpg)

![From drop-out to software architect with Jason Lengstorf [Podcast #167]](https://cdn.hashnode.com/res/hashnode/image/upload/v1743796461357/f3d19cd7-e6f5-4d7c-8bfc-eb974bc8da68.png?#)

.png?#)

(1).jpg?width=1920&height=1920&fit=bounds&quality=80&format=jpg&auto=webp#)

-Mario-Kart-World-Hands-On-Preview-Is-It-Good-00-08-36.jpg?width=1920&height=1920&fit=bounds&quality=80&format=jpg&auto=webp#)

_NicoElNino_Alamy.png?#)

_Igor_Mojzes_Alamy.jpg?#)

.webp?#)

.webp?#)

![Gemini can be the biggest AI platform so long as Google integrates it in more areas [Video]](https://i0.wp.com/9to5google.com/wp-content/uploads/sites/4/2025/03/Gemini-Live-with-Im-in-my-Gemini-era-sticker.jpg?resize=1200%2C628&quality=82&strip=all&ssl=1)

![Apple Considers Delaying Smart Home Hub Until 2026 [Gurman]](https://www.iclarified.com/images/news/96946/96946/96946-640.jpg)

![iPhone 17 Pro Won't Feature Two-Toned Back [Gurman]](https://www.iclarified.com/images/news/96944/96944/96944-640.jpg)

![Tariffs Threaten Apple's $999 iPhone Price Point in the U.S. [Gurman]](https://www.iclarified.com/images/news/96943/96943/96943-640.jpg)

![[Tutorial] Chapter 7: Workflow](https://media2.dev.to/dynamic/image/width=800%2Cheight=%2Cfit=scale-down%2Cgravity=auto%2Cformat=auto/https%3A%2F%2Fdev-to-uploads.s3.amazonaws.com%2Fuploads%2Farticles%2Fuk4svcapth41bdi8mgmm.png)