LangGraph + DeepSeek-R1 + Function Call + Agentic RAG (Insane Results)

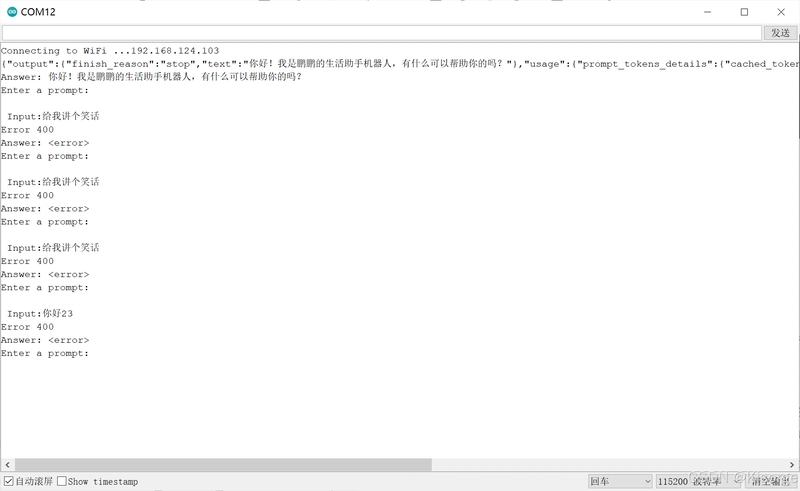

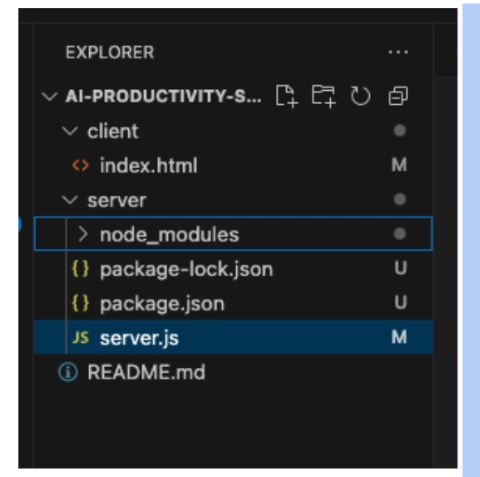

In this Story, I have a super quick tutorial showing you how to build a powerful multi-agent chatbot for your business or personal use with LangGraph, DeepSeek-R1, function calling, and Agentic RAG.

In my last Story, I talked about LangChain and DeepSeek-R1. One of the viewers asked if I could do the same with LangGraph.

I have fulfilled that request, and I have also enhanced the chatbot with function calling and Agentic RAG.

“But Gao, DeepSeek-R1 doesn’t support function calls!”

Yes, you’re right, but I came up with a clever idea. If you stay until the end of the video, I’ll show you how to do it, too!

I have mentioned function calling many times in my previous articles. As we already know, function calling is a technique that lets an LLM autonomously select and call predefined functions based on the conversation content. These functions can be used to perform various tasks.

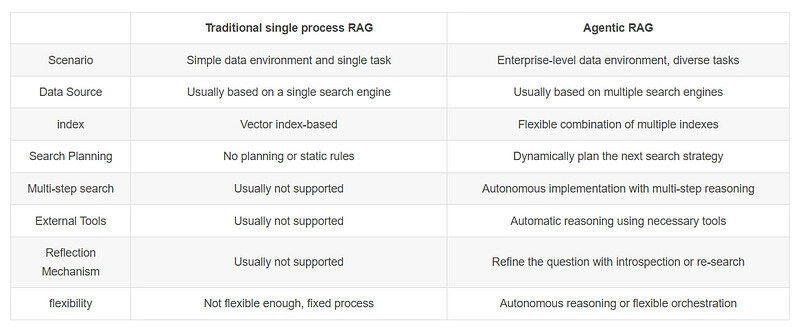

Agentic RAG is a type of RAG that uses agents to fix the problems of conventional RAG. The problem with conventional RAG is that the AI processes everything in a fixed order, so if one step fails, such as retrieval returning no data, all subsequent processing becomes meaningless.

Agentic RAG addresses this by using an “agent.” The agent not only chooses which tool to use but can also loop, repeating steps until it has the information it needs and meets the user’s expectations.

So, let me give you a quick demo of a live chatbot to show you what I mean.

We have two different databases, research and development, where we can get our answers. I have created some example questions that you can test. Let me enter the question: ‘What is the status of Project A?’ Here is how the chatbot generates the output.

It uses RecursiveCharacterTextSplitter to split the text into smaller chunks and converts them into documents using splitter.create_documents(). These documents are stored as vector embeddings in ChromaDB for efficient similarity search.
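To make the chunking step concrete, here is a minimal sketch of the idea behind recursive character splitting, written in plain Python. It is a simplified illustration and not LangChain's implementation: the function name `split_text`, the separator list, and the chunk size are assumptions chosen for the example, and it ignores chunk overlap. The idea is to try the coarsest separator first and fall back to finer ones only for pieces that are still too long.

```python
from typing import List, Sequence


def split_text(
    text: str,
    chunk_size: int,
    separators: Sequence[str] = ("\n\n", "\n", " ", ""),
) -> List[str]:
    """Split text into chunks of at most chunk_size characters.

    Hypothetical helper: tries each separator in turn, coarsest first.
    """
    if len(text) <= chunk_size:
        return [text] if text else []

    sep, finer = separators[0], separators[1:]
    if sep == "":
        # Last resort: cut at fixed character positions.
        return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]

    chunks: List[str] = []
    current = ""
    for piece in text.split(sep):
        if not piece:
            continue  # skip empty pieces produced by repeated separators
        candidate = piece if not current else current + sep + piece
        if len(candidate) <= chunk_size:
            current = candidate  # keep packing pieces into the current chunk
            continue
        if current:
            chunks.append(current)
        if len(piece) > chunk_size:
            # The piece alone is too long: retry with the finer separators.
            chunks.extend(split_text(piece, chunk_size, finer))
            current = ""
        else:
            current = piece
    if current:
        chunks.append(current)
    return chunks


# Toy text, invented for the example.
sample = "Project A is on track.\n\nProject B is delayed by two weeks."
chunks = split_text(sample, chunk_size=30)
for chunk in chunks:
    print(repr(chunk))
```

In the real pipeline, each chunk would then be wrapped in a document object, embedded, and written to the vector store.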

A retriever tool is created using create_retriever_tool in LangChain, and an AI agent classifies each user query as research or development, connects to DeepSeek-R1 (temperature = 0.7) for responses, and retrieves relevant documents.

One of the big problems I faced when developing the chatbot was that DeepSeek-R1 does not support function calling the way OpenAI models do, so I created a text-based command system instead. Rather than forcing DeepSeek-R1 to call functions directly, I designed it to output specific text formats.

Then, I built a wrapper that converts these text commands into tool actions. This way, we get the same functionality as function calling, but in a way that DeepSeek-R1 can handle.
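As a rough sketch of what such a wrapper could look like, the snippet below parses a text command out of the model's reply and turns it into a structured tool call. The command prefixes (`SEARCH_RESEARCH`, `SEARCH_DEV`), the tool names, and the `ToolCall` class are hypothetical placeholders for illustration, not the exact format used in this project. It also strips the `<think>...</think>` reasoning block that DeepSeek-R1 typically emits, so that a command mentioned while reasoning is not executed by accident.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical mapping from text command prefixes to tool names.
COMMANDS = {
    "SEARCH_RESEARCH": "research_db_tool",
    "SEARCH_DEV": "development_db_tool",
}


@dataclass
class ToolCall:
    name: str   # which tool to run
    query: str  # the argument passed to that tool


# Reasoning block that should not be interpreted as a command.
_THINK = re.compile(r"<think>.*?</think>", re.DOTALL)
# A command must sit on its own line: PREFIX: query text
_COMMAND = re.compile(
    r"^\s*(" + "|".join(map(re.escape, COMMANDS)) + r")\s*:\s*(.+?)\s*$",
    re.MULTILINE,
)


def parse_tool_call(model_output: str) -> Optional[ToolCall]:
    """Return a ToolCall if the reply contains a command, else None."""
    visible = _THINK.sub("", model_output)
    match = _COMMAND.search(visible)
    if match is None:
        return None  # plain answer, no tool needed
    return ToolCall(name=COMMANDS[match.group(1)], query=match.group(2))


reply = "<think>The user asks about a project.</think>\nSEARCH_DEV: status of Project A"
print(parse_tool_call(reply))
print(parse_tool_call("Hello! How can I help you today?"))
```

Returning `None` for a plain reply gives the caller a simple signal that no tool is needed and the message can be shown to the user directly.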

A grading function checks whether any documents were retrieved: if so, the process continues; if not, the query is rewritten for clarity. A generation function summarizes the retrieved documents using DeepSeek-R1 and formats the response.

A decision function determines whether tools are needed based on the message content. A LangGraph workflow manages the whole process: it starts with the agent, routes the query to retrieval if needed, generates an answer when documents are found, and rewrites the question and retries otherwise. Finally, the chatbot displays the final answer in a green box.
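The retrieve, grade, rewrite, and generate loop described above can be sketched in plain Python, without the LangGraph API, so the control flow is easy to follow. In the real graph, each function would be a node and `grade` would drive a conditional edge. Everything here is an assumption made for illustration: the function names, the toy database, the stub functions standing in for DeepSeek-R1 and ChromaDB, and the `max_rewrites` cap that keeps the loop from running forever.

```python
from typing import Callable, List


def grade(docs: List[str]) -> str:
    """Route to 'generate' when anything was retrieved, else to 'rewrite'."""
    return "generate" if docs else "rewrite"


def run_workflow(
    question: str,
    retrieve: Callable[[str], List[str]],
    rewrite: Callable[[str], str],
    generate: Callable[[str, List[str]], str],
    max_rewrites: int = 2,  # assumption: cap retries so the loop terminates
) -> str:
    query = question
    for attempt in range(max_rewrites + 1):
        docs = retrieve(query)
        if grade(docs) == "generate":
            return generate(question, docs)
        if attempt < max_rewrites:
            query = rewrite(query)  # try again with a clearer query
    return "No relevant documents were found."


# Toy stand-ins for the vector store and the LLM (invented for the example).
TOY_DB = {"project a status": ["Project A: placeholder status note."]}
calls: List[str] = []


def toy_retrieve(query: str) -> List[str]:
    calls.append(query)  # record each retrieval attempt
    return TOY_DB.get(query, [])


def toy_rewrite(query: str) -> str:
    return "project a status"  # a real system would ask the LLM to rephrase


def toy_generate(question: str, docs: List[str]) -> str:
    return f"Q: {question} | based on {len(docs)} document(s): {docs[0]}"


answer = run_workflow(
    "What is the status of Project A?", toy_retrieve, toy_rewrite, toy_generate
)
print(answer)
print(calls)
```

Here the first retrieval finds nothing, the query is rewritten once, and the second retrieval succeeds, which is the retry behavior that a fixed-order RAG pipeline lacks.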

By the end of this video, you will understand what Agentic RAG is, why we need it, and how function calling + DeepSeek-R1 can be used to create a super AI Agent.
