Getting LLMs to Create, Play, Evaluate, and Improve Games

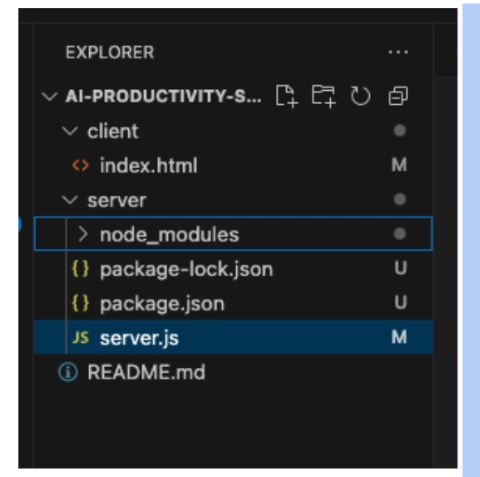

The fish powers up with bubbles and can crush rocks. I managed to make Claude handle everything from game concept creation and evaluation to implementation and improvement, creating all this without writing a single line of code, just through conversation. abagames.github.io/chat-craft-c... (ABA, @abagames.bsky.social, 2025-03-20T23:23:25.057Z)

When I was using Claude 3.5, I created a prompt project that handled everything from game idea generation to implementation, but I still needed to directly adjust the code at the end.

Creating Mini-Games in the Age of Generative AI - Generating Ideas, Code, Graphics, and Sound (ABA Games, Jan 26)

The problem was that Claude itself wasn't playing the games, so it couldn't tell whether a game's balance was good or bad.

So this time, I decided to have Claude play the games it created.

abagames / chat-craft-click

ChatCraftClick: AI-Assisted One-Button Game Development Platform

ChatCraftClick is a platform that enables you to easily create, test, and improve high-quality one-button games using interactive AI. It allows anyone to produce innovative and balanced games in a short time, even without technical knowledge.

Project Overview

This platform aims to enable anyone to create high-quality one-button games, regardless of their game development experience. Its distinctive feature is the ability to interactively execute the entire process from idea generation to automatic evaluation, implementation, and refinement. It resolves the challenges of trial-and-error and subjective evaluation in traditional game development, realizing a data-driven, objective development approach.

Features

1. Data-Driven Game Design and Automatic Evaluation System

- A quantitative indicator-based game design approach that doesn't rely on subjective judgment

- Automatic measurement of metrics like "skill gap," "monotonous input resistance," and "difficulty progression"

- A test environment that simulates player behavior patterns to provide objective evaluation

- …
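The README excerpt names these metrics without defining them. As a rough illustration only, here is a minimal sketch of how such indicators could be computed from simulated play results. The formulas, function names, and sample numbers are all assumptions made for this example, not the definitions used in ChatCraftClick.

```javascript
// Hypothetical metric definitions: the README names the metrics, but the
// formulas below are assumptions made for illustration.

const mean = (xs) => xs.reduce((a, b) => a + b, 0) / xs.length;

// Skill gap: how much better a skilled simulated player scores than an
// unskilled one, as a fraction of the skilled score (0 = no gap).
function skillGap(skilledScores, unskilledScores) {
  const skilled = mean(skilledScores);
  if (skilled <= 0) return 0;
  return Math.max(0, (skilled - mean(unskilledScores)) / skilled);
}

// Monotonous input resistance: 1 when mindless input patterns score
// nothing, 0 when the best of them matches skilled play.
function monotonousInputResistance(skilledScores, monotoneScoresByPattern) {
  const skilled = mean(skilledScores);
  if (skilled <= 0) return 0;
  const best = Math.max(...Object.values(monotoneScoresByPattern).map(mean));
  return Math.min(1, Math.max(0, 1 - best / skilled));
}

// Difficulty progression: fraction of consecutive time segments in which
// the death rate does not decrease (1 = difficulty never eases off).
function difficultyProgression(deathRatePerSegment) {
  if (deathRatePerSegment.length < 2) return 0;
  let rising = 0;
  for (let i = 1; i < deathRatePerSegment.length; i++) {
    if (deathRatePerSegment[i] >= deathRatePerSegment[i - 1]) rising++;
  }
  return rising / (deathRatePerSegment.length - 1);
}

// Made-up sample data standing in for simulated play results.
const report = {
  skillGap: skillGap([100, 120], [20, 40]),
  monotonousInputResistance: monotonousInputResistance([100, 120], {
    neverPress: [0, 0],
    alwaysHold: [50, 60],
  }),
  difficultyProgression: difficultyProgression([0.1, 0.2, 0.2, 0.5]),
};
console.log(report);
```

Normalizing each indicator to the 0..1 range is a design choice of this sketch; it makes it easy to compare concepts or to apply a threshold per metric.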

Claude has a feature called the analysis tool (REPL), which lets it execute code on its own and adjust its behavior based on the results. This time, I used it to build a system that simulates games and evaluates them automatically.

I prepared a framework called one-button-game-test-framework.js that simulates player inputs and object behaviors in the game. By running it in the analysis tool, Claude can evaluate whether the game is actually playable and whether its balance is appropriate. Claude first comes up with five game concepts, evaluates each one, adopts the one with the best evaluation, and then implements it while making improvements based on the evaluation results.
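To make the evaluate-then-select loop concrete, here is a minimal sketch of a headless simulation that scores five candidate concepts and picks the best one. It is not the code of one-button-game-test-framework.js: the toy flap game, the three input policies, the scoring rule, and every name and number are assumptions chosen for illustration.

```javascript
// Toy headless one-button game (an assumption for illustration): y grows
// downward, pressing the button gives an upward flap, and leaving the
// 0..100 playfield ends the run.
const MAX_TICKS = 600;

// Returns the number of ticks survived before game over.
function simulate(concept, policy) {
  let y = 50;
  let vy = 0;
  for (let tick = 0; tick < MAX_TICKS; tick++) {
    if (policy({ y, vy, tick })) vy = -concept.flapPower;
    vy += concept.gravity;
    y += vy;
    if (y < 0 || y > 100) return tick;
  }
  return MAX_TICKS;
}

// Simulated input patterns: each policy decides whether the button is down.
const policies = {
  neverPress: () => false,
  alwaysHold: () => true,
  reactive: ({ y }) => y > 50, // stand-in for a skilled player
};

// Score a concept: reward skilled survival, penalize mindless survival.
function evaluate(concept) {
  const skilled = simulate(concept, policies.reactive);
  const mindless = Math.max(
    simulate(concept, policies.neverPress),
    simulate(concept, policies.alwaysHold)
  );
  return Math.max(0, skilled - mindless) / MAX_TICKS;
}

// Five hypothetical concepts, reduced here to two tuning parameters each.
const concepts = [
  { name: "floaty", gravity: 0.2, flapPower: 2 },
  { name: "heavy", gravity: 1, flapPower: 0.5 },
  { name: "hover", gravity: 1, flapPower: 1 },
  { name: "rocket", gravity: 0.5, flapPower: 20 },
  { name: "balanced", gravity: 0.5, flapPower: 5 },
];

// Evaluate every concept and adopt the one with the highest score.
const ranked = concepts
  .map((c) => ({ name: c.name, score: evaluate(c) }))
  .sort((a, b) => b.score - a.score);
const best = ranked[0];
console.log(ranked, "adopted:", best.name);
```

With these assumed numbers, "hover" scores zero because holding the button keeps the player in place forever, while "heavy" and "rocket" score zero because even the reactive policy dies quickly. A real evaluation would need richer games and more varied input patterns than this toy example.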

Also, this time I implemented the games using p5.js, so they can be played directly in the chat UI as a Claude Artifact. If you have a complaint after playing, like "there are too many enemies," you can simply tell Claude, and it will make reasonable adjustments.

By having Claude itself play the games, the probability of creating games that aren't viable (like those where you immediately get a game over, or where holding down a button makes you invincible) has decreased. As a result, playable games can now be created with just conversation-based fine-tuning, without having to directly modify the code.
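The two failure modes mentioned above can be probed mechanically. The following is a minimal sketch under assumed names: the `create`/`step` game interface, the probe policies, and the thresholds are hypothetical and are not taken from the actual framework.

```javascript
// Hypothetical game interface: create() returns a fresh state, and
// step(state, pressed) advances one tick and returns true on game over.
function survivalTicks(game, policy, maxTicks) {
  const state = game.create();
  for (let tick = 0; tick < maxTicks; tick++) {
    if (game.step(state, policy(tick))) return tick;
  }
  return maxTicks;
}

// Simple probe inputs: never press, always hold, press every other tick.
const probes = {
  idle: () => false,
  hold: () => true,
  alternate: (tick) => tick % 2 === 1,
};

// Flags the two non-viable cases: no probe survives the opening ticks,
// or holding the button never leads to a game over.
function checkViability(game, { maxTicks = 1000, minTicks = 10 } = {}) {
  const issues = [];
  const results = Object.values(probes).map((p) => survivalTicks(game, p, maxTicks));
  if (Math.max(...results) < minTicks) issues.push("immediate game over");
  if (survivalTicks(game, probes.hold, maxTicks) >= maxTicks) {
    issues.push("holding the button is invincible");
  }
  return issues;
}

// Example games (made up): a hit arrives every 20 ticks; a raised shield blocks it.
const brokenShieldGame = {
  create: () => ({ tick: 0 }),
  step(state, pressed) {
    state.tick++;
    return state.tick % 20 === 0 && !pressed; // shield is free, so holding always wins
  },
};

const drainingShieldGame = {
  create: () => ({ tick: 0, energy: 10 }),
  step(state, pressed) {
    state.tick++;
    const shieldUp = pressed && state.energy > 0;
    if (shieldUp) state.energy--; // shield costs energy while raised
    else if (!pressed) state.energy = Math.min(10, state.energy + 1); // recharge on release
    return state.tick % 20 === 0 && !shieldUp;
  },
};

const instantDeathGame = { create: () => ({}), step: () => true };

console.log(checkViability(brokenShieldGame));
console.log(checkViability(drainingShieldGame));
console.log(checkViability(instantDeathGame));
```

These probes are only a heuristic: passing them does not mean a game is fun or balanced, only that it avoids the two obvious traps.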

However, there are still plenty of issues:

- The success rate is low. You need to make quite a few attempts before an enjoyable game appears. It's common for all five game concepts to get poor evaluations and be unusable.

- The games have become mediocre. Before implementing the evaluation step, sometimes games would emerge that weren't viable as games but had novel and interesting player movements. Now that these are filtered out by the evaluation, more conventional games are being created.

I hope these issues can be resolved with something like Claude 4.0. I've noticed that my recent blog posts often end with expectations for the next generation of LLMs. Still, I think it's good to have ideas about what might be possible with more accurate models, rather than being satisfied with current ones. In my opinion, that's part of the fun of living in the AI era.
