Fast Tokenizers: How Rust is Turbocharging NLP

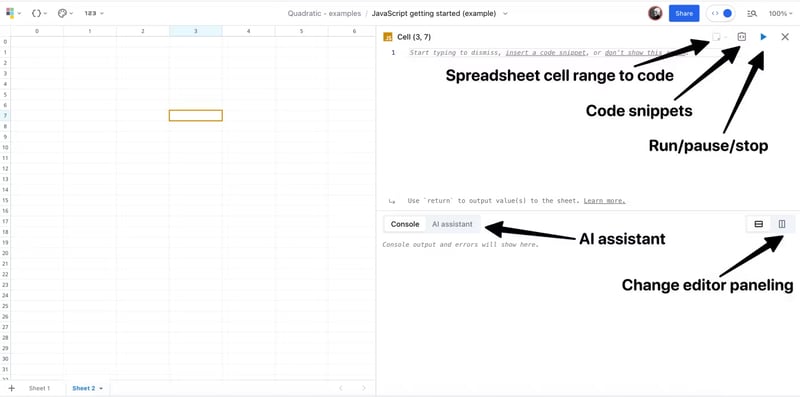

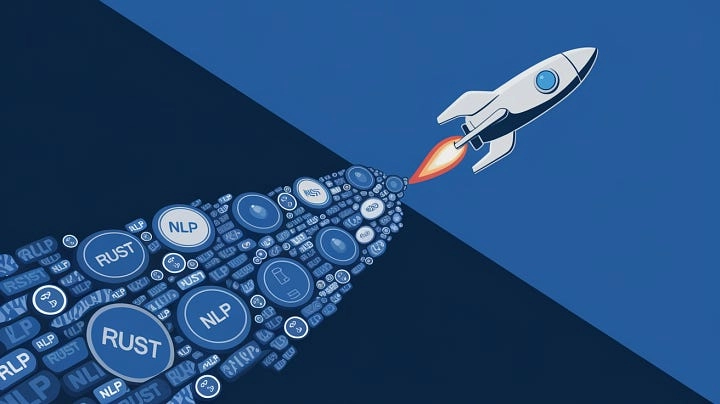

In the breakneck world of Natural Language Processing (NLP), speed isn't just a bonus - it's a critical necessity. As we build colossal language models like Llama and Gemma, the very first step of processing text - tokenization - becomes a potential bottleneck. Enter "Fast" tokenizers, the unsung heroes quietly revolutionizing NLP performance.

You've probably seen the "Fast" suffix appended to tokenizer names in libraries like Hugging Face Transformers: LlamaTokenizerFast, GemmaTokenizerFast, and a growing family. But what does "Fast" actually mean? Is it just marketing hype, or is there a real performance revolution happening under the hood?

It's a real revolution, not marketing. "Fast" tokenizers aren't just a bit faster; reported benchmarks put them more than an order of magnitude ahead of their pure-Python counterparts. And the secret weapon behind this revolution? Rust.

What Are "Fast Tokenizers" and Why Rust is the Game Changer

At their core, tokenizers are the essential first step in any NLP pipeline. They break raw text down into manageable units called tokens - words, subwords, or even characters - and map each token to an integer ID that a machine learning model can process. Speed here is paramount, especially when dealing with massive datasets or real-time applications like chatbots, where delays can cripple the user experience.
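To make the three granularities concrete, here is a toy sketch in plain Python. It uses no tokenizer library; the subword split and the tiny vocabulary are hand-picked for illustration, whereas a real subword tokenizer learns its vocabulary from data.

```python
# Toy illustration of token granularities. The vocabulary is built by hand
# from the tokens below; no real tokenizer library is used.
text = "tokenizers are fast"

# Word level: split on whitespace.
word_tokens = text.split()

# Character level: every non-space character is its own token.
char_tokens = [ch for ch in text if not ch.isspace()]

# Subword level: a hand-written split in the WordPiece style, where "##"
# marks a piece that continues the previous word.
subword_tokens = ["token", "##izers", "are", "fast"]

# Models consume integer IDs, so each token is looked up in a vocabulary.
vocab = {tok: i for i, tok in enumerate(sorted(set(subword_tokens)))}
ids = [vocab[tok] for tok in subword_tokens]

print(word_tokens)       # ['tokenizers', 'are', 'fast']
print(len(char_tokens))  # 17
print(ids)               # [3, 0, 1, 2]
```

The subword view sits between the other two: common words stay whole, while longer or rarer words break into reusable pieces, which keeps the vocabulary small without falling back to single characters.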

Traditional tokenizers, often written in pure Python, struggle to keep pace with these demands. This is where Rust, a systems programming language, steps into the spotlight. Rust delivers speeds comparable to C and C++ while enforcing memory safety at compile time. That means blazing-fast processing without the memory bugs, such as buffer overflows and use-after-free errors, that often plague performance-focused languages.

Hugging Face and the Rust-Powered Revolution

Hugging Face, a leading force in NLP, recognized this potential and built its groundbreaking tokenizers library in Rust. This library, seamlessly integrated into the widely used transformers library, is the engine behind "Fast" tokenizers.

The results are astonishing. According to Hugging Face, its Rust-based tokenizers can process a gigabyte of text in under 20 seconds on a server CPU. This is not just incrementally faster; it's a quantum leap compared to pure-Python tokenizers, which can take far longer for the same task. For researchers and companies working with big data, that speed-up shortens data preprocessing, cuts computational costs, and accelerates development cycles.
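As a quick sanity check, the arithmetic below converts "under 20 seconds per gigabyte" into a minimum throughput and a projected time for a larger corpus. It assumes decimal units and linear scaling, and the 100 GB corpus and the helper names are hypothetical; real throughput depends on the tokenizer, the hardware, and settings such as parallelism.

```python
# Back-of-the-envelope arithmetic for the "under 20 seconds per GB" figure.
# Assumes decimal units (1 GB = 1000 MB) and linear scaling with corpus size.
SECONDS_PER_GB = 20.0  # upper bound quoted by Hugging Face


def throughput_mb_per_s(seconds_per_gb: float) -> float:
    """Minimum throughput in MB/s implied by a time-per-GB upper bound."""
    return 1000.0 / seconds_per_gb


def projected_minutes(corpus_gb: float, seconds_per_gb: float) -> float:
    """Upper-bound tokenization time in minutes for a corpus of the given size."""
    return corpus_gb * seconds_per_gb / 60.0


print(f"{throughput_mb_per_s(SECONDS_PER_GB):.0f} MB/s")               # 50 MB/s
print(f"{projected_minutes(100, SECONDS_PER_GB):.1f} min for 100 GB")  # 33.3 min for 100 GB
```

In other words, "under 20 seconds per gigabyte" means at least 50 MB of text per second, so even a 100 GB corpus would be tokenized in about half an hour under these assumptions.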

Why Rust Delivers Unprecedented Tokenization Speed: A Deep Dive

Rust's exceptional performance in tokenization isn't magic; it's rooted in concrete technical advantages:

Compiled Speed: Machine Code Advantage

Rust is a compiled language: its code is translated ahead of time into efficient machine code. CPython, the standard Python implementation, instead compiles source to bytecode and interprets that bytecode one instruction at a time at runtime, adding overhead to every operation. Because Rust code runs directly on the CPU as native instructions, it skips that interpretation layer, which makes tight loops like tokenization dramatically faster.

Memory Safety Without the Slowdown

Rust's innovative ownership model guarantees memory safety without relying on a garbage collector. CPython, by contrast, manages memory with reference counting backed by a cyclic garbage collector, which adds bookkeeping to every object and can cause occasional pauses. Rust frees memory deterministically when a value's owner goes out of scope, keeping memory use efficient and predictable, especially when handling massive text datasets.

Efficient Multithreading

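Rust has first-class support for concurrency, and its type system rules out data races at compile time. "Fast" tokenizers leverage this to distribute tokenization work across multiple CPU cores, significantly boosting throughput for large batches of text - crucial for preprocessing data for large language models. Pure-Python code has a harder time doing the same, because CPython's global interpreter lock (GIL) prevents threads from executing Python bytecode in parallel.

Seamless Python Integration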

Despite being written in Rust, tokenizers integrate seamlessly with Python through PyO3, a Rust library for creating Python bindings. This means developers can call Rust-based tokenizers from their existing Python NLP pipelines without significant modifications.

Cross-Platform Compatibility

Rust's portability allows tokenizers to run efficiently across different platforms, including Linux, macOS, and Windows. The ability to compile to WebAssembly (WASM) further extends its usability in browser-based NLP applications.
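Two of the points above, compiled versus interpreted execution and batch parallelism, can be illustrated in plain Python. The sketch below does not use Rust or the tokenizers library: a C-implemented built-in (str.split()) stands in for "compiled code", and a standard-library thread pool shows the batch-parallel pattern. The function names are hypothetical, and timings will vary by machine, although the interpreted loop is usually noticeably slower than the built-in.

```python
# Plain-Python sketch of two ideas from the section above. Neither Rust nor
# the tokenizers library is used: a C-implemented built-in stands in for
# "compiled code", and a thread pool shows the batch-parallel pattern.
import time
from concurrent.futures import ThreadPoolExecutor


def split_interpreted(text: str) -> list[str]:
    """Whitespace splitting written as an interpreted Python loop."""
    tokens: list[str] = []
    current: list[str] = []
    for ch in text:
        if ch.isspace():
            if current:
                tokens.append("".join(current))
                current = []
        else:
            current.append(ch)
    if current:
        tokens.append("".join(current))
    return tokens


def split_compiled(text: str) -> list[str]:
    """Same result via str.split(), which CPython implements in C."""
    return text.split()


def encode_batch(texts: list[str], workers: int = 4) -> list[list[str]]:
    """Tokenize a batch across a thread pool; map() keeps input order.

    Under CPython's GIL these threads cannot run Python bytecode in
    parallel, so this shows the pattern rather than a guaranteed speedup.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(split_compiled, texts))


corpus = "fast tokenizers split text quickly " * 20_000
for fn in (split_interpreted, split_compiled):
    start = time.perf_counter()
    fn(corpus)
    print(f"{fn.__name__}: {time.perf_counter() - start:.4f} s")

print(encode_batch(["hello world", "fast tokenizers", "rust inside"]))
# [['hello', 'world'], ['fast', 'tokenizers'], ['rust', 'inside']]
```

In a native implementation, the per-text work can run outside the Python interpreter, so the same split-map-reassemble pattern can actually keep several cores busy; the Python version above only shows the shape of the computation.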

Benchmarks That Speak Volumes: A 43x Speed Increase

The performance gains are not just theoretical, although exact numbers vary with the model, hardware, and workload. Hugging Face's figure of under 20 seconds per gigabyte describes absolute throughput; head-to-head comparisons against Python-based tokenizers show how large the relative gain can be.

One study reported a 43x speed increase for "Fast" tokenizers compared to Python-based versions on a subset of the SQuAD 2.0 dataset. That's not just faster; it's a complete transformation of processing speed, turning hours of work into minutes, and minutes into seconds.
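To put a 43x ratio in concrete terms, the arithmetic below converts a few slow-tokenizer run times into their equivalents at that speedup. It assumes the ratio holds uniformly across workloads, which real benchmarks rarely guarantee; the helper name is hypothetical.

```python
# What a 43x speedup means for wall-clock time, assuming it holds uniformly.
SPEEDUP = 43


def fast_seconds(slow_seconds: float, speedup: float = SPEEDUP) -> float:
    """Time the faster tokenizer would need for a job that takes slow_seconds."""
    return slow_seconds / speedup


for label, slow in [("1 hour", 3600), ("10 minutes", 600), ("1 minute", 60)]:
    print(f"{label} -> {fast_seconds(slow):.1f} s")
# 1 hour -> 83.7 s
# 10 minutes -> 14.0 s
# 1 minute -> 1.4 s
```

So an hour-long slow run shrinks to under a minute and a half, and a one-minute run to under two seconds.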

Beyond Speed: Essential Features for Modern NLP

"Fast" tokenizers offer more than just raw speed. They are packed with features crucial for advanced NLP tasks:

- Alignment Tracking (Offset Mapping): "Fast" tokenizers meticulously track the original text spans corresponding to each token. This offset mapping is vital for tasks like Named Entity Recognition (NER) and error analysis, providing a precise link between tokens and their source text.

- Versatile Tokenization Techniques: They seamlessly support state-of-the-art methods like WordPiece, Byte-Pair Encoding (BPE), and Unigram, adapting to diverse datasets and NLP tasks.

- Comprehensive Pre-processing: "Fast" tokenizers handle normalization, pre-tokenization, and post-processing, offering a complete and efficient text preparation pipeline.
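The three features above fit together as one pipeline. The sketch below is a toy, pure-Python version of it, assuming a tiny hand-written vocabulary and ASCII input: it lowercases the text (normalization), splits on whitespace while recording character spans (pre-tokenization), applies a greedy longest-match-first split in the style of WordPiece (the model), and wraps the result in BERT-style special tokens (post-processing), keeping each token's (start, end) span in the original string. The vocabulary and function names are hypothetical; this is not the tokenizers library's implementation or API.

```python
# Toy tokenization pipeline with offset tracking (pure Python, ASCII input).
# VOCAB and all function names are hypothetical illustrations.
import re

VOCAB = {"[CLS]", "[SEP]", "[UNK]", "hug", "##ging", "face",
         "token", "##izers", "are", "fast"}

Span = tuple[str, int, int]  # (token, start, end) in the original text


def normalize(text: str) -> str:
    # Lowercasing preserves character positions for ASCII input only;
    # a production tokenizer must track offsets through normalization.
    return text.lower()


def pre_tokenize(text: str) -> list[Span]:
    # Split on whitespace, recording each word's character span.
    return [(m.group(), m.start(), m.end()) for m in re.finditer(r"\S+", text)]


def wordpiece(word: str, start: int) -> list[Span]:
    # Greedy longest-match-first subword split in the WordPiece style;
    # "##" marks a piece that continues the current word.
    pieces: list[Span] = []
    pos = 0
    while pos < len(word):
        end = len(word)
        while end > pos:
            piece = word[pos:end] if pos == 0 else "##" + word[pos:end]
            if piece in VOCAB:
                break
            end -= 1
        if end == pos:  # nothing matched: the whole word becomes [UNK]
            return [("[UNK]", start, start + len(word))]
        pieces.append((piece, start + pos, start + end))
        pos = end
    return pieces


def post_process(spans: list[Span]) -> list[Span]:
    # Special tokens cover no original text, so they get the span (0, 0).
    return [("[CLS]", 0, 0)] + spans + [("[SEP]", 0, 0)]


def encode(text: str) -> tuple[list[str], list[tuple[int, int]]]:
    spans: list[Span] = []
    for word, start, _ in pre_tokenize(normalize(text)):
        spans.extend(wordpiece(word, start))
    spans = post_process(spans)
    return [tok for tok, _, _ in spans], [(s, e) for _, s, e in spans]


text = "Hugging Face tokenizers are fast"
tokens, offsets = encode(text)
for tok, (s, e) in zip(tokens, offsets):
    print(f"{tok:8} {(s, e)} {text[s:e]!r}")
```

Because every token keeps its span, a label predicted for the token "face" can be projected back onto characters 8 to 12 (end-exclusive) of the original string, "Face". That token-to-text link is what NER and error analysis rely on.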

Conclusion: Rust and "Fast Tokenizers" - The Future of NLP is Here

"Fast" tokenizers, powered by Rust, represent a fundamental shift in NLP. They offer a potent combination of blazing speed, robust memory safety, and advanced features, making them indispensable for modern NLP tasks, especially in the age of large language models and real-time applications.

Rust is not just improving tokenization; it's potentially revolutionizing the entire NLP landscape. As NLP continues to evolve, expect Rust's influence to expand, driving innovation and scalability far beyond tokenization, shaping the future of how we interact with language through machines.

Have you experienced the transformative speed of "Fast" tokenizers? How are they changing your NLP workflows? Share your thoughts and experiences in the comments!

Further Reading:

- Hugging Face Blog: "Introducing the Tokenizers Library" - A detailed announcement of the library's features.

