Data Accessing, Gathering, and Framework Approaches in Data Science


Introduction

Data is at the core of every data science project. The ability to efficiently gather, access, and clean data is crucial for extracting meaningful insights. This article explores the fundamental approaches to data gathering, accessing techniques, and frameworks for handling data efficiently.

Data Gathering

Before analyzing data, we must first acquire it. Data can be gathered from various sources, including:

1. CSV Files

CSV (Comma-Separated Values) files are one of the most common formats for storing structured data. They are easy to use and can be read using libraries like Pandas in Python.

import pandas as pd

data = pd.read_csv("data.csv")
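As a minimal sketch of what reading a CSV can look like in practice, the example below parses a date column and fixes a column type at load time. The column names and the inline CSV text are hypothetical stand-ins for a real file such as data.csv.

```python
import io

import pandas as pd

# Hypothetical CSV content standing in for data.csv.
csv_text = """order_id,order_date,amount
1,2024-01-05,19.99
2,2024-01-06,
3,2024-01-08,42.50
"""

data = pd.read_csv(
    io.StringIO(csv_text),       # a file path such as "data.csv" works the same way
    parse_dates=["order_date"],  # parse this column as datetime while reading
    dtype={"order_id": "int64"},
)

print(data.dtypes)
print(data)
```

The empty amount in the second row is read as a missing value (NaN), which is why the column ends up as a float type.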

2. APIs (Application Programming Interfaces)

APIs allow users to access real-time or static data from external sources, such as social media platforms, financial markets, and weather services.

import requests

response = requests.get("https://api.example.com/data", timeout=10)

response.raise_for_status()  # raise an error on 4xx/5xx responses

data = response.json()
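API responses are often nested JSON rather than flat tables. The sketch below shows one way to flatten such a response into a DataFrame with pandas. The payload is a hypothetical example of what response.json() might return, not the output of a real service.

```python
import pandas as pd

# Hypothetical payload, shaped like what response.json() might return.
payload = [
    {"id": 1, "user": {"name": "Ada", "country": "UK"}, "score": 9.5},
    {"id": 2, "user": {"name": "Linus", "country": "FI"}, "score": 8.0},
]

# Nested keys become dotted column names such as "user.name".
data = pd.json_normalize(payload)
print(data.columns.tolist())
```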

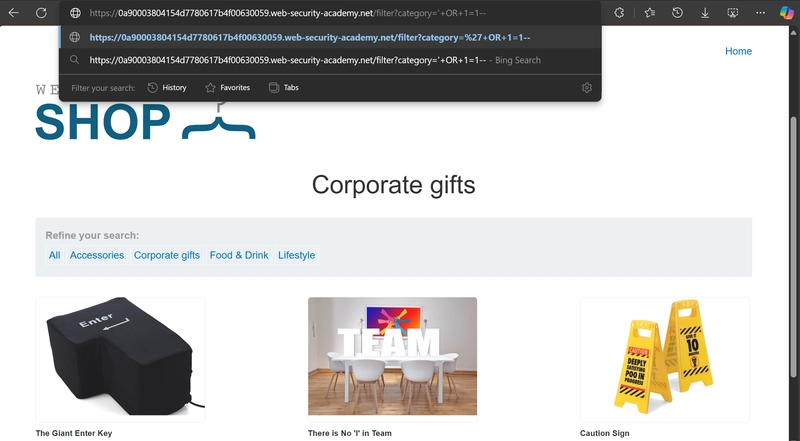

3. Web Scraping

When data is not available through an API, web scraping can be used to extract information from web pages using tools like BeautifulSoup or Scrapy.

from bs4 import BeautifulSoup

import requests

url = "https://example.com"

response = requests.get(url, timeout=10)

response.raise_for_status()  # stop early if the page could not be fetched

soup = BeautifulSoup(response.text, "html.parser")

print(soup.title.text)

4. Databases

Data is often stored in databases like MySQL, PostgreSQL, or MongoDB. SQL queries are used to extract data from relational databases.

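import mysql.connector

conn = mysql.connector.connect(host="localhost", user="user", password="password", database="test")

cursor = conn.cursor()

cursor.execute("SELECT * FROM table_name")  # table_name is a placeholder

data = cursor.fetchall()

cursor.close()

conn.close()  # release the connection once the rows are fetched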
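When a query depends on user input or a variable, a parameterized query is usually safer than building the SQL string by hand. The sketch below uses Python's built-in sqlite3 module in place of MySQL so that it runs without a database server. The measurements table and its rows are hypothetical. MySQL Connector follows the same pattern but uses %s as the placeholder instead of ?.

```python
import sqlite3

# In-memory SQLite database with a hypothetical "measurements" table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE measurements (city TEXT, temperature REAL)")
conn.executemany(
    "INSERT INTO measurements VALUES (?, ?)",
    [("Oslo", 4.5), ("Cairo", 29.0), ("Oslo", 6.0)],
)
conn.commit()

# The ? placeholder passes the value separately from the SQL text,
# which avoids SQL injection from string formatting.
cursor = conn.execute(
    "SELECT city, temperature FROM measurements WHERE city = ? ORDER BY temperature",
    ("Oslo",),
)
rows = cursor.fetchall()
conn.close()

print(rows)  # [('Oslo', 4.5), ('Oslo', 6.0)]
```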

Data Accessing

After gathering data, the next step is to explore and understand it. Proper access and assessment of data ensure better cleaning and processing.

1. Understanding Data Structure

Knowing the data structure helps in identifying anomalies and missing values.

data.info()

data.describe()

data.head()

2. Handling Missing Data

Data often contains missing values that need to be handled properly.

# Checking for missing values

data.isnull().sum()

# Forward-filling missing values (fillna(method='ffill') is deprecated in pandas 2.1+)

data = data.ffill()
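Forward filling is only one option. Which strategy fits depends on the data. The sketch below compares three common choices on a small, hypothetical DataFrame: dropping incomplete rows, filling with the median, and forward filling.

```python
import pandas as pd

# Hypothetical readings with gaps (None becomes NaN in a float column).
data = pd.DataFrame({
    "sensor": ["a", "a", "b", "b"],
    "reading": [1.0, None, 3.0, None],
})

missing_per_column = data.isna().sum()

# Option 1: discard rows where the reading is missing.
dropped = data.dropna(subset=["reading"])

# Option 2: replace gaps with the column median.
median_filled = data["reading"].fillna(data["reading"].median())

# Option 3: carry the last valid value forward.
forward_filled = data["reading"].ffill()

print(missing_per_column)
print(median_filled.tolist())   # [1.0, 2.0, 3.0, 2.0]
print(forward_filled.tolist())  # [1.0, 1.0, 3.0, 3.0]
```

Forward filling tends to suit ordered data such as time series, while a median fill ignores row order entirely.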

3. Data Type Conversion

Ensuring correct data types is crucial for further analysis.

data['column_name'] = pd.to_datetime(data['column_name'])

data['numeric_column'] = pd.to_numeric(data['numeric_column'])
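Real columns often contain values that cannot be converted. As a sketch, the example below uses errors="coerce" so that unparseable entries become missing values instead of raising an exception. The column names and values are hypothetical.

```python
import pandas as pd

# Hypothetical raw data where every column was read as text.
data = pd.DataFrame({
    "signup_date": ["2024-01-05", "not a date", "2024-03-01"],
    "age": ["34", "unknown", "27"],
})

# errors="coerce" turns unparseable values into NaT / NaN instead of raising.
data["signup_date"] = pd.to_datetime(
    data["signup_date"], format="%Y-%m-%d", errors="coerce"
)
data["age"] = pd.to_numeric(data["age"], errors="coerce")

print(data.dtypes)
print(data.isna().sum())
```

Counting the missing values after conversion shows how many entries failed to parse, which is worth checking before any analysis.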

Framework Approaches for Data Processing

A structured framework helps in organizing data efficiently. Here are common approaches:

1. ETL (Extract, Transform, Load)

ETL is a widely used framework in data engineering.

- Extract: Gather data from various sources.

- Transform: Clean and preprocess data.

- Load: Store data into a database or data warehouse.
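The three steps above can be sketched as three small functions. This is a minimal illustration using only the standard library, with an inline CSV string as the source and an in-memory SQLite database as the target. The payments table, the column names, and the sample rows are all hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical raw input standing in for a real source file.
RAW_CSV = """name,amount
alice,10.5
bob,
carol,7
"""


def extract(text):
    """Extract: read raw rows from CSV text."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: drop rows without an amount and normalize types."""
    return [
        (row["name"].title(), float(row["amount"]))
        for row in rows
        if row["amount"].strip()
    ]


def load(records, conn):
    """Load: write the cleaned records into a database table."""
    conn.execute("CREATE TABLE IF NOT EXISTS payments (name TEXT, amount REAL)")
    conn.executemany("INSERT INTO payments VALUES (?, ?)", records)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
result = conn.execute("SELECT name, amount FROM payments ORDER BY name").fetchall()
print(result)  # [('Alice', 10.5), ('Carol', 7.0)]
```

Keeping each stage in its own function makes it possible to test and rerun the stages independently.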

2. Data Cleaning Framework

Data cleaning is essential for accurate analysis. The key steps include:

- Identifying missing or inconsistent data.

- Handling outliers.

- Standardizing data formats.
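As a rough sketch of these steps in pandas, the example below counts missing values, flags outliers, and standardizes a text column. The 1.5 * IQR rule used here is one common outlier heuristic chosen for illustration, not the only option, and the data is hypothetical.

```python
import pandas as pd

# Hypothetical data with inconsistent city names and one extreme price.
data = pd.DataFrame({
    "city": [" oslo", "Oslo ", "CAIRO", "cairo", "Lima"],
    "price": [10.0, 12.0, 11.0, 13.0, 500.0],
})

# 1. Identify missing or inconsistent data.
missing_counts = data.isna().sum()

# 2. Flag outliers with the 1.5 * IQR rule.
q1, q3 = data["price"].quantile([0.25, 0.75])
iqr = q3 - q1
is_outlier = (data["price"] < q1 - 1.5 * iqr) | (data["price"] > q3 + 1.5 * iqr)

# 3. Standardize text formats: trim whitespace and unify capitalization.
data["city"] = data["city"].str.strip().str.title()

cleaned = data[~is_outlier]
print(cleaned)
```

Whether a flagged outlier should be removed, capped, or kept depends on the domain, so the flag is computed separately from the filtering step.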

3. Data Pipeline Automation

Frameworks like Apache Airflow automate data pipelines by scheduling each step and running the steps in a defined order.

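from datetime import datetime

from airflow import DAG
from airflow.operators.python import PythonOperator  # import path as in Airflow 2.x

def extract_data():
    # Code to extract data
    pass

def transform_data():
    # Code to transform data
    pass

def load_data():
    # Code to load data
    pass

# dag_id and start_date are illustrative values.
with DAG(dag_id="etl_pipeline", start_date=datetime(2024, 1, 1), catchup=False) as dag:
    extract = PythonOperator(task_id="extract", python_callable=extract_data)
    transform = PythonOperator(task_id="transform", python_callable=transform_data)
    load = PythonOperator(task_id="load", python_callable=load_data)

    # Run the steps in order: extract, then transform, then load.
    extract >> transform >> load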

Conclusion

Efficient data gathering, access, and processing frameworks are critical in data science. Whether you are collecting data from APIs, scraping the web, or structuring ETL pipelines, these techniques help keep data quality and reliability high. Understanding them helps data scientists make better decisions and build more effective machine learning models.
