CI is not CD

The terms ‘continuous integration’ and ‘continuous delivery’ are used so often together that it’s easy to forget how they differ.

CI/CD: this pair of two-letter initialisms is used so widely that many people don’t fully understand what each one means. They miss the importance of the individual parts, why they differ and how their strengths complement each other.

What Is Continuous Integration?

The CI in CI/CD stands for continuous integration: the practice of continuously merging code into the main branch in source control. Kent Beck wrote about the practice in his book on Extreme Programming (XP), Extreme Programming Explained.

The concept is simple: After a couple of hours of work, you commit your changes to the main branch. For this to work well, it must be supported by some additional practices. In XP, test-driven development, pair programming, and continual refactoring are crucial supporting practices for continuous integration:

- Test-driven development gives you high confidence that changes haven’t accidentally changed system behavior.

- Pair programming halves the potential for merge conflicts and accelerates code review.

- Refactoring breaks the system into small objects and methods, which makes conflicts less likely.

When you commit a change, you want to know if there’s a problem as quickly as possible. A fast automated test suite gives you high confidence your changes work as expected and reduces the surface area for problem-solving when something goes wrong.

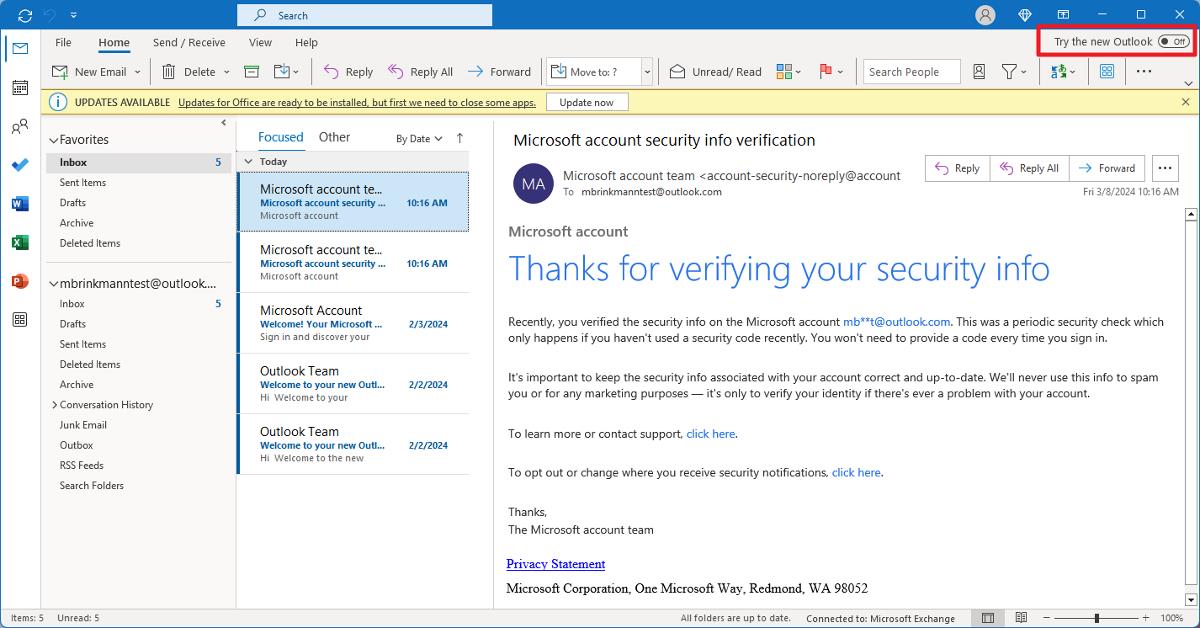

Before great CI tools were available in the marketplace, you could achieve this process manually. Teams used a shared physical object, such as a build hat or merge hammer, to ensure only one team member integrated code at a time. You’d take the object from its place on a visible shelf and follow a simple checklist: get the latest main branch changes into your local copy, build the code, run the tests and commit the new version if everything was green. If there was a problem, you’d resolve it and repeat the process.
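The manual checklist can be sketched in a few lines of Python. This is only an illustration: `build_token`, `integrate` and `make_step` are hypothetical names, the lock stands in for the physical build hat, and the steps are stubs rather than real source control or build commands.

```python
import threading
from typing import Callable, List

# Hypothetical stand-in for the build hat: only one holder at a time.
build_token = threading.Lock()

# Records which checklist steps actually ran (illustration only).
log: List[str] = []


def make_step(name: str, ok: bool = True) -> Callable[[], bool]:
    """Create a stub checklist step that reports green (True) or red (False)."""
    def step() -> bool:
        log.append(name)
        return ok
    return step


def integrate(steps: List[Callable[[], bool]]) -> bool:
    """Run the checklist in order while holding the token.

    Stops at the first red step, so nothing after it (such as the
    commit) runs until the problem is fixed and the process repeated.
    """
    with build_token:
        for step in steps:
            if not step():
                return False
        return True
```

Calling `integrate([make_step("pull"), make_step("build"), make_step("test"), make_step("commit")])` runs all four steps. If the test step is red, the commit step never runs.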

The key point is that continuous integration is a human-guided process, no matter which tools you use to support it. This process is the most effective way to handle the trade-off between individual productivity and the need to scale software development using teams of people working on shared code.

What Is Continuous Delivery?

The CD in CI/CD stands for continuous delivery, a software delivery method built on the principle that software should be deployable at all times. Dave Farley and Jez Humble detailed this approach in their book Continuous Delivery: Reliable Software Releases Through Build, Test and Deployment Automation. Inspired partly by XP, continuous delivery provides a concrete set of technical practices that help you achieve always-deployable software.

Your deployment pipeline doesn’t need to satisfy the same trade-offs found in continuous integration, partly because the CI process has already handled the collaboration around changes. It’s entirely possible to have a fully automated pipeline that asks for human intervention only when there’s a problem. Taiichi Ohno, founder of the Toyota Production System, which inspired Lean Manufacturing, called this autonomation, meaning “automation with a human touch.”

Once you have a good software version, you must prevent changes in your artifacts and processes as you progress them through your environments. Applying the same artifacts and processes ensures that both have been tested together multiple times before you deploy the code to production. Failing to lock them together could result in using a new deployment process against a software version it’s not compatible with or using the process for a production deployment before it has been tested with preproduction deployments.
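One way to picture this locking is a release record that pins the artifact and the deployment process together, plus a rule that a release can only reach an environment after the identical pair has been through the previous one. The sketch below is an assumption-laden illustration: `Release`, `Pipeline`, `ENVIRONMENTS` and the field names are hypothetical and not taken from any particular CD tool.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Hypothetical environment order; real pipelines will differ.
ENVIRONMENTS = ["dev", "test", "production"]


@dataclass(frozen=True)
class Release:
    """An immutable pairing of an artifact and the process that deploys it."""
    artifact_digest: str
    process_version: int


@dataclass
class Pipeline:
    # Maps environment name -> releases deployed there, oldest first.
    history: Dict[str, List[Release]] = field(default_factory=dict)

    def deploy(self, release: Release, env: str) -> None:
        """Deploy only if this exact release already ran in the prior environment."""
        index = ENVIRONMENTS.index(env)
        if index > 0:
            previous = ENVIRONMENTS[index - 1]
            if release not in self.history.get(previous, []):
                raise ValueError(
                    f"{release} has not been deployed to {previous} yet"
                )
        self.history.setdefault(env, []).append(release)
```

Because `Release` is frozen and compared by value, changing either the artifact or the process version produces a different release, which has to start again from the first environment.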

Farley and Humble dedicate a whole chapter of their book on continuous delivery to the continuous integration process and another to deployment pipelines. Although we often treat the two as a single unit, each subject is important to understand on its own.

Two Halves with Different Drivers

The continuous delivery method includes continuous integration and automated deployment pipelines. It is these two concepts we are really talking about when we say “CI/CD.” The CI process is developer-centric and driven by source code, whereas deployments are a broader collaboration driven by artifacts and environments.

Many teams are increasingly treating CI as CD, and it’s giving them headaches. When you attempt to make your build server aware of infrastructure, environments and configuration, things can get painful. This is essentially a step backward, because the result looks more like the scripted deployments of the past than a modern deployment pipeline.

Your build process involves steps to get the latest changes, build the software, run some tests and produce a finished artifact. Any problems during the build process invalidate the artifact and prevent the build from completing. The build process is complete once you have lodged an artifact in a repository or rejected the software version.
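The fail-fast nature of a build can be sketched as follows. This is a minimal illustration, not how any specific build server works: `run_build`, the step tuples, the artifact naming and the dictionary used as a repository are all hypothetical.

```python
from typing import Callable, Dict, List, Optional, Tuple


def run_build(
    version: str,
    steps: List[Tuple[str, Callable[[], None]]],
    repository: Dict[str, str],
) -> Optional[str]:
    """Run build steps in order; any failure rejects the version.

    Returns the artifact name when every step succeeds, or None when
    the version is rejected. Either outcome completes the build.
    """
    for name, step in steps:
        try:
            step()
        except Exception as error:
            # No retries here: a failed step invalidates the artifact.
            print(f"build {version} rejected at '{name}': {error}")
            return None
    artifact = f"app-{version}.zip"  # hypothetical artifact name
    repository[version] = artifact   # lodge the artifact in the repository
    return artifact
```

The build has exactly two outcomes: an artifact lodged in the repository or a rejected version.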

Conversely, your deployment process must contend with transient issues like expired tokens, network faults and deployment targets that aren’t active. You don’t want to fail your build because of these issues, and retrying deployments and deployment steps is a valid strategy. Additionally, it’s not easy to model your deployment process using build steps without excessive complexity.
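By contrast, a deployment step can reasonably be retried when the failure is transient. The sketch below assumes a hypothetical `TransientError` type to mark faults such as an expired token or a network blip. How a real tool classifies errors, and how long it waits between attempts, will vary.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for faults that are worth retrying."""


def deploy_with_retry(
    step: Callable[[], T],
    attempts: int = 3,
    delay_seconds: float = 0.0,
) -> T:
    """Run a deployment step, retrying only on transient errors.

    Any other exception propagates immediately, and the last
    transient error is re-raised once the attempts run out.
    """
    for attempt in range(1, attempts + 1):
        try:
            return step()
        except TransientError:
            if attempt == attempts:
                raise
            # Wait a little longer after each failed attempt.
            time.sleep(delay_seconds * attempt)
    raise ValueError("attempts must be at least 1")
```

Retrying a single step like this is awkward to express as build steps, which is part of why modelling deployments inside a build process tends to become complex.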

Beyond Development Teams

A crucial difference I’ve often observed is that CI and CD tools have different audiences. While developers are often active on both sides of CI/CD, CD tools are frequently used by a wider group of people.

For example, test analysts and support team members may use your CD tool to pull a specific software version into an environment to reproduce a bug. Your product manager may use the CD dashboard to see which software versions have been deployed to each environment, customer or location.

CD tools have a range of subtle features that make it easier to handle deployment scenarios. They have a way to manage environments and infrastructure. This mechanism applies the correct configuration for each deployment and provides a way to handle deployments at scale, such as managing tenant-specific infrastructure or deployments to different locations (such as retail stores, hospitals or cloud regions).

Alongside practical deployment features, CD tools also make the state of deployments visible to everyone who needs to know what software versions are where. This removes the need for people to ask for status updates, just as your task board handles work items. If you want to know your bank balance, you don’t want to phone your bank; you want to self-serve the answer instantly. The same is true for your deployments.
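As a rough illustration of that self-service view, the current state of each environment can be derived from a simple deployment log. The `Deployment` record and `current_versions` function are hypothetical, and a real CD tool would track far more detail, such as tenants, timestamps and who triggered the deployment.

```python
from typing import Dict, List, NamedTuple


class Deployment(NamedTuple):
    environment: str
    version: str


def current_versions(deployments: List[Deployment]) -> Dict[str, str]:
    """Return the latest version per environment from an ordered log."""
    state: Dict[str, str] = {}
    for deployment in deployments:
        # Later entries overwrite earlier ones for the same environment.
        state[deployment.environment] = deployment.version
    return state
```

Anyone who needs to know what is running where can read this view without asking the team for a status update.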

CI and CD Are Better When Decoupled

The slash in the middle of “CI/CD” is one of nature’s rare decoupling opportunities. There are few examples of such joyfully loose coupling. Your build server has created an artifact. Its job is done.

While you are unlikely to differentiate your offering through your build process (though you may through the technical practices that support it), you can certainly add tremendous value through your deployment pipelines. There is a competitive advantage to using reliable, repeatable deployments.

I originally published this article on The New Stack.
