Case Study: Deploying a Python AI Application with Ollama and FastAPI

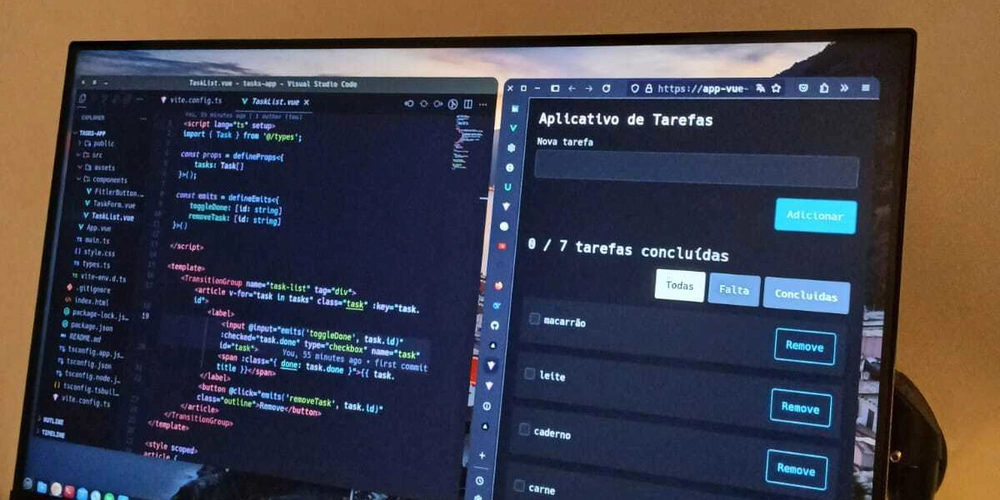

An AI project built locally, perhaps using powerful tools like Ollama and FastAPI, eventually needs deployment to a server for wider access or reliable 24/7 operation. This document details a common process for deploying such a Python-based AI application onto a Linux server.

The steps cover connecting to the server, setting up the environment, managing AI models, running the application manually for testing, and configuring it to run reliably as a background service using systemd. While the steps are based on deploying an application using FastAPI and Ollama, many illustrate standard deployment practices applicable to various Python web applications.

Table of Contents

- Connecting to the Server via SSH
  - Generating SSH Keys
  - Connecting
- Cloning the Project Repository
- Environment Setup
  - 1. Installing uv (Optional but Recommended)
  - 2. Installing Python
  - 3. Installing Software Dependencies
    - Project Dependencies
    - Installing and Running Ollama
  - 4. Preparing the AI Models
    - Uploading/Downloading Models
    - Building Models with Ollama
  - 5. Setting Up Environment Variables
  - 6. Running the Project Manually (Testing)
- Deploying as a Service with systemd
  - Creating the Service File
  - Enabling and Starting the Service
- Frequently Encountered Issues (FAQ)
  - Environment Configuration Issues
  - Runtime Issues
- Conclusion

Connecting to the Server via SSH

Secure Shell (SSH) is the standard method for securely connecting to and managing remote Linux servers. This is typically the first step in the deployment process.

Generating SSH Keys

If you don't already have an SSH key pair on your local machine, you'll need to generate one: a private key (kept secure locally) and a public key (shared with the server).

Use the ssh-keygen command on your local machine. Following the prompts is usually sufficient. Common algorithms are ED25519 (recommended) or RSA.

# Example using ED25519

ssh-keygen -t ed25519 -C "your_email@example.com"

# Follow the prompts to save the key (default location is usually fine) and optionally set a passphrase.

- Reference: GitHub Docs: Generating a new SSH key

On macOS and Linux, keys are typically stored in the ~/.ssh directory. Use ls -al ~/.ssh to check:

- `id_ed25519` or `id_rsa`: The private key. Never share this file.

- `id_ed25519.pub` or `id_rsa.pub`: The public key. Copy the contents of this file to provide to the server administrator or add it yourself if you have access.

The server administrator (or you) must add the contents of your public key file (id_xxx.pub) to the ~/.ssh/authorized_keys file within the target user's home directory on the server.

Connecting

Once your public key is authorized on the server, you can establish a connection using your private key:

ssh -i /path/to/your/private_key your_server_username@your_server_hostname_or_ip -p <port>

Replace the placeholders:

- `/path/to/your/private_key`: The path to your private key file (e.g., `~/.ssh/id_ed25519`).

- `your_server_username`: Your username on the remote server.

- `your_server_hostname_or_ip`: The server's IP address or resolvable hostname.

- `<port>`: The SSH port number configured on the server (default is 22, but often changed for security).

Note: Omit the angle brackets (<>) when replacing placeholders like `<port>`.

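On the first connection to a new server, you'll likely see a host authenticity warning. This is expected. If the server administrator has given you the host key fingerprint, compare it with the one shown, then type yes to proceed.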

The authenticity of host '...' can't be established.

... key fingerprint is ...

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Cloning the Project Repository

With a successful SSH connection, get your project code onto the server using Git:

git clone your_repository_link

# Example: git clone git@github.com:your_username/your_project.git

# Or: git clone https://github.com/your_username/your_project.git

Replace your_repository_link with the actual SSH or HTTPS URL of your Git repository. Navigate into the cloned directory: cd your_project_directory_name.

Environment Setup

Set up the necessary software and environment on the server.

1. Installing uv (Optional but Recommended)

uv is a Python package installer and resolver written in Rust. It usually installs dependencies considerably faster than standard pip. It is optional; the steps below also show the pip equivalents.

# Using pip to install uv (ensure pip is available)

pip install uv

# Alternatively, follow official instructions: https://github.com/astral-sh/uv#installation

Verify the installation:

uv --version

# Expected output similar to: uv x.y.z

2. Installing Python

Ensure a compatible Python version (e.g., 3.8-3.11, check your project's needs) is installed.

If you installed uv, you can use it to install Python:

# Replace 3.x.y with your target version (e.g., 3.10.13)

uv python install 3.x.y

# Follow any prompts regarding PATH updates.

Alternatively, use the system's package manager (common on Linux servers):

# Example for Debian/Ubuntu

sudo apt update

sudo apt install python3 python3-pip python3-venv

# Example for CentOS/RHEL

sudo yum update

sudo yum install python3 python3-pip

Confirm the Python installation:

python3 --version

# Or sometimes just 'python --version' depending on PATH setup
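The version requirement can also be checked from Python itself, which is handy at the top of a deployment script. The following is a minimal sketch; the 3.8 to 3.11 bounds are only the example range mentioned above and are an assumption about your project, so adjust them as needed.

```python
import sys
from typing import Tuple

# Assumed supported range, copied from the example in the text; adjust per project.
MIN_VERSION = (3, 8)
MAX_VERSION = (3, 11)


def is_supported(version: Tuple[int, int]) -> bool:
    """Return True if (major, minor) lies inside the supported range, inclusive."""
    return MIN_VERSION <= version <= MAX_VERSION


# Report on the interpreter that is running this script.
current = sys.version_info[:2]
status = "supported" if is_supported(current) else "outside the supported range"
print(f"Python {current[0]}.{current[1]} is {status}")
```

Run it with the same interpreter the service will use (for example the one inside the virtual environment), since that is the version that matters.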

- Reference: Installing Python with uv

3. Installing Software Dependencies

Project Dependencies

Your project should have a requirements.txt file listing Python dependencies. Navigate to your project directory and install them.

Using uv (recommended if installed):

cd /path/to/your/project

uv pip install -r requirements.txt

Alternatively, using pip (usually within a virtual environment):

cd /path/to/your/project

# Best practice: Create and activate a virtual environment first

# python3 -m venv venv

# source venv/bin/activate

pip install -r requirements.txt

# Or pip3 install -r requirements.txt

Note: Replace /path/to/your/project with the actual absolute path to your cloned repository.

Installing and Running Ollama

Ollama allows running large language models locally. Install it on the Linux server using the official script:

curl -fsSL https://ollama.com/install.sh | sh

For initial testing, you can start the Ollama server manually in the background:

ollama serve & # The '&' runs it in the background for the current session only.

Note: For production, Ollama should be set up as a systemd service (the installation script often does this automatically, check with systemctl status ollama). Running ollama serve & is primarily for temporary testing.

Verify Ollama is running and accessible (this command talks to the running server):

ollama list

# Should show an empty list or any models already pulled/created.

# If it hangs or errors, the server isn't running correctly.
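The same check can be made from Python, which is closer to what the application itself will do. This sketch assumes Ollama's default address `http://127.0.0.1:11434` and uses its `/api/tags` endpoint, which returns the locally available models; the function name `list_ollama_models` is hypothetical.

```python
import json
import urllib.error
import urllib.request


def list_ollama_models(base_url: str = "http://127.0.0.1:11434", timeout: float = 5.0):
    """Return the model names reported by Ollama, or None if the server is unreachable."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
            payload = json.load(resp)
    except (urllib.error.URLError, OSError, ValueError):
        # Connection refused, timeout, or a non-JSON reply: treat as "not running".
        return None
    return [model.get("name") for model in payload.get("models", [])]
```

Calling `list_ollama_models()` on the server should return an empty list on a fresh install and `None` when the server is not running.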

- Reference: Ollama Linux Installation Guide

4. Preparing the AI Models

Make the required AI model files available on the server. Choose a suitable location, perhaps a models/ subdirectory within your project or a central /opt/ai-models/ directory.

Uploading/Downloading Models

- From Hugging Face: Use `huggingface-cli` (install with `pip install huggingface_hub`):

huggingface-cli download repo_id path/to/model.gguf --local-dir /path/on/server/models --local-dir-use-symlinks False

# Replace repo_id, model filename, and /path/on/server/models

- From Local Machine: Use `scp` (Secure Copy) to upload models from your development machine. Replace placeholders.

# Upload a single model file

scp /path/on/local/model.gguf your_server_username@your_server_hostname_or_ip:/path/on/server/models/

# Upload an Ollama Modelfile

scp /path/on/local/Modelfile your_server_username@your_server_hostname_or_ip:/path/on/server/models/

# Upload an entire directory recursively

scp -r /path/on/local/model_directory your_server_username@your_server_hostname_or_ip:/path/on/server/models/

- GGUF Merging: If a model is split (e.g., `model-part-1.gguf`, `model-part-2.gguf`), you might need `llama.cpp` tools to merge them. Clone `llama.cpp`, build it, and use `llama-gguf-split`:

# Assuming llama.cpp tools are built and in PATH

# Pass only the first split file as input; llama-gguf-split finds the remaining parts from it

# llama-gguf-split --merge first_split_file.gguf output_merged.gguf
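Model files are large, so an interrupted or corrupted transfer is worth ruling out before building the model. One way to do that, sketched below, is to compute a SHA-256 checksum on both machines and compare the two values; the helper name `sha256_of` is hypothetical.

```python
import hashlib
from pathlib import Path


def sha256_of(path, chunk_size: int = 1024 * 1024) -> str:
    """Hash a file in chunks so multi-gigabyte models never have to fit in memory."""
    digest = hashlib.sha256()
    with Path(path).open("rb") as handle:
        # iter() with a sentinel keeps reading until read() returns empty bytes.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()
```

Run `sha256_of("/path/on/local/model.gguf")` locally and `sha256_of("/path/on/server/models/model.gguf")` on the server; the file arrived intact if the two hex strings match.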

Building Models with Ollama

To integrate local model files (like .gguf) with Ollama or define custom model parameters, use a Modelfile. Create this text file (e.g., MyModelModelfile) in the same directory as the base model file (.gguf) on the server.

Example Modelfile content:

# Use FROM with a relative path to the model file in the same directory

FROM ./local_model_filename.gguf

# (Optional) Define a prompt template

TEMPLATE """[INST] {{ .Prompt }} [/INST]"""

# (Optional) Set parameters

PARAMETER temperature 0.7

PARAMETER top_k 40

# Add other parameters as needed (stop sequences, etc.)

Build and register the model with Ollama using a chosen name:

# Ensure Ollama server is running

# Navigate to the directory containing the Modelfile and the .gguf file

cd /path/on/server/models/

# Create the model - use a descriptive name

ollama create your_custom_model_name -f MyModelModelfile

Verify the model is available: ollama list.
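Once the model appears in the list, a quick end-to-end check is to send it a prompt over Ollama's HTTP API, which is also how a FastAPI application would typically talk to it. The sketch below uses the `/api/generate` endpoint with streaming turned off; the default address and the model name `your_custom_model_name` are placeholders carried over from the steps above, and the function name `generate` is hypothetical.

```python
import json
import urllib.request


def generate(prompt: str, model: str = "your_custom_model_name",
             base_url: str = "http://127.0.0.1:11434", timeout: float = 120.0) -> str:
    """Send one non-streaming prompt to Ollama and return the generated text."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # With "stream": False the server replies with a single JSON object.
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.load(resp)["response"]
```

The generous timeout is deliberate: the first request after a restart includes the time Ollama needs to load the model into memory.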

5. Setting Up Environment Variables

Applications often require configuration like API keys or database URLs, best managed via environment variables. A common method is using a .env file in the project's root directory. Never commit your .env file to Git. Add .env to your .gitignore file.

Create a file named .env in your project root (/path/to/your/project/.env) with content like this (replace placeholder values):

# Example .env file content

# Framework secret key (generate a strong random key)

SECRET_KEY=your_strong_random_secret_key

# API keys for external services

EXTERNAL_API_KEY=your_external_api_key_value

# Ollama configuration if your app needs to explicitly connect

OLLAMA_HOST=http://127.0.0.1:11434

# Other configuration variables

DATABASE_URL=your_database_connection_string

Your Python application code needs to load these variables, often using a library like python-dotenv (pip install python-dotenv).
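To make the mechanism concrete, here is a simplified, standard-library-only stand-in for what a `.env` loader does. It is a sketch, not the python-dotenv implementation: it handles only plain `KEY=value` lines, and the function name `load_env_file` is hypothetical. In a real project, calling `load_dotenv()` from python-dotenv at startup is the usual choice.

```python
import os
from pathlib import Path


def load_env_file(path=".env", override: bool = False) -> dict:
    """Parse simple KEY=value lines and copy them into os.environ.

    Variables already set in the environment win unless override=True.
    Returns every key/value pair found in the file.
    """
    loaded = {}
    env_path = Path(path)
    if not env_path.is_file():
        return loaded
    for raw in env_path.read_text(encoding="utf-8").splitlines():
        line = raw.strip()
        # Skip blanks, whole-line comments, and anything that is not an assignment.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        key, value = key.strip(), value.strip().strip("'\"")
        if override or key not in os.environ:
            os.environ[key] = value
        loaded[key] = value
    return loaded
```

Letting existing variables take precedence means a value set by systemd or the shell is not silently replaced by the file.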

6. Running the Project Manually (Testing)

Before setting up the service, run the application manually from the terminal to ensure it starts and functions correctly.

- Ensure Ollama is Running: Use `ollama list` or `systemctl status ollama` (if installed as a service) to verify.

- Activate Environment (if using venv):

source /path/to/your/project/venv/bin/activate

- Start Application: Navigate to the project root directory. Use an ASGI server like `uvicorn` for FastAPI. Replace placeholders.

cd /path/to/your/project

# Example command using uvicorn

# assumes your FastAPI app instance is named 'app' in 'main.py'

# use the port your app is configured to listen on

uvicorn main:app --host 0.0.0.0 --port <port> --reload

- `--host 0.0.0.0`: Makes the app accessible from other machines on the network (ensure firewall rules permit traffic on `<port>`).

- `--port <port>`: The port the application will listen on (e.g., 8000).

- `--reload`: **Use only for testing.** Enables auto-reloading on code changes. **Remove this flag for production deployment.**

- Test:

  - API Endpoints: Use tools like `curl`, Postman, or Insomnia to send requests (e.g., `curl http://your_server_hostname_or_ip:<port>/api/some_endpoint`).

  - Web UI: Access any web interface via a browser at `http://your_server_hostname_or_ip:<port>`.

  - Logs: Check the terminal output for errors.
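The manual checks above can be scripted as a small smoke test that is easy to rerun after every deployment. This is a sketch using only the standard library; the function name `smoke_test` is hypothetical, and the URL you pass is whatever endpoint your application exposes. FastAPI serves interactive documentation at `/docs` by default, which makes a convenient target if the application has not disabled it.

```python
import urllib.error
import urllib.request


def smoke_test(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status code of a GET request, or 0 if no response arrived."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as exc:
        # The server answered, but with an error status such as 404 or 500.
        return exc.code
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure, and similar.
        return 0
```

A result of 200 means the application answered; 0 usually points to a firewall rule, a wrong port, or a process that is not running.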

Deploying as a Service with systemd

Running applications manually in the terminal isn't suitable for production. systemd is the standard Linux service manager used to:

- Start the application automatically on server boot.

- Restart the application automatically if it crashes.

- Manage the application as a background process with proper logging.

Creating the Service File

Create a service definition file using a text editor with sudo privileges. Use a descriptive name (e.g., your-app-name.service).

sudo nano /etc/systemd/system/your-app-name.service

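Paste the following template into the file. Carefully read the comments and replace ALL placeholders. Keep every comment on its own line: systemd only treats `#` as a comment marker at the start of a line, so any text placed after a value becomes part of that value.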

[Unit]

# A descriptive name for your service

Description=My Python AI Application Service

After=network.target

# If your app strictly requires Ollama to be running first (and Ollama is a systemd service), uncomment:

# Wants=ollama.service

# After=network.target ollama.service

[Service]

# !!! SECURITY BEST PRACTICE: DO NOT RUN AS ROOT !!!

# Create a dedicated, non-root user for your application.

# Replace 'your_app_user' with the actual username. Ensure this user has permissions

# to read project files and write to necessary directories (e.g., logs, uploads).

User=your_app_user

# Group=your_app_group # Often the same as the user, uncomment if needed

# Set the working directory to your project's root absolute path (replace this placeholder)

WorkingDirectory=/path/to/your/project

# Command to start the application. Use the ABSOLUTE PATH to the executable (uvicorn, gunicorn, etc.).

# Find the path: 'which uvicorn' (as your_app_user, possibly after activating venv)

# Or it might be like: /path/to/your/project/venv/bin/uvicorn OR /home/your_app_user/.local/bin/uvicorn

# Adjust 'main:app', host, port, and other args. REMOVE --reload!

# Replace the executable path, the app reference, and <port>, then check the remaining arguments.

ExecStart=/path/to/executable/uvicorn main:app --host 0.0.0.0 --port <port> --forwarded-allow-ips='*'

# Restart policy: restart whenever the process exits, waiting 5 seconds first

Restart=always

RestartSec=5

# Logging: Redirect stdout/stderr to systemd journal

StandardOutput=journal

StandardError=journal

# Optional: Load environment variables from the .env file

# Ensure the path is absolute (replace the placeholder). Permissions must allow 'your_app_user' to read it.

# EnvironmentFile=/path/to/your/project/.env

# Note: Variables here might override system-wide or user-specific environment settings.

[Install]

# Start the service during normal system boot

WantedBy=multi-user.target

Crucial Placeholders and Settings to Verify:

- Description: A clear name.

- User/Group: Must be changed to a dedicated, non-root user (`your_app_user`). Ensure file/directory permissions are correct for this user.

- WorkingDirectory: The absolute path to your project's root.

- ExecStart:

  - The absolute path to the `uvicorn` (or other WSGI/ASGI server) executable. If using a virtual environment, it's typically inside `venv/bin/`.

  - `main:app` must match your Python file and FastAPI/Flask app instance.

  - `--host`, `--port`, and other arguments must be correct for production. Remove `--reload`.

  - `--forwarded-allow-ips='*'` may be needed if behind a reverse proxy (like Nginx or Apache). Adjust as needed for security.

- (Optional) EnvironmentFile: If used, the absolute path to your `.env` file.

Save the file and exit the editor (Ctrl+X, then Y, then Enter in nano).
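Before reloading systemd, it can help to scan the unit file for one easy mistake: a comment written after a value on the same line. systemd ignores lines that start with `#` or `;`, but it does not strip trailing comments, so `RestartSec=5 # wait` is read as the value `5 # wait`. The checker below is a heuristic sketch (a `#` preceded by whitespace after `Key=`), and the function name `find_trailing_comments` is hypothetical.

```python
import re

# Matches a directive line of the form "Key=value <whitespace>#...".
_TRAILING_COMMENT = re.compile(r"^\s*[A-Za-z][A-Za-z0-9]*=.*\s#")


def find_trailing_comments(unit_text: str) -> list:
    """Return (line_number, line) pairs where a value is followed by ' #...'."""
    hits = []
    for number, line in enumerate(unit_text.splitlines(), start=1):
        stripped = line.strip()
        if stripped.startswith(("#", ";")):
            continue  # whole-line comments are valid in unit files
        if _TRAILING_COMMENT.match(line):
            hits.append((number, stripped))
    return hits
```

For example, `find_trailing_comments(open("/etc/systemd/system/your-app-name.service").read())` returns an empty list for a clean file. A legitimate value that contains ` #` would be flagged too, so treat the output as a prompt to look, not as a verdict.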

Enabling and Starting the Service

Manage the new service using systemctl:

- Reload `systemd` configuration: To make it aware of the new file.

sudo systemctl daemon-reload

- Enable the service: To make it start automatically on boot. Use the same filename you created.

sudo systemctl enable your-app-name.service

- Start the service: To run it immediately.

sudo systemctl start your-app-name.service

- Check the status: To verify it's running.

sudo systemctl status your-app-name.service

Look for `active (running)`. If it says `failed` or isn't active, check the logs.

- View logs: To see application output and troubleshoot errors.

sudo journalctl -u your-app-name.service -f

- `-u your-app-name.service`: Filters logs for your specific service.

- `-f`: Follow the logs in real-time (like `tail -f`). Press `Ctrl+C` to stop following.

- To see older logs, remove `-f`: `sudo journalctl -u your-app-name.service --since "1 hour ago"`

Your AI application should now be running as a managed background service.

Frequently Encountered Issues (FAQ)

Common problems during setup or runtime.

Environment Configuration Issues

- Slow SSH Connection/Typing:

  - Cause: High network latency.

  - Solution: Enable SSH compression with the `-C` flag:

ssh -C your_server_username@your_server_hostname_or_ip ...

Runtime Issues

- Missing Environment Variables: Errors like `KeyError: 'SECRET_KEY'` or `ValueError: Required setting X not found`.

  - Cause: The application needs environment variables that aren't defined in its running context.

  - Solution: Ensure required variables are set either:

    - In the `.env` file, and the application uses `python-dotenv` to load it.

    - Or listed in the `systemd` service file using the `Environment="VAR_NAME=value"` directive (less common for many variables) or the `EnvironmentFile=` directive pointing to your `.env` file. Verify the `systemd` service user has permission to read the `EnvironmentFile`. Reload (`daemon-reload`) and restart the service after changes.
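A bare `KeyError` deep inside a request handler is harder to diagnose than a clear failure at startup. One option, sketched here with hypothetical names (`require_env`, `MissingSettings`), is to check all required variables once when the application starts and report every missing name in a single message that then shows up in the service logs.

```python
import os


class MissingSettings(RuntimeError):
    """Raised when one or more required environment variables are unset or empty."""


def require_env(*names: str) -> dict:
    """Return the named variables, or raise one error listing every missing name."""
    missing = [name for name in names if not os.environ.get(name)]
    if missing:
        raise MissingSettings("Missing environment variables: " + ", ".join(missing))
    return {name: os.environ[name] for name in names}
```

Calling something like `settings = require_env("SECRET_KEY", "OLLAMA_HOST")` near the top of `main.py` makes the service fail immediately with a readable message in `journalctl` instead of failing on the first request.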

- Ollama Runtime Error: `Error: llama runner process has terminated: exit status X`.

  - Cause: Varies. Could be Ollama bugs, insufficient RAM/VRAM for the model, model file corruption, or incompatibility.

  - Solution:

    - Check Ollama's logs: `journalctl -u ollama.service` (if running as systemd service).

    - Search the Ollama GitHub Issues for the specific error message or exit status.

    - Ensure the server meets the resource requirements for the model.

    - Try a different (perhaps smaller) model to isolate the issue.

    - Consider trying a different Ollama version (downgrade or upgrade), checking compatibility notes.

- Ollama Slow/No Response/Long Inference Time:

  - Cause 1: Network proxies interfering.

  - Solution 1: If you are running Ollama manually or configuring its environment, try unsetting proxy variables: `unset http_proxy https_proxy NO_PROXY`. For the `systemd` service, proxies might need to be configured/disabled system-wide or within the service's environment settings.

  - Cause 2: Insufficient hardware resources (CPU, RAM, VRAM if using GPU).

  - Solution 2: Verify model requirements vs. server specs. Monitor resource usage (`htop`, `nvidia-smi` if GPU). Use smaller models or upgrade hardware if needed.

- `ollama serve` Fails: Port Already in Use: Error like `listen tcp 127.0.0.1:11434: bind: address already in use`.

  - Cause: Another process (likely another Ollama instance or your app if misconfigured) is using port 11434.

  - Solution: Stop the conflicting process.

    - If Ollama is a service: `sudo systemctl stop ollama`.

    - Find and kill manually:

sudo lsof -i :11434 # Find the PID using the port

sudo kill <PID> # Replace <PID> with the process ID found

# Or more forcefully (use with caution):

# sudo pkill ollama

# sudo killall ollama

    - After stopping the conflict, try starting your service/Ollama again.
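A quick way to confirm whether the port is actually occupied, before and after stopping the suspected process, is to attempt a TCP connection to it. The following sketch uses only the standard library; the function name `port_in_use` is hypothetical, and 11434 is the default Ollama port mentioned above.

```python
import socket


def port_in_use(port: int, host: str = "127.0.0.1", timeout: float = 1.0) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        # connect_ex returns 0 on success and an errno value otherwise.
        return sock.connect_ex((host, port)) == 0
```

`port_in_use(11434)` returning `True` while `systemctl status ollama` shows the service as inactive suggests a manually started `ollama serve` is still running.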

Conclusion

Deploying a Python AI application involves careful environment setup, dependency management, model handling, and robust process management. A solid foundation is provided by tools like uv for speed, Ollama for local model serving, FastAPI for the API, and systemd for reliable service operation. Remember to prioritize security by using non-root users and managing secrets appropriately through environment variables. By following these steps and adapting them to your specific project needs, you can successfully deploy your application for continuous operation.
