Build an Instant HR Policy Q&A Bot with Chainlit


HR teams are crucial, but they often get bogged down answering the same policy questions repeatedly. "What's our remote work policy?" "How do expense reports work?" "Details on parental leave?" Providing instant, accurate answers frees up HR for more strategic tasks and empowers employees with 24/7 access to information.

This post shows how to build an AI-powered Q&A bot using Chainlit and LangChain. Employees can ask questions in plain English, and the bot will answer based solely on your company's official HR documents, even citing the sources! We'll pre-load the documents so HR doesn't need to upload anything during the chat.

Chainlit is an open-source Python framework that suits this well. It turns your AI/LLM code into a shareable web application with a chat interface, a view of the intermediate steps the AI takes to reach an answer (Chainlit calls these Steps), and integrations with libraries such as LangChain.

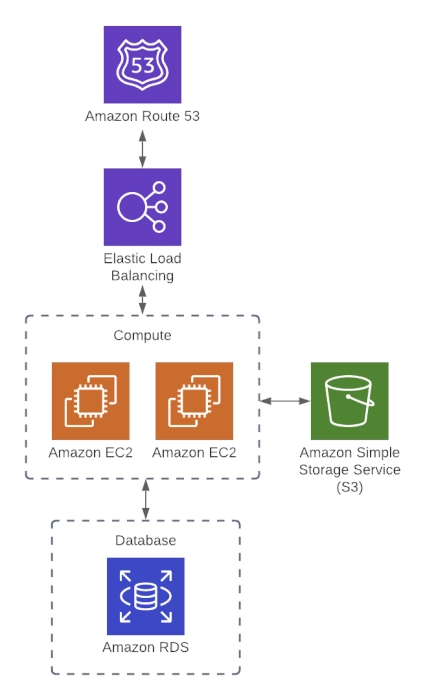

The Plan: Pre-Loaded Knowledge with RAG

Instead of uploading documents live, we'll use Retrieval-Augmented Generation (RAG) with a pre-built knowledge base:

- (One-time/Offline Prep): Load your HR documents (PDFs, TXT files).

- (One-time/Offline Prep): Split them into smaller text chunks.

- (One-time/Offline Prep): Convert these chunks into numerical representations (embeddings).

- (One-time/Offline Prep): Store these embeddings in a persistent vector database (we'll use ChromaDB saved to disk).

- (In the Chainlit App): When a chat session starts, load the pre-built vector database. Then, each time a user asks a question:

  - Find the most relevant document chunks related to the question.

  - Send the question and the relevant chunks to an LLM (like GPT-4o-mini).

  - The LLM generates an answer using only the provided document chunks as context.

  - Chainlit displays the answer and the source chunks.
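To make the retrieval idea concrete, here is a toy, dependency-free sketch of the "find relevant chunks, then send them with the question" flow. It is an illustration under a loud assumption: simple word counts stand in for real embeddings (the actual bot uses OpenAI embeddings and ChromaDB). The helper names and sample policy sentences are made up. It ranks chunks by cosine similarity and then "stuffs" the best matches into a single prompt.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list:
    # Lowercase and keep runs of letters/digits.
    return re.findall(r"[a-z0-9]+", text.lower())


def vectorize(text: str) -> Counter:
    # Toy stand-in for an embedding model (assumption): bag-of-words counts.
    return Counter(tokenize(text))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, chunks: list, k: int = 2) -> list:
    # Rank every chunk by similarity to the question and keep the top k.
    q = vectorize(question)
    return sorted(chunks, key=lambda c: cosine(q, vectorize(c)), reverse=True)[:k]


def build_prompt(question: str, context: list) -> str:
    # "Stuff" the retrieved chunks into one prompt for the LLM.
    joined = "\n\n".join(context)
    return (
        "Answer the question using only the context below. "
        "If the answer is not in the context, say you don't know.\n\n"
        f"Context:\n{joined}\n\nQuestion: {question}"
    )


# Made-up sample chunks for illustration only.
chunks = [
    "Employees may work remotely up to three days per week with manager approval.",
    "Expense reports must be submitted within 30 days with itemized receipts.",
    "Parental leave provides 16 weeks of paid leave for primary caregivers.",
]
top = retrieve("How do I submit expense reports?", chunks, k=1)
print(build_prompt("How do I submit expense reports?", top))
```

Real embeddings capture meaning rather than exact word overlap, so they would also match "submitted" to "submit"; the ranking-and-stuffing structure stays the same.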

Prerequisites

- Python: Version 3.9+ installed.

- Chainlit: `pip install chainlit` (Installation Docs)

- Supporting Libraries: `pip install langchain langchain-openai langchain-community openai chromadb tiktoken pypdf python-dotenv`

  - `langchain`, `langchain-openai`, `langchain-community`, `openai`: For LLM interaction and RAG components (LangChain Docs). The document loaders and the Chroma integration used below are imported from `langchain_community`.

  - `chromadb`: For the local vector store (Chroma Docs).

  - `tiktoken`: OpenAI's tokenizer, used by LangChain's OpenAI integration to count tokens.

  - `pypdf`: To load PDF documents (PyPDF Docs).

  - `python-dotenv`: To load your API key from a `.env` file instead of hard-coding it.

- OpenAI API Key: Required for embeddings and the LLM. Get one from OpenAI and add it to your `.env` file as `OPENAI_API_KEY=...`.

- HR Policy Documents: Your official policy documents (e.g., `employee_handbook.pdf`, `benefits_guide.txt`) ready in a known location.
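Before writing any app code, it can help to confirm the environment. Below is a minimal sketch of a hypothetical `check_env.py` that uses only the standard library. It checks whether the packages this tutorial relies on can be imported and whether `OPENAI_API_KEY` is already set in the shell. The one assumption to note is the mapping from pip names to import names (for example, `python-dotenv` is imported as `dotenv`).

```python
import importlib.util
import os

# Hypothetical check_env.py: pip package name -> name used in `import`
REQUIRED_MODULES = {
    "chainlit": "chainlit",
    "langchain": "langchain",
    "langchain-openai": "langchain_openai",
    "langchain-community": "langchain_community",
    "openai": "openai",
    "chromadb": "chromadb",
    "tiktoken": "tiktoken",
    "pypdf": "pypdf",
    "python-dotenv": "dotenv",
}


def missing_packages(modules: dict) -> list:
    """Return the pip names whose import name cannot be found."""
    # find_spec returns None when a top-level module is not installed
    return [pip_name for pip_name, mod in modules.items()
            if importlib.util.find_spec(mod) is None]


if __name__ == "__main__":
    missing = missing_packages(REQUIRED_MODULES)
    print("Missing packages:", ", ".join(missing) if missing else "none")
    # Only checks the current environment; a key stored in .env is not loaded here
    if not os.environ.get("OPENAI_API_KEY"):
        print("OPENAI_API_KEY is not set in this shell (it may still be in .env).")
```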

Step 1: Prepare Your HR Documents

Gather your policy documents (PDF and TXT files are the easiest to start with). Create a folder named hr_docs in your project directory and place your documents inside it.

```text
hr_bot/
├── hr_docs/
│   ├── employee_handbook.pdf
│   └── expense_policy.txt
├── .env
├── build_vectordb.py   # We'll create this next
└── app.py              # The Chainlit app
```
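The build step is set up for PDF and TXT files, so anything else in hr_docs (a `.docx`, for example) is worth converting or moving out first. A quick, hypothetical helper like the one below (standard library only) lists what the folder contains and flags unsupported files. Matching extensions case-sensitively and ignoring hidden files are assumptions you may want to relax.

```python
from pathlib import Path

# Hypothetical helper: file types the build step is set up to load
SUPPORTED_SUFFIXES = {".pdf", ".txt"}


def scan_docs(docs_dir: str) -> tuple:
    """Split the files under docs_dir into (supported, skipped) lists of Paths."""
    root = Path(docs_dir)
    if not root.is_dir():
        raise FileNotFoundError(f"{docs_dir!r} is not a directory")
    supported, skipped = [], []
    for path in sorted(root.rglob("*")):
        if not path.is_file() or path.name.startswith("."):
            continue  # ignore folders and hidden files such as .DS_Store
        # Case-sensitive match, like a plain str.endswith(".pdf") check
        target = supported if path.suffix in SUPPORTED_SUFFIXES else skipped
        target.append(path)
    return supported, skipped


if __name__ == "__main__":
    if not Path("hr_docs").is_dir():
        print("hr_docs folder not found.")
    else:
        ok, skipped = scan_docs("hr_docs")
        print(f"{len(ok)} supported file(s)")
        for path in skipped:
            print(f"Unsupported, convert or remove: {path}")
```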

Step 2: Build the Knowledge Base (Vector Store)

This script needs to be run once initially, and then again whenever your HR policies are updated. Create a file named build_vectordb.py:

Imports and Loading:

First, import necessary libraries and load documents from your hr_docs folder.

```python
# build_vectordb.py
import shutil
import sys

from dotenv import load_dotenv
from langchain_community.document_loaders import DirectoryLoader, PyPDFLoader, TextLoader
from langchain_community.vectorstores import Chroma
from langchain_openai import OpenAIEmbeddings
from langchain_text_splitters import RecursiveCharacterTextSplitter

# Load the OpenAI API key (OPENAI_API_KEY) from .env
load_dotenv()

# Constants
DOCS_DIR = "hr_docs"            # Directory containing your HR documents
VECTOR_DB_DIR = "hr_vector_db"  # Directory where ChromaDB will store data

print(f"Loading documents from {DOCS_DIR}...")

# One loader per file type, so other files in the folder are not opened as text
pdf_loader = DirectoryLoader(
    DOCS_DIR,
    glob="**/*.pdf",
    loader_cls=PyPDFLoader,
    show_progress=True,
    use_multithreading=True,
)
txt_loader = DirectoryLoader(
    DOCS_DIR,
    glob="**/*.txt",
    loader_cls=TextLoader,
    loader_kwargs={"encoding": "utf-8"},
    show_progress=True,
    use_multithreading=True,
)
documents = pdf_loader.load() + txt_loader.load()

if not documents:
    print(f"No documents found. Please add PDF or TXT files to the '{DOCS_DIR}' directory.")
    sys.exit(1)

print(f"Loaded {len(documents)} documents.")
```

(Explanation: This part loads your OpenAI key from `.env` using python-dotenv, sets up constants for the directories, and uses LangChain's `DirectoryLoader` twice: once with `PyPDFLoader` for PDFs and once with `TextLoader` for TXT files. Restricting each glob to one extension keeps other files in the folder from being misread as plain text. Note that `PyPDFLoader` returns one document per PDF page, so the count includes each page separately.)

Splitting and Embedding:

Now, split the loaded documents into smaller chunks and store their embeddings in ChromaDB.

```python
# build_vectordb.py (continued)
text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=150)
docs = text_splitter.split_documents(documents)
print(f"Split documents into {len(docs)} chunks.")

# Chroma.from_documents adds to an existing collection, so remove any previous
# build first; otherwise every rebuild would duplicate the chunks.
# It's safest to stop the Chainlit app before rebuilding.
shutil.rmtree(VECTOR_DB_DIR, ignore_errors=True)

print("Creating embeddings and vector store...")
embeddings = OpenAIEmbeddings()

# Create the Chroma vector store and persist it to disk
vectordb = Chroma.from_documents(
    documents=docs,
    embedding=embeddings,
    persist_directory=VECTOR_DB_DIR,
)

print(f"Successfully created and persisted vector store in '{VECTOR_DB_DIR}'.")
print("Knowledge base build complete.")
```

(Explanation: `RecursiveCharacterTextSplitter` breaks the text into overlapping chunks. `OpenAIEmbeddings` prepares the embedding function. `Chroma.from_documents` does the heavy lifting: it creates embeddings for all chunks and saves them, along with the text, into the specified `persist_directory`. This directory now contains your knowledge base.)
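`RecursiveCharacterTextSplitter` tries a list of separators (by default paragraph breaks, then line breaks, then spaces) so that related text stays together. The simplified, hypothetical helper below ignores separators entirely and shows only what `chunk_size` and `chunk_overlap` mean: each chunk holds up to `chunk_size` characters and starts `chunk_size - chunk_overlap` characters after the previous one. It is not LangChain's algorithm.

```python
def split_with_overlap(text: str, chunk_size: int = 1000, chunk_overlap: int = 150) -> list:
    """Hypothetical fixed-window splitter: windows of chunk_size characters,
    each starting chunk_size - chunk_overlap characters after the previous."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be >= 0 and smaller than chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


print(split_with_overlap("abcdefghij", chunk_size=4, chunk_overlap=1))
```

With the values used in the build script (1000 and 150), a 2,000-character document would produce three chunks starting at characters 0, 850, and 1,700, and each pair of neighbors would share 150 characters.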

Run the Build Script:

Execute this script from your terminal in the hr_bot directory:

```bash
python build_vectordb.py
```

This will create the hr_vector_db folder containing your knowledge base. You only need to run this again if your HR documents change.
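To tell whether a rebuild is actually needed, one option is to fingerprint the contents of hr_docs and compare it with the fingerprint saved after the last build. This is a hypothetical add-on, not part of the original scripts, and the stamp file name `hr_vector_db.stamp` is an assumption. Keeping the stamp outside the hr_vector_db folder means it survives if you delete the vector store.

```python
import hashlib
from pathlib import Path

# Hypothetical helpers (not part of the original tutorial) for change detection.


def docs_fingerprint(docs_dir: str) -> str:
    """SHA-256 over every file's relative path and contents, so adding,
    removing, renaming, or editing a document changes the result."""
    root = Path(docs_dir)
    digest = hashlib.sha256()
    for path in sorted(p for p in root.rglob("*") if p.is_file()):
        name = path.relative_to(root).as_posix().encode("utf-8")
        data = path.read_bytes()
        for part in (name, data):
            # Length-prefix each part so different files can't yield the same byte stream
            digest.update(len(part).to_bytes(8, "big"))
            digest.update(part)
    return digest.hexdigest()


def needs_rebuild(docs_dir: str, stamp_file: str) -> bool:
    """True if no fingerprint was saved yet or the documents changed since."""
    stamp = Path(stamp_file)
    if not stamp.exists():
        return True
    return stamp.read_text().strip() != docs_fingerprint(docs_dir)


def write_stamp(docs_dir: str, stamp_file: str) -> None:
    """Save the current fingerprint; call this after a successful build."""
    Path(stamp_file).write_text(docs_fingerprint(docs_dir))


if __name__ == "__main__":
    if needs_rebuild("hr_docs", "hr_vector_db.stamp"):
        print("HR documents changed: run build_vectordb.py, then write_stamp().")
    else:
        print("Knowledge base is up to date.")
```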

Step 3: Set Up Your Chainlit Application File (app.py)

Now, create the actual Chainlit app file (app.py).

Imports and Initial Setup:

Import Chainlit, LangChain components, and load the environment variables.

```python
# app.py
import os

import chainlit as cl
from dotenv import load_dotenv
from langchain.chains import ConversationalRetrievalChain
from langchain.memory import ConversationBufferMemory
from langchain_community.vectorstores import Chroma
from langchain_openai import ChatOpenAI, OpenAIEmbeddings

# Load environment variables (including OPENAI_API_KEY) from .env
load_dotenv()

# Must match the directory used in build_vectordb.py
VECTOR_DB_DIR = "hr_vector_db"

# Chainlit decorators to define app logic
# ... (on_chat_start and on_message functions will go here) ...
```

(Explanation: Standard imports. We load the API key and define the location of our pre-built vector store.)

on_chat_start Function:

This function runs when a user starts a new chat. It loads the existing vector store and sets up the conversational chain.

```python
# app.py (continued)
@cl.on_chat_start
async def on_chat_start():
    # Fail early with a clear message if the knowledge base hasn't been built yet
    if not os.path.isdir(VECTOR_DB_DIR):
        await cl.Message(
            content="The HR knowledge base was not found. Please run build_vectordb.py first."
        ).send()
        return

    # Load the existing vector store (same embedding model as the build step)
    embeddings = OpenAIEmbeddings()
    vectordb = Chroma(persist_directory=VECTOR_DB_DIR, embedding_function=embeddings)

    # Conversation memory lets follow-up questions use earlier turns
    memory = ConversationBufferMemory(
        memory_key="chat_history",
        output_key="answer",  # the chain returns both "answer" and "source_documents"
        return_messages=True,
    )

    # Create the Conversational Retrieval Chain
    chain = ConversationalRetrievalChain.from_llm(
        ChatOpenAI(model="gpt-4o-mini", temperature=0, streaming=True),
        chain_type="stuff",
        retriever=vectordb.as_retriever(),  # Use the loaded vector store
        memory=memory,
        return_source_documents=True,
    )

    await cl.Message(content="HR Policy Bot is ready. Ask me anything about our policies!").send()

    # Store the chain in the user session
    cl.user_session.set("chain", chain)
```

(Explanation: Instead of asking for a file, we directly load the Chroma database from the `hr_vector_db` directory using `Chroma(persist_directory=..., embedding_function=...)`. If that directory is missing, the bot says so instead of searching an empty store. The rest is similar: set up memory and the `ConversationalRetrievalChain`, but using the loaded retriever. We then send a welcome message and store the chain.) See also: the Chainlit docs for `@cl.on_chat_start` and `cl.user_session`, and the LangChain docs for Memory and `ConversationalRetrievalChain`.
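The memory matters because of how `ConversationalRetrievalChain` handles follow-ups: when there is chat history, it first asks the LLM to rewrite the new question as a standalone question, then retrieves chunks for that rewritten question. The sketch below, with hypothetical helper names, builds such a rewrite prompt. Its wording is modeled loosely on LangChain's default condense-question prompt and is not copied from it; the sample conversation is made up.

```python
from typing import List, Optional, Tuple

# Hypothetical helpers illustrating the "condense question" step;
# the prompt wording is an approximation, not LangChain's exact template.
Turn = Tuple[str, str]  # (user question, bot answer)


def format_history(turns: List[Turn]) -> str:
    """Render past turns as plain text for the prompt."""
    return "\n".join(f"Human: {q}\nAssistant: {a}" for q, a in turns)


def condense_prompt(turns: List[Turn], follow_up: str) -> Optional[str]:
    """Return a prompt asking the LLM for a standalone question, or None when
    there is no history and the question can go to the retriever as-is."""
    if not turns:
        return None
    return (
        "Given the following conversation and a follow-up question, rephrase the "
        "follow-up question to be a standalone question.\n\n"
        f"Chat History:\n{format_history(turns)}\n"
        f"Follow-up Input: {follow_up}\n"
        "Standalone question:"
    )


history = [("What is our remote work policy?", "Employees may work remotely up to three days per week.")]
print(condense_prompt(history, "Does that apply to contractors?"))
```

On its own, "Does that apply to contractors?" gives the retriever almost nothing to match; a rewritten question such as "Does the remote work policy apply to contractors?" should retrieve the relevant policy chunks far more reliably.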

on_message Function:

This handles incoming user questions, queries the chain, and displays the results.

```python
# app.py (continued)
@cl.on_message
async def main(message: cl.Message):
    # Retrieve the chain from the user session
    chain = cl.user_session.get("chain")  # type: ConversationalRetrievalChain
    if chain is None:
        await cl.Message(content="The bot is not ready. Please check that the knowledge base has been built.").send()
        return

    cb = cl.AsyncLangchainCallbackHandler()  # For visualizing steps in the UI

    # Call the chain with the user's question; with ainvoke, callbacks go in the run config
    res = await chain.ainvoke({"question": message.content}, config={"callbacks": [cb]})
    answer = res["answer"]
    source_documents = res["source_documents"]

    # One Text element per source; display="side" opens it in a side panel
    # when its name is clicked in the message
    text_elements = [
        cl.Text(content=source_doc.page_content, name=f"Source {idx + 1}", display="side")
        for idx, source_doc in enumerate(source_documents)
    ]
    source_names = [text_el.name for text_el in text_elements]

    # Add source references to the answer message
    if source_names:
        answer += f"\n\nSources: {', '.join(source_names)}"
    else:
        answer += "\n\nNo relevant sources found in the policy documents."

    # Send the response
    await cl.Message(content=answer, elements=text_elements).send()
```

(Explanation: This code retrieves the chain and calls it with `ainvoke`, passing the user's question (`message.content`) under the chain's `question` key. The Chainlit callback handler `cb` goes in the run config (`config={"callbacks": [cb]}`), which is where `ainvoke` reads callbacks from, so the processing steps show up in the UI. The code then extracts the answer and sources, creates a clickable `cl.Text` element for each source, and appends references to these elements to the answer before sending it back.) See also: the Chainlit docs for `@cl.on_message`, `cl.Message`, the `cl.Text` element, and the LangChain callback handler.
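The source labels above are just numbers. Each chunk keeps its loader metadata: `PyPDFLoader` records the file path under `source` and a 0-based page index under `page`, and `TextLoader` records `source`. That makes more informative citations possible. Below is a minimal sketch of a hypothetical helper; you might use its return value as the `name` of each `cl.Text` element, with the numbered prefix keeping names distinct.

```python
from pathlib import PurePath


# Hypothetical helper: turns a chunk's metadata into a readable citation label.
def source_label(metadata: dict, index: int) -> str:
    parts = []
    if metadata.get("source"):
        parts.append(PurePath(metadata["source"]).name)  # drop the folder path
    page = metadata.get("page")
    if isinstance(page, int):
        parts.append(f"p. {page + 1}")  # PyPDFLoader stores 0-based page numbers
    suffix = f" ({', '.join(parts)})" if parts else ""
    return f"Source {index + 1}{suffix}"


print(source_label({"source": "hr_docs/employee_handbook.pdf", "page": 2}, 0))
```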

Step 4: Run and Test Your HR Bot

- Ensure Knowledge Base Exists: Make sure you have run `python build_vectordb.py` at least once and the `hr_vector_db` directory exists.

- Run Chainlit: In your terminal (in the `hr_bot` folder), run `chainlit run app.py -w`. The `-w` flag reloads the app whenever you save changes to the code.

- Chat: Open `http://localhost:8000` in your browser. The bot should greet you. Ask questions related to your HR documents (e.g., "What is the process for submitting expenses?" or "How many sick days do we get?").

Next Steps & Improvements

- Authentication: Secure your internal bot using Chainlit's authentication features (Auth Overview).

- Deployment: Deploy the app for wider internal access (Deployment Docs).

- Better UI: Customize the look using logos, themes, and custom CSS (Customization Docs). Add helpful starter questions (Starters Concept).

- Data & Feedback: Use Chainlit's data persistence (Data Persistence Overview) with a tool like Literal AI to log conversations, collect feedback, and understand where the bot needs improvement.

- Advanced RAG: Explore different LangChain retrievers (e.g., `MultiQueryRetriever`, `ParentDocumentRetriever`) or text splitters for potentially better accuracy on complex questions or documents.
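As one example, `MultiQueryRetriever` asks the LLM for several rewordings of the user's question, retrieves documents for each, and returns their unique union. The sketch below shows only that merging step. The `retrieve` function and the hand-written query variants are hypothetical stand-ins for the vector store search and the LLM-generated rewordings, and plain strings stand in for LangChain documents.

```python
from typing import Callable, Iterable, List


# Hypothetical sketch of the "unique union" step of multi-query retrieval.
def unique_union(queries: Iterable[str], retrieve: Callable[[str], List[str]]) -> List[str]:
    """Retrieve chunks for each query variant and merge them, dropping
    duplicates while keeping first-seen order."""
    seen = set()
    merged = []
    for query in queries:
        for chunk in retrieve(query):
            if chunk not in seen:
                seen.add(chunk)
                merged.append(chunk)
    return merged


# Made-up index: each query variant maps to the chunks it would retrieve.
fake_index = {
    "How much parental leave do we get?": ["leave-policy-1", "leave-policy-2"],
    "What is the parental leave policy?": ["leave-policy-2", "benefits-3"],
}
print(unique_union(fake_index, lambda q: fake_index[q]))
```

Because different wordings surface different chunks, the merged list can cover a policy more completely than a single search, at the cost of extra LLM and retrieval calls per question.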

You now have a robust, document-grounded HR Q&A bot built with Chainlit, ready to save time and empower your employees!
