6 Types of AI Workloads, Challenges and Critical Best Practices


What Are AI Workloads?

AI workloads are tasks performed by artificial intelligence systems, which typically involve processing large amounts of data and performing complex computations. Examples of AI workloads are data preparation and pre-processing, traditional machine learning models, deep learning models, natural language processing (NLP), generative AI, and computer vision.

Unlike traditional computing tasks, AI workloads demand high levels of computational power and efficiency to handle the iterative processes of learning and adaptation in AI algorithms. These tasks vary widely depending on the application, from simple predictive analytics models to large language models with hundreds of billions of parameters.

AI workloads often rely on specialized hardware and software environments optimized for parallel processing and high-speed data analytics. Managing these workloads involves considerations around data handling, computational resources, and algorithm optimization to achieve desired outcomes.

Types of AI Workloads

Here are some of the main workloads associated with AI.

- Data Processing Workloads

Data processing workloads in AI involve handling, cleaning, and preparing data for further analysis or model training. This step is crucial as the quality and format of the data directly impact the performance of AI models.

These workloads are characterized by tasks such as extracting data from various sources, transforming it into a consistent format, and loading it into a system where it can be accessed and used by AI algorithms (ETL processes). They may include more complex operations like feature extraction, where specific attributes of the data are identified and extracted as inputs.
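The extract, transform, load, and feature-extraction steps described above can be sketched in a few lines. This is a minimal illustration, not a production pipeline: the sensor data, the column names, and the 23 °C threshold feature are all hypothetical, and a plain dict stands in for the target data store.

```python
import csv
import io

# Hypothetical raw export; real pipelines would read from files, APIs, or databases.
RAW_CSV = """sensor_id,temperature,unit
a1,71.6,F
a2,,C
a3,25.0,C
"""

def extract(text):
    """Extract: parse raw CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: drop rows with missing readings and convert all values to Celsius."""
    cleaned = []
    for row in rows:
        if not row["temperature"]:
            continue  # cleaning step: skip incomplete records
        value = float(row["temperature"])
        if row["unit"] == "F":
            value = (value - 32.0) * 5.0 / 9.0
        cleaned.append({"sensor_id": row["sensor_id"], "temp_c": round(value, 2)})
    return cleaned

def load(rows, store):
    """Load: write cleaned rows into the target store (a dict stands in for a database)."""
    for row in rows:
        store[row["sensor_id"]] = row
    return store

def extract_features(rows):
    """Feature extraction: turn each record into a numeric input vector.
    The second feature (reading above 23 C) is an arbitrary illustrative choice."""
    return [[row["temp_c"], 1.0 if row["temp_c"] > 23.0 else 0.0] for row in rows]

store = load(transform(extract(RAW_CSV)), {})
features = extract_features(list(store.values()))
print(features)
```

Keeping each stage as a separate function makes it easier to test the cleaning rules independently of where the data comes from or where it ends up.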

- Machine Learning Workloads

These workloads cover the development, training, and deployment of algorithms capable of learning from and making predictions on data. They require iterative processing over large datasets to adjust model parameters and improve accuracy.

The training phase is particularly resource-intensive, often necessitating parallel computing environments and specialized hardware like GPUs or TPUs to speed up computations.

Once trained, these models are deployed to perform inference tasks—making predictions based on new data inputs.
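To make the training and inference phases concrete, here is a small sketch that fits a one-variable linear model with gradient descent and then uses it for prediction. The data, learning rate, and epoch count are illustrative assumptions; real workloads use far larger datasets and typically rely on a library rather than a hand-written loop.

```python
def train(xs, ys, lr=0.05, epochs=2000):
    """Training: repeatedly adjust parameters w and b to reduce mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum((w * x + b - y) * x for x, y in zip(xs, ys)) * 2 / n
        grad_b = sum((w * x + b - y) for x, y in zip(xs, ys)) * 2 / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def predict(model, x):
    """Inference: apply the learned parameters to a new input."""
    w, b = model
    return w * x + b

# Hypothetical training data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

model = train(xs, ys)
print(model, predict(model, 10.0))
```

The loop in `train` is the iterative, compute-heavy part; `predict` is a single cheap evaluation, which is why training and inference are usually provisioned differently.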

- Deep Learning Workloads

Deep learning workloads focus on training and deploying deep neural networks. Deep learning is a subset of machine learning whose models are loosely inspired by the structure of the human brain. These networks are characterized by their depth: multiple layers of artificial neurons process input data through a hierarchy of increasing complexity and abstraction.

Deep learning is particularly effective for tasks involving image recognition, speech recognition, and natural language processing, but requires substantial computational resources to manage the vast amounts of data and complex model architectures. High-performance GPUs or other specialized hardware accelerators are often needed to perform parallel computations.
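The idea of stacked layers can be illustrated with a tiny forward pass. This sketch assumes a two-layer fully connected network with hand-picked weights and a ReLU activation; in a real workload the weights are learned during training and the layers contain millions of parameters.

```python
def relu(values):
    """Activation: pass positive values through, clamp negatives to zero."""
    return [max(0.0, v) for v in values]

def dense(weights, biases, inputs):
    """One fully connected layer: a weighted sum of the inputs plus a bias per neuron."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]

# Hypothetical hand-picked parameters: (weights, biases) for each layer.
LAYERS = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),  # hidden layer, 2 neurons
    ([[1.0, 1.0]], [-0.5]),                   # output layer, 1 neuron
]

def forward(inputs):
    """Feed the input through each layer in turn; ReLU follows every layer but the last."""
    x = inputs
    for index, (weights, biases) in enumerate(LAYERS):
        x = dense(weights, biases, x)
        if index < len(LAYERS) - 1:
            x = relu(x)
    return x

print(forward([2.0, 1.0]))
print(forward([1.0, 2.0]))
```

Each `dense` call is a matrix-vector product, which is the kind of operation GPUs and other accelerators execute in parallel.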

- Natural Language Processing (NLP)

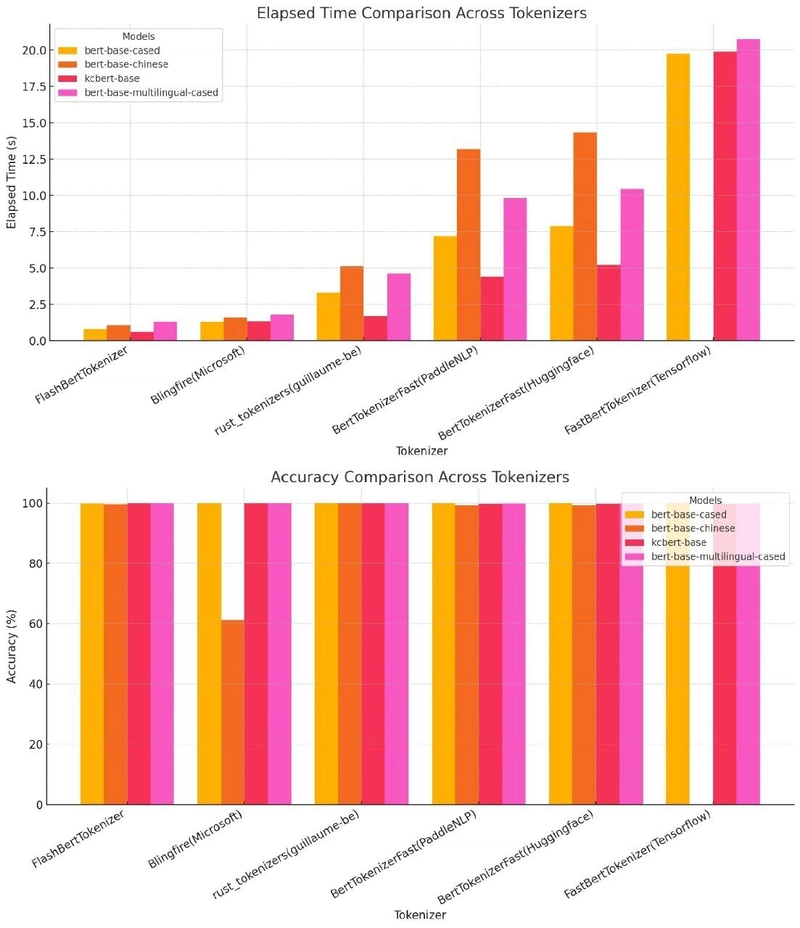

NLP workloads involve algorithms that enable machines to understand, interpret, and generate human language. This includes tasks like sentiment analysis, language translation, and speech recognition.

NLP systems require the ability to process and analyze large volumes of text data, understanding context, grammar, and semantics to accurately interpret or produce human-like responses. To effectively manage NLP workloads, it’s crucial to have computational resources capable of handling complex linguistic models and the nuances of human language.
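As a rough illustration of why context matters in a task like sentiment analysis, the sketch below scores text with a tiny word list and flips the polarity of a word that directly follows a negation. The word lists and the one-word negation rule are assumptions made for this example; production NLP systems use learned models rather than hand-written lexicons.

```python
import re

# Hypothetical miniature lexicons, for illustration only.
POSITIVE = {"good", "great", "love"}
NEGATIVE = {"bad", "poor", "hate"}
NEGATIONS = {"not", "never"}

def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())

def sentiment(text):
    """Sum word polarities; a negation flips only the token immediately after it."""
    score = 0
    negate = False
    for token in tokenize(text):
        if token in NEGATIONS:
            negate = True
            continue
        polarity = (token in POSITIVE) - (token in NEGATIVE)
        if polarity:
            score += -polarity if negate else polarity
        negate = False
    return score

print(sentiment("I love this great phone"))
print(sentiment("This is not good"))
```

Without the negation rule, "not good" would score as positive, which is a small example of the contextual nuance that larger language models have to capture.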

- Generative AI

Generative AI workloads involve creating new content, such as text, images, and videos, using advanced machine learning models. Large Language Models (LLMs) generate human-like text by repeatedly predicting the next token (roughly, the next word or word fragment) in a sequence based on the input provided. These models are trained on vast datasets and can produce coherent, contextually relevant text, making them useful for applications like chatbots, content creation, and automated reporting.
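Next-word prediction can be shown at toy scale with a bigram model, which counts which word follows which and always emits the most frequent follower. This is a deliberately simplified stand-in: LLMs use neural networks over much longer contexts, and the corpus here is made up.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count, for every word, how often each other word follows it."""
    counts = defaultdict(Counter)
    words = text.split()
    for previous, following in zip(words, words[1:]):
        counts[previous][following] += 1
    return counts

def generate(counts, start, max_words=5):
    """Greedy generation: repeatedly append the most frequent follower of the last word."""
    output = [start]
    for _ in range(max_words - 1):
        followers = counts.get(output[-1])
        if not followers:
            break  # the last word never appeared with a follower in the corpus
        output.append(followers.most_common(1)[0][0])
    return " ".join(output)

# Hypothetical training corpus.
corpus = "the cat sat on the mat and the cat sat on the rug"
model = train_bigram(corpus)
print(generate(model, "the"))
```

The generate loop has the same shape as LLM decoding: predict, append, repeat. The difference lies in how the prediction is made and how much context it conditions on.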

In addition to LLMs, diffusion models are a state-of-the-art method for generating high-quality images and videos. These models start from random noise and iteratively refine it into coherent visual content by reversing a diffusion process, that is, a process that gradually adds noise to training data. This approach is effective in generating detailed and diverse images and videos, useful in fields like entertainment, marketing, and virtual reality. The computational demands of training and running diffusion models are significant, often requiring extensive GPU resources and optimized data pipelines.
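The iterative refinement loop can be sketched in one dimension. The example below is a toy under strong assumptions: the "dataset" is a single number, so the ideal noise prediction has a closed form and stands in for a trained neural network; the noise schedule is arbitrary; and the update is a deterministic, DDIM-style step rather than any particular product's sampler.

```python
import math
import random

STEPS = 50
DATA_VALUE = 3.0  # hypothetical dataset: every training sample equals this value

# ALPHA_BAR[t] is the fraction of signal left after t noising steps (1.0 at t = 0).
ALPHA_BAR = [1.0]
for _ in range(STEPS):
    ALPHA_BAR.append(ALPHA_BAR[-1] * (1.0 - 0.02))

def predict_noise(x_t, t):
    """Stand-in for a trained network: the ideal noise estimate for a one-value dataset."""
    return (x_t - math.sqrt(ALPHA_BAR[t]) * DATA_VALUE) / math.sqrt(1.0 - ALPHA_BAR[t])

def sample(rng):
    """Reverse process: start from random noise and denoise step by step."""
    x = rng.gauss(0.0, 1.0)
    for t in range(STEPS, 0, -1):
        eps = predict_noise(x, t)
        # Estimate the clean sample implied by the current noisy value.
        x0_hat = (x - math.sqrt(1.0 - ALPHA_BAR[t]) * eps) / math.sqrt(ALPHA_BAR[t])
        # Move to the previous, less noisy step using that estimate.
        x = math.sqrt(ALPHA_BAR[t - 1]) * x0_hat + math.sqrt(1.0 - ALPHA_BAR[t - 1]) * eps
    return x

print(sample(random.Random(0)))
```

In a real image model, `predict_noise` is a large network evaluated once per step over every pixel, which is where most of the GPU cost of sampling comes from.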

- Computer Vision

Computer vision enables machines to interpret and make decisions based on visual data, mimicking human visual understanding. This field involves tasks such as image classification, object detection, and facial recognition. Modern computer vision algorithms are based on deep learning architectures, most notably Convolutional Neural Networks. Newer approaches to computer vision leverage transformers and multi-modal large language models.

Managing computer vision workloads requires powerful computational resources to process and analyze high volumes of image or video data, often in real time. This typically demands high-performance GPUs for intensive computations and optimized algorithms that can process visual information efficiently and accurately.
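The core operation inside a Convolutional Neural Network is sliding a small kernel over an image and computing a weighted sum at each position. The sketch below implements that operation in plain Python, without padding or stride, on a made-up 3x4 image with a hand-written vertical-edge kernel. In a trained network the kernel values are learned, and, as in most deep learning frameworks, the kernel is not flipped, so strictly speaking this is cross-correlation.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image and sum the element-wise products at each position."""
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kernel_h)
                for dj in range(kernel_w)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# Hypothetical grayscale image: dark on the left, bright on the right.
IMAGE = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-written kernel that responds to a dark-to-bright transition from left to right.
EDGE_KERNEL = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d(IMAGE, EDGE_KERNEL)
print(feature_map)
```

The output is non-zero only in the column where the brightness changes. Every output value is computed independently of the others, which is why this workload maps well onto GPUs.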

Benefits of AI Workloads

AI workloads offer several advantages to modern organizations:

Enhanced efficiency and automation: AI workloads can automate routine and repetitive tasks. This frees up resources, allowing organizations to allocate human talent to more complex and strategic initiatives. For example, in manufacturing, AI-driven predictive maintenance can analyze equipment data in real time to predict failures before they occur, reducing downtime and maintenance costs.

Enhanced decision making: Data analytics and machine learning can provide insights that inform strategic decisions. By processing and analyzing vast amounts of data, AI systems can identify trends, predict outcomes, and recommend actions that are not immediately obvious to human analysts. This capability is useful in areas like financial services, where AI can assess market conditions and investment opportunities.

Driving innovation: By applying advanced AI algorithms, organizations can solve complex problems, develop new products, and enhance services. For example, in healthcare, AI-driven diagnostic tools can analyze medical images and, for some tasks, reach accuracy comparable to that of human specialists, supporting earlier disease detection and more personalized treatment.
