From Zero to EKS and Hybrid-Nodes —Part 3: Setting up the NLB, Ingress, and Services on a Hybrid EKS Infrastructure


Introduction:

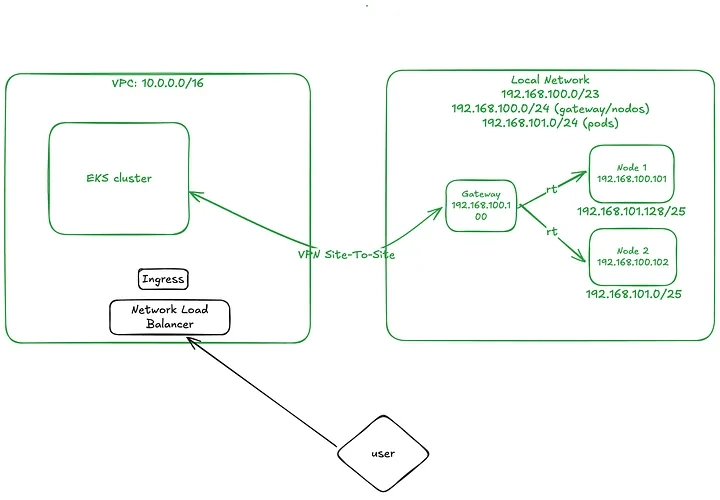

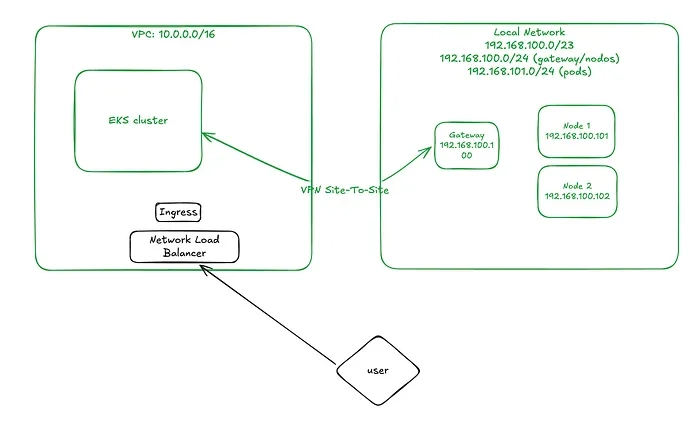

In the previous part, we set up the EKS cluster and deployed the first hybrid nodes. At that point, the network topology looked like this:

Now, in this third part, we’re going to set up the Network Load Balancer (NLB), configure Ingress, and deploy services to expose our application running on this hybrid infrastructure. We’ll also observe and analyze the behavior of a Kubernetes Deployment in this mixed node environment.

Network Topology Changes:

To enable communication between pods running on AWS-managed nodes and those on hybrid nodes, we need to configure specific routing rules on our VPN gateway/router.

In a production environment, you could enable BGP in Cilium (if your network supports it), but since this is a lab setup, we’ll handle routing manually via static routes on the gateway.

Disabling kube-proxy on Hybrid Nodes:

Since we’re replacing kube-proxy functionality with Cilium, we’ll prevent kube-proxy from running on hybrid nodes using the following patch:

```bash
kubectl patch daemonset kube-proxy \
  -n kube-system \
  --type='json' \
  -p='[
    {
      "op": "add",
      "path": "/spec/template/spec/affinity/nodeAffinity/requiredDuringSchedulingIgnoredDuringExecution/nodeSelectorTerms/0/matchExpressions/2/values/-",
      "value": "hybrid"
    }
  ]'
```
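The JSON Patch path above hard-codes `matchExpressions/2`, which assumes the third expression in the kube-proxy DaemonSet's node affinity is the `eks.amazonaws.com/compute-type` `NotIn` rule. If that ordering ever changes, the patch silently edits the wrong rule. Here is a minimal Python sketch that looks the index up instead. It assumes you feed it the parsed output of `kubectl get daemonset kube-proxy -n kube-system -o json`, and the helper name `build_kube_proxy_patch` is hypothetical:

```python
import json

COMPUTE_TYPE_KEY = "eks.amazonaws.com/compute-type"
BASE_PATH = (
    "/spec/template/spec/affinity/nodeAffinity/"
    "requiredDuringSchedulingIgnoredDuringExecution/nodeSelectorTerms/0/matchExpressions"
)


def build_kube_proxy_patch(daemonset: dict) -> str:
    """Build a JSON Patch that appends "hybrid" to the compute-type NotIn rule.

    Hypothetical helper: `daemonset` is the parsed output of
    `kubectl get daemonset kube-proxy -n kube-system -o json`.
    """
    affinity = daemonset["spec"]["template"]["spec"]["affinity"]
    terms = affinity["nodeAffinity"]["requiredDuringSchedulingIgnoredDuringExecution"][
        "nodeSelectorTerms"
    ]
    # Search the first node selector term, the same term the hard-coded path targets.
    for index, expr in enumerate(terms[0]["matchExpressions"]):
        if expr["key"] == COMPUTE_TYPE_KEY and expr["operator"] == "NotIn":
            if "hybrid" in expr.get("values", []):
                return "[]"  # hybrid nodes are already excluded: empty patch
            # A trailing "/-" in a JSON Patch path appends to the end of the array.
            op = {"op": "add", "path": f"{BASE_PATH}/{index}/values/-", "value": "hybrid"}
            return json.dumps([op])
    raise ValueError(f"no '{COMPUTE_TYPE_KEY} NotIn' expression in the first node selector term")
```

Pass the returned string to `kubectl patch daemonset kube-proxy -n kube-system --type='json' -p '<patch>'`. Returning an empty patch when `hybrid` is already listed keeps the step safe to re-run.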

Get EKS API Server Endpoint:

This value is needed in the next step:

```bash
aws eks describe-cluster --name my-eks-cluster --query "cluster.endpoint" --output text
```
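Cilium's `k8sServiceHost` expects a host name, not a URL, but `describe-cluster` returns the endpoint with its `https://` scheme (and wrapped in double quotes unless you pass `--output text`). Here is a minimal Python sketch of that conversion. `service_host_from_endpoint` is a hypothetical helper, and the endpoint in the comment is made up:

```python
from urllib.parse import urlparse


def service_host_from_endpoint(endpoint: str) -> str:
    """Turn the describe-cluster endpoint into a bare host name for k8sServiceHost."""
    # The default JSON output wraps the URL in double quotes; drop them and whitespace.
    parsed = urlparse(endpoint.strip().strip('"'))
    if parsed.scheme != "https" or not parsed.hostname:
        raise ValueError(f"expected an https:// URL, got {endpoint!r}")
    # .hostname drops the scheme and any :port; urlparse also lowercases it,
    # which does not matter for DNS names.
    return parsed.hostname


# Made-up endpoint, for illustration only:
# service_host_from_endpoint('"https://ABC123.gr7.us-east-1.eks.amazonaws.com"')
# returns "abc123.gr7.us-east-1.eks.amazonaws.com"
```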

Updating the Cilium Configuration:

Next, we’ll update our cilium-values.yaml file to:

- Enable kubeProxyReplacement.

- Enable Layer 2 (L2) announcements.

- Define the /25 subnets to be used for pod IPs.

- Set the Kubernetes API server endpoint and port.

```yaml
affinity:
  nodeAffinity:
    requiredDuringSchedulingIgnoredDuringExecution:
      nodeSelectorTerms:
      - matchExpressions:
        - key: eks.amazonaws.com/compute-type
          operator: In
          values:
          - hybrid
ipam:
  mode: cluster-pool
  operator:
    clusterPoolIPv4MaskSize: 25
    clusterPoolIPv4PodCIDRList:
    - 192.168.101.0/25
    - 192.168.101.128/25
operator:
  replicas: 1
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: eks.amazonaws.com/compute-type
            operator: In
            values:
            - hybrid
  unmanagedPodWatcher:
    restart: false
envoy:
  enabled: false
kubeProxyReplacement: true
l2announcements:
  leaseDuration: 2s
  leaseRenewDeadline: 1s
  leaseRetryPeriod: 200ms
  enabled: true
externalIPs:
  enabled: true
k8sClientRateLimit:
  qps: 100
  burst: 150
k8sServiceHost: <api-server-host>  # endpoint from the previous step, host name only (no https://)
k8sServicePort: 443
```

Apply the changes:

```bash
cd commands/02-cilium-updates
helm upgrade -i cilium cilium/cilium -n kube-system -f cilium-values.yaml
```
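The three `l2announcements` lease timings are deliberately tight (a 2s lease, renewed within 1s, retried every 200ms) so a service IP fails over quickly. The option names mirror client-go's leader-election settings. Assuming Cilium passes them straight through to that library, client-go rejects any combination where `leaseDuration <= leaseRenewDeadline` or `leaseRenewDeadline <= 1.2 × leaseRetryPeriod`. Here is a minimal Python sketch for sanity-checking the values before you change them. The helper names are hypothetical, and the duration parser only handles the simple `ms`/`s`/`m` forms used here:

```python
import re

# client-go's leader election multiplies retryPeriod by this jitter factor
# when validating renewDeadline.
JITTER_FACTOR = 1.2
_UNITS = {"ms": 0.001, "s": 1.0, "m": 60.0}


def parse_duration(value: str) -> float:
    """Parse simple Go-style durations such as "2s" or "200ms" into seconds."""
    match = re.fullmatch(r"(\d+(?:\.\d+)?)(ms|s|m)", value.strip())
    if not match:
        raise ValueError(f"unsupported duration: {value!r}")
    number, unit = match.groups()
    return float(number) * _UNITS[unit]


def check_lease_timings(duration: str, renew_deadline: str, retry_period: str) -> list[str]:
    """Return a list of violated constraints (an empty list means the values look valid)."""
    d, r, p = (parse_duration(v) for v in (duration, renew_deadline, retry_period))
    problems = []
    if d <= r:
        problems.append("leaseDuration must be greater than leaseRenewDeadline")
    if r <= JITTER_FACTOR * p:
        problems.append("leaseRenewDeadline must be greater than 1.2 x leaseRetryPeriod")
    return problems


# Values from cilium-values.yaml above
print(check_lease_timings("2s", "1s", "200ms"))
```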

Verifying Cilium Agents:

You should now see cilium-agent pods running on the hybrid nodes, and no kube-proxy pods there:

```bash
kubectl -n kube-system get pods -o wide
```

```text
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
aws-node-k8qw5 2/2 Running 0 31m 10.0.21.61 ip-10-0-21-61.ec2.internal
cilium-lxphl 1/1 Running 0 48s 192.168.100.102 mi-00a55e151790a4c81
cilium-operator-7658767979-f57v5 1/1 Running 0 52s 192.168.100.101 mi-014887c08038cb6c2
cilium-znwdm 1/1 Running 0 49s 192.168.100.101 mi-014887c08038cb6c2
coredns-6b9575c64c-fxzv9 1/1 Running 0 35m 10.0.25.252 ip-10-0-21-61.ec2.internal
eks-pod-identity-agent-k559t 1/1 Running 0 31m 10.0.21.61 ip-10-0-21-61.ec2.internal
kube-proxy-6j74v 1/1 Running 0 15m 10.0.21.61 ip-10-0-21-61.ec2.internal
```
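To check this without eyeballing the table, here is a minimal Python sketch that takes the JSON form of the pod list (`kubectl -n kube-system get pods -o json`) plus the hybrid node names (`kubectl get nodes -l eks.amazonaws.com/compute-type=hybrid -o jsonpath='{.items[*].metadata.name}'`) and reports any kube-proxy pod still scheduled on a hybrid node. The helpers are hypothetical, and matching pods by the `kube-proxy-` name prefix is an assumption based on the output above:

```python
import json


def kube_proxy_on_hybrid(pods_json: str, hybrid_nodes: set[str]) -> list[str]:
    """Return "<pod> on <node>" for every kube-proxy pod scheduled on a hybrid node."""
    offenders = []
    for pod in json.loads(pods_json)["items"]:
        name = pod["metadata"]["name"]
        # nodeName is absent while a pod is still Pending (not yet scheduled)
        node = pod["spec"].get("nodeName", "")
        if name.startswith("kube-proxy-") and node in hybrid_nodes:
            offenders.append(f"{name} on {node}")
    return offenders


def parse_node_names(jsonpath_output: str) -> set[str]:
    """kubectl's {.items[*].metadata.name} jsonpath prints names separated by spaces."""
    return set(jsonpath_output.split())
```

An empty list means kube-proxy is gone from every hybrid node, which is what the table above shows.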

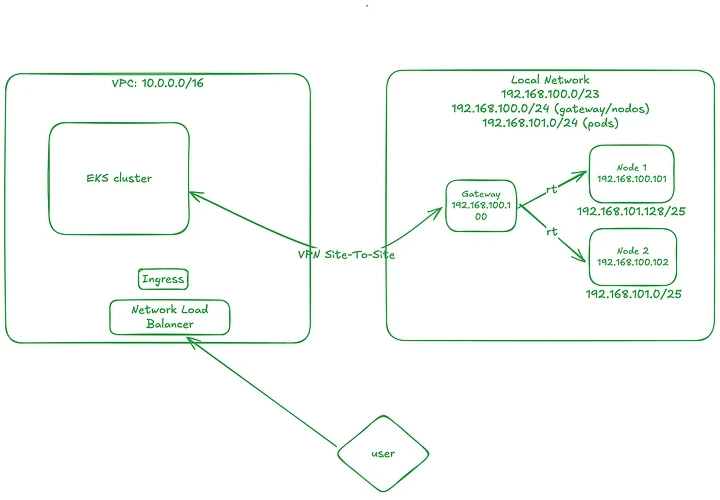

Static Routing:

Now it’s time to configure static routes on the VPN router. First, check which hybrid node is responsible for each /25 subnet:

```bash
kubectl get ciliumnodes
```

```text
NAME CILIUMINTERNALIP INTERNALIP AGE
mi-00a55e151790a4c81 192.168.101.51 192.168.100.102 8m14s
mi-014887c08038cb6c2 192.168.101.192 192.168.100.101 8m14s
```

Set the routes:

```bash
ip route add 192.168.101.0/25 via 192.168.100.102
ip route add 192.168.101.128/25 via 192.168.100.101
```
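This mapping can be derived mechanically. Each `CILIUMINTERNALIP` is an address allocated from that node's pod CIDR block, so finding the /25 that contains it tells us where to route. Here is a minimal Python sketch using this lab's values. The helper names are hypothetical, and the authoritative per-node block lives in each CiliumNode's `spec.ipam.podCIDRs`; this shortcut assumes the internal IP agrees with it:

```python
import ipaddress

# Pod CIDR pool and per-node mask size from cilium-values.yaml
POD_CIDR_LIST = ["192.168.101.0/25", "192.168.101.128/25"]
PER_NODE_MASK = 25

# Copied from `kubectl get ciliumnodes`: name -> (CILIUMINTERNALIP, INTERNALIP)
CILIUM_NODES = {
    "mi-00a55e151790a4c81": ("192.168.101.51", "192.168.100.102"),
    "mi-014887c08038cb6c2": ("192.168.101.192", "192.168.100.101"),
}


def per_node_cidrs(pool, mask):
    """Split the pool into blocks of the per-node size handed out by cluster-pool IPAM."""
    return [sub for cidr in pool for sub in ipaddress.ip_network(cidr).subnets(new_prefix=mask)]


def route_commands(nodes, node_cidrs):
    """Find the block holding each node's CiliumInternalIP and emit one static route per node."""
    commands = []
    for name, (cilium_ip, node_ip) in sorted(nodes.items()):
        addr = ipaddress.ip_address(cilium_ip)
        block = next((net for net in node_cidrs if addr in net), None)
        if block is None:
            raise ValueError(f"{name}: {cilium_ip} is not inside any pod CIDR block")
        commands.append(f"ip route add {block} via {node_ip}")
    return commands


blocks = per_node_cidrs(POD_CIDR_LIST, PER_NODE_MASK)
print(f"{len(blocks)} per-node blocks of {blocks[0].num_addresses} addresses each")
for command in route_commands(CILIUM_NODES, blocks):
    print(command)
```

The sketch also makes a sizing limit visible. With `clusterPoolIPv4MaskSize: 25` and two /25 entries in `clusterPoolIPv4PodCIDRList`, the pool holds exactly two per-node blocks, so as far as we can tell a third hybrid node would need another entry in that list.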

Now our network will look like this:

Installing the Load Balancer Controller:

Add the following Terraform file to your project to install the AWS Load Balancer Controller via Helm, and create the required IAM role:

```hcl
resource "helm_release" "aws-load-balancer-controller" {
  name       = "aws-load-balancer-controller"
  chart      = "aws-load-balancer-controller"
  repository = "https://aws.github.io/eks-charts"
  namespace  = "kube-system"
  version    = "1.12.0"

  set {
    name  = "serviceAccount.create"
    value = "true"
  }

  # Must match the service account trusted by the IAM role below
  # (namespace_service_accounts = ["kube-system:load-balancer-controller"])
  set {
    name  = "serviceAccount.name"
    value = module.eks_loadbalancer_iam.iam_role_name
  }

  set {
    name  = "serviceAccount.annotations.eks\\.amazonaws\\.com/role-arn"
    value = module.eks_loadbalancer_iam.iam_role_arn
  }

  set {
    name  = "clusterName"
    value = module.eks.cluster_name
  }

  set {
    name  = "replicaCount"
    value = "1"
  }

  set {
    name  = "vpcId"
    value = data.terraform_remote_state.vpc.outputs.vpc_id
  }

  # Optional affinity rule: only nodes carrying the eks.amazonaws.com/capacityType
  # label (set on EKS managed node group nodes) are eligible, which keeps the
  # controller on the AWS-managed nodes
  set {
    name  = "affinity.nodeAffinity.requiredDuringSchedulingIgnoredDuringExecution.nodeSelectorTerms[0].matchExpressions[0].key"
    value = "eks.amazonaws.com/capacityType"
  }

  set {
    name  = "affinity.nodeAffinity.requiredDuringSchedulingIgnoredDuringExecution.nodeSelectorTerms[0].matchExpressions[0].operator"
    value = "Exists"
  }
}

module "eks_loadbalancer_iam" {
  source  = "terraform-aws-modules/iam/aws//modules/iam-role-for-service-accounts-eks"
  version = "5.54.0"

  role_name                              = "load-balancer-controller"
  attach_load_balancer_controller_policy = true

  oidc_providers = {
    ex = {
      provider_arn               = module.eks.oidc_provider_arn
      namespace_service_accounts = ["kube-system:load-balancer-controller"]
    }
  }
}
```

Deploy it:

```bash
cd ../../EKS-HYBRID
cp ../load-balancer/load-balancer.tf .
tofu init
tofu apply
```

Verify the changes:

```bash
kubectl get deployment/aws-load-balancer-controller -n kube-system
```

```text
NAME READY UP-TO-DATE AVAILABLE AGE
aws-load-balancer-controller 1/1 1 1 5m
```

Deploying the Demo App:

Now let’s deploy a simple app with 6 replicas to observe pod distribution across AWS and hybrid nodes.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: whoami
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: whoami
  namespace: whoami
spec:
  replicas: 6
  selector:
    matchLabels:
      app: whoami
  template:
    metadata:
      labels:
        app: whoami
    spec:
      topologySpreadConstraints:
      - maxSkew: 1
        topologyKey: kubernetes.io/hostname
        whenUnsatisfiable: ScheduleAnyway
        labelSelector:
          matchLabels:
            app: whoami
      containers:
      - image: containous/whoami
        imagePullPolicy: Always
        name: whoami
---
apiVersion: v1
kind: Service
metadata:
  name: whoami
  namespace: whoami
  annotations:
    # Only evaluated for Services of type LoadBalancer; it has no effect on this ClusterIP Service
    service.beta.kubernetes.io/aws-load-balancer-type: "external"
spec:
  type: ClusterIP
  ports:
  - name: http
    port: 80
    targetPort: 80
  selector:
    app: whoami
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: whoami
  namespace: whoami
  annotations:
    alb.ingress.kubernetes.io/scheme: internet-facing
    alb.ingress.kubernetes.io/listen-ports: '[{"HTTP": 80}]'
    alb.ingress.kubernetes.io/target-type: ip
    # Not an Ingress annotation the AWS Load Balancer Controller reads: an Ingress
    # with ingressClassName "alb" is always provisioned as an Application Load Balancer
    alb.ingress.kubernetes.io/load-balancer-type: nlb
spec:
  ingressClassName: alb
  rules:
  - http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: whoami
            port:
              number: 80
```

Apply it:

```bash
cd ../commands/03-create-service
kubectl apply -f service.yaml
```

Check pod distribution:

```bash
kubectl get pods -n whoami -o wide
```

```text
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
whoami-7cb8f48c8-bhrvw 1/1 Running 0 2m6s 192.168.101.187 mi-014887c08038cb6c2
whoami-7cb8f48c8-gd48g 1/1 Running 0 6s 10.0.21.186 ip-10-0-21-61.ec2.internal
whoami-7cb8f48c8-hhs49 1/1 Running 0 2m9s 192.168.101.212 mi-014887c08038cb6c2
whoami-7cb8f48c8-n64gh 1/1 Running 0 2m9s 192.168.101.108 mi-00a55e151790a4c81
whoami-7cb8f48c8-q8bhj 1/1 Running 0 2m9s 192.168.101.56 mi-00a55e151790a4c81
whoami-7cb8f48c8-rr7kp 1/1 Running 0 9s 10.0.27.231 ip-10-0-21-61.ec2.internal
```
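The `topologySpreadConstraints` rule asks for at most one pod of difference (`maxSkew: 1`) between nodes (`topologyKey: kubernetes.io/hostname`). Because `whenUnsatisfiable: ScheduleAnyway` makes that a preference rather than a hard rule, it is worth checking what the scheduler actually did. Here is a minimal Python sketch with the placements copied from the output above. It assumes all three nodes are eligible for these pods and counts a node with no matching pods as zero, which is how the scheduler's skew calculation treats empty domains:

```python
from collections import Counter

# (pod, node) pairs copied from the `kubectl get pods -n whoami -o wide` output above
PLACEMENTS = [
    ("whoami-7cb8f48c8-bhrvw", "mi-014887c08038cb6c2"),
    ("whoami-7cb8f48c8-gd48g", "ip-10-0-21-61.ec2.internal"),
    ("whoami-7cb8f48c8-hhs49", "mi-014887c08038cb6c2"),
    ("whoami-7cb8f48c8-n64gh", "mi-00a55e151790a4c81"),
    ("whoami-7cb8f48c8-q8bhj", "mi-00a55e151790a4c81"),
    ("whoami-7cb8f48c8-rr7kp", "ip-10-0-21-61.ec2.internal"),
]
# Assumption: every node in the cluster is eligible for the whoami pods
NODES = ["ip-10-0-21-61.ec2.internal", "mi-00a55e151790a4c81", "mi-014887c08038cb6c2"]


def skew(placements, nodes):
    """Return per-node pod counts and max minus min; nodes with no pods count as 0."""
    counts = Counter(node for _, node in placements)
    per_node = {node: counts.get(node, 0) for node in nodes}
    return per_node, max(per_node.values()) - min(per_node.values())


per_node, observed = skew(PLACEMENTS, NODES)
print(per_node, "skew =", observed)
```

Here every node ends up with two replicas, so the observed skew is 0, comfortably within `maxSkew: 1`.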

Test Round-Robin Behavior:

Get the Ingress address:

```bash
kubectl get ingress -n whoami
```

```text
NAME CLASS HOSTS ADDRESS PORTS AGE
whoami alb * k8s-whoami-whoami-xxxx-yyyy.us-east-1.elb.amazonaws.com 80 36s
```

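Then run the following loop, which reads the address from the Ingress status so you don't have to paste it in by hand:

```bash
ADDRESS=$(kubectl get ingress whoami -n whoami -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')

for i in $(seq 1 10); do
  curl -s "http://${ADDRESS}/api" | jq .ip
done
```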

```text
[
  "127.0.0.1",
  "::1",
  "192.168.101.212",
  "fe80::b405:92ff:fe92:72f4"
]
[
  "127.0.0.1",
  "::1",
  "192.168.101.212",
  "fe80::b405:92ff:fe92:72f4"
]
[
  "127.0.0.1",
  "::1",
  "10.0.21.186",
  "fe80::6c8f:6aff:fe38:5e55"
]
[
  "127.0.0.1",
  "::1",
  "10.0.27.231",
  "fe80::a8a6:26ff:feed:d649"
]
[
  "127.0.0.1",
  "::1",
  "192.168.101.108",
  "fe80::28bc:50ff:fe48:d3d9"
]
[
  "127.0.0.1",
  "::1",
  "192.168.101.56",
  "fe80::7869:ccff:fee5:b61b"
]
[
  "127.0.0.1",
  "::1",
  "192.168.101.187",
  "fe80::14f6:88ff:fe17:f9ae"
]
[
  "127.0.0.1",
  "::1",
  "192.168.101.212",
  "fe80::b405:92ff:fe92:72f4"
]
[
  "127.0.0.1",
  "::1",
  "10.0.21.186",
  "fe80::6c8f:6aff:fe38:5e55"
]
[
  "127.0.0.1",
  "::1",
  "10.0.27.231",
  "fe80::a8a6:26ff:feed:d649"
]
```

You should see requests being served by pods on both the AWS-managed node (the 10.0.x.x addresses) and the hybrid nodes (the 192.168.101.x addresses).
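To put numbers on that, here is a minimal Python sketch that classifies the pod IP from each of the ten responses above by address range. It assumes the lab VPC is `10.0.0.0/16` (consistent with the node and pod addresses shown, but not stated in this part) and treats `192.168.101.0/24`, the union of the two /25 pod blocks, as hybrid:

```python
import ipaddress
from collections import Counter

# Pod IPv4 address from each of the ten responses above, in order
RESPONSES = [
    "192.168.101.212", "192.168.101.212", "10.0.21.186", "10.0.27.231", "192.168.101.108",
    "192.168.101.56", "192.168.101.187", "192.168.101.212", "10.0.21.186", "10.0.27.231",
]
HYBRID_POD_POOL = ipaddress.ip_network("192.168.101.0/24")  # the two /25 blocks combined
VPC_CIDR = ipaddress.ip_network("10.0.0.0/16")  # assumption: the lab VPC range


def node_kind(ip: str) -> str:
    """Label a pod IP as "hybrid", "aws", or "unknown" based on its address range."""
    addr = ipaddress.ip_address(ip)
    if addr in HYBRID_POD_POOL:
        return "hybrid"
    if addr in VPC_CIDR:
        return "aws"
    return "unknown"


by_kind = Counter(node_kind(ip) for ip in RESPONSES)
print(dict(by_kind), "distinct pods:", len(set(RESPONSES)))
```

For this run that gives six responses from hybrid pods and four from AWS pods, with all six replicas answering at least once.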

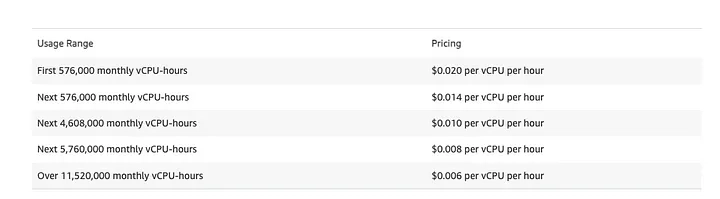

Pricing Overview:

Hybrid nodes do not incur EC2 costs, but you’ll still pay:

- EKS control plane fees

- Data transfer

- Hybrid node usage based on vCPU-hours

Example:

A 32-vCPU hybrid node running 24 hours a day for a 30-day month uses 32 × 24 × 30 = 23,040 vCPU-hours.

At $0.02 per vCPU-hour, that’s ~$460.80/month.

The cheapest AWS instance with 32 vCPUs and a GPU (e.g. g5g.8xlarge) costs ~$987/month, a little over 2x as much.
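The same arithmetic as a small Python sketch, so you can plug in your own vCPU count or prices. The $0.02 rate is the figure above; the $1.372/hour EC2 price is an assumption chosen to match the ~$987/month estimate (1.372 × 720 hours), so check current on-demand pricing for your region:

```python
HOURS_PER_MONTH = 24 * 30  # the 30-day month used in the example above


def hybrid_monthly_cost(vcpus: int, rate_per_vcpu_hour: float = 0.02) -> float:
    """Hybrid node fee: billed per vCPU-hour, no EC2 instance charge."""
    return vcpus * HOURS_PER_MONTH * rate_per_vcpu_hour


def ec2_monthly_cost(hourly_price: float) -> float:
    """On-demand EC2 cost for an instance running the whole month."""
    return hourly_price * HOURS_PER_MONTH


hybrid = hybrid_monthly_cost(32)
ec2 = ec2_monthly_cost(1.372)  # assumption: hourly price of the 32-vCPU GPU instance
print(f"hybrid ${hybrid:.2f}/month, EC2 ${ec2:.2f}/month, ratio {ec2 / hybrid:.2f}x")
```

Note that the hybrid figure covers only the EKS hybrid node fee, not the cost of owning and running the on-prem hardware.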

Conclusion:

As you can see, it’s entirely possible to run a hybrid EKS environment combining AWS-managed and on-prem nodes. While this is not a common setup, it’s a powerful tool for organizations with existing infrastructure or specific compliance requirements.

This lab setup uses static routing, eks_managed_node_groups, and minimal redundancy. In a production scenario, you’d likely adopt:

- VPC CNI custom networking

- Karpenter for dynamic scaling

- BGP routing for flexibility

- A real VPN appliance

But as a proof of concept, it offers a strong foundation and a practical look into what’s required to implement EKS hybrid clusters successfully.

All the files used in this lab can be found here.
