Enhancing AI Efficiency: Tips, Innovations, and Collaboration Insights

Originally published at ssojet

Google Cloud has announced a suite of new tools and features designed to help organizations reduce costs and improve efficiency of AI workloads across their cloud infrastructure. The announcement comes as enterprises increasingly seek ways to optimize spending on AI initiatives while maintaining performance and scalability. The new features focus on three key areas: compute resource optimization, specialized hardware acceleration, and intelligent workload scheduling.

In the announcement, Google Cloud's VP of AI Products said, "Organizations are increasingly looking for ways to optimize their AI costs without sacrificing performance or capability. These new features directly address that need by providing more efficient ways to run machine learning training and inference."

Google Cloud's approach begins with strategic platform selection. Organizations can choose among options ranging from fully managed services to highly customizable solutions. Vertex AI offers a unified, fully managed AI development platform, while Cloud Run with GPU support provides a scalable option for serving inference. For long-running tasks, Cloud Batch combined with Spot Instances can significantly reduce costs. Organizations with Kubernetes expertise may benefit from Google Kubernetes Engine (GKE), while those requiring maximum control can use Google Compute Engine.

A key recommendation focuses on optimizing container performance. When working with inference containers in environments like GKE or Cloud Run, Google advises keeping containers lightweight by storing models outside the container image, using Cloud Storage FUSE, Filestore, or shared read-only persistent disks.
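A minimal Python sketch of that pattern, assuming the bucket or file share is already mounted into the container. The mount path `/mnt/models`, the `MODEL_DIR` environment variable, and the file name used in the example are hypothetical names chosen for illustration; they do not come from Google's guidance.

```python
import os
from functools import lru_cache
from pathlib import Path

# Hypothetical default mount point for externally stored models.
DEFAULT_MODEL_DIR = "/mnt/models"


def model_path(name: str) -> Path:
    """Resolve a model file under the externally mounted directory."""
    base = Path(os.environ.get("MODEL_DIR", DEFAULT_MODEL_DIR))
    path = base / name
    if not path.is_file():
        raise FileNotFoundError(f"model not found at {path}; is the volume mounted?")
    return path


@lru_cache(maxsize=None)
def load_model_bytes(name: str) -> bytes:
    """Read the model once per process and reuse it across requests."""
    return model_path(name).read_bytes()
```

Because the image contains only the serving code, it stays small, and the model can be updated by replacing the file on the mounted storage rather than rebuilding the container.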

Storage selection is critical in optimization. Google Cloud recommends Filestore for smaller AI workloads, Cloud Storage for object storage at any scale, and Cloud Storage FUSE for mounting storage buckets as a file system. For lower latency, Parallelstore provides sub-millisecond access times, while Hyperdisk ML delivers high-performance storage.
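The recommendations above can be read as a rough decision order. The sketch below encodes one such reading in Python; the `StorageNeeds` fields and the order in which the checks run are assumptions made for illustration, not an official Google Cloud selection guide.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StorageNeeds:
    latency_sensitive: bool      # workload needs the lowest access latency
    small_workload: bool         # modest dataset size and throughput
    needs_file_interface: bool   # code expects file paths, not object APIs


def suggest_storage(needs: StorageNeeds) -> str:
    """Map coarse requirements to the storage options named in the article."""
    if needs.latency_sensitive:
        return "Parallelstore or Hyperdisk ML"
    if needs.small_workload:
        return "Filestore"
    if needs.needs_file_interface:
        return "Cloud Storage FUSE"
    return "Cloud Storage"
```

A real selection would also weigh cost, throughput, and regional availability, which this simplified helper ignores.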

To prevent costly delays in resource acquisition, Google Cloud emphasizes the importance of Dynamic Workload Scheduler and Future Reservations, which help guarantee availability when capacity is required and streamline procurement of in-demand hardware.

Deployment efficiency can be enhanced through custom disk images. Organizations can create and maintain custom disk images that allow new, fully configured workers to deploy in seconds rather than hours.

AI cost management has become increasingly critical across industries. In response, both AWS and Microsoft Azure have also ramped up efforts to support enterprise AI workloads with cost-aware tools within their respective platforms.

Qumulo Launches NeuralCache for AI

Qumulo has introduced NeuralCache, a predictive caching solution designed to enhance data performance for AI-driven enterprise applications and critical line-of-business workloads. Integrated into Qumulo’s Cloud Data Fabric, this technology leverages AI and machine learning models to optimize read/write caching, delivering efficiency and scalability across both cloud and on-premises environments.

“The Qumulo NeuralCache redefines how organizations manage and access massive datasets... by adapting in real-time to multi-variate factors,” said Qumulo Chief Technology Officer Kiran Bhageshpur. This predictive, model-based caching system enhances application performance and reduces latency while ensuring data consistency.

Key features of Qumulo NeuralCache include:

- Dynamic Predictive Tuning: NeuralCache continuously tunes itself based on real-time data patterns, improving efficiency and performance with each cache hit or miss.

- Cost Efficiency in the Hybrid Cloud: The system intelligently stacks object writes, minimizing API charges in public cloud environments while optimizing I/O cycles for on-premises deployments.

- Scalability for Massive Datasets: NeuralCache excels with applications handling exabyte-sized datasets, learning and improving as data volume and workload complexity grow.

- Consistency and Correctness: Fully integrated into Qumulo’s filesystem, NeuralCache ensures that users always access the most current data.
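To make the cost-efficiency point concrete, here is a generic write-coalescing sketch in Python. It is not Qumulo's implementation, whose internals the announcement does not describe; the `WriteCoalescer` class, its `max_bytes` threshold, and the `upload` callback are hypothetical names used only to show how batching small writes reduces the number of billable object-store calls.

```python
from typing import Callable, List


class WriteCoalescer:
    """Buffer small writes and flush them as a single object upload."""

    def __init__(self, upload: Callable[[bytes], None], max_bytes: int = 4 * 1024 * 1024):
        self._upload = upload          # called once per flushed batch
        self._max_bytes = max_bytes    # flush threshold in bytes
        self._chunks: List[bytes] = []
        self._size = 0
        self.upload_calls = 0          # number of upload calls issued so far

    def write(self, data: bytes) -> None:
        """Queue data, flushing when the buffered size reaches the threshold."""
        self._chunks.append(data)
        self._size += len(data)
        if self._size >= self._max_bytes:
            self.flush()

    def flush(self) -> None:
        """Send everything buffered as one upload; do nothing if empty."""
        if not self._chunks:
            return
        self._upload(b"".join(self._chunks))
        self.upload_calls += 1
        self._chunks.clear()
        self._size = 0
```

With a 10-byte threshold, five 4-byte writes followed by a final `flush()` produce two uploads instead of five. A production cache would also have to handle durability and read-after-write consistency, which this sketch leaves out.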

NeuralCache is available immediately as part of Qumulo’s latest software release, enabling existing customers to upgrade seamlessly.

Procter & Gamble Study Finds AI Could Help Office Collaboration

Procter & Gamble recently conducted a study assessing generative AI's potential value to its operations, with partial funding from Harvard Business School. The study involved 776 professionals who were given real-world product innovation challenges, with the goal of measuring the impact of generative AI on collaborative performance.

The results showed that AI replicates many benefits of human collaboration, acting as a "cybernetic teammate." The researchers found that individuals working with AI produced solutions comparable to those generated by two-person teams. AI assistance allows individuals to bridge gaps in their knowledge similarly to consulting with a colleague.

P&G's chief research and innovation officer, Victor Aguilar, remarked, “This study affirms what we've long suspected: AI is a game-changer for innovation,” emphasizing AI's role in accelerating speed to innovation.

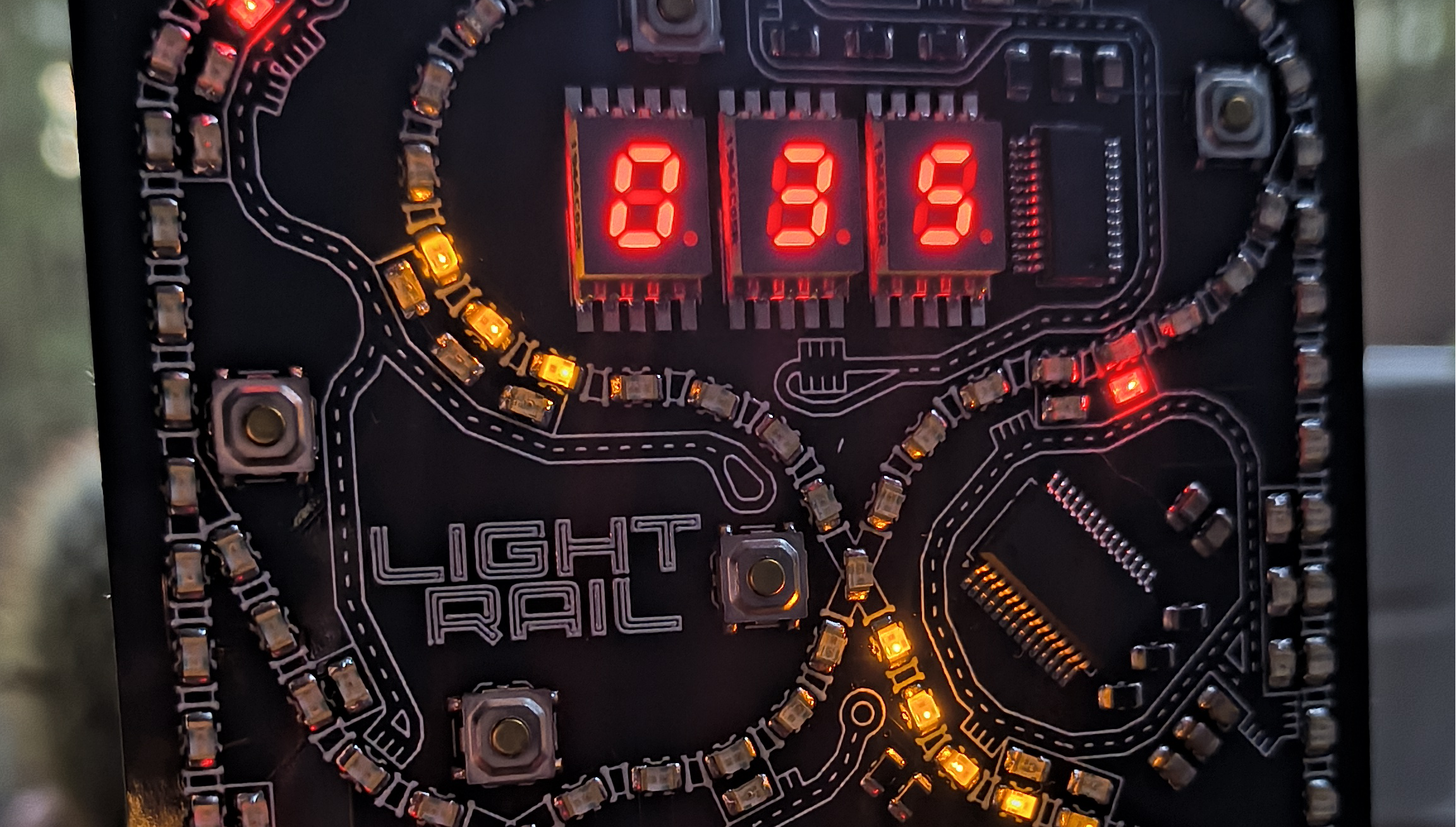

World's First Light-Powered Neural Processing Units (NPUs)

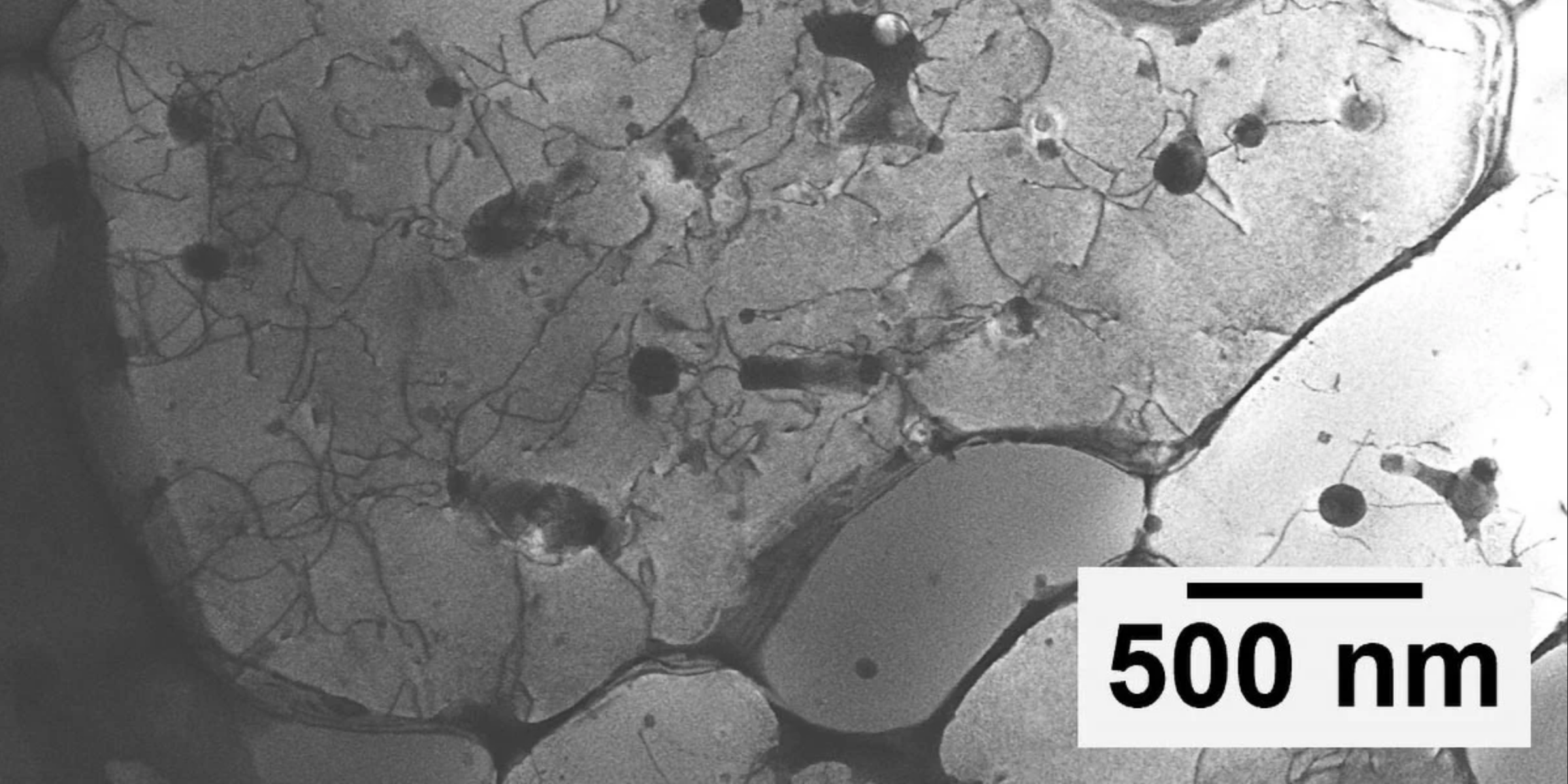

Q.ANT has developed a light-powered computer chip aimed at driving AI data centers and enhancing high-performance computing sustainability. This photonic AI chip is expected to deliver a 30-fold increase in energy efficiency and a 50-fold boost in computing speed compared to silicon-based chips.

Pilot production of the chip is underway at IMS Chips in Germany, where Q.ANT has invested 14 million euros to repurpose a semiconductor factory. The chip uses thin-film lithium niobate (TFLN), which allows it to modulate optical signals with extreme precision.

Jens Anders, a professor at the University of Stuttgart, stated, “As AI and data-intensive applications push conventional semiconductor technology to its limits, we need to rethink the way we approach computing at the core.”

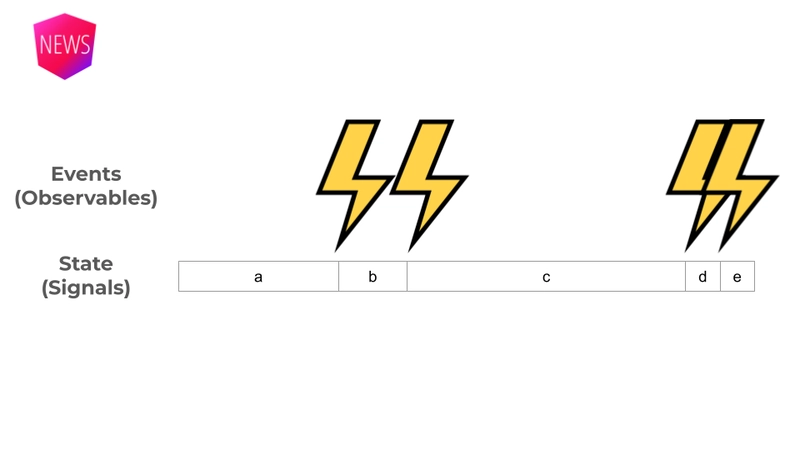

Researchers Teach LLMs to Solve Complex Planning Challenges

MIT researchers have introduced a framework that guides large language models (LLMs) to break down complex planning problems and solve them using a software tool. The framework allows users to describe their problem in natural language, and the model encodes that description into a format that an optimization solver can process.

The researchers tested their framework on nine complex challenges, achieving an 85 percent success rate. The LLM-based Formalized Programming (LLMFP) framework can be applied to various multistep planning tasks, such as scheduling airline crews or managing factory machine time.
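The general shape of that pipeline (natural-language request, structured formulation, solver) can be sketched in Python. This is not the researchers' code: the `problem` dictionary is a hand-written stand-in for the encoding an LLM might produce, its schema is hypothetical, and a brute-force search takes the place of a real optimization solver.

```python
from itertools import product
from typing import Dict, Tuple

# Hand-written stand-in for an LLM-generated formulation of the request
# "schedule three jobs on two machines so everything finishes soonest".
problem = {
    "tasks": {"cut": 3, "weld": 2, "paint": 4},   # task -> hours required
    "machines": ["m1", "m2"],
    "objective": "minimize_makespan",
}


def solve(problem: dict) -> Tuple[int, Dict[str, str]]:
    """Try every task-to-machine assignment and keep the shortest makespan."""
    tasks = list(problem["tasks"].items())
    machines = problem["machines"]
    best = None
    for choice in product(machines, repeat=len(tasks)):
        load = {m: 0 for m in machines}
        for (_, hours), machine in zip(tasks, choice):
            load[machine] += hours
        makespan = max(load.values())
        if best is None or makespan < best[0]:
            assignment = {task: m for (task, _), m in zip(tasks, choice)}
            best = (makespan, assignment)
    return best


makespan, assignment = solve(problem)
```

Exhaustive search only works for toy inputs like this one; the point of handing the formalized problem to a dedicated solver is that it scales to cases such as crew or machine scheduling where enumeration is infeasible.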

Yilun Hao, a graduate student involved in the research, stated, “Our research introduces a framework that essentially acts as a smart assistant for planning problems.”

Explore how SSOJet can enhance your enterprise's authentication and user management with secure SSO solutions. Our API-first platform features directory sync, SAML, OIDC, and magic link authentication. Visit https://ssojet.com to learn more or contact us for a demo.
