Talk to Your Kubernetes Cluster Using AI

Today, I explored kubectl-ai, a powerful CLI from Google Cloud that lets you interact with your Kubernetes cluster using natural language, powered by local LLMs like Mistral (via Ollama) or cloud models like Gemini.

Imagine saying things like:

“List all pods in the default namespace”

“Generate a deployment with 3 nginx replicas”

“Debug a pod stuck in CrashLoopBackOff”

“Generate a YAML for a CronJob that runs every 5 minutes”

And your terminal does the work — no YAML guessing, no docs tab-hopping.

How does kubectl-ai work?

1) You type a natural language prompt like “List all pods in kube-system”.

2) The kubectl-ai CLI sends your prompt to a connected LLM (for example, a local model served by Ollama, or a cloud model like Gemini).

3) The LLM interprets the request and returns either a plain explanation, a suggested kubectl command, or a tool-call instruction.

4) kubectl-ai processes the response:

If --dry-run is enabled, it just prints the command.

If --enable-tool-use-shim is used, it extracts and runs the command.

5) The actual kubectl command is executed against your active Kubernetes cluster, that is, the one selected by your current kubeconfig context.

6) The cluster returns the result (like pod lists or deployment status).

7) The output is shown in your terminal, just as if you had run the command manually.

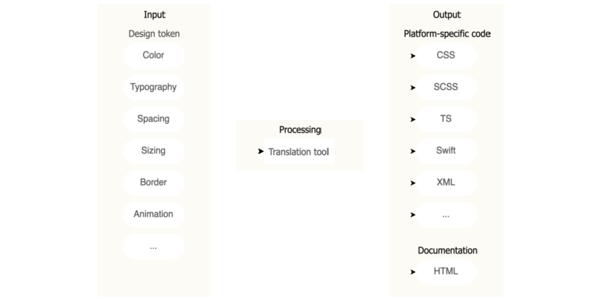
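To make steps 3 to 7 concrete, here is a minimal Python sketch of the decision flow. It is not kubectl-ai's actual implementation: the function names `extract_command` and `handle_reply`, the `dry_run` parameter, and the "first line starting with kubectl" heuristic are all assumptions made for illustration.

```python
import shlex
import subprocess
from typing import Optional


def extract_command(llm_reply: str) -> Optional[str]:
    """Return the first line that looks like a kubectl command, or None.

    Hypothetical heuristic: the real tool may parse replies differently.
    """
    for line in llm_reply.splitlines():
        candidate = line.strip().strip("`")
        if candidate.startswith("kubectl "):
            return candidate
    return None


def handle_reply(llm_reply: str, dry_run: bool = True, runner=subprocess.run) -> str:
    """Mimic step 4: print-only in dry-run mode, otherwise execute."""
    command = extract_command(llm_reply)
    if command is None:
        # The model returned a plain explanation, so show it as-is.
        return llm_reply
    if dry_run:
        # Dry run: report the command without touching the cluster.
        return command
    # Steps 5 to 7: run the command and hand back whatever it printed.
    result = runner(shlex.split(command), capture_output=True, text=True, check=False)
    return result.stdout


if __name__ == "__main__":
    reply = "You can use:\n`kubectl get pods -n kube-system`"
    print(handle_reply(reply))  # dry run by default, nothing is executed
```

Defaulting `dry_run` to `True` in this sketch means nothing reaches the cluster unless the caller opts in, which is a sensible habit when a model is the one proposing commands.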

kubectl-ai supports the Model Context Protocol (MCP), an emerging open protocol for connecting AI models to external tools.
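For a rough idea of what MCP traffic looks like: the protocol is built on JSON-RPC 2.0, and a client asks a server to run a tool with a `tools/call` request. The sketch below only builds such a message. The tool name `kubectl` and the `command` argument key are hypothetical placeholders, not names confirmed for kubectl-ai.

```python
import json


def build_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Serialize an MCP-style tools/call request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        # "name" selects the tool; "arguments" carries its inputs.
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


if __name__ == "__main__":
    # Hypothetical tool name and argument key, for illustration only.
    print(build_tool_call(1, "kubectl", {"command": "kubectl get pods"}))
```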
